aiXcoder-7B: A Lightweight and Efficient Large Language Model Offering High Accuracy in Code Completion Across Multiple Languages and Benchmarks

Large language models (LLMs) have transformed many domains, including code completion, where AI predicts and suggests code based on a developer’s previous input. This technology significantly boosts productivity, letting developers write code faster and with fewer errors. Despite this promise, many models struggle to balance speed and accuracy. Larger models tend to be more accurate, but their latency gets in the way of real-time coding. This challenge has driven efforts to build smaller, more efficient models that still perform well at code completion.

The central problem for code-completion LLMs is the trade-off between model size and performance. Larger models are powerful, but they need more compute and time, so developers wait longer for responses. This reduces their usefulness in real-time settings where fast feedback is essential. As a result, building faster, lightweight models that still predict code accurately has become an important research focus in recent years.

The usual way to improve code completion has been to scale up LLMs. Models such as CodeLlama-34B and StarCoder2-15B rely on massive datasets and billions of parameters, which greatly increases their size and complexity. This approach improves the models’ ability to generate precise code, but it comes at the cost of slower responses and heavier hardware requirements. Developers often find that the size and computational demands of these models get in the way of their workflow.

A research team from aiXcoder and Peking University introduced aiXcoder-7B, a model designed to be lightweight yet highly effective at code completion. With only 7 billion parameters, it achieves remarkable accuracy compared with larger models, making it well suited to real-time coding environments. aiXcoder-7B is built to balance size and performance so that it can be deployed in both academia and industry without the usual computational burden of larger LLMs. This efficiency makes it stand out in a field dominated by much larger alternatives.

The team used multi-objective training that combines Next-Token Prediction (NTP), Fill-In-the-Middle (FIM), and a more advanced Structured Fill-In-the-Middle (SFIM). SFIM in particular lets the model take the syntax and structure of code into account, so it predicts more accurately across a wide range of coding scenarios. By contrast, many other models treat code as plain text and ignore its structure. aiXcoder-7B’s ability to predict missing code segments within a function or across files gives it a distinct advantage in real-world programming tasks.
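The article names the objectives but not their data format. As a hedged illustration only, the sketch below turns a source file into a FIM training string using the common prefix-suffix-middle (PSM) ordering: once with a random character split (plain FIM), and once with the middle span restricted to one complete statement found by Python’s built-in `ast` module, which approximates the structure-aware idea behind SFIM. NTP needs no transformation: the model simply trains left to right on the unmodified file. The sentinel strings, the function names, and the use of `ast` are assumptions, not aiXcoder-7B’s actual tokens or parser.

```python
import ast
import random

# Hypothetical sentinel strings; real tokenizers define their own special tokens.
PRE, SUF, MID = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def to_psm(prefix: str, middle: str, suffix: str) -> str:
    """Arrange a split file in prefix-suffix-middle order.

    The model reads the prefix and suffix first, then learns to generate the middle.
    """
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}"


def random_fim(code: str, rng: random.Random) -> str:
    """Plain FIM: cut at two distinct random character offsets, ignoring syntax."""
    i, j = sorted(rng.sample(range(len(code) + 1), 2))
    return to_psm(code[:i], code[i:j], code[j:])


def structured_fim(code: str, rng: random.Random) -> str:
    """Structure-aware FIM: the middle is one complete statement from the AST.

    Assumes ASCII source with at least one statement, because ast column
    offsets count UTF-8 bytes rather than characters.
    """
    tree = ast.parse(code)
    stmts = [n for n in ast.walk(tree) if isinstance(n, ast.stmt)]
    node = rng.choice(stmts)
    lines = code.splitlines(keepends=True)
    # Convert (1-based line, column) positions into character offsets.
    start = sum(len(l) for l in lines[: node.lineno - 1]) + node.col_offset
    end = sum(len(l) for l in lines[: node.end_lineno - 1]) + node.end_col_offset
    return to_psm(code[:start], code[start:end], code[end:])
```

Because the structured variant only ever hides a whole statement (here an assignment, a `return`, or an entire function), the masked span is always a syntactically complete unit, whereas the random split can cut through the middle of a token.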

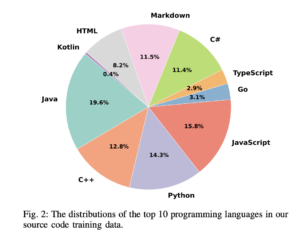

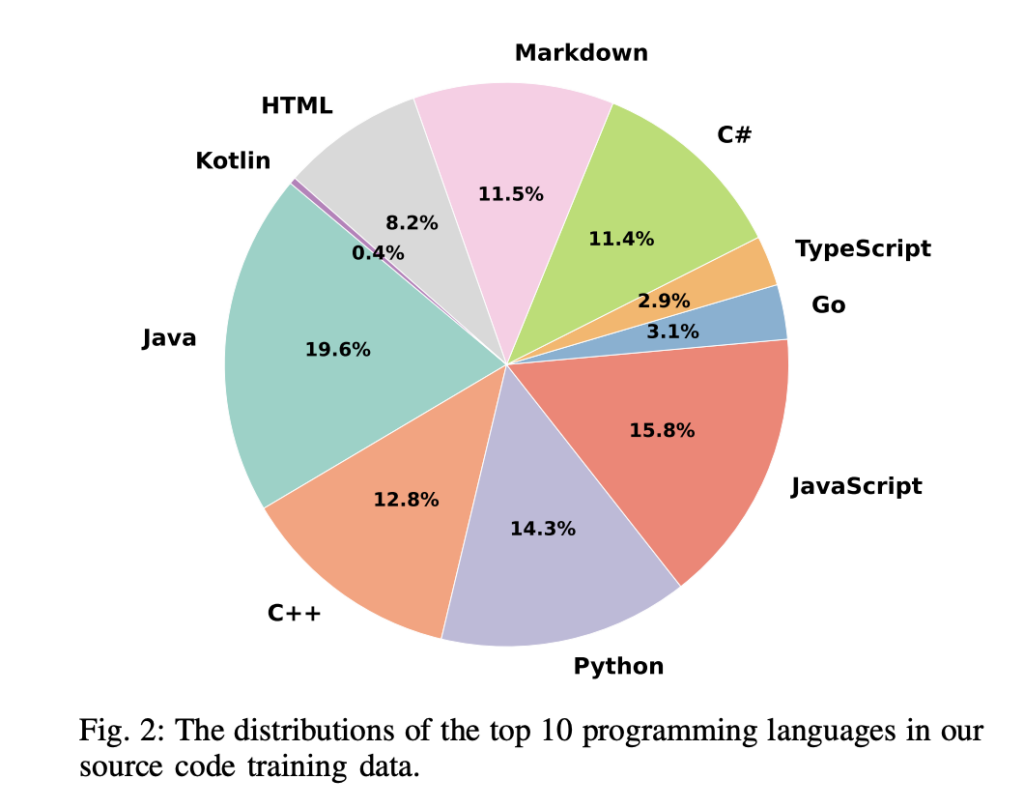

aiXcoder-7B was trained on an extensive dataset of 1.2 trillion unique tokens, produced by a rigorous data pipeline covering crawling, cleaning, deduplication, and quality checks. The dataset included 3.5TB of source code in many programming languages, so the model can handle Python, Java, C++, JavaScript, and others. To further improve performance, the team used several data sampling strategies based on file content similarity, inter-file dependencies, and file path similarity. These strategies help the model understand cross-file context, which is crucial when a completion depends on references spread across multiple files.
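The article lists the pipeline stages and sampling criteria without specifying algorithms. With that caveat, here is a minimal sketch of two of the ideas: exact deduplication by content hash, and ordering a repository’s files so that each file comes after the files it depends on, one way to place cross-file context before the code that uses it. The function names, the `# file:` separator, the dependency-map input, and the path-sorting fallback are all hypothetical; this is not aiXcoder-7B’s actual pipeline.

```python
import hashlib
from graphlib import TopologicalSorter


def dedup_exact(files: dict[str, str]) -> dict[str, str]:
    """Keep only the first file for each distinct content hash (exact dedup)."""
    seen: set[str] = set()
    kept: dict[str, str] = {}
    for path, text in files.items():
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept[path] = text
    return kept


def dependency_order(deps: dict[str, set[str]]) -> list[str]:
    """Order paths so every file follows the files it imports.

    `deps` maps a path to the set of paths it depends on (hypothetical input;
    extracting imports is out of scope). Raises graphlib.CycleError on cycles.
    """
    return list(TopologicalSorter(deps).static_order())


def build_sample(files: dict[str, str], deps: dict[str, set[str]]) -> str:
    """Concatenate deduplicated files, dependencies first, into one sample."""
    files = dedup_exact(files)
    order = [p for p in dependency_order(deps) if p in files]
    # Files outside the dependency graph go last, sorted by path so files in
    # the same directory stay adjacent (a crude path-similarity heuristic).
    rest = sorted(p for p in files if p not in order)
    return "\n".join(f"# file: {p}\n{files[p]}" for p in order + rest)
```

Exact hashing only catches byte-identical copies; catching near-duplicates would need a fuzzier technique, which this sketch does not attempt.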

aiXcoder-7B outperformed six LLMs of similar size across six benchmarks. Notably, on HumanEval it achieved a Pass@1 score of 54.9%, beating even larger models such as CodeLlama-34B (48.2%) and StarCoder2-15B (46.3%). On another benchmark, FIM-Eval, aiXcoder-7B showed strong generalization across different types of code, with superior performance in languages such as Java and Python. Its ability to generate code that closely matches human-written code in both style and length further sets it apart from competitors. In Java, for instance, the code aiXcoder-7B produced was only 0.97 times the length of the human-written code, whereas other models generated much longer code.
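To make the two metrics concrete, the sketch below computes pass@k with the standard unbiased estimator from the Codex paper (Chen et al., 2021), where a problem counts as solved if a generated sample passes its unit tests, plus a simple generated-to-reference length ratio. The article does not say whether the 0.97 figure is measured in characters, tokens, or lines; characters are an assumption here, and the function names are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: 1 - C(n - c, k) / C(n, k).

    n: samples generated, c: samples that pass the tests, k: sampling budget.
    """
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average per-problem pass@k over (n, c) pairs for a whole benchmark."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


def length_ratio(generated: list[str], reference: list[str]) -> float:
    """Total generated length over total human-written length (in characters)."""
    return sum(map(len, generated)) / sum(map(len, reference))
```

For k = 1 the estimator reduces to c / n, so with one sample per problem pass@1 is simply the fraction of problems whose sample passes. A length ratio below 1.0 means the model’s completions are slightly shorter than the human references.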

aiXcoder-7B shows that it is possible to build smaller, faster, and more efficient LLMs without sacrificing accuracy. Its performance across multiple benchmarks and programming languages makes it an excellent tool for developers who need reliable, real-time code completion. The combination of multi-objective training, a vast dataset, and innovative sampling strategies has let aiXcoder-7B set a new standard for lightweight LLMs in this domain.

In conclusion, aiXcoder-7B fills an important gap in code-completion LLMs by offering a highly efficient and accurate model. The research behind it highlights several key takeaways that can guide future work in this area:

- Seven billion parameters keep the model efficient without sacrificing accuracy.

- Uses multi-objective training, including SFIM, to improve prediction capabilities.

- Trained on 1.2 trillion tokens produced by a comprehensive data collection process.

- Outperforms larger models on benchmarks, achieving 54.9% Pass@1 on HumanEval.

- Generates code that closely mirrors human-written code in both style and length.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.