Scaling Laws and Model Comparison: New Frontiers in Large-Scale Machine Learning

Large language models (LLMs) have gained significant attention in machine learning, shifting the focus from optimizing generalization on small datasets to reducing approximation error on massive text corpora. This paradigm shift presents researchers with new challenges in model development and training methodologies. The primary objective has evolved from preventing overfitting through regularization techniques to effectively scaling up models to consume vast amounts of data. Researchers now face the challenge of balancing computational constraints with the need for improved performance on downstream tasks. This shift necessitates a reevaluation of traditional approaches and the development of robust strategies to harness the power of large-scale language pretraining while addressing the limitations imposed by available computing resources.

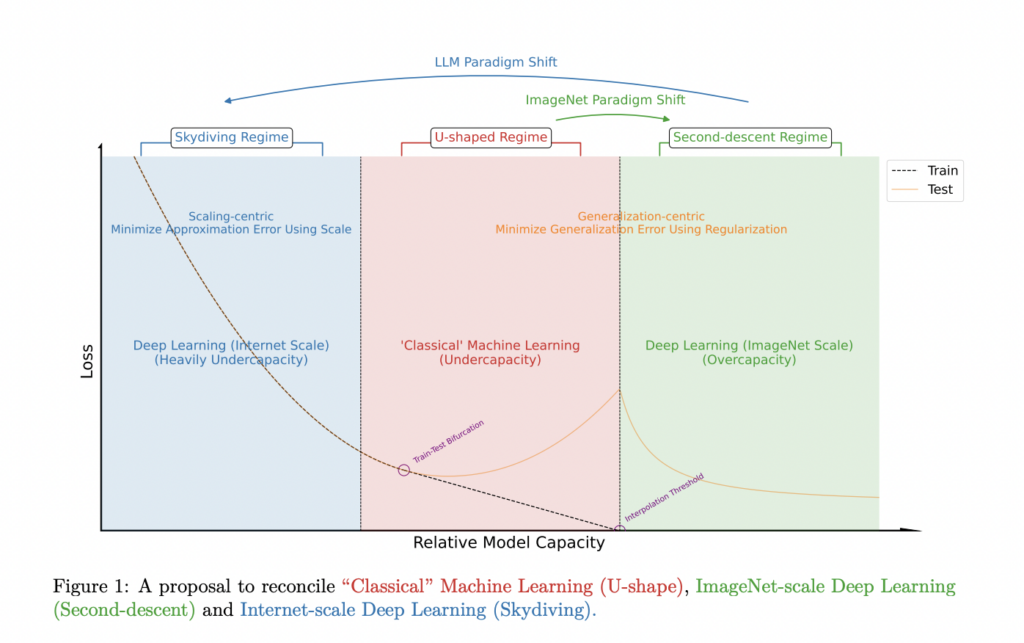

The shift from a generalization-centric paradigm to a scaling-centric paradigm in machine learning has necessitated reevaluating traditional approaches. Google DeepMind researchers have identified key differences between these paradigms, focusing on minimizing approximation error through scaling rather than reducing generalization error through regularization. This shift challenges conventional wisdom, as practices that were effective in the generalization-centric paradigm may not yield optimal results in the scaling-centric approach. The phenomenon of “scaling law crossover” further complicates matters, as techniques that enhance performance at smaller scales may not translate effectively to larger ones. To mitigate these challenges, researchers propose developing new principles and methodologies to guide scaling efforts and effectively compare models at unprecedented scales where conducting multiple experiments is often infeasible.

Machine learning aims to develop functions capable of making accurate predictions on unseen data by understanding the underlying structure of the data. This process involves minimizing the test loss on unseen data while learning from a training set. The test error can be decomposed into the generalization gap and the approximation error (training error).
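The decomposition is plain arithmetic, and a minimal Python sketch may make it concrete. The function name and the loss values are hypothetical, chosen only for illustration; they do not come from the paper.

```python
def decompose_test_loss(train_loss: float, test_loss: float) -> dict:
    """Split a test loss into approximation error and generalization gap."""
    return {
        # Approximation error is identified with the training error.
        "approximation_error": train_loss,
        # Generalization gap is how much worse the model does on unseen data.
        "generalization_gap": test_loss - train_loss,
    }


# Hypothetical loss values, for illustration only.
parts = decompose_test_loss(train_loss=2.5, test_loss=3.0)
```

Adding the two parts back together recovers the test loss, which is why reducing either term lowers the test error.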

Two distinct paradigms have emerged in machine learning, differentiated by the relative and absolute scales of data and models:

1. The generalization-centric paradigm, which operates with relatively small data scales, is further divided into two sub-paradigms:

a) The classical bias-variance trade-off regime, where model capacity is intentionally constrained.

b) The modern over-parameterized regime, where model scale significantly surpasses data scale.

2. The scaling-centric paradigm, characterized by large data and model scales, with data scale exceeding model scale.

These paradigms present different challenges and require distinct approaches to optimize model performance and achieve desired outcomes.

The proposed method employs a decoder-only transformer architecture trained on the C4 dataset, using the NanoDO codebase. Key architectural features include Rotary Positional Embedding, QK-Norm for attention computation, and untied head and embedding weights. The model uses GeLU activation with F = 4D, where D is the model dimension and F is the hidden dimension of the MLP. Attention heads are configured with a head dimension of 64, and the sequence length is set to 512.
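As a rough illustration, the architectural settings listed here can be collected into a small configuration object. This is a sketch only: `DecoderConfig` and its field names are hypothetical and are not the NanoDO API, and the rule that the number of heads equals D / 64 is an assumption that follows from the fixed head dimension.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    """Hypothetical container for the settings described in the article."""

    d_model: int                    # D, the model dimension
    head_dim: int = 64              # fixed attention head dimension
    seq_len: int = 512              # training sequence length
    activation: str = "gelu"        # MLP activation
    use_rope: bool = True           # Rotary Positional Embedding
    use_qk_norm: bool = True        # QK-Norm in attention
    tie_embeddings: bool = False    # head and embedding weights are untied

    def __post_init__(self) -> None:
        # Assumption: D must split evenly into heads of size head_dim.
        if self.d_model % self.head_dim != 0:
            raise ValueError("d_model must be a multiple of head_dim")

    @property
    def mlp_dim(self) -> int:
        """F = 4D, the hidden dimension of the MLP."""
        return 4 * self.d_model

    @property
    def n_heads(self) -> int:
        """Number of attention heads implied by D and the head dimension."""
        return self.d_model // self.head_dim


# Hypothetical model width, for illustration only.
cfg = DecoderConfig(d_model=512)
```

Deriving `mlp_dim` and `n_heads` from `d_model` keeps the width as the single free knob, which matches how the article describes the family of models.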

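The model’s vocabulary size is V = 32,101, and the total parameter count is approximately 12D²L, where L is the number of transformer layers. Most models are trained to Chinchilla optimality, using 20 × (12D²L + DV) tokens. Compute requirements are estimated using the formula F = 6ND, where F represents the number of floating-point operations. In the standard form of this estimate, N is the parameter count and D is the number of training tokens, so in this formula F and D do not denote the MLP hidden dimension and the model dimension defined above.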
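The three formulas can be worked through in a few lines of Python. This is a sketch under stated assumptions: the function names are hypothetical, the example width and depth are made up, and the choice of which parameter count to use for N in 6ND (here, the count including the DV embedding term) is an assumption, since the article does not specify it.

```python
VOCAB_SIZE = 32_101  # V, as stated in the article


def non_embedding_params(d_model: int, n_layers: int) -> int:
    """Approximate parameter count 12 * D^2 * L."""
    return 12 * d_model**2 * n_layers


def chinchilla_tokens(d_model: int, n_layers: int,
                      vocab_size: int = VOCAB_SIZE) -> int:
    """Token budget 20 * (12 * D^2 * L + D * V)."""
    return 20 * (non_embedding_params(d_model, n_layers) + d_model * vocab_size)


def training_flops(n_params: int, n_tokens: int) -> int:
    """Compute estimate 6 * N * (number of training tokens)."""
    return 6 * n_params * n_tokens


# Hypothetical model shape, for illustration only.
d_model, n_layers = 512, 8
n_body = non_embedding_params(d_model, n_layers)
n_total = n_body + d_model * VOCAB_SIZE   # assumption: N includes the DV term
n_tokens = chinchilla_tokens(d_model, n_layers)
flops = training_flops(n_total, n_tokens)
```

Because the token budget is 20 times the parameter count, the compute estimate grows quadratically with model size under this recipe.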

For optimization, the method employs AdamW with β1 = 0.9, β2 = 0.95, ϵ = 1e-20, and a coupled weight decay λ = 0.1. This combination of architectural choices and optimization techniques aims to enhance the model’s performance in the scaling-centric paradigm.
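To show where each hyperparameter enters, here is a minimal pure-Python sketch of a single AdamW update. It is not the NanoDO implementation. Two things are assumptions: "coupled" is read as the weight decay term being multiplied by the learning rate, and the learning rate itself is a made-up value, since the article does not give one.

```python
import math


def adamw_step(params, grads, m, v, step, lr,
               beta1=0.9, beta2=0.95, eps=1e-20, weight_decay=0.1):
    """One AdamW update over lists of floats; step counts from 1."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Exponential moving averages of the gradient and its square.
        m_i = beta1 * m_i + (1.0 - beta1) * g
        v_i = beta2 * v_i + (1.0 - beta2) * g * g
        # Bias correction for the zero-initialized averages.
        m_hat = m_i / (1.0 - beta1**step)
        v_hat = v_i / (1.0 - beta2**step)
        # Assumption: decay is scaled by lr together with the Adam update.
        update = m_hat / (math.sqrt(v_hat) + eps) + weight_decay * p
        new_params.append(p - lr * update)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


# Hypothetical parameter, gradient, and learning rate, for illustration only.
params, m, v = adamw_step([1.0], [0.5], [0.0], [0.0], step=1, lr=0.01)
```

With ϵ as small as 1e-20, the first step is almost exactly a sign update, so the parameter moves by about `lr` times (1 + λ · p) regardless of the gradient's magnitude.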

In the scaling-centric paradigm, traditional regularization techniques are being reevaluated for their effectiveness. Three regularization techniques commonly used in the generalization-centric paradigm are explicit L2 regularization and the implicit regularization effects of large learning rates and small batch sizes. These techniques have been instrumental in mitigating overfitting and reducing the gap between training and test losses in smaller-scale models.

However, in the context of large language models and the scaling-centric paradigm, the necessity of these regularization techniques is being questioned. As models operate in a regime where overfitting is less of a concern because of the vast amount of training data, the traditional benefits of regularization may no longer apply. This shift prompts researchers to rethink the role of regularization in model training and to explore alternative approaches that may be more suitable for the scaling-centric paradigm.

The scaling-centric paradigm presents unique challenges in model comparison as traditional validation set approaches become impractical at massive scales. The phenomenon of scaling law crossover further complicates matters, as performance rankings observed at smaller scales may not hold true for larger models. This raises the critical question of how to effectively compare models when training is feasible only once at scale.
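A toy example can show how a crossover arises. The sketch below assumes each method's loss follows a simple power law in compute, loss = a · C^(−α), which is a common modeling choice but is not stated in the article. The function names and all coefficients are hypothetical, and no number here comes from the paper.

```python
def power_law_loss(compute: float, coeff: float, exponent: float) -> float:
    """Assumed scaling law: loss = coeff * compute ** (-exponent)."""
    return coeff * compute ** (-exponent)


def crossover_compute(coeff_a: float, exp_a: float,
                      coeff_b: float, exp_b: float) -> float:
    """Compute budget at which two assumed power laws give equal loss."""
    if exp_a == exp_b:
        raise ValueError("curves with equal exponents never cross")
    # Solve coeff_a * C**(-exp_a) == coeff_b * C**(-exp_b) for C.
    return (coeff_a / coeff_b) ** (1.0 / (exp_a - exp_b))


# Hypothetical methods: A starts lower, B improves faster with scale.
method_a = (10.0, 0.1)
method_b = (20.0, 0.2)
c_star = crossover_compute(*method_a, *method_b)

small_scale_winner = "A" if power_law_loss(1.0, *method_a) < power_law_loss(1.0, *method_b) else "B"
large_scale_winner = "A" if power_law_loss(1e6, *method_a) < power_law_loss(1e6, *method_b) else "B"
```

In this toy setup the ranking flips once compute passes `c_star`, which is why a comparison made only at small scale can pick the wrong method for the large run.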

In contrast, the generalization-centric paradigm relies heavily on regularization as a guiding principle. This approach has led to insights into hyperparameter choices, weight decay effects, and the benefits of over-parameterization. It also explains the effectiveness of techniques like weight sharing in CNNs, locality, and hierarchy in neural network architectures.

However, the scaling-centric paradigm may require new guiding principles. While regularization has been crucial for understanding and improving generalization in smaller models, its role and effectiveness in large-scale language models are being reevaluated. Researchers are now challenged to develop robust methodologies and principles that can guide the development and comparison of models in this new paradigm, where traditional approaches may no longer apply.

