Revolutionize logo design creation with Amazon Bedrock: Embracing generative art, dynamic logos, and AI collaboration

In the field of technology and creative design, logo design and creation has adapted and evolved at a rapid pace. From the hieroglyphs of ancient Egypt to the sleek minimalism of today’s tech giants, the visual identities that define our favorite brands have undergone a remarkable transformation.

Today, the world of creative design is once again being transformed by the emergence of generative AI. Designers and brands now have opportunities to push the boundaries of creativity, crafting logos that are not only visually stunning but also responsive to their environments and tailored to the preferences of their target audiences.

Amazon Bedrock provides access to powerful generative AI models like Stable Diffusion through a user-friendly API. These models can be integrated into the logo design workflow, allowing designers to rapidly ideate, experiment, generate, and edit a wide range of unique visual images. Combining Amazon Bedrock with AWS serverless computing, networking, and content delivery services such as AWS Lambda, Amazon API Gateway, and AWS Amplify makes it straightforward to build an interactive tool that generates dynamic, responsive, and adaptive logos.

In this post, we walk through how AWS can help accelerate a brand’s creative efforts by using a powerful Stable Diffusion image-to-image model, available on Amazon Bedrock, to interactively create and edit art and logo images.

Image-to-image model

Stability AI’s image-to-image model, SDXL, is a deep learning model that generates images based on text descriptions, images, or other inputs. It first converts the text into numerical values that summarize the prompt, then uses those values to generate an image representation. Finally, it upscales the image representation into a high-resolution image. Stable Diffusion can also generate new images based on an initial image and a text prompt. For example, it can fill in a line drawing with colors, lighting, and a background that make sense for the subject. Stable Diffusion can also be used for inpainting (regenerating a masked region inside an existing image, for example to add or remove features) and outpainting (extending an existing image beyond its original borders).

One of its primary applications is in advertising and marketing, where it can be used to create personalized ad campaigns and a virtually unlimited number of marketing assets. Businesses can generate visually appealing, tailored images based on specific prompts, enabling them to stand out in a crowded market and communicate their brand message effectively. In the media and entertainment sector, filmmakers, artists, and content creators can use it as a tool for developing creative assets and ideating with images.

Resolution overview

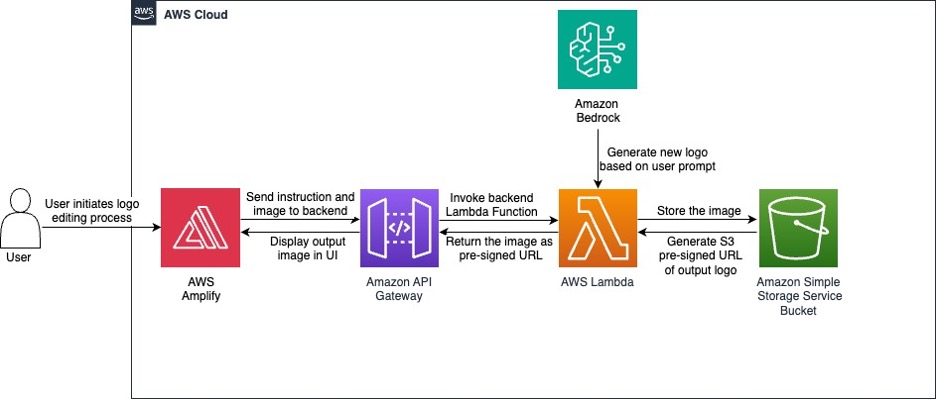

The next diagram illustrates the answer structure.

This structure workflow entails the next steps:

- Within the frontend UI, a person chooses from certainly one of two choices to get began:

- Generate an preliminary picture.

- Present an preliminary picture hyperlink.

- The person supplies a textual content immediate to edit the given picture.

- The person chooses Name API to invoke API Gateway to start processing on the backend.

- The API invokes a Lambda operate, which makes use of the Amazon Bedrock API to invoke the Stability AI SDXL 1.0 mannequin.

- The invoked mannequin generates a picture, and the output picture is saved in an Amazon Simple Storage Service (Amazon S3) bucket.

- The backend companies return the output picture to the frontend UI.

- The person can use this generated picture as a reference picture and edit it, generate a brand new picture, or present a special preliminary picture. They will proceed this course of till the mannequin produces a passable output.

Prerequisites

To set up this solution, complete the following prerequisites:

- Choose an AWS Region where you want to deploy the solution. We recommend using the us-east-1 Region.

- Obtain access to the Stability SDXL 1.0 model in Amazon Bedrock if you don’t have it already. For instructions, see Access Amazon Bedrock foundation models.

- If you want to use a separate S3 bucket for this solution, create a new S3 bucket.

- If you want to test the application on localhost instead of Amplify, make sure python3 is installed on your local machine.
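The post doesn’t show the localhost setup. One common approach, and our assumption rather than necessarily the authors’ method, is Python’s built-in static file server: run python3 -m http.server 8000 --directory apiGateway-js-sdk once the frontend folder has been assembled, or start it from code as in this minimal sketch. The function name start_local_server is hypothetical.

```python
import functools
import http.server
import threading


def start_local_server(directory: str, port: int = 8000) -> http.server.ThreadingHTTPServer:
    """Serve the files in `directory` on localhost from a background thread.

    Pass port=0 to let the OS choose a free port; the chosen port is in
    server.server_address[1]. Stop with server.shutdown() and server.server_close().
    """
    # SimpleHTTPRequestHandler accepts a `directory` keyword (Python 3.7+).
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    # Daemon thread so the server never keeps the interpreter alive on its own.
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, start_local_server("apiGateway-js-sdk") serves the page at http://localhost:8000/index.html until you call shutdown().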

Deploy the solution

To deploy the backend resources for the solution, we create a stack using an AWS CloudFormation template. You can upload the template directly, or upload it to an S3 bucket and link to it during the stack creation process. During the creation process, provide appropriate values for the apiGatewayName, apiGatewayStageName, s3BucketName, and lambdaFunctionName parameters. If you created a new S3 bucket earlier, enter that name in s3BucketName; this bucket is where output images are stored. When the stack creation is complete, all the backend resources are ready to be connected to the frontend UI.
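If you prefer scripting the deployment to using the console, the following is a minimal sketch with boto3, assuming the template has been uploaded to S3 and that its parameter keys are exactly the four names listed above. The stack name, template URL, and helper names are hypothetical. Because the template creates an IAM role (LambdaExecutionRole), the sketch acknowledges IAM capabilities; which of the two your template actually needs is an assumption to check.

```python
from typing import Dict, List


def build_stack_parameters(api_gateway_name: str, stage_name: str,
                           bucket_name: str, function_name: str) -> List[Dict[str, str]]:
    """Map the template's four parameters to CloudFormation's Parameters format."""
    values = {
        "apiGatewayName": api_gateway_name,
        "apiGatewayStageName": stage_name,
        "s3BucketName": bucket_name,
        "lambdaFunctionName": function_name,
    }
    return [{"ParameterKey": key, "ParameterValue": value} for key, value in values.items()]


def deploy_backend(stack_name: str, template_url: str,
                   parameters: List[Dict[str, str]], region: str = "us-east-1") -> str:
    """Create the stack from a template stored in S3 and block until it finishes."""
    import boto3  # imported here so the parameter helper works without the AWS SDK

    cfn = boto3.client("cloudformation", region_name=region)
    response = cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=parameters,
        # The template creates an IAM role, so IAM capabilities must be acknowledged.
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    )
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]
```

Keeping the parameter mapping separate from the API call makes it easy to check the values before anything is created in your account.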

The frontend resources play an integral part in creating an interactive environment for your end-users. Complete the following steps to integrate the frontend and backend:

- When the CloudFormation stack deployment is complete, open the created API from the API Gateway console.

- Choose Stages in the navigation pane, and on the Stage actions menu, choose Generate SDK.

- For Platform, choose JavaScript.

- Download and unzip the JavaScript SDK .zip file, which contains a folder called apiGateway-js-sdk.

- Download the frontend UI index.html file and place it in the unzipped folder.

  This file is configured to integrate with the JavaScript SDK simply by being placed in the folder.

- After index.html is in the folder, select the contents of the folder and compress them into a .zip file (don’t compress the apiGateway-js-sdk folder itself).

- On the Amplify console, choose Create new app.

- Select Deploy without Git, then choose Next.

- Upload the compressed .zip file, and change the application name and branch name if you prefer.

- Choose Save and deploy.

The deployment takes a few seconds. When it is complete, Amplify provides a domain URL that you can use to access and test the application.
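The compression step is easy to get wrong, because zipping the folder itself nests index.html one level down. A minimal standard-library sketch that stores the folder’s contents at the archive root, as the steps require; the function name and the output path are hypothetical.

```python
import os
import zipfile
from typing import List


def zip_folder_contents(folder: str, zip_path: str) -> List[str]:
    """Zip the files inside `folder` at the archive root; return the member names."""
    folder = os.path.abspath(folder)
    zip_abs = os.path.abspath(zip_path)
    # An archive written inside the folder being zipped would try to include itself.
    if zip_abs.startswith(folder + os.sep):
        raise ValueError("write the .zip outside the folder being compressed")
    with zipfile.ZipFile(zip_abs, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for root, _dirs, files in os.walk(folder):
            for name in sorted(files):
                full_path = os.path.join(root, name)
                # apiGateway-js-sdk/lib/x.js is stored as lib/x.js; index.html stays index.html.
                archive.write(full_path, os.path.relpath(full_path, folder))
        return archive.namelist()
```

For example, zip_folder_contents("apiGateway-js-sdk", "frontend.zip") produces an archive whose top level contains index.html, ready to upload in the Amplify console.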

CloudFormation template overview

Before we move on to testing the solution, let’s explore the CloudFormation template. This template sets up an API Gateway API with appropriate rules and paths, a Lambda function, and the necessary permissions in AWS Identity and Access Management (IAM). Let’s dive deep into the content of the CloudFormation template to understand the resources it creates:

- PromptProcessingAPI – This is the main API Gateway REST API. This API is used to invoke the Lambda function. The other API Gateway resources, methods, and schemas created in the CloudFormation template are attached to this API.

- ActionResource, ActionInputResource, PromptResource, PromptInputResource, and ProxyResource – These are API Gateway resources that define the URL path structure for the API. The path structure is /action/{actionInput}/prompt/{promptInput}/{proxy+}. The {promptInput} value is a placeholder variable for the prompt that users enter in the frontend. Similarly, {actionInput} is the option the user selected for how they want to generate the image. The backend Lambda function uses both values to process and generate images.

- ActionInputMethod, PromptInputMethod, and ProxyMethod – These are API Gateway methods that define the integration with the Lambda function for the POST HTTP method.

- ActionMethodCORS, ActionInputMethodCORS, PromptMethodCORS, PromptInputMethodCORS, and ProxyMethodCORS – These are API Gateway methods that handle cross-origin resource sharing (CORS) support. These resources are crucial for integrating the frontend UI with the backend resources. For more information on CORS, see What is CORS?

- ResponseSchema and RequestSchema – These are API Gateway models that define the expected JSON schema for the response and request payloads, respectively.

- Default4xxResponse and Default5xxResponse – These are gateway responses that define the default response behavior for 4xx and 5xx HTTP status codes, respectively.

- ApiDeployment – This resource deploys the API Gateway API after all the preceding configurations have been set. After the deployment, the API is ready to use.

- LambdaFunction – This creates the Lambda function and specifies the runtime, the service role for Lambda, and the limit for reserved concurrent executions.

- LambdaPermission1, LambdaPermission2, and LambdaPermission3 – These are permissions that allow the API Gateway API to invoke the Lambda function.

- LambdaExecutionRole and lambdaLogGroup – The first resource is the IAM role attached to the Lambda function, which allows it to access other AWS services such as Amazon S3 and Amazon Bedrock. The second resource configures the Lambda function’s log group in Amazon CloudWatch.
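To exercise the API outside the browser (the generated JavaScript SDK normally builds these URLs for you), you need a request path that matches the /action/{actionInput}/prompt/{promptInput} structure. A minimal sketch, with a hypothetical helper name; the optional stage prefix reflects that API Gateway invoke URLs start with the stage name. Each value is percent-encoded so that spaces and reserved characters in a free-text prompt stay inside a single path segment; how the Lambda function decodes the prompt is an assumption to verify against your deployment.

```python
from urllib.parse import quote


def build_request_path(action_input: str, prompt_input: str, stage: str = "") -> str:
    """Build /[stage/]action/{actionInput}/prompt/{promptInput} with encoded segments."""
    # safe="" also encodes "/", so a prompt cannot spill into extra path segments.
    prefix = f"/{quote(stage, safe='')}" if stage else ""
    return (f"{prefix}/action/{quote(action_input, safe='')}"
            f"/prompt/{quote(prompt_input, safe='')}")
```

For example, build_request_path("GenerateInit", "minimal fox logo") returns /action/GenerateInit/prompt/minimal%20fox%20logo, which you would append to the API’s invoke URL and send as a POST request.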

Lambda function explanation

Let’s dive into the details of the Python code that generates and manipulates images using the Stability AI model. There are three ways to use the Lambda function: provide a text prompt to generate an initial image, upload an image and include a text prompt to adjust it, or reupload a generated image and include a prompt to adjust it.
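Both editing paths start from an image that the function retrieves by URL (a pre-signed S3 URL, according to the handler description) and pass it to the model as a base64 string. A minimal standard-library sketch of that download step; the function name fetch_image_b64 is hypothetical and not taken from the post’s code.

```python
import base64
import urllib.request


def fetch_image_b64(url: str, timeout: float = 30.0) -> str:
    """Download the image at `url` (for example, a pre-signed S3 URL) and base64-encode it.

    The SDXL request body carries the starting image as a base64 string (init_image).
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        image_bytes = response.read()
    return base64.b64encode(image_bytes).decode("ascii")
```

Using urllib keeps the Lambda deployment package free of extra dependencies; a pre-signed URL already carries its own authorization, so no AWS credentials are needed for this step.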

The code contains the following constants:

- negative_prompts – A list of negative prompts used to guide the image generation.

- style_preset – The style preset to use for image generation (for example, photographic, digital-art, or cinematic). We used digital-art for this post.

- clip_guidance_preset – The Contrastive Language-Image Pretraining (CLIP) guidance preset to use (for example, FAST_BLUE, FAST_GREEN, NONE, SIMPLE, SLOW, SLOWER, or SLOWEST).

- sampler – The sampling algorithm to use for image generation (for example, DDIM, DDPM, K_DPMPP_SDE, K_DPMPP_2M, K_DPMPP_2S_ANCESTRAL, K_DPM_2, K_DPM_2_ANCESTRAL, K_EULER, K_EULER_ANCESTRAL, K_HEUN, or K_LMS).

- width – The width of the generated image.
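The constants above could look like the following sketch. Only style_preset ("digital-art") comes from the post; the negative prompts, CLIP preset, sampler, and width are placeholder assumptions. The allowed-value sets are copied from the options listed above, and the small validator (a hypothetical addition) catches a typo before a paid Bedrock call is made.

```python
# Hypothetical constants in the spirit of the post's Lambda function.
negative_prompts = ["blurry", "low resolution", "watermark"]  # placeholder examples
style_preset = "digital-art"            # value used in the post
clip_guidance_preset = "FAST_GREEN"     # assumption: any value from the list above
sampler = "K_DPMPP_2S_ANCESTRAL"        # assumption: any value from the list above
width = 1024                            # assumption: square 1024 x 1024 output

CLIP_GUIDANCE_PRESETS = {"FAST_BLUE", "FAST_GREEN", "NONE", "SIMPLE", "SLOW", "SLOWER", "SLOWEST"}
SAMPLERS = {
    "DDIM", "DDPM", "K_DPMPP_SDE", "K_DPMPP_2M", "K_DPMPP_2S_ANCESTRAL", "K_DPM_2",
    "K_DPM_2_ANCESTRAL", "K_EULER", "K_EULER_ANCESTRAL", "K_HEUN", "K_LMS",
}


def validate_settings(clip_preset: str, sampler_name: str) -> None:
    """Raise ValueError for a preset or sampler name outside the listed options."""
    if clip_preset not in CLIP_GUIDANCE_PRESETS:
        raise ValueError(f"unknown clip_guidance_preset: {clip_preset}")
    if sampler_name not in SAMPLERS:
        raise ValueError(f"unknown sampler: {sampler_name}")


validate_settings(clip_guidance_preset, sampler)  # fails at import time on a typo
```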

handler(event, context) is the main entry point for the Lambda function. It processes the input event, which contains the promptInput and actionInput parameters. Based on actionInput, it performs one of the following actions:

- For GenerateInit, it generates a new image using the generate_image_with_bedrock function, uploads it to Amazon S3, and returns the file name and a pre-signed URL.

- When you provide an existing image, it performs one of the following actions:

  - s3URL – It retrieves an image from a pre-signed S3 URL, generates a new image using the generate_image_with_bedrock function, uploads the new image to Amazon S3, and returns the file name and a pre-signed URL.

  - UseGenerated – It retrieves a previously generated image from its pre-signed S3 URL, generates a new image using the generate_image_with_bedrock function, uploads the new image to Amazon S3, and returns the file name and a pre-signed URL.
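The routing described above can be sketched as follows. This is a hypothetical reconstruction, not the authors’ code: route_action and upload_and_presign are our names, and the model call, download, and upload steps are passed in as functions so the routing can be tested offline. It assumes a Lambda proxy integration (path values in event["pathParameters"]) and that the source image URL arrives as the request body. put_object and generate_presigned_url are standard boto3 S3 client methods.

```python
import base64
import uuid
from typing import Callable, Dict
from urllib.parse import unquote


def upload_and_presign(s3_client, bucket: str, image_b64: str,
                       expires_in: int = 3600) -> Dict[str, str]:
    """Store a base64-encoded PNG in S3; return its key and a time-limited GET URL."""
    key = f"generated/{uuid.uuid4().hex}.png"  # hypothetical key layout
    s3_client.put_object(Bucket=bucket, Key=key, Body=base64.b64decode(image_b64),
                         ContentType="image/png")
    url = s3_client.generate_presigned_url(
        "get_object", Params={"Bucket": bucket, "Key": key}, ExpiresIn=expires_in)
    return {"fileName": key, "presignedUrl": url}


def route_action(event: dict,
                 generate: Callable[..., str],
                 fetch_b64: Callable[[str], str],
                 store: Callable[[str], Dict[str, str]]) -> Dict[str, str]:
    """Dispatch on actionInput as described for handler(event, context).

    generate(prompt, init_image_b64=None) returns a base64 image (Bedrock step),
    fetch_b64(url) returns a base64 image (download step), and
    store(image_b64) returns {"fileName", "presignedUrl"} (S3 step).
    """
    params = event.get("pathParameters") or {}  # assumes Lambda proxy integration
    action = params.get("actionInput")
    # Decode defensively; verify how your integration passes path values.
    prompt = unquote(params.get("promptInput", ""))
    if action == "GenerateInit":
        image_b64 = generate(prompt)  # text-to-image
    elif action in ("s3URL", "UseGenerated"):
        source_url = (event.get("body") or "").strip()  # assumption: URL in request body
        image_b64 = generate(prompt, fetch_b64(source_url))  # image-to-image
    else:
        raise ValueError(f"unsupported actionInput: {action!r}")
    return store(image_b64)
```

Inside Lambda, handler(event, context) could call route_action with generate_image_with_bedrock, a download helper, and a lambda that calls upload_and_presign with boto3.client("s3") and your bucket name, then wrap the result in a proxy response with a statusCode, CORS headers, and a JSON body.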

The generate_image_with_bedrock(prompt, init_image_b64=None) function generates an image using the Amazon Bedrock runtime service and performs the following actions:

- If an initial image is provided (base64-encoded), it uses that image as the starting point for the image generation.

- If no initial image is provided, it generates a new image based on the provided prompt.

- The function sets various parameters for the image generation, such as the text prompts, configuration, and sampling method.

- It then invokes the Amazon Bedrock model, retrieves the generated image as a base64-encoded string, and returns it.
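The invoke-and-parse step can be sketched with the boto3 bedrock-runtime client’s invoke_model call. This is a minimal sketch rather than the post’s code: it assumes the SDXL 1.0 model ID stability.stable-diffusion-xl-v1 and Stability’s JSON response shape, in which each entry in artifacts carries a base64 image and a finishReason. The function name invoke_sdxl is hypothetical.

```python
import json
from typing import Any, Dict

MODEL_ID = "stability.stable-diffusion-xl-v1"  # Amazon Bedrock model ID for SDXL 1.0


def invoke_sdxl(bedrock_runtime: Any, body: Dict[str, Any]) -> str:
    """Send a request body to SDXL on Amazon Bedrock and return the first image as base64.

    `bedrock_runtime` is a client such as boto3.client("bedrock-runtime").
    """
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        contentType="application/json",
        accept="application/json",
        body=json.dumps(body),
    )
    # The response body is a stream; read it and parse the JSON payload.
    payload = json.loads(response["body"].read())
    artifact = payload["artifacts"][0]
    # Filtered or failed generations are reported through finishReason.
    if artifact.get("finishReason") not in (None, "SUCCESS"):
        raise RuntimeError(f"generation did not succeed: {artifact.get('finishReason')}")
    return artifact["base64"]
```

Raising on a non-SUCCESS finishReason keeps a filtered result from being uploaded to Amazon S3 as if it were a valid logo.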

To obtain more customized outputs, you can adjust the hyperparameter values in the function:

- text_prompts – This is a list of dictionaries, where each dictionary contains a text prompt and an associated weight. A positive text prompt, one that you want to associate with the output image, has its weight set to 1.0. All negative text prompts have their weight set to -1.0.

- cfg_scale – This parameter controls how closely the image follows the text prompt; lower values leave more room for randomness. The default is 7, and 10 seems to work well in our observations. A higher value means the image is more strongly influenced by the text, but a value that’s too high or too low results in visually poor-quality outputs.

- init_image – This parameter is a base64-encoded string representing an initial image. The model uses this image as a starting point and modifies it based on the text prompts. This parameter is not used when generating the first image.

- start_schedule – This parameter controls the strength of the noise added to the initial image at the start of the generation process. A value of 0.6 means that the initial noise will be relatively low.

- steps – This parameter specifies the number of steps (iterations) the model takes during the image generation process. In this case, it’s set to 50 steps.

- style_preset – This parameter specifies a predefined style or aesthetic to apply to the generated image. Because we’re generating logo images, we use digital-art.

- clip_guidance_preset – This parameter specifies a predefined guidance setting for the CLIP model, which is used to guide the image generation process based on the text prompts.

- sampler – This parameter specifies the sampling algorithm used during the image generation process to repeatedly denoise the image and produce a high-quality output.
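Putting these hyperparameters together, a request body could be assembled as in the following sketch. The defaults for cfg_scale (10), steps (50), style_preset (digital-art), and the 0.6 schedule value come from the post; clip_guidance_preset, sampler, and the 1024 x 1024 size are placeholder assumptions. The sketch also assumes that the post’s start_schedule corresponds to Stability’s step_schedule_start field with init_image_mode set to STEP_SCHEDULE, so check the SDXL 1.0 parameter reference before relying on that mapping. The function name build_request_body is hypothetical.

```python
from typing import Any, Dict, List, Optional


def build_request_body(prompt: str, negative_prompts: List[str],
                       init_image_b64: Optional[str] = None, *,
                       cfg_scale: float = 10, steps: int = 50,
                       start_schedule: float = 0.6,
                       style_preset: str = "digital-art",
                       clip_guidance_preset: str = "FAST_GREEN",
                       sampler: str = "K_DPMPP_2S_ANCESTRAL",
                       width: int = 1024, height: int = 1024) -> Dict[str, Any]:
    """Assemble an SDXL 1.0 request body from the hyperparameters described above."""
    # The positive prompt pulls the image toward the text (weight 1.0);
    # negative prompts push it away (weight -1.0).
    text_prompts = [{"text": prompt, "weight": 1.0}]
    text_prompts += [{"text": n, "weight": -1.0} for n in negative_prompts]
    body: Dict[str, Any] = {
        "text_prompts": text_prompts,
        "cfg_scale": cfg_scale,
        "steps": steps,
        "style_preset": style_preset,
        "clip_guidance_preset": clip_guidance_preset,
        "sampler": sampler,
    }
    if init_image_b64 is None:
        # Text-to-image: set the output size (image-to-image takes it from init_image).
        body["width"], body["height"] = width, height
    else:
        # Image-to-image. Assumption: start_schedule maps to step_schedule_start.
        body["init_image"] = init_image_b64
        body["init_image_mode"] = "STEP_SCHEDULE"
        body["step_schedule_start"] = start_schedule
    return body
```

The same function covers both the first generation and later edits; the only difference is whether an initial image is supplied.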

Test and evaluate the application

The following screenshot shows a simple UI. You can choose to either generate a new image or edit an image using text prompts.

The following screenshots show iterations of sample logos we created using the UI. The text prompts are included under each image.

Clean up

To clean up, delete the CloudFormation stack and the S3 bucket you created. Empty the bucket first, because Amazon S3 deletes only empty buckets.
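The cleanup can also be scripted. This minimal sketch assumes the clients are created by the caller, boto3.client("cloudformation") and boto3.resource("s3").Bucket(name), and passes them in so the function can be checked without AWS credentials. The function name is hypothetical. If versioning is enabled on the bucket, you would also need to delete the object versions (bucket.object_versions.all().delete()) before the bucket itself.

```python
def clean_up(cfn_client, s3_bucket, stack_name: str) -> None:
    """Delete the stack and wait for it, then empty and delete the output bucket."""
    cfn_client.delete_stack(StackName=stack_name)
    cfn_client.get_waiter("stack_delete_complete").wait(StackName=stack_name)
    # Amazon S3 refuses to delete a bucket that still contains objects.
    s3_bucket.objects.all().delete()
    s3_bucket.delete()
```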

Conclusion

In this post, we explored how you can use Stability AI models on Amazon Bedrock to generate and edit images. By following the instructions and using the provided CloudFormation template and frontend code, you can generate unique and personalized images and logos for your business. Try generating and editing your own logos, and let us know what you think in the comments. To explore more AI use cases, refer to AI Use Case Explorer.

About the authors

Pyone Thant Win is a Partner Solutions Architect focused on AI/ML and computer vision. Pyone is passionate about enabling AWS Partners through technical best practices and using the latest technologies to showcase the art of the possible.

Nneoma Okoroafor is a Partner Solutions Architect focused on helping partners follow best practices by conducting technical validations. She specializes in assisting AI/ML and generative AI partners, providing guidance to make sure they’re using the latest technologies and techniques to deliver innovative solutions to customers.
