Rethinking LLM Memorization – Machine Learning Blog | ML@CMU

Introduction

A central question in the discussion of large language models (LLMs) concerns the extent to which they memorize their training data versus how they generalize to new tasks and settings. Most practitioners seem to (at least informally) believe that LLMs do some degree of both: they clearly memorize parts of the training data (for example, they are often able to reproduce large portions of training data verbatim [Carlini et al., 2023]), but they also seem to learn from this data, allowing them to generalize to new settings. The precise extent to which they do one or the other has massive implications for the practical and legal aspects of such models [Cooper et al., 2023]. Do LLMs truly produce new content, or do they only remix their training data? Should the act of training on copyrighted data be deemed an unfair use of data, or should fair use be judged by some notion of model memorization? When dealing with humans, we distinguish plagiarizing content from learning from it, but how should this extend to LLMs? The answer inherently relates to the definition of memorization for LLMs and the extent to which they memorize their training data.

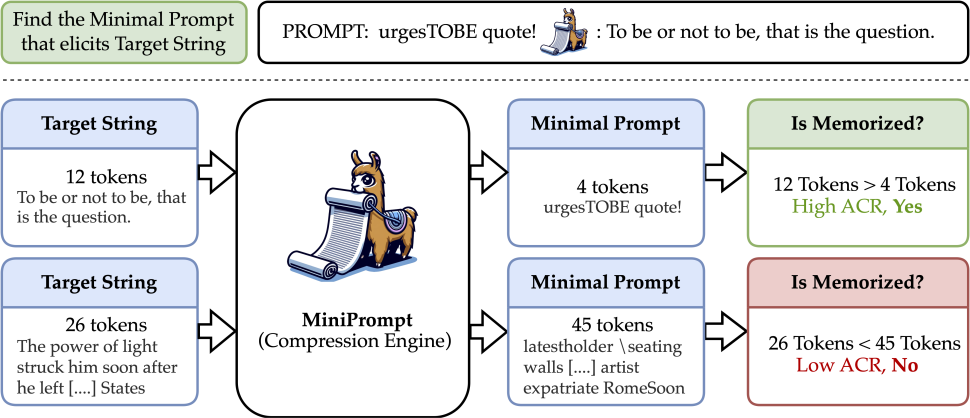

However, even defining memorization for LLMs is challenging, and many existing definitions leave a lot to be desired. In our recent paper (project page), we propose a new definition of memorization based on a compression argument. Our definition posits that

a phrase present in the training data is memorized if we can make the model reproduce the phrase using a prompt (much) shorter than the phrase itself.

Operationalizing this definition requires finding the shortest adversarial input prompt that is specifically optimized to produce a target output. We call the ratio of output tokens to input tokens the Adversarial Compression Ratio (ACR). In other words, memorization is inherently tied to whether a certain output can be represented in a compressed form beyond what language models can do with typical text. We argue that such a definition provides an intuitive notion of memorization. If a certain phrase exists within the LLM training data (e.g., is not itself generated text) and it can be reproduced with fewer input tokens than output tokens, then the phrase must be stored somehow within the weights of the LLM. Although it may be more natural to consider compression in terms of the LLM-based notions of input/output perplexity, we argue that a simple compression ratio based on input/output token counts provides a more intuitive explanation to non-technical audiences and has the potential to serve as a legal basis for important questions about memorization and permissible data use. In addition to its intuitive nature, our definition has several other desirable qualities. We show that it appropriately ascribes many famous quotes as being memorized by existing LLMs (i.e., they have high ACR values). On the other hand, we find that text not in the training data of an LLM, such as samples posted on the internet after the training period, is not compressible; that is, its ACR is low.

We examine several unlearning methods using ACR to show that they do not substantially affect the memorization of the model. That is, even after explicit finetuning, models asked to “forget” certain pieces of content are still able to reproduce them with a high ACR, in fact not much smaller than with the original model. Our approach provides a simple and practical perspective on what memorization can mean, providing a useful tool for functional and legal analysis of LLMs.

Why We Need A New Definition

With LLMs ingesting more and more data, questions about their memorization are attracting attention [e.g., Carlini et al., 2019, 2023; Nasr et al., 2023; Zhang et al., 2023]. There remains a pressing need to accurately define memorization in a way that serves as a practical tool to ascertain the fair use of public data from a legal standpoint. To ground the problem, consider the court’s role in determining whether an LLM is breaching copyright. What constitutes a breach of copyright remains contentious, and prior work defines this on a spectrum from ‘training on a data point itself constitutes violation’ to ‘copyright violation only occurs if a model verbatim regurgitates training data.’ To formalize our argument for a new notion of memorization, we start with three definitions from prior work to highlight some of the gaps in the current thinking about memorization.

Discoverable memorization [Carlini et al., 2023], which says a string is memorized if the first few words elicit the rest of the quote exactly, has three particular problems. It is very permissive, easy to evade, and requires validation data to set parameters. Another notion is Extractable Memorization [Nasr et al., 2023], which says that a string is memorized if there exists a prompt that elicits the string in response. This falls too far on the other side of the issue by being very restrictive: what if the prompt includes the entire string in question, or worse, the instructions to repeat it? LLMs that are good at repeating will follow that instruction and output any string they are asked to. The risk is that it is possible to label any element of the training set as memorized, rendering this definition unfit in practice. Another definition is Counterfactual Memorization [Zhang et al., 2023], which aims to separate memorization from generalization and is tested by retraining many LLMs. Given the cost of training LLMs, such a definition is impractical for legal use.

In addition to these definitions from prior work on LLM memorization, several other seemingly viable approaches to memorization exist. Ultimately, we argue that all of these frameworks (the definitions in existing work and the approaches described below) are each missing key elements of a good definition for assessing fair use of data.

Membership is not memorization. Perhaps if a copyrighted piece of data is in the training set at all, we might consider it a problem. However, there is a subtle but crucial difference between training set membership and memorization. In particular, the ongoing lawsuits in the field [e.g., as covered by Metz and Robertson, 2024] leave open the possibility that reproducing another’s creative work is problematic, but training on samples from that data may not be. This is common practice in the arts: consider that a copycat comedian telling someone else’s jokes is stealing, but an up-and-comer learning from tapes of the greats is doing nothing wrong. So while membership inference attacks (MIAs) [e.g., Shokri et al., 2017] may look like tests for memorization, and they are even intimately related to auditing machine unlearning [Carlini et al., 2021, Pawelczyk et al., 2023, Choi et al., 2024], they have three issues as tests for memorization. Specifically, they are very restrictive, they are hard to arbitrate, and evaluation techniques are brittle.

Adversarial Compression Ratio

Our definition of memorization is based on answering the following question: Given a piece of text, how short is the minimal prompt that elicits that text exactly? In this section, we formally define and introduce our MiniPrompt algorithm that we use to answer our central question.

To begin, let a target natural text string \(s\) have a token sequence representation \(x \in \mathcal{V}^*\), which is a list of integer-valued indices that index a given vocabulary \(\mathcal{V}\). We use \(|\cdot|\) to count the length of a token sequence. A tokenizer \(T: s \mapsto x\) maps from strings to token sequences. Let \(M\) be an LLM that takes a list of tokens as input and outputs the next token probabilities. Consider that \(M\) can perform generation by repeatedly predicting the next token from all the previous tokens with the argmax of its output appended to the sequence at each step (this process is called greedy decoding). With a slight abuse of notation, we will also call the greedy decoding result the output of \(M\). Let \(y\) be the token sequence generated by \(M\), which we call a completion or response: \(y = M(x)\), which in natural language says that the model generates \(y\) when prompted with \(x\) or that \(x\) elicits \(y\) as a response from \(M\). So our compression ratio ACR is defined for a target sequence \(y\) as ACR\((M, y) = \frac{|y|}{|x^*|}\), where \(x^* = \operatorname{argmin}_{x} |x|\) s.t. \(M(x) = y\).

Definition [\(\tau\)-Compressible Memorization] Given a generative model \(M\), a sample \(y\) from the training data is \(\tau\)-memorized if ACR\((M, y) > \tau(y)\).
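To make the definition concrete, here is a minimal sketch on a toy setting. It is not the MiniPrompt algorithm: it finds the shortest prompt by exhaustive search, which is only feasible because the vocabulary has four tokens. The "model" is a hypothetical lookup table standing in for the argmax of an LLM's next-token output, and all names (`greedy_decode`, `shortest_prompt`, `acr`, `toy_next_token`) are illustrative assumptions.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical stand-in for "argmax of M's next-token probabilities".
NextToken = Callable[[Sequence[int]], int]


def greedy_decode(next_token: NextToken, prompt: Sequence[int], n_tokens: int) -> list:
    """Append the model's top token n_tokens times and return only the completion."""
    seq = list(prompt)
    for _ in range(n_tokens):
        seq.append(next_token(seq))
    return seq[len(prompt):]


def shortest_prompt(next_token: NextToken, vocab: Sequence[int],
                    target: Sequence[int], max_len: int) -> Optional[Tuple[int, ...]]:
    """Brute-force search for the shortest x with M(x) = y (toy-sized vocabularies only)."""
    for length in range(1, max_len + 1):
        for prompt in product(vocab, repeat=length):
            if greedy_decode(next_token, prompt, len(target)) == list(target):
                return prompt
    return None  # no prompt of length <= max_len elicits the target


def acr(target: Sequence[int], prompt: Sequence[int]) -> float:
    """Adversarial Compression Ratio: |y| / |x*|."""
    return len(target) / len(prompt)


# Toy model: the next token depends only on the last token.
TABLE = {3: 1, 1: 2, 2: 0, 0: 0}


def toy_next_token(seq: Sequence[int]) -> int:
    return TABLE[seq[-1]]


target = [1, 2, 0, 0]
x_star = shortest_prompt(toy_next_token, range(4), target, max_len=3)
ratio = acr(target, x_star)
memorized = ratio > 1.0  # tau = 1
print(x_star, ratio, memorized)
```

In this toy case a single token elicits the four-token target, so the ratio exceeds one. For a real LLM the search space is far too large to enumerate, which is why the prompt has to be found by optimization.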

The threshold \(\tau(y)\) is a configurable parameter of this definition. We might choose to compare the ACR to the compression ratio of the text when run through a general-purpose compression program (explicitly assumed not to have memorized any such text) such as GZIP [Gailly and Adler, 1992] or SMAZ [Sanfilippo, 2006]. This amounts to setting \(\tau(y)\) equal to the SMAZ compression ratio of \(y\), for example. Alternatively, one might even use the compression ratio of the arithmetic encoding under another LLM as a comparison point, for example, if it was known with certainty that the LLM was never trained on the target output and hence could not have memorized it [Delétang et al., 2023]. In reality, copyright attribution cases are always subjective, and the goal of this work is not to argue for the right threshold function but rather to advocate for the adversarial compression framework for arbitrating fair data use. Thus, we use \(\tau = 1\), which we believe has substantial practical value.
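As a rough illustration of a compressor-based threshold, the sketch below computes a GZIP compression ratio with Python's standard `gzip` module. Measuring the ratio in UTF-8 bytes (raw size divided by compressed size) is an assumption made here for simplicity; the text above does not fix the units, and the ACR itself is measured in tokens. The function names are hypothetical.

```python
import gzip


def gzip_compression_ratio(text: str) -> float:
    """Raw UTF-8 byte length divided by gzip-compressed byte length."""
    raw = text.encode("utf-8")
    if not raw:
        raise ValueError("cannot compute a compression ratio for an empty string")
    return len(raw) / len(gzip.compress(raw))


def tau_from_gzip(text: str) -> float:
    """One possible threshold function: tau(y) = gzip ratio of y (an assumption)."""
    return gzip_compression_ratio(text)


repetitive = "to be or not to be " * 50
short = "hi"
print(gzip_compression_ratio(repetitive) > 1.0)  # highly repetitive text compresses well
print(gzip_compression_ratio(short) < 1.0)       # header overhead dominates tiny inputs
```

The second case shows why a compressor designed for short strings, such as SMAZ, may be a more suitable baseline for sentence-length samples: GZIP's fixed header and trailer make very short inputs grow rather than shrink.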

Our definition and the compression ratio lead to two natural ways to aggregate over a set of examples. First, we can average the ratio over all samples/test strings and report the average compression ratio (this is \(\tau\)-independent). Second, we can label samples with a ratio greater than one as memorized and discuss the portion memorized over some set of test cases (for our choice of \(\tau = 1\)).
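The two aggregates can be sketched in a few lines. The ratios below are made-up numbers for illustration only, not results from the paper, and the function names are hypothetical.

```python
from typing import Sequence


def average_acr(ratios: Sequence[float]) -> float:
    """Mean compression ratio over a set of samples (does not depend on tau)."""
    return sum(ratios) / len(ratios)


def portion_memorized(ratios: Sequence[float], tau: float = 1.0) -> float:
    """Fraction of samples whose ratio is strictly greater than tau."""
    return sum(r > tau for r in ratios) / len(ratios)


# Illustrative ACR values for four hypothetical test strings.
ratios = [0.5, 2.0, 1.0, 4.0]
print(average_acr(ratios))        # 1.875
print(portion_memorized(ratios))  # 0.5
```

Note that a sample with a ratio of exactly one is not counted as memorized, since the definition uses a strict inequality.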

Empirical Findings

Model Size vs. Memorization: Since prior work has proposed alternative definitions of memorization that show that bigger models memorize more [Carlini et al., 2023], we ask whether our definition leads to the same finding. We find the same trends under our definition, meaning our view of memorization is consistent with existing scientific findings.

Unlearning for Privacy: We further experiment with models finetuned on synthetic data, which show that completion-based tests (i.e., the model’s ability to generate a specific output) often fail to fully reflect the model’s memorization. However, the ACR captures the persistence of memorization even after moderate attempts at unlearning.

Four Categories of Data for Validation: We also validate the ACR as a metric using four different types of data: random sequences, famous quotes, Wikipedia sentences, and recent Associated Press (AP) articles. The goal is to ensure that the ACR aligns with intuitive expectations of memorization. Our results show that random sequences and recent AP articles, which the models were not trained on, are not compressible (i.e., not memorized). Famous quotes, which are repeated in the training data, show high compression ratios, indicating memorization. Wikipedia sentences fall between the two extremes, as some of them are memorized. These results validate that ACR meaningfully identifies memorization in data that is more common or repeated in the training set, while appropriately labelling unseen data as not memorized.

When proposing new definitions, we are tasked with justifying why a new one is needed as well as showing its ability to capture a phenomenon of interest. This stands in contrast to developing detection/classification tools whose accuracy can easily be measured using labeled data. It is difficult by nature to define memorization, as there is no set of ground truth labels that indicate which samples are memorized. Consequently, the criteria for a memorization definition should rely on how useful it is. Our definition is a promising direction for future regulation on LLM fair use of data, as well as for helping model owners confidently release models trained on sensitive data without releasing that data. Deploying our framework in practice may require careful thought about how to set the compression threshold, but as it relates to the legal setting this is not a limitation, as lawsuits always have some subjectivity [Downing, 2024]. Furthermore, as evidence in a court, this metric would not provide a binary test on which a suit could be decided; rather, it would be one piece of a body of evidence, some of which is more probative than the rest. Our hope is to provide regulators, model owners, and the courts a mechanism to measure the extent to which a model contains a particular string within its weights and to make discussion about data usage more grounded and quantitative.

References

- Nicholas Carlini, Chang Liu, Úlfar Erlingsson, Jernej Kos, and Dawn Song. The secret sharer: Evaluating and testing unintended memorization in neural networks. In 28th USENIX Security Symposium (USENIX Security 19), pages 267–284, 2019.

- Nicholas Carlini, Steve Chien, Milad Nasr, Shuang Song, Andreas Terzis, and Florian Tramèr. Membership inference attacks from first principles. arXiv preprint arXiv:2112.03570, 2021.

- Nicholas Carlini, Daphne Ippolito, Matthew Jagielski, Katherine Lee, Florian Tramèr, and Chiyuan Zhang. Quantifying memorization across neural language models, 2023.

- Dami Choi, Yonadav Shavit, and David K Duvenaud. Tools for verifying neural models’ training data. Advances in Neural Information Processing Systems, 36, 2024.

- A Feder Cooper, Katherine Lee, James Grimmelmann, Daphne Ippolito, Christopher Callison-Burch, Christopher A Choquette-Choo, Niloofar Mireshghallah, Miles Brundage, David Mimno, Madiha Zahrah Choksi, et al. Report of the 1st workshop on generative AI and law. arXiv preprint arXiv:2311.06477, 2023.

- Grégoire Delétang, Anian Ruoss, Paul-Ambroise Duquenne, Elliot Catt, Tim Genewein, Christopher Mattern, Jordi Grau-Moya, Li Kevin Wenliang, Matthew Aitchison, Laurent Orseau, et al. Language modeling is compression. arXiv preprint arXiv:2309.10668, 2023.

- Kate Downing. Copyright fundamentals for AI researchers. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR), 2024. URL https://iclr.cc/media/iclr-2024/Slides/21804.pdf.

- Jean-Loup Gailly and Mark Adler. gzip. https://www.gnu.org/software/gzip/, 1992. Accessed: 2024-05-21.

- Cade Metz and Katie Robertson. OpenAI seeks to dismiss parts of The New York Times’s lawsuit. The New York Times, 2024. URL https://www.nytimes.com/2024/02/27/technology/openai-new-york-times-lawsuit.html.

- Milad Nasr, Nicholas Carlini, Jonathan Hayase, Matthew Jagielski, A Feder Cooper, Daphne Ippolito, Christopher A Choquette-Choo, Eric Wallace, Florian Tramèr, and Katherine Lee. Scalable extraction of training data from (production) language models. arXiv preprint arXiv:2311.17035, 2023.

- Martin Pawelczyk, Seth Neel, and Himabindu Lakkaraju. In-context unlearning: Language models as few shot unlearners. arXiv preprint arXiv:2310.07579, 2023.

- Salvatore Sanfilippo. Smaz: Small strings compression library. https://github.com/antirez/smaz, 2006. Accessed: 2024-05-21.

- Reza Shokri, Marco Stronati, Congzheng Song, and Vitaly Shmatikov. Membership inference attacks against machine learning models. In 2017 IEEE Symposium on Security and Privacy (SP), pages 3–18. IEEE, 2017.

- Chiyuan Zhang, Daphne Ippolito, Katherine Lee, Matthew Jagielski, Florian Tramèr, and Nicholas Carlini. Counterfactual memorization in neural language models. Advances in Neural Information Processing Systems, 36:39321–39362, 2023.