Introducing the AI Lakehouse – KDnuggets

Sponsored Content

The Lakehouse is an open data analytics architecture that decouples data storage from query engines. The Lakehouse is now the dominant platform for storing analytics data in the enterprise, but it lacks the capabilities needed to support building and operating AI systems.

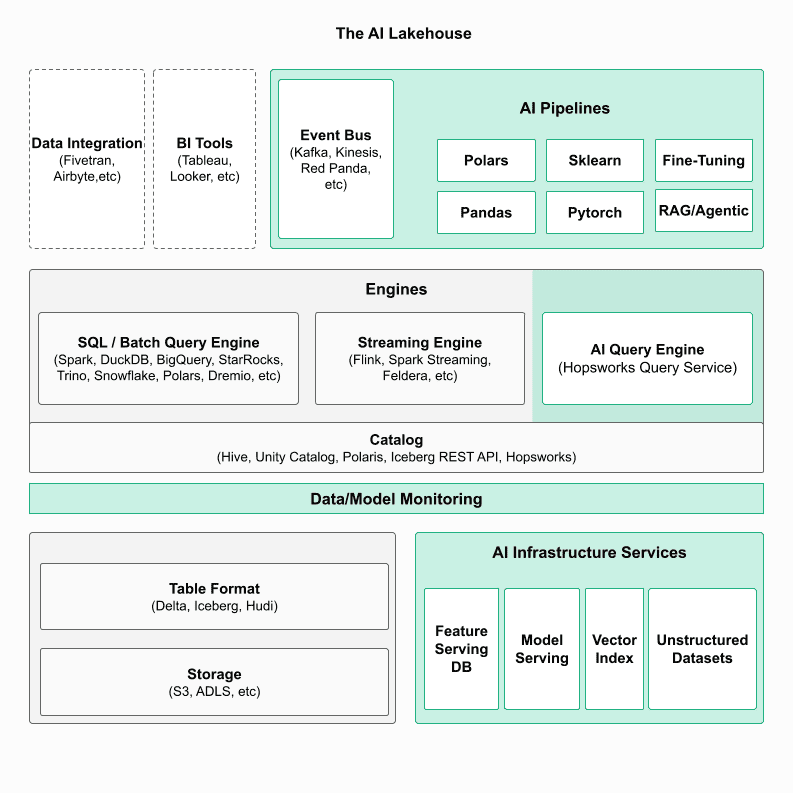

For the Lakehouse to become a unified data layer for both analytics and AI, it needs to be extended with new capabilities, as shown in Figure 1, for training and running batch, real-time, and large language model (LLM) AI applications.

The AI Lakehouse requires AI pipelines, an AI query engine, catalog(s) for AI assets and metadata (feature/model registry, lineage, reproducibility), and AI infrastructure services (model serving, a database for feature serving, a vector index for RAG, and governed datasets with unstructured data).

The new capabilities include:

- Real-Time Data Processing: The AI Lakehouse should be capable of supporting real-time AI systems, such as TikTok’s video recommendation engine. This requires “fresh” features created by streaming feature pipelines and delivered by a low-latency feature serving database.

- Native Python Support: Python is a second-class citizen in the Lakehouse, with poor read/write performance. The AI Lakehouse should provide a Python (AI) Query Engine that offers high-performance reading from and writing to Lakehouse tables, including temporal joins that produce point-in-time correct training data (no data leakage). Netflix implemented a fast Python client using Arrow for their Apache Iceberg Lakehouse, resulting in significant productivity gains.

- Integration Challenges: MLOps platforms connect data to models but aren’t fully integrated with Lakehouse systems. This disconnect results in almost half of all AI models failing to reach production because data engineering and data science workflows are siloed.

- Unified Monitoring: The AI Lakehouse supports unified data and model monitoring by storing inference logs, which are used to monitor both data quality and model performance. This helps detect and address drift and other issues early.

- More Features for Real-Time AI Systems: The Snowflake schema data model enables the reuse of features across different AI models. It also lets real-time AI systems retrieve more precomputed features using fewer entity IDs, because foreign keys enable retrieval of features for many entities with only a single entity ID.
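
The point-in-time correct temporal join mentioned under Native Python Support can be illustrated with a small sketch. It uses only the Python standard library and is not the API of any particular Lakehouse or feature store. The function name `point_in_time_join` and the tuple layouts are hypothetical, chosen for illustration. For each labeled event, the join picks the most recent feature value recorded at or before the event time, so no future information leaks into the training data.

```python
from bisect import bisect_right
from datetime import datetime


def point_in_time_join(labels, feature_rows):
    """Attach to each label the latest feature value known at the label's event time.

    labels:       list of (entity_id, event_time, label)      -- hypothetical layout
    feature_rows: list of (entity_id, feature_time, value)    -- hypothetical layout
    Returns a list of (entity_id, event_time, feature_value, label).
    """
    # Group feature rows per entity, ordered by their timestamp.
    by_entity = {}
    for entity_id, ts, value in sorted(feature_rows, key=lambda r: (r[0], r[1])):
        times, values = by_entity.setdefault(entity_id, ([], []))
        times.append(ts)
        values.append(value)

    joined = []
    for entity_id, event_time, label in labels:
        times, values = by_entity.get(entity_id, ([], []))
        # Count the feature rows with timestamp <= event_time.
        idx = bisect_right(times, event_time)
        # No feature was known yet at event time: leave it missing rather than
        # borrowing a later value, which would be data leakage.
        feature = values[idx - 1] if idx > 0 else None
        joined.append((entity_id, event_time, feature, label))
    return joined


# Made-up example data, for illustration only.
feature_rows = [
    ("user_1", datetime(2024, 1, 1), 10.0),
    ("user_1", datetime(2024, 1, 5), 20.0),
    ("user_1", datetime(2024, 1, 9), 30.0),
    ("user_2", datetime(2024, 1, 3), 7.0),
]
labels = [
    ("user_1", datetime(2024, 1, 6), 1),
    ("user_2", datetime(2024, 1, 2), 0),
]

training_rows = point_in_time_join(labels, feature_rows)
for row in training_rows:
    print(row)
```

In this example the `user_1` label on January 6 is paired with the January 5 feature value rather than the later January 9 one, and the `user_2` label gets no feature because none existed yet. For tabular data, `pandas.merge_asof` with its default backward direction performs the same kind of join.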

The AI Lakehouse is the evolution of the Lakehouse to meet the demands of batch, real-time, and LLM AI applications. By addressing real-time processing, enhancing Python support, improving monitoring, and supporting Snowflake schema data models, the AI Lakehouse will become the foundation for the next generation of intelligent applications.

This article is an abridged highlight of the main article.

Try the Hopsworks AI Lakehouse for free on Serverless or on Kubernetes.
