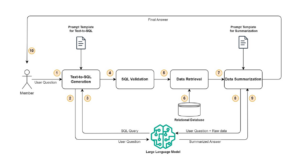

LLM For Structured Data

Large Language Models (LLMs) can be used to extract insightful information from structured data, help users perform queries, and generate new datasets.

Retrieval-Augmented Generation is great for filtering data and extracting observations.

LLMs can be used to generate code that executes complex queries against structured datasets.

LLMs can also generate synthetic data with user-defined types and statistical properties.

It's estimated that 80% to 90% of the data worldwide is unstructured. However, when we look for data in a specific domain or organization, we often end up finding structured data. The most likely reason is that structured data is still the de facto standard for quantitative information.

Consequently, in the age of Large Language Models (LLMs), structured data still is and will continue to be relevant; even Microsoft is working on adding Large Language Models (LLMs) to Excel!

LLMs are mostly used with unstructured data, particularly text, but with the proper tools, they can also help tackle tasks with structured data. Given some context or examples of the structured data in the prompt, along with a sentence stating what information we want to retrieve, LLMs can get insights and patterns from the data, generate code to extract statistics and other metrics, and even generate new data with the same characteristics.

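In this article, I'll describe and demonstrate with examples three structured data use cases for LLMs, namely:

- Filtering data with Retrieval-Augmented Generation (RAG)

- Generating code for operations with the entire dataset

- Generating synthetic structured data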

Prerequisites for hands-on examples

All examples in this article use OpenAI GPT-3.5 Turbo, the OpenAI library, and LangChain on Python 3.11. We'll use the well-known Titanic dataset, which you can download as a CSV file and load as a Pandas DataFrame.

I've prepared Jupyter notebooks for the three examples in the article. Note that you'll need an API key to use the GPT-3.5 Turbo model, which you can create on the OpenAI platform after registering an account. This usage has costs, but the bill will be less than 5 cents (as per pricing in the fall of 2024) to run the examples from this article.

Here are the complete installation instructions for all dependencies:

Use case 1: Filtering data with Retrieval-Augmented Generation

Structured datasets usually present large quantities of data organized in many rows and columns. When retrieving information from a dataset, one of the most common tasks is to find and filter rows that match specific criteria.

With SQL, we need to write a query with WHERE clauses that map our criteria. For example, to find the names of all children on the Titanic:
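As a minimal sketch of such a query, the snippet below runs a WHERE filter against an in-memory SQLite table from Python. The table name `titanic`, the made-up passenger rows, and the age threshold of 18 for "children" are all assumptions chosen for illustration; only the `Name`, `Age`, and `Pclass` column names follow the usual Titanic CSV layout.

```python
import sqlite3

# In-memory database with a few made-up rows (not real Titanic records).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE titanic (Name TEXT, Age REAL, Pclass INTEGER)")
conn.executemany(
    "INSERT INTO titanic VALUES (?, ?, ?)",
    [
        ("Passenger A", 4.0, 3),
        ("Passenger B", 35.0, 1),
        ("Passenger C", 16.0, 2),
        ("Passenger D", None, 3),  # missing age, excluded by the comparison
    ],
)

# Assumption: a "child" is anyone younger than 18.
CHILD_AGE_LIMIT = 18

query = "SELECT Name FROM titanic WHERE Age < ? ORDER BY Name"
children = [row[0] for row in conn.execute(query, (CHILD_AGE_LIMIT,))]
conn.close()

print(children)
```

Each extra criterion (ticket class, number of relatives aboard, and so on) becomes another condition joined with AND or OR, which is where hand-written queries start to grow.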

While this query is relatively simple, it can get complicated quickly as we add more conditions. Let's see how LLMs can simplify this.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation (RAG) is a common approach for building LLM applications. The idea is to include information from external documents in the prompt so that the LLM can use the additional context to provide better answers.

Including external data in the prompt solves two common issues with LLMs:

- The knowledge cutoff problem arises because any LLM is trained with data curated up to a certain point in time. It will not be able to answer questions about events that occurred after that date.

- Hallucinations occur because an LLM generates its output based on probabilistic reasoning, which can make it produce factually incorrect content.

RAG can overcome these limitations because the additional information included in the prompt extends the knowledge base of the model and reduces the probability of it producing results that are not aligned with the provided context.

Applying Retrieval-Augmented Generation to structured data

To understand how we can leverage RAG for structured data, let's consider a simple tabular dataset with a few observations and variables.

The first step is to convert the data into a numeric representation so that the LLM can use it. This can be done by using an embedding model, whose goal is to transform each dataset observation into an abstract multi-dimensional numeric representation. All variables of the dataset are mapped to a new set of numeric features that capture characteristics and relationships of the data in such a way that similar observations have similar embedding representations.

There are many pre-trained embedding models ready to be used. Choosing the one most suitable for our use case is not always straightforward: we can focus on specific characteristics of the model (e.g., size of the embedding representation), and we can check leaderboards to see what models achieve state-of-the-art results in different tasks (e.g., the MTEB Leaderboard).

To validate that the model we have chosen is suitable, we can assess the retrieval quality by checking the relevance of the retrieved observations considering the provided query. For example, we can select a query, retrieve the k most similar observations based on the embeddings, and calculate how many retrieved items are relevant (i.e., precision).
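The precision check described above can be sketched in a few lines. The function name `precision_at_k`, the row identifiers, and the set of relevant rows are hypothetical; in practice the relevant set would come from manually labeled query results.

```python
def precision_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the top-k retrieved items that are labeled relevant."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    relevant = set(relevant_ids)
    top_k = list(retrieved_ids)[:k]
    hits = sum(1 for item in top_k if item in relevant)
    return hits / k


# Hypothetical example: row ids returned by the retriever, best match first,
# and the row ids a human judged relevant for the same query.
retrieved = [3, 7, 1, 9, 4]
relevant = {1, 3, 8}

print(precision_at_k(retrieved, relevant, k=5))
print(precision_at_k(retrieved, relevant, k=2))
```

Averaging this value over a handful of representative queries gives a rough way to compare two candidate embedding models on the same dataset.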

We store the embeddings representing our observations in a vector database. Afterward, when the user sends a query, we can fetch the observations that are most similar from the database and use them as context in the prompt. This works great for queries whose goal is to find and filter specific data. However, RAG is not suitable for getting statistics and summary information for the entire structured dataset.
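To make the retrieval step concrete, here is a minimal sketch of similarity search over stored embeddings. It is not how Chroma or any specific vector database is implemented: the dictionary `store`, the three-dimensional vectors, and the helper names are assumptions, and real embeddings have hundreds or thousands of dimensions produced by an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), row_id) for row_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [row_id for _, row_id in scored[:k]]


# Toy "vector database": row id -> made-up embedding of that row.
store = {
    "row_0": [0.9, 0.1, 0.0],
    "row_1": [0.8, 0.2, 0.1],
    "row_2": [0.0, 0.1, 0.9],
}

query_embedding = [1.0, 0.0, 0.0]  # made-up embedding of the user query
nearest = top_k(query_embedding, store, k=2)
print(nearest)
```

Because only the k nearest rows reach the prompt, the model never sees the rest of the table, which is why this approach cannot answer questions that need every row, such as averages or counts.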

Hands-on example: Finding data points with RAG

We start by importing all the required LangChain modules: the OpenAI wrappers, the document loader for CSV files, the Chroma vector database, and the RAG pipeline wrapper.

We then instantiate the client for the embedding model and load the Titanic dataset from the CSV file. Afterward, we use the model to generate the embedding representations of the data and save them into a Chroma vector database.

To create the RAG pipeline, we start by configuring a retriever for the vector database, where we specify the number of records to be returned (in this case, 5). We then load the OpenAI model wrapper and create the RAG chain. chain_type="stuff" tells the pipeline to add the entire documents to the prompt.

Finally, we can send a query to the RAG pipeline. In this example, we ask for three Titanic passengers with at least two siblings or spouses aboard.

A possible output would be:

1) Goodwin, Master. Sidney Leonard (Age 1, Class 3)

2) Andersson, Miss. Sigrid Elisabeth (Age 11, Class 3)

3) Rice, Master. Eric (Age 7, Class 3)

Use case 2: Code generation for operations with the entire dataset

Although RAG allows us to find and filter specific data, it fails when the goal is to extract global metrics or statistics that require access to the entire dataset. One way to address this limitation is by using the LLM to generate code that can extract the desired information.

This is usually done with prompt templates that include a predefined set of instructions, which are completed with a small dataset sample (e.g., five rows), letting the LLM know the data structure. These instructions "configure" the LLM for its code generation purpose, and the user query will be translated into the respective code.

You can see below an example of a prompt template, where {first_5_rows} would be replaced by the first five observations of the dataset, and {input} would be replaced by the user query.
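As an illustration of how the two placeholders get filled, here is a minimal sketch using plain Python string formatting. The template wording is hypothetical and is not LangChain's actual prompt; the sample rows are made up and only mirror the Titanic column layout.

```python
# Hypothetical template: the wording is illustrative, not LangChain's actual prompt.
PROMPT_TEMPLATE = """You are working with a Pandas DataFrame in Python named `df`.
Use the sample rows to learn the column names and types, then write
Pandas code that answers the user question.

First 5 rows of `df`:
{first_5_rows}

Question: {input}"""

# Made-up sample rows that follow the Titanic column layout (not real records).
header = ["Name", "Age", "Pclass", "SibSp"]
sample_rows = [
    ["Passenger A", 22, 3, 1],
    ["Passenger B", 38, 1, 1],
    ["Passenger C", 26, 3, 0],
    ["Passenger D", 35, 1, 1],
    ["Passenger E", 54, 1, 0],
]


def render_table(header, rows):
    """Render the header and rows as a simple pipe-separated text table."""
    lines = [" | ".join(header)]
    lines += [" | ".join(str(value) for value in row) for row in rows]
    return "\n".join(lines)


user_query = "What is the mean age per ticket class?"
prompt = PROMPT_TEMPLATE.format(
    first_5_rows=render_table(header, sample_rows),
    input=user_query,
)
print(prompt)
```

The sample exists only to show the schema: the LLM writes code against the column names it sees, and that code then runs on the full DataFrame.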

Creating Pandas queries with LangChain

The LangChain library offers several predefined functions for different Python data types. For example, it has the create_pandas_dataframe_agent function that implements the described behavior for Pandas DataFrames. You can find the underlying prompt templates on GitHub.

The example below uses this function to extract a complex statistic based on the entire dataset. Keep in mind that this approach has a security risk: the code returned by the LLM is executed automatically to obtain the expected result. Therefore, if, for some reason, malicious code is returned, this can put your machine at risk. To mitigate this issue, follow the LangChain security guidelines and run your code with the minimum required permissions for the application.

Hands-on example: Analyzing a Pandas DataFrame with LLM-generated code

We start by importing Pandas and the required LangChain modules: the OpenAI wrapper and the create_pandas_dataframe_agent function.

We then load the Titanic dataset as a Pandas DataFrame from the CSV file, and initialize the OpenAI model. Afterward, we use create_pandas_dataframe_agent to create the agent that will operate the LLM so that it generates the required code.

The agent_type="tool-calling" tells the agent that we are working with OpenAI tools, and allow_dangerous_code=True allows for the automatic execution of the returned code.

Finally, we can send the query to the agent. In this example, we want to get the mean age per ticket class of the people aboard the Titanic.

We can see from the logs that the LLM generates the correct code to extract this information from a Pandas DataFrame. The output is:

The mean age per ticket class is:

– Ticket Class 1: 38.23 years

– Ticket Class 2: 29.88 years

– Ticket Class 3: 25.14 years
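For reference, the kind of Pandas code an agent would plausibly generate for this question is a group-by aggregation. The sketch below is an assumption rather than the logged code: it uses a tiny made-up DataFrame with the Titanic column names `Pclass` and `Age`, so its numbers do not match the output above.

```python
import pandas as pd

# Made-up rows using the Titanic column names (not the real dataset).
df = pd.DataFrame(
    {
        "Pclass": [1, 1, 2, 2, 3, 3],
        "Age": [40.0, 36.0, 30.0, None, 20.0, 26.0],
    }
)

# Mean age per ticket class; missing ages are skipped by default.
mean_age_per_class = df.groupby("Pclass")["Age"].mean()
print(mean_age_per_class)
```

Because this runs over the whole DataFrame rather than a handful of retrieved rows, it can produce dataset-wide statistics that the RAG approach cannot.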

Use case 3: Synthetic structured data generation

When working with structured datasets, it is common to need more data with the same characteristics: we may need to augment training data for a machine learning model or generate anonymized data to protect sensitive information.

A common solution to address this need is to generate synthetic data with the same characteristics as the original dataset. LLMs can be used to generate high-quality synthetic structured data without requiring any dataset-specific training, which is a major advantage compared to previous methods like generative adversarial networks (GANs).

Using LLMs to generate synthetic data

One way to allow LLMs to excel at a task or in a domain for which they were not trained is to provide the necessary context within the prompt. Let's say I want to ask an LLM to write a summary about me. Without any context, it will not be able to do it because it hasn't learned any information about me in training. However, if I include a few sentences with details about myself in the prompt, the model will use that information to write a better summary.

Leveraging this idea, an easy solution to perform synthetic data generation with LLMs is to provide information about the data characteristics as part of the prompt. We can, for example, provide descriptive statistics as context for the model to use. We could include the entire original dataset in the prompt, assuming that the context window is big enough to fit all tokens and that we accept the performance and monetary costs. In practice, sending all data becomes unfeasible due to these limitations. Therefore, including summarized information is a more common approach.

In the following example, we include a Pandas-generated table with the descriptive statistics of the numeric columns in the prompt and ask for ten new synthetic data points.

Hands-on example: Generating synthetic data points with GPT-3.5 Turbo

We start by importing Pandas and the OpenAI library:

We then load the dataset CSV file and save the descriptive statistics table into a string variable.
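A minimal sketch of this step, under stated assumptions: instead of reading the CSV file, it builds a small made-up DataFrame with Titanic-style numeric columns, and it only assembles the chat messages without calling the OpenAI API. The prompt wording is hypothetical; the role/content message structure is the usual chat format.

```python
import pandas as pd

# Made-up numeric columns standing in for the Titanic CSV (not real records).
df = pd.DataFrame(
    {
        "Age": [22.0, 38.0, 26.0, 35.0, 54.0],
        "Fare": [7.25, 71.28, 7.93, 53.10, 51.86],
        "Pclass": [3, 1, 3, 1, 1],
    }
)

# describe() summarizes numeric columns: count, mean, std, min, quartiles, max.
stats_table = df.describe().to_string()

# Hypothetical prompt wording; the statistics table is passed as context.
messages = [
    {
        "role": "system",
        "content": (
            "You generate synthetic tabular data. New rows should be consistent "
            "with these descriptive statistics:\n" + stats_table
        ),
    },
    {
        "role": "user",
        "content": "Generate 10 synthetic examples of people aboard the Titanic.",
    },
]

print(messages[0]["content"])
```

Sending the statistics table instead of the raw rows keeps the prompt short and avoids exposing individual records, at the cost of losing relationships between columns that summary statistics do not capture.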

Finally, we instantiate the OpenAI client, send a system prompt that includes the descriptive statistics table, and ask for ten synthetic examples of people aboard the Titanic.

A possible output would be:

Sure! Here are 10 synthetic data observations generated based on the provided descriptive statistics:

Conclusion

As we saw through the use cases presented in the article, LLMs can do great things with structured data: they can find and filter specific data of interest, replacing the need for SQL queries; they can generate code capable of extracting meaningful statistics from the entire dataset; and they can be used to generate synthetic data with the same characteristics as the original data.

All the presented use cases exemplify common tasks that data scientists and analysts perform daily. Analyzing and understanding the data they are working with is probably the most time-consuming task these professionals face. As one of these practitioners, I find it much easier to select the data that I need through a simple English question than to write a complex SQL query. The same can be said about creating code to extract statistics and insights from a dataset or to generate new similar data. We've seen in this article that LLMs can perform these tasks quite well, achieving the desired results with minimal configuration and few libraries.

The future looks bright for the use of LLMs for structured data, with plenty of interesting challenges yet to be addressed. For example, LLMs sometimes generate inaccurate results, which may be hard to detect, particularly when considering the natural stochasticity of the model. In the presented use cases, this may be an issue: if I ask for specific criteria to be met, I need to make sure that the LLM complies. Techniques like RAG mitigate this behavior, but there is still work to be done.