Getting started with cross-region inference in Amazon Bedrock

With the advent of generative AI solutions, a paradigm shift is underway across industries, driven by organizations embracing foundation models to unlock unprecedented opportunities. Amazon Bedrock has emerged as the preferred choice for numerous customers seeking to innovate and launch generative AI applications, leading to an exponential surge in demand for model inference capabilities. Bedrock customers aim to scale their worldwide applications to accommodate growth, and they require additional burst capacity to handle unexpected surges in traffic. Currently, users might have to engineer their applications to handle traffic spikes by drawing on service quotas from multiple Regions, implementing complex techniques such as client-side load balancing across the AWS Regions where Amazon Bedrock is supported. However, this kind of dynamic demand is difficult to predict, increases operational overhead, introduces potential points of failure, and can prevent businesses from achieving true global resilience and continuous service availability.

Today, we are happy to announce the general availability of cross-region inference, a powerful feature that automatically routes inference requests coming to Amazon Bedrock across Regions. It offers developers using on-demand inference mode a seamless way to maintain availability, performance, and resiliency while absorbing incoming traffic spikes in applications powered by Amazon Bedrock. By opting in, developers no longer have to spend time and effort predicting demand fluctuations. Instead, cross-region inference dynamically routes traffic across multiple Regions, ensuring optimal availability for each request and smoother performance during high-usage periods. Moreover, this capability prioritizes the connected Amazon Bedrock API source/primary Region when possible, helping to minimize latency and improve responsiveness. As a result, customers can enhance their applications' reliability, performance, and efficiency.

Let us dig deeper into this feature. We will cover:

- Key features and benefits of cross-region inference

- Getting started with cross-region inference

- Code samples for defining and leveraging this feature

- How to think about migrating to cross-region inference

- Key considerations

- Best practices to follow for this feature

- Conclusion

Let’s dig in!

Key features and benefits

One of the main requirements from our customers is the ability to manage bursts and spiky traffic patterns across a variety of generative AI workloads and disparate request shapes. Some of the key features of cross-region inference include:

- Use capacity from multiple AWS Regions, allowing generative AI workloads to scale with demand.

- Compatibility with the existing Amazon Bedrock API.

- No additional routing or data transfer cost: you pay the same price per token for models as in your source/primary Region.

- Greater resilience to traffic bursts. This means users can focus on their core workloads and on writing the logic for their applications powered by Amazon Bedrock.

- The ability to choose from a range of preconfigured AWS Region sets tailored to your needs.

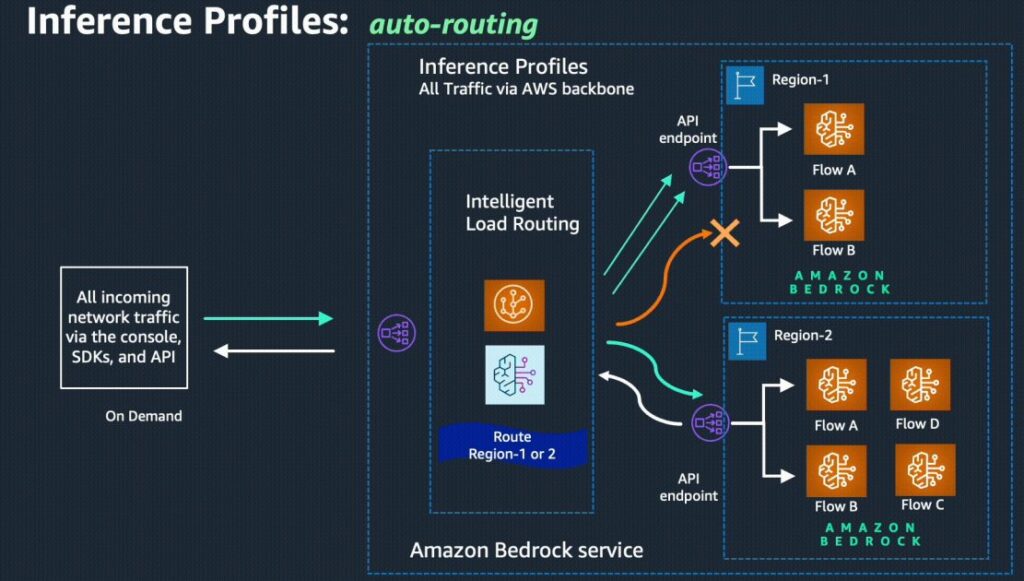

The image above illustrates how this feature works. Amazon Bedrock makes a real-time decision for every request made through cross-region inference. When a request arrives at Amazon Bedrock, a capacity check is performed in the same Region where the request originated. If there is enough capacity, the request is fulfilled there; otherwise, a second check determines a secondary Region that has capacity to take the request, the request is re-routed to that Region, and the results are returned for the customer's request. This capacity check was not previously available to customers, so they had to implement manual checks of each Region of choice after receiving an error, and then re-route. Furthermore, a typical custom re-routing implementation might be based on a round-robin mechanism with no insight into a Region's available capacity. With this new capability, Amazon Bedrock takes all aspects of traffic and capacity into account in real time and makes the decision on behalf of customers in a fully managed manner, at no additional cost.
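For contrast, here is a minimal sketch of the client-side approach described above: try Regions in a fixed round-robin order and move on only after a Region has returned a throttling error. `ThrottlingError`, `invoke_with_round_robin`, and the `invoke` callable are hypothetical stand-ins; with boto3 you would instead catch botocore's `ClientError` and inspect its error code.

```python
from itertools import cycle, islice
from typing import Callable, List


class ThrottlingError(Exception):
    """Hypothetical stand-in for a throttling error returned by one Region."""


def invoke_with_round_robin(
    regions: List[str],
    invoke: Callable[[str], str],
    start: int = 0,
) -> str:
    """Client-side failover: try each Region once, in round-robin order.

    There is no insight into available capacity here: the order is fixed,
    and a Region is skipped only after it has already returned an error.
    """
    # Rotate the list so different callers can spread load by varying `start`.
    order = list(islice(cycle(regions), start, start + len(regions)))
    errors = []
    for region in order:
        try:
            return invoke(region)
        except ThrottlingError as exc:
            errors.append(f"{region}: {exc}")  # record the failure and move on
    raise ThrottlingError("all Regions throttled: " + "; ".join(errors))
```

Every extra Region means another failed round trip before success, which is the overhead that cross-region inference removes by checking capacity before routing.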

A few points to be aware of:

- The AWS backbone network is used for data transfer between Regions instead of the internet or VPC peering, resulting in secure and reliable execution.

- The feature will try to serve the request from your primary Region first. It will route to other Regions in case of heavy traffic or bottlenecks, and load balance the requests.

- You can access a select list of models through cross-region inference; these are essentially Region-agnostic models made available across the entire Region set. You will be able to use a subset of the models available in Amazon Bedrock from anywhere inside the Region set, even if the model is not available in your primary Region.

- You can use this feature in the Amazon Bedrock model invocation APIs (InvokeModel and the Converse API).

- You can choose whether to use foundation models directly through their respective model identifiers or to use them through the cross-region inference mechanism. Any inference performed through this feature will consider on-demand capacity from all of its preconfigured Regions to maximize availability.

- Re-routing adds latency; in our testing, the added latency has been in the double-digit milliseconds.

- All terms applicable to the use of a particular model, including any end user license agreement, still apply when using cross-region inference.

- When using this feature, your throughput can reach up to double the allocated quotas in the Region that the inference profile is in. The increase in throughput only applies to invocations performed through inference profiles; the regular quota still applies if you opt for in-Region model invocation requests. To see quotas for on-demand throughput, refer to the Runtime quotas section in Quotas for Amazon Bedrock or use the Service Quotas console.
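A tiny worked example of the quota rule above. The quota of 200 requests per minute used in the usage note is a hypothetical figure; read your real values from the Service Quotas console.

```python
def on_demand_throughput_ceiling(in_region_quota: int, via_inference_profile: bool) -> int:
    """Upper bound on on-demand throughput under the rule described above.

    Invocations through an inference profile can reach up to double the quota
    allocated in the profile's Region; direct in-Region model invocations stay
    at the regular quota. This is a ceiling, not a guaranteed rate.
    """
    if in_region_quota < 0:
        raise ValueError("quota must be non-negative")
    return 2 * in_region_quota if via_inference_profile else in_region_quota
```

With a hypothetical quota of 200, calls through an inference profile can reach up to 400, while direct calls to the foundation model ID remain capped at 200.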

Definition of a secondary Region

Let us dive deep into a few important aspects:

- What is a secondary Region? As part of this launch, you can select either a US model or an EU model, each of which includes 2-3 preset Regions from that geographical location.

- Which models are included? As part of this launch, the Claude 3 family of models (Haiku, Sonnet, Opus) and Claude 3.5 Sonnet are available.

- Can we use PrivateLink? Yes, you can use your private links and ensure traffic flows through your VPC with this feature.

- Can we use Provisioned Throughput with this feature as well? Currently, this feature does not apply to Provisioned Throughput and can be used for on-demand inference only.

- When does workload traffic get re-routed? Cross-region inference first tries to serve your request through the primary Region (the Region of the connected Amazon Bedrock endpoint). As traffic spikes and Amazon Bedrock detects potential delays, traffic shifts proactively to the secondary Regions and is served from there.

- Where are the logs for cross-region inference? Logs and invocations remain in the primary Region and account where the request originates. Amazon Bedrock outputs indicators in the logs that show which Region actually served the request.

- Here is an example of what traffic patterns could look like (map not to scale).

A customer with a workload in eu-west-1 (Ireland) may choose both eu-west-3 (Paris) and eu-central-1 (Frankfurt) as a pair of secondary Regions, or a workload in us-east-1 (N. Virginia) may choose us-west-2 (Oregon) as a single secondary Region, or vice versa. This keeps all inference traffic within the United States of America or the European Union.
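The Region pairings in this example can be written down as a small lookup table. This is a sketch assuming exactly the US and EU sets named in this post; the authoritative, current membership of each set is whatever the inference profile itself lists, and `EXAMPLE_REGION_SETS` is a hypothetical name.

```python
from typing import Dict, Set

# Region sets taken from the examples in this post only; real profiles may
# include different or additional Regions.
EXAMPLE_REGION_SETS: Dict[str, Set[str]] = {
    "us": {"us-east-1", "us-west-2"},
    "eu": {"eu-west-1", "eu-west-3", "eu-central-1"},
}


def secondary_regions(primary_region: str) -> Set[str]:
    """Return the other Regions in the same example set as `primary_region`."""
    for regions in EXAMPLE_REGION_SETS.values():
        if primary_region in regions:
            return regions - {primary_region}
    raise ValueError(f"{primary_region} is not in any example Region set")


def stays_in_geography(primary_region: str, serving_region: str) -> bool:
    """True if a request from `primary_region` served in `serving_region` stays in one geography."""
    return serving_region == primary_region or serving_region in secondary_regions(primary_region)
```

For instance, `secondary_regions("eu-west-1")` yields Paris and Frankfurt, and a request from `us-east-1` is never expected to be served in `eu-west-1`.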

Security and architecture of cross-region inference

The next diagram reveals the high-level structure for a cross-region inference request:

The operational flow starts with an inference request arriving at a primary Region for an on-demand baseline model. Capacity evaluations are made on the primary Region and the secondary Region list, producing a Region capacity list sorted by available capacity. The Region with the most available capacity, in this case eu-central-1 (Frankfurt), is selected as the next target. The request is re-routed to Frankfurt over the AWS backbone network, ensuring that all traffic remains within the AWS network. The request bypasses the standard API entry point for the Amazon Bedrock service in the secondary Region and goes directly to the runtime inference service. The response is returned to the primary Region over the AWS backbone and then returned to the caller, as with a normal inference request. If processing in the chosen Region fails for any reason, the Region with the next-highest available capacity in the list is tried, eu-west-1 (Ireland) in this example, followed by eu-west-3 (Paris), until all configured Regions have been tried. If no Region in the list can handle the inference request, the API returns the standard "throttled" response.
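The re-routing flow above can be modeled in a few lines. This is a toy model for intuition only, not Amazon Bedrock's implementation: the capacity numbers, `route_request`, `RegionProcessingError`, and `ThrottledError` are all hypothetical.

```python
from typing import Callable, Dict


class ThrottledError(Exception):
    """Stand-in for the standard "throttled" response (hypothetical name)."""


class RegionProcessingError(Exception):
    """Hypothetical failure while processing a request in one Region."""


def route_request(available_capacity: Dict[str, float], process: Callable[[str], str]) -> str:
    """Toy model of the flow described above.

    `available_capacity` covers the primary Region and its secondary Regions.
    """
    # Region capacity list: highest available capacity first (ties keep input order).
    capacity_list = sorted(available_capacity, key=available_capacity.__getitem__, reverse=True)
    for region in capacity_list:
        try:
            return process(region)
        except RegionProcessingError:
            continue  # fall through to the Region with the next-highest capacity
    raise ThrottledError("no configured Region could handle the request")
```

With capacities such as Frankfurt 0.8, Ireland 0.3, and Paris 0.1, Frankfurt is tried first; if it fails, Ireland is next, mirroring the example in the text.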

Networking and data logging

AWS-to-AWS traffic flows, such as Region-to-Region (inclusive of Edge Locations and Direct Connect paths), always traverse AWS-owned and operated backbone paths. This not only reduces threats, such as common exploits and DDoS attacks, but also ensures that all internal AWS-to-AWS traffic uses only trusted network paths. This is combined with inter-Region and intra-Region path encryption and routing policy enforcement mechanisms, all of which use AWS secure facilities. Together, these enforcement mechanisms help ensure that AWS-to-AWS traffic never uses unencrypted or untrusted paths such as the internet, which means that all cross-region inference requests remain on the AWS backbone at all times.

Log entries will continue to be made in the original source Region for both Amazon CloudWatch and AWS CloudTrail, and there will be no additional logs in the re-routed Region. To indicate that re-routing occurred, the related entry in AWS CloudTrail will also include additional data identifying the Region that processed the request; this data is only added if the request was processed in a re-routed Region.
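A minimal sketch for checking re-routing from a parsed CloudTrail event. `awsRegion` and `additionalEventData` are standard CloudTrail record fields; the `inferenceRegion` key inside `additionalEventData` is an assumption here, so confirm the exact field name against a real event from your account.

```python
from typing import Any, Dict


def serving_region(event: Dict[str, Any]) -> str:
    """Return the Region that processed a Bedrock invocation.

    ASSUMPTION: the re-routing marker is additionalEventData.inferenceRegion.
    When it is absent, the request was processed in the source Region
    recorded in awsRegion.
    """
    extra = event.get("additionalEventData") or {}
    return extra.get("inferenceRegion", event["awsRegion"])


def was_rerouted(event: Dict[str, Any]) -> bool:
    """True if the event shows processing outside the source Region."""
    return serving_region(event) != event["awsRegion"]
```

Feeding CloudTrail records from the source Region through `was_rerouted` gives a quick count of how often bursts spilled over to secondary Regions.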

During an inference request, Amazon Bedrock does not log or otherwise store any of a customer's prompts or model responses. This remains true if cross-region inference re-routes a query from a primary Region to a secondary Region for processing: that secondary Region does not store any data related to the inference request, and no Amazon CloudWatch or AWS CloudTrail logs are stored in that secondary Region.

Identity and Access Management

AWS Identity and Access Management (IAM) is key to securely managing your identities and access to AWS services and resources. Before you can use cross-region inference, check that your role has access to the cross-region inference API actions; for more details, see the Amazon Bedrock documentation. For example, a policy can allow the caller to use cross-region inference with the InvokeModel* APIs for any model in the us-east-1 and us-west-2 Regions.
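A minimal sketch of such a policy, built as a Python dictionary. It assumes the policy must cover the foundation model ARNs in every Region of the set plus the inference profile ARN in the source Region, using the ARN formats shown later in this post; `cross_region_invoke_policy` is a hypothetical helper, and you should scope `Resource` more tightly for production.

```python
import json
from typing import Any, Dict, List


def cross_region_invoke_policy(account_id: str, source_region: str, regions: List[str]) -> Dict[str, Any]:
    """Build an identity policy allowing InvokeModel* through inference profiles."""
    # Foundation models are AWS-owned, so their ARNs have an empty account field.
    resources = [f"arn:aws:bedrock:{region}::foundation-model/*" for region in regions]
    # Inference profiles are addressed in the caller's account and source Region.
    resources.append(f"arn:aws:bedrock:{source_region}:{account_id}:inference-profile/*")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["bedrock:InvokeModel*"], "Resource": resources}
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID; substitute your own.
    print(json.dumps(cross_region_invoke_policy("111122223333", "us-east-1", ["us-east-1", "us-west-2"]), indent=2))
```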

Getting started with cross-region inference

To get started with cross-region inference, you use inference profiles in Amazon Bedrock. An inference profile for a model configures the model ARNs from different AWS Regions and abstracts them behind a unified model identifier (both an ID and an ARN). Simply by using this inference profile identifier with the InvokeModel or Converse API, you can use the cross-region inference feature.

Here are the steps to start using cross-region inference with the help of inference profiles:

- List inference profiles

  You can list the inference profiles available in your Region either by signing in to the Amazon Bedrock console or through the API.

  - Console

    - From the left-hand pane, select "Cross-region Inference".

    - Explore the different inference profiles available in your Region(s).

    - Copy the inference profile ID and use it in your application, as described in the section below.

    You can observe how different inference profiles have been configured for various geographic locations, each comprising multiple AWS Regions. For example, models with the prefix us. are configured for AWS Regions in the USA, whereas models with the prefix eu. are configured for Regions in the European Union (EU).

  - API

    It is also possible to list the inference profiles available in your Region via the boto3 SDK or the AWS CLI.

- Modify your application

  - Update your application to use the inference profile ID/ARN from the console or from the API response as the modelId in your requests through InvokeModel or Converse.

  - The inference profile will automatically manage inference throttling and re-route your request(s) across multiple AWS Regions (as per its configuration) during peak usage bursts.

- Monitor and adjust

  - Use Amazon CloudWatch to monitor your inference traffic and latency across Regions.

  - Adjust your use of inference profiles versus calling FMs directly, based on your observed traffic patterns and performance requirements.
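One way to make the "modify" and "adjust" steps cheap is to keep the modelId in configuration rather than in code, so switching between a foundation model ID and an inference profile ID is a deployment change. A minimal sketch; the `BEDROCK_MODEL_ID` environment variable and the default value are hypothetical choices, not something Amazon Bedrock reads itself.

```python
import os
from typing import Mapping, Optional

# Hypothetical variable name; use whatever fits your deployment tooling.
MODEL_ID_ENV_VAR = "BEDROCK_MODEL_ID"
# Default to the US inference profile for Claude 3.5 Sonnet.
DEFAULT_MODEL_ID = "us.anthropic.claude-3-5-sonnet-20240620-v1:0"


def resolve_model_id(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the modelId to send with InvokeModel or Converse.

    Setting the variable to a plain foundation model ID such as
    anthropic.claude-3-5-sonnet-20240620-v1:0 turns cross-region routing off
    without touching application code.
    """
    env = os.environ if env is None else env
    return env.get(MODEL_ID_ENV_VAR, DEFAULT_MODEL_ID)
```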

Code example to leverage inference profiles

Using inference profiles is similar to using foundation models in Amazon Bedrock with the InvokeModel or Converse API; the only difference in the modelId is the addition of a prefix such as us. or eu.

Foundation Model
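A minimal sketch of a Converse call that targets the foundation model ID directly. The client is passed in so the function stays testable; in practice it would be `boto3.client("bedrock-runtime", region_name="us-east-1")`. The `ask` helper and the `maxTokens` value are illustrative choices.

```python
from typing import Any

FOUNDATION_MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def ask(runtime_client: Any, prompt: str, model_id: str = FOUNDATION_MODEL_ID) -> str:
    """Send a single-turn prompt with the Converse API and return the reply text."""
    response = runtime_client.converse(
        modelId=model_id,  # plain foundation model ID: served in the client's Region only
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 512},
    )
    # Converse returns the assistant message under output.message.content.
    return response["output"]["message"]["content"][0]["text"]
```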

Inference Profile
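The same model reached through the US inference profile: only the modelId changes, gaining the us. prefix. This sketch uses InvokeModel with the Anthropic Messages request body (Converse works the same way); the client is assumed to be a `bedrock-runtime` boto3 client, and `ask_via_profile` is a hypothetical helper.

```python
import json
from typing import Any

INFERENCE_PROFILE_ID = "us.anthropic.claude-3-5-sonnet-20240620-v1:0"


def ask_via_profile(runtime_client: Any, prompt: str, profile_id: str = INFERENCE_PROFILE_ID) -> str:
    """Invoke Claude through a cross-region inference profile with InvokeModel."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",  # Messages API version used by Claude on Bedrock
        "max_tokens": 512,
        "messages": [{"role": "user", "content": [{"type": "text", "text": prompt}]}],
    }
    response = runtime_client.invoke_model(
        modelId=profile_id,  # the "us." prefix lets Bedrock route across the US Region set
        body=json.dumps(body),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read and decode the JSON payload.
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]
```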

Deep Dive

While it is straightforward to start using inference profiles, you first need to know which inference profiles are available in your Region. Start by listing the inference profiles and noting the models available for this feature. You can do this through the AWS CLI or an SDK.
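A minimal sketch of listing profiles with the SDK. It assumes a boto3 control-plane client (`boto3.client("bedrock")`, not `bedrock-runtime`), whose `list_inference_profiles` response carries `inferenceProfileSummaries` and an optional `nextToken`; the two helper functions are hypothetical.

```python
from typing import Any, Dict, List


def list_inference_profiles(bedrock_client: Any) -> List[Dict[str, Any]]:
    """Collect every inference profile summary, following nextToken pagination."""
    summaries: List[Dict[str, Any]] = []
    kwargs: Dict[str, Any] = {}
    while True:
        page = bedrock_client.list_inference_profiles(**kwargs)
        summaries.extend(page.get("inferenceProfileSummaries", []))
        token = page.get("nextToken")
        if not token:
            return summaries
        kwargs = {"nextToken": token}  # request the next page


def profiles_with_prefix(summaries: List[Dict[str, Any]], prefix: str) -> List[str]:
    """Return profile IDs for one geography, for example prefix "us." or "eu."."""
    return [s["inferenceProfileId"] for s in summaries if s["inferenceProfileId"].startswith(prefix)]
```

The AWS CLI equivalent is `aws bedrock list-inference-profiles`.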

The response lists each available inference profile, including its ID, its ARN, and the foundation model ARNs in each Region it can route to.

The difference between the ARN of a foundation model available through Amazon Bedrock and the ARN of an inference profile is as follows:

Foundation Model: arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-5-sonnet-20240620-v1:0

Inference Profile: arn:aws:bedrock:us-east-1:<account_id>:inference-profile/us.anthropic.claude-3-5-sonnet-20240620-v1:0
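The two ARN shapes above differ in the account field (empty for the AWS-owned foundation model) and in the resource type. A minimal parsing sketch; `BedrockArn` and `parse_bedrock_arn` are hypothetical helpers, and the split keeps the `:0` version suffix inside the resource ID.

```python
from typing import NamedTuple


class BedrockArn(NamedTuple):
    region: str
    account: str        # empty for AWS-owned foundation models
    resource_type: str  # "foundation-model" or "inference-profile"
    resource_id: str


def parse_bedrock_arn(arn: str) -> BedrockArn:
    # arn:partition:service:region:account:resource-type/resource-id
    # maxsplit=5 leaves any ":" inside the resource ID (such as ":0") intact.
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "bedrock":
        raise ValueError(f"not a Bedrock ARN: {arn}")
    resource_type, _, resource_id = parts[5].partition("/")
    return BedrockArn(parts[3], parts[4], resource_type, resource_id)
```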

Choose the configured inference profile and start sending inference requests to your model's endpoint as usual. Amazon Bedrock will automatically route and scale the requests across the configured Regions as needed. You can use either the ARN or the ID with the Converse API, but only the inference profile ID with the InvokeModel API. Also note which models are supported by the Converse API.

When calling these APIs, replace <your-primary-region-name> with your primary Region, such as the US Regions us-east-1 and us-west-2 or the EU Regions eu-central-1, eu-west-1, and eu-west-3. The <regional-prefix> then follows from that Region: either us or eu.
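A minimal sketch of deriving the regional prefix from the primary Region name. It assumes the prefix always matches the geography part of the Region name, which holds for the US and EU Regions in this post; the output of `list_inference_profiles` remains the authoritative source of valid IDs. Both functions are hypothetical helpers.

```python
def regional_prefix(primary_region: str) -> str:
    """Map a primary Region name to its inference profile prefix ("us" or "eu")."""
    geo = primary_region.split("-", 1)[0]
    if geo not in ("us", "eu"):
        raise ValueError(f"no cross-region inference prefix known for {primary_region}")
    return geo


def inference_profile_id(primary_region: str, model_id: str) -> str:
    """Build <regional-prefix>.<model-id>, the form used in the inference profile ARN."""
    return f"{regional_prefix(primary_region)}.{model_id}"
```

For example, a client created with `region_name="eu-central-1"` would use `inference_profile_id("eu-central-1", "anthropic.claude-3-haiku-20240307-v1:0")`, which gives `eu.anthropic.claude-3-haiku-20240307-v1:0`.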

Adapting your applications to use inference profiles for your Amazon Bedrock FMs is quick and easy with the steps above. No significant code changes are required on the client side; Amazon Bedrock handles cross-region inference transparently. Monitor CloudTrail logs to check whether your request was automatically re-routed to another Region, as described in the section above.

How to think about adopting the new cross-region inference feature

When considering the adoption of this new capability, it is essential to carefully evaluate your application requirements, traffic patterns, and existing infrastructure. Here is a step-by-step approach to help you plan and adopt cross-region inference:

- Assess your current workload and traffic patterns. Analyze your existing generative AI workloads and identify those that experience significant traffic bursts or have high availability requirements. Review your current traffic patterns as well, including peak loads, geographical distribution, and any seasonal or cyclical variations.

- Evaluate the potential benefits of cross-region inference. Consider the potential advantages of cross-region inference, such as increased burst capacity, improved availability, and better performance for global users. Estimate the potential cost savings from not having to implement and run custom logic of your own or pay for data transfer (or different per-token model prices across Regions), as well as the efficiency gains from consolidating multiple regional deployments into a single, fully managed distributed solution.

- Plan and execute the migration. Update your application code to use the inference profile ID/ARN instead of individual foundation model IDs, as described above. Test your application thoroughly in a non-production environment, simulating various traffic patterns and failure scenarios. Monitor your application's performance, latency, and cost during the migration, and make adjustments as needed.

- Develop new applications with cross-region inference in mind. For new application development, consider designing with cross-region inference as the foundation, using inference profiles from the start. Incorporate best practices for high availability, resilience, and global performance into your application architecture.

Key Considerations

Impact on Current Generative AI Workloads

Inference profiles are designed to be compatible with existing Amazon Bedrock APIs, such as InvokeModel and Converse. Any third-party or open source tool that uses these APIs, such as LangChain, can also be used with inference profiles. This means you can integrate inference profiles into your existing workloads without significant code changes. Simply update your application to use the inference profile ARN instead of individual model IDs, and Amazon Bedrock will handle the cross-region routing transparently.

Impact on Pricing

The feature comes at no additional cost to you. You pay the same price per token for individual models as in your primary/source Region. There is no additional cost associated with cross-region inference, including the failover capabilities provided by this feature. This covers management, data transfer, encryption, network usage, and potential differences in price per million tokens per model.

Regulations, Compliance, and Data Residency

Although no customer data is stored in either the primary or secondary Region(s) when using cross-region inference, keep in mind that your inference data will be processed and transmitted beyond your primary Region. If you have strict data residency or compliance requirements, carefully evaluate whether cross-region inference aligns with your policies and regulations.
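Part of that evaluation can be automated: compare the Regions a profile can route to against the Regions your policy allows. A minimal sketch, assuming each profile summary returned by `list_inference_profiles` contains a `models` list whose `modelArn` entries carry the Region in the fourth ARN field; both helpers and the sample data in the usage note are hypothetical.

```python
from typing import Any, Dict, Iterable, Set


def profile_regions(profile_summary: Dict[str, Any]) -> Set[str]:
    """Regions a profile can route to, read from the Region field of each model ARN."""
    # arn:aws:bedrock:<region>::foundation-model/<model-id> -> index 3 is the Region
    return {m["modelArn"].split(":")[3] for m in profile_summary.get("models", [])}


def disallowed_regions(profile_summary: Dict[str, Any], allowed: Iterable[str]) -> Set[str]:
    """Regions the profile may send data to that your residency policy does not allow."""
    return profile_regions(profile_summary) - set(allowed)
```

A non-empty result from `disallowed_regions` is a signal to keep that workload on direct, in-Region model IDs.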

Conclusion

In this blog, we introduced the latest feature from Amazon Bedrock, cross-region inference via inference profiles, gave a peek into how it operates, and dived into some of the how-tos and points for consideration. This feature empowers developers to enhance the reliability, performance, and efficiency of their applications without spending time and effort building complex resiliency structures. The feature is now generally available in the US and EU for supported models.

About the authors

Talha Chattha is a Generative AI Specialist Solutions Architect at Amazon Web Services, based in Stockholm. Talha helps establish practices to ease the path to production for Gen AI workloads. Talha is an expert in Amazon Bedrock and supports customers across the entire EMEA region. He is passionate about meta-agents, scalable on-demand inference, advanced RAG solutions, and cost-optimized prompt engineering with LLMs. When not shaping the future of AI, he explores the scenic European landscapes and delicious cuisines. Connect with Talha on LinkedIn at /in/talha-chattha/.

Rupinder Grewal is a Senior AI/ML Specialist Solutions Architect with AWS. He currently focuses on model serving and MLOps on Amazon SageMaker. Prior to this role, he worked as a Machine Learning Engineer building and hosting models. Outside of work, he enjoys playing tennis and biking on mountain trails.

Sumit Kumar is a Principal Product Manager, Technical on the AWS Bedrock team, based in Seattle. He has 12+ years of product management experience across a variety of domains and is passionate about AI/ML. Outside of work, Sumit loves to travel and enjoys playing cricket and lawn tennis.

Dr. Andrew Kane is an AWS Principal WW Tech Lead (AI Language Services) based out of London. He focuses on the AWS Language and Vision AI services, helping our customers architect multiple AI services into a single use-case-driven solution. Before joining AWS at the beginning of 2015, Andrew spent two decades working in the fields of signal processing, financial payments systems, weapons tracking, and editorial and publishing systems. He is a keen karate enthusiast (just one belt away from Black Belt) and an avid home-brewer, using automated brewing hardware and other IoT sensors.
