Answer.AI Releases answerai-colbert-small: A Proof of Concept for Smaller, Faster, Modern ColBERT Models

Answer.AI has unveiled a robust model called answerai-colbert-small-v1, showcasing the potential of multi-vector models when combined with advanced training techniques. This proof-of-concept model, developed using the JaColBERTv2.5 training recipe and additional optimizations, delivers remarkable performance despite its compact size of just 33 million parameters. Its efficiency is particularly noteworthy: it achieves these results while keeping a footprint comparable to MiniLM.

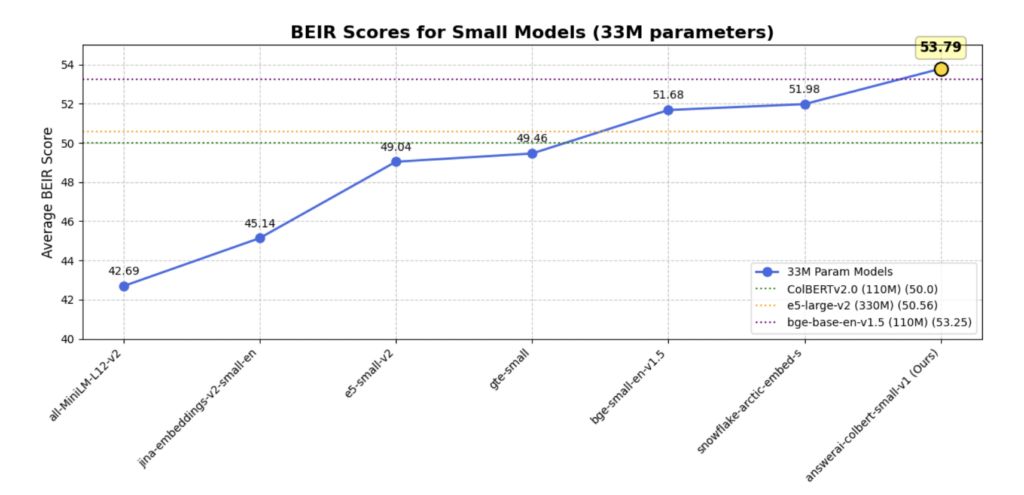

Surprisingly, answerai-colbert-small-v1 has surpassed all previous models of similar size on common benchmarks. Even more impressively, it has outperformed much larger and widely used models, including e5-large-v2 and bge-base-en-v1.5. This result underscores the potential of Answer.AI’s approach to pushing the boundaries of what is possible with smaller, more efficient AI models.

Multi-vector retrievers, introduced by the ColBERT model architecture, offer a distinctive approach to document representation. Unlike traditional methods that create a single vector per document, ColBERT generates multiple smaller vectors, each representing a single token. This technique addresses the information loss often associated with single-vector representations, particularly in out-of-domain generalization tasks. The architecture also incorporates query augmentation, using masked language modeling to enhance retrieval performance.
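The query augmentation mentioned above can be illustrated with a minimal sketch. In the original ColBERT design, short queries are padded with mask tokens up to a fixed length, and the encoder then produces an embedding for each mask position, which acts as a soft expansion of the query. The function name `augment_query`, the marker tokens, and the lengths used here are illustrative assumptions, not settings confirmed for answerai-colbert-small-v1.

```python
def augment_query(tokens, max_len=32, mask_token="[MASK]"):
    """Pad a tokenized query with mask tokens up to a fixed length.

    Queries longer than max_len are truncated. The default length and the
    token string are assumptions chosen for illustration only.
    """
    clipped = tokens[:max_len]
    return clipped + [mask_token] * (max_len - len(clipped))


# Hypothetical tokenized query; a real tokenizer would produce subword ids.
query_tokens = ["[CLS]", "[Q]", "what", "is", "colbert", "?", "[SEP]"]
augmented = augment_query(query_tokens, max_len=12)
print(augmented)
```

Only the padding step is shown; the contextual embeddings for the mask positions come from the encoder itself and are not modeled here.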

ColBERT’s MaxSim scoring mechanism calculates the similarity between query and document tokens: for each query token it takes the highest similarity to any document token, then sums these maxima. While this approach consistently improves out-of-domain generalization, it initially struggled on in-domain tasks and required significant memory and storage resources. ColBERTv2 addressed these issues by introducing a more modern training recipe, including in-batch negatives and knowledge distillation, along with a novel indexing approach that reduced storage requirements.
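As a rough illustration of MaxSim scoring, the sketch below uses plain Python lists in place of real token embeddings. The helper names and the toy 2-dimensional vectors are made up for this example; production implementations operate on batched tensors and compressed indexes.

```python
from math import sqrt


def normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs, doc_vecs):
    """For each query token, take its best match among the document tokens,
    then sum those maxima over all query tokens."""
    q = [normalize(v) for v in query_vecs]
    d = [normalize(v) for v in doc_vecs]
    return sum(max(dot(qv, dv) for dv in d) for qv in q)


# Toy token embeddings, invented for illustration.
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]  # close match for both query tokens
doc_b = [[1.0, 0.0], [-1.0, 0.0]]             # matches only the first query token

score_a = maxsim_score(query, doc_a)
score_b = maxsim_score(query, doc_b)
print(score_a, score_b)
```

Because every query token contributes its own best match, a document that covers all query tokens (`doc_a`) scores higher than one that matches only some of them (`doc_b`).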

In the Japanese language context, JaColBERTv1 and v2 have demonstrated even greater success than their English counterparts. JaColBERTv1, following the original ColBERT training recipe, became the strongest monolingual Japanese retriever of its time. JaColBERTv2, built on the ColBERTv2 recipe, further improved performance and currently stands as the strongest out-of-domain retriever across all existing Japanese benchmarks, though it still faces some challenges in large-scale retrieval tasks like MIRACL.

The answerai-colbert-small-v1 model has been specifically designed with future compatibility in mind, particularly for the upcoming RAGatouille overhaul. This is intended to keep the model relevant and useful as new tooling emerges. Despite its future-oriented design, the model maintains broad compatibility with existing ColBERT implementations, offering users flexibility in their choice of tools and frameworks.

For those interested in using this model, there are two main options available. Users can opt for the Stanford ColBERT library, which is a well-established and widely used implementation. Alternatively, they can choose RAGatouille, which may offer additional features or optimizations. Installing either or both of these libraries is straightforward, requiring a single command to get started.

The results of the answerai-colbert-small-v1 model demonstrate its exceptional performance when compared to single-vector models.

Answer.AI’s answerai-colbert-small-v1 model represents a significant advancement in multi-vector retrieval methods. Despite its compact 33 million parameters, it outperforms larger models like e5-large-v2 and bge-base-en-v1.5. Built on the ColBERT architecture and enhanced by the JaColBERTv2.5 training recipe, it excels in out-of-domain generalization. The model’s success stems from its multi-vector approach, query augmentation, and MaxSim scoring mechanism. Designed for future compatibility, particularly with the upcoming RAGatouille overhaul, it remains compatible with existing ColBERT implementations. Users can easily run it with either the Stanford ColBERT library or RAGatouille, showcasing Answer.AI’s potential to reshape AI efficiency and performance.

Check out the Model Card and Details. All credit for this research goes to the researchers of this project.
