Node problem detection and recovery for AWS Neuron nodes within Amazon EKS clusters

Implementing hardware resiliency in your training infrastructure is crucial to mitigating risks and enabling uninterrupted model training. By implementing features such as proactive health monitoring and automated recovery mechanisms, organizations can create a fault-tolerant environment capable of handling hardware failures or other issues without compromising the integrity of the training process.

In this post, we introduce the AWS Neuron node problem detector and recovery DaemonSet for AWS Trainium and AWS Inferentia on Amazon Elastic Kubernetes Service (Amazon EKS). This component can quickly detect rare occurrences of issues when Neuron devices fail by tailing monitoring logs. It marks worker nodes with a defective Neuron device as unhealthy and promptly replaces them with new worker nodes. By accelerating issue detection and remediation, it increases the reliability of your ML training and reduces the time and cost wasted due to hardware failure.

This solution is applicable if you're using managed nodes or self-managed node groups (which use Amazon EC2 Auto Scaling groups) on Amazon EKS. At the time of writing this post, automatic recovery of nodes provisioned by Karpenter is not yet supported.

Solution overview

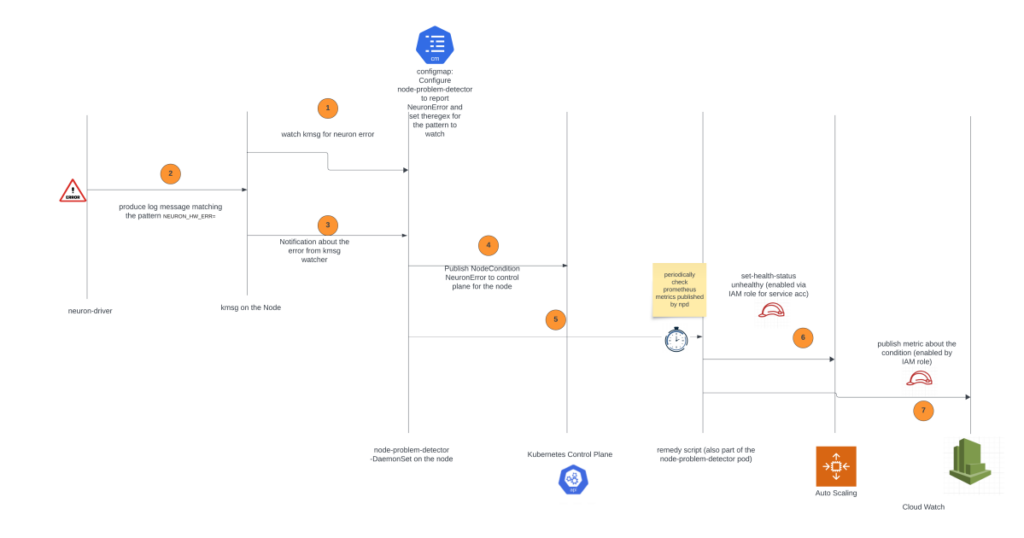

The solution is based on the node problem detector and recovery DaemonSet, a powerful tool designed to automatically detect and report various node-level problems in a Kubernetes cluster.

The node problem detector component continuously monitors the kernel message (kmsg) logs on the worker nodes. If it detects error messages specifically related to the Neuron device (the Trainium or AWS Inferentia chip), it changes the NodeCondition to NeuronHasError on the Kubernetes API server.
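To make the detection step concrete, here is a minimal Python sketch of kmsg matching. It assumes a hypothetical marker of the form NEURON_HW_ERR=<REASON> in the kernel log; the plugin's real match patterns are not shown in this post, so treat the regular expression and the function name neuron_condition as illustrative only. The reason names mirror the CloudWatch metric names listed later in this post.

```python
import re
from typing import Iterable, Optional

# Hypothetical marker: we ASSUME Neuron errors appear in kmsg as "NEURON_HW_ERR=<REASON>".
# The real patterns used by the plugin may differ.
NEURON_ERROR_PATTERN = re.compile(r"NEURON_HW_ERR=(?P<reason>[A-Z_]+)")

# Reasons taken from the CloudWatch metric names mentioned in this post.
KNOWN_REASONS = {
    "DMA_ERROR",
    "HANG_ON_COLLECTIVES",
    "HBM_UNCORRECTABLE_ERROR",
    "SRAM_UNCORRECTABLE_ERROR",
    "NC_UNCORRECTABLE_ERROR",
}


def neuron_condition(kmsg_lines: Iterable[str]) -> Optional[dict]:
    """Return a NodeCondition-like dict for the first known Neuron error, else None."""
    for line in kmsg_lines:
        match = NEURON_ERROR_PATTERN.search(line)
        if match and match.group("reason") in KNOWN_REASONS:
            return {
                "type": "NeuronHasError",
                "status": "True",
                "reason": f"NeuronHasError_{match.group('reason')}",
                "message": line.strip(),
            }
    # No recognized Neuron error: the node condition stays healthy.
    return None


if __name__ == "__main__":
    sample = [
        "6,1234,5678,-;eth0: link up",
        "3,1240,5690,-;test NEURON_HW_ERR=DMA_ERROR test",
    ]
    print(neuron_condition(sample))
```

Unrecognized reasons are ignored on purpose, so an unexpected log line does not mark a healthy node as failed.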

The node recovery agent is a separate component that periodically checks the Prometheus metrics exposed by the node problem detector. When it finds a node condition indicating an issue with the Neuron device, it takes automated actions. First, it marks the affected instance in the relevant Auto Scaling group as unhealthy, which causes the Auto Scaling group to stop the instance and launch a replacement. Additionally, the node recovery agent publishes Amazon CloudWatch metrics so that users can monitor and alert on these events.
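The recovery agent's decision loop can be sketched as follows. This is not the plugin's code: it assumes the detector exports node-problem-detector-style problem_gauge samples with type and reason labels, and that the agent calls the boto3 Auto Scaling set_instance_health and CloudWatch put_metric_data APIs. The function names, label values, and the enable_recovery flag (standing in for the ENABLE_RECOVERY setting covered later) are hypothetical.

```python
import re
from typing import Dict, List

# ASSUMED exposition format, modeled on node-problem-detector's problem_gauge metric.
SAMPLE_LINE = re.compile(r"^(?P<name>\w+)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$")
LABEL = re.compile(r'(\w+)="([^"]*)"')


def active_neuron_problems(metrics_text: str) -> List[str]:
    """Return reasons of problem_gauge samples with type NeuronHasError and value 1."""
    reasons = []
    for line in metrics_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and HELP/TYPE comments
            continue
        m = SAMPLE_LINE.match(line)
        if not m or m.group("name") != "problem_gauge":
            continue
        labels: Dict[str, str] = dict(LABEL.findall(m.group("labels")))
        if labels.get("type") == "NeuronHasError" and float(m.group("value")) == 1.0:
            reasons.append(labels.get("reason", "unknown"))
    return reasons


def recover(instance_id: str, reasons: List[str], autoscaling, cloudwatch,
            enable_recovery: bool) -> None:
    """Publish one datapoint per reason and, if enabled, mark the instance unhealthy.

    `autoscaling` and `cloudwatch` are boto3 clients (or test doubles) from the caller.
    """
    if not reasons:
        return
    cloudwatch.put_metric_data(
        Namespace="NeuronHealthCheck",
        MetricData=[{"MetricName": r, "Value": 1.0, "Unit": "Count"} for r in reasons],
    )
    if enable_recovery:
        # The Auto Scaling group then replaces the instance it now considers unhealthy.
        autoscaling.set_instance_health(
            InstanceId=instance_id,
            HealthStatus="Unhealthy",
            ShouldRespectGracePeriod=False,
        )
```

Passing the clients in keeps the decision logic testable without AWS credentials; in a real agent you would create them with boto3.client("autoscaling") and boto3.client("cloudwatch").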

The following diagram illustrates the solution architecture and workflow.

In the following walkthrough, we create an EKS cluster with Trn1 worker nodes, deploy the Neuron plugin for the node problem detector, and inject an error message into the node. We then observe the failing node being stopped and replaced with a new one, and find a metric in CloudWatch indicating the error.

Prerequisites

Before you start, make sure you have installed the following tools on your machine:

Deploy the node problem detection and recovery plugin

Complete the following steps to configure the node problem detection and recovery plugin:

- Create an EKS cluster using the Data on EKS Terraform module:

- Set up the required AWS Identity and Access Management (IAM) role for the service account and the node problem detector plugin.

- Create an IAM policy for the plugin. Update the Resource key value to match your node group ARN that contains the Trainium and AWS Inferentia nodes, and update the ec2:ResourceTag/aws:autoscaling:groupName key value to match the Auto Scaling group name.

You can get these values from the Amazon EKS console. Choose Clusters in the navigation pane, open the trainium-inferentia cluster, choose Node groups, and locate your node group.
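For orientation, the following Python sketch prints a policy document that uses the two values called out above. The statements are assumptions rather than the plugin's published policy: it assumes the Resource value is the ARN of the Auto Scaling group behind your node group, and that the agent needs autoscaling:SetInstanceHealth on that group, ec2:TerminateInstances on instances tagged with aws:autoscaling:groupName, and cloudwatch:PutMetricData. Compare it against the plugin's own policy before use.

```python
import json


def neuron_recovery_policy(asg_arn: str, asg_name: str) -> dict:
    """Return an ILLUSTRATIVE IAM policy document for the recovery agent (actions assumed)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Assumed: lets the agent mark instances in the group as unhealthy.
                "Effect": "Allow",
                "Action": ["autoscaling:SetInstanceHealth"],
                "Resource": asg_arn,
            },
            {
                # Assumed: only instances tagged with the group's name can be terminated.
                "Effect": "Allow",
                "Action": ["ec2:TerminateInstances"],
                "Resource": "*",
                "Condition": {
                    "StringEquals": {"ec2:ResourceTag/aws:autoscaling:groupName": asg_name}
                },
            },
            {
                # PutMetricData does not support resource-level scoping, hence "*".
                "Effect": "Allow",
                "Action": ["cloudwatch:PutMetricData"],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values; replace them with your own group ARN and name.
    print(json.dumps(neuron_recovery_policy(
        "arn:aws:autoscaling:us-east-2:111122223333:autoScalingGroup:EXAMPLE-ID:"
        "autoScalingGroupName/EXAMPLE-ASG",
        "EXAMPLE-ASG",
    ), indent=2))
```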

This component will be installed as a DaemonSet in your EKS cluster.

The container images in the Kubernetes manifests are stored in public repositories such as registry.k8s.io and public.ecr.aws. For production environments, we recommend that you limit external dependencies that affect these areas, host container images in a private registry, and sync images from the public repositories. For detailed implementation, refer to the blog post: Announcing pull through cache for registry.k8s.io in Amazon Elastic Container Registry.
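As an illustration of the pull through cache approach, this Python sketch rewrites an upstream image reference into the ECR pull through cache form <account>.dkr.ecr.<region>.amazonaws.com/<prefix>/<upstream path>. The prefixes k8s and ecr-public, the account ID, and the image name are hypothetical placeholders; use the repository prefixes you configured in your own cache rules.

```python
from typing import Dict

# Hypothetical mapping from upstream registry to the ECR pull through cache
# repository prefix chosen when the cache rules were created.
CACHE_PREFIXES: Dict[str, str] = {
    "registry.k8s.io": "k8s",
    "public.ecr.aws": "ecr-public",
}


def to_pull_through_cache(image: str, account_id: str, region: str,
                          prefixes: Dict[str, str] = CACHE_PREFIXES) -> str:
    """Rewrite an upstream image reference to its ECR pull through cache equivalent.

    References from registries without a cache rule are returned unchanged.
    """
    registry, sep, path = image.partition("/")
    if not sep or registry not in prefixes:
        return image
    ecr_host = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    # The upstream repository path and tag are kept as-is after the prefix.
    return f"{ecr_host}/{prefixes[registry]}/{path}"


if __name__ == "__main__":
    print(to_pull_through_cache("registry.k8s.io/example/image:v1", "111122223333", "us-east-2"))
```

You would apply the rewritten references to the image fields of the DaemonSet manifests before deploying them.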

By default, the node problem detector will not take any actions on failed nodes. If you want the EC2 instance to be terminated automatically by the agent, update the DaemonSet as follows:

kubectl edit -n neuron-healthcheck-system ds/node-problem-detector

...

env:
- name: ENABLE_RECOVERY
  value: "true"

Test the node problem detector and recovery solution

After the plugin is installed, you can see Neuron conditions show up by running kubectl describe node. We simulate a device error by injecting error logs into the instance:
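One way to perform the injection is shown in this hypothetical Python helper. It assumes the detector reacts to a NEURON_HW_ERR=<REASON> marker, which is an illustration rather than the plugin's documented pattern. Writing to /dev/kmsg requires root on the worker node, so the demo writes to a temporary file instead.

```python
import os
import tempfile


def inject_neuron_error(reason: str = "DMA_ERROR", kmsg_path: str = "/dev/kmsg") -> str:
    """Append a fake Neuron error line to the kernel log (root needed for /dev/kmsg).

    The "NEURON_HW_ERR=<REASON>" format is an ASSUMPTION for illustration.
    """
    message = f"test NEURON_HW_ERR={reason} test"
    # Each write to /dev/kmsg becomes one kernel log record.
    with open(kmsg_path, "a") as kmsg:
        kmsg.write(message + "\n")
    return message


if __name__ == "__main__":
    # Dry run: write to a temporary file rather than the real kernel log.
    with tempfile.TemporaryDirectory() as tmpdir:
        fake_kmsg = os.path.join(tmpdir, "kmsg")
        inject_neuron_error(kmsg_path=fake_kmsg)
        with open(fake_kmsg) as f:
            print(f.read().strip())
```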

Around 2 minutes later, you can see that the error has been identified:

kubectl describe node ip-100-64-58-151.us-east-2.compute.internal | grep 'Conditions:' -A7

Now that the error has been detected by the node problem detector, and the recovery agent has automatically marked the node as unhealthy, Amazon EKS will cordon the node and evict the pods on it:

You can open the CloudWatch console and verify the NeuronHealthCheck metrics. The CloudWatch NeuronHasError_DMA_ERROR metric has the value 1.

After replacement, you can see that a new worker node has been created:

Let's look at a real-world scenario in which you're running a distributed training job, using an MPI operator as outlined in Llama-2 on Trainium, and there is an irrecoverable Neuron error in one of the nodes. Before the plugin is deployed, the training job becomes stuck, resulting in wasted time and computational costs. With the plugin deployed, the node problem detector proactively removes the problem node from the cluster. The training script saves checkpoints periodically, so training resumes from the previous checkpoint.

The following screenshot shows example logs from a distributed training job.

The training has started. (You can ignore loss=nan for now; it's a known issue and will be removed. For immediate use, refer to the reduced_train_loss metric.)

The following screenshot shows the checkpoint created at step 77.

Training stopped after one of the nodes encountered a problem at step 86. The error was injected manually for testing.

After the faulty node was detected and replaced by the Neuron plugin for node problem detection and recovery, the training process resumed at step 77, which was the last checkpoint.
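The resume behavior relies on the training script finding its newest checkpoint after the job restarts. A minimal Python sketch of that lookup follows, assuming a hypothetical layout with one step_<N> directory per checkpoint; the Llama-2 on Trainium scripts may organize checkpoints differently.

```python
import os
import re
from typing import Optional

# Hypothetical layout: one sub-directory per checkpoint, named "step_<N>".
CKPT_DIR_PATTERN = re.compile(r"^step_(\d+)$")


def latest_checkpoint_step(ckpt_root: str) -> Optional[int]:
    """Return the highest saved step under ckpt_root, or None if nothing was saved yet."""
    if not os.path.isdir(ckpt_root):
        return None
    steps = [
        int(m.group(1))
        for name in os.listdir(ckpt_root)
        # Only directories count; stray files with a matching name are ignored.
        if (m := CKPT_DIR_PATTERN.match(name)) and os.path.isdir(os.path.join(ckpt_root, name))
    ]
    return max(steps, default=None)
```

Under this assumed layout, the run above would find step_77, so the restarted job reloads that checkpoint instead of starting over, and the work between steps 77 and 86 is repeated.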

Although Auto Scaling groups will stop unhealthy nodes, they may encounter issues that prevent the launch of replacement nodes. In such cases, training jobs will stall and require manual intervention. However, the stopped node will not incur further charges on the associated EC2 instance.

If you want to take custom actions in addition to stopping instances, you can create CloudWatch alarms watching the metrics NeuronHasError_DMA_ERROR, NeuronHasError_HANG_ON_COLLECTIVES, NeuronHasError_HBM_UNCORRECTABLE_ERROR, NeuronHasError_SRAM_UNCORRECTABLE_ERROR, and NeuronHasError_NC_UNCORRECTABLE_ERROR, and use CloudWatch Metrics Insights queries such as SELECT AVG(NeuronHasError_DMA_ERROR) FROM NeuronHealthCheck to sum up these values and evaluate the alarms. The following screenshots show an example.
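The alarms can also be created programmatically. The following Python sketch builds boto3 put_metric_alarm requests, each backed by a Metrics Insights query against the NeuronHealthCheck namespace. As a simplification of the summing approach described above, it creates one alarm per metric; the alarm names, 60-second period, and threshold are assumptions.

```python
from typing import Dict, List

NEURON_ERROR_METRICS: List[str] = [
    "NeuronHasError_DMA_ERROR",
    "NeuronHasError_HANG_ON_COLLECTIVES",
    "NeuronHasError_HBM_UNCORRECTABLE_ERROR",
    "NeuronHasError_SRAM_UNCORRECTABLE_ERROR",
    "NeuronHasError_NC_UNCORRECTABLE_ERROR",
]


def alarm_request(metric_name: str, period_seconds: int = 60) -> Dict:
    """Build put_metric_alarm keyword arguments for one Metrics Insights query."""
    return {
        "AlarmName": f"neuron-{metric_name}",  # hypothetical naming scheme
        "Metrics": [
            {
                "Id": "q1",
                "Expression": f"SELECT AVG({metric_name}) FROM NeuronHealthCheck",
                "Period": period_seconds,
                "ReturnData": True,
            }
        ],
        # Any node reporting 1 during the period pushes the average above 0.
        "ComparisonOperator": "GreaterThanThreshold",
        "Threshold": 0.0,
        "EvaluationPeriods": 1,
        "TreatMissingData": "notBreaching",
    }


def create_alarms(cloudwatch) -> List[str]:
    """Create one alarm per metric; `cloudwatch` is a boto3 CloudWatch client."""
    names = []
    for metric in NEURON_ERROR_METRICS:
        request = alarm_request(metric)
        cloudwatch.put_metric_alarm(**request)
        names.append(request["AlarmName"])
    return names
```

To attach your custom action, add an AlarmActions list (for example, an Amazon SNS topic ARN) to each request before calling create_alarms(boto3.client("cloudwatch")).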

Clean up

To clean up all the provisioned resources for this post, run the cleanup script:

Conclusion

In this post, we showed how the Neuron problem detector and recovery DaemonSet for Amazon EKS works for EC2 instances powered by Trainium and AWS Inferentia. If you're running Neuron-based EC2 instances and using managed nodes or self-managed node groups, you can deploy the detector and recovery DaemonSet in your EKS cluster and benefit from improved reliability and fault tolerance of your machine learning training workloads in the event of node failure.

About the authors

Harish Rao is a senior solutions architect at AWS, specializing in large-scale distributed AI training and inference. He empowers customers to harness the power of AI to drive innovation and solve complex challenges. Outside of work, Harish embraces an active lifestyle, enjoying the tranquility of hiking, the intensity of racquetball, and the mental clarity of mindfulness practices.


Ziwen Ning is a software development engineer at AWS. He currently focuses on enhancing the AI/ML experience through the integration of AWS Neuron with containerized environments and Kubernetes. In his free time, he enjoys challenging himself with badminton, swimming, and various other sports, and immersing himself in music.


Geeta Gharpure is a senior software developer on the Annapurna ML engineering team. She is focused on running large-scale AI/ML workloads on Kubernetes. She lives in Sunnyvale, CA and enjoys listening to Audible in her free time.


Darren Lin is a Cloud Native Specialist Solutions Architect at AWS who focuses on domains such as Linux, Kubernetes, Containers, Observability, and Open Source Technologies. In his spare time, he likes to work out and have fun with his family.
