Accenture creates a custom memory-persistent conversational user experience using Amazon Q Business

Traditionally, finding relevant information in documents has been a time-consuming and often frustrating process. Manually sifting through pages upon pages of text, searching for specific details, and synthesizing the information into coherent summaries can be a daunting task. This inefficiency not only hinders productivity but also increases the risk of overlooking critical insights buried within the document’s depths.

Imagine a scenario where a call center agent needs to quickly analyze multiple documents to provide summaries for clients. Previously, this process would involve painstakingly navigating through each document, a task that is both time-consuming and prone to human error.

With the advent of chatbots in the conversational artificial intelligence (AI) domain, you can now upload your documents through an intuitive interface and initiate a conversation by asking specific questions related to your inquiries. The chatbot then analyzes the uploaded documents, using advanced natural language processing (NLP) and machine learning (ML) technologies to provide comprehensive summaries tailored to your questions.

However, the true power lies in the chatbot’s ability to preserve context throughout the conversation. As you navigate through the discussion, the chatbot should maintain a memory of previous interactions, allowing you to review past discussions and retrieve specific details as needed. This seamless experience makes sure you can effortlessly explore the depths of your documents without losing track of the conversation’s flow.

Amazon Q Business is a generative AI-powered assistant that can answer questions, provide summaries, generate content, and securely complete tasks based on data and information in your enterprise systems. It empowers employees to be more creative, data-driven, efficient, prepared, and productive.

This post demonstrates how Accenture used Amazon Q Business to implement a chatbot application that offers straightforward attachment and conversation ID management. This solution can speed up your development workflow, and you can use it without crowding your application code.

“Amazon Q Business distinguishes itself by delivering personalized AI assistance through seamless integration with diverse data sources. It offers accurate, context-specific responses, contrasting with foundation models that typically require complex setup for similar levels of personalization. Amazon Q Business real-time, tailored solutions drive enhanced decision-making and operational efficiency in enterprise settings, making it superior for immediate, actionable insights”

– Dominik Juran, Cloud Architect, Accenture

Solution overview

In this use case, an insurance provider uses a Retrieval Augmented Generation (RAG) based large language model (LLM) implementation to upload and compare policy documents efficiently. Policy documents are preprocessed and stored, allowing the system to retrieve relevant sections based on input queries. This enhances the accuracy, transparency, and speed of policy comparison, making sure clients receive the best coverage options.

This solution augments an Amazon Q Business application with persistent memory and context tracking throughout conversations. As users pose follow-up questions, Amazon Q Business can continually refine responses while recalling previous interactions. This preserves conversational flow when navigating in-depth inquiries.

At the core of this use case lies the creation of a custom Python class for Amazon Q Business, which streamlines the development workflow for this solution. This class offers robust document management capabilities, keeping track of attachments already shared within a conversation as well as new uploads to the Streamlit application. Additionally, it maintains an internal state to persist conversation IDs for future interactions, providing a seamless user experience.

The solution involves developing a web application using Streamlit, Python, and AWS services, featuring a chat interface where users can interact with an AI assistant to ask questions or upload PDF documents for analysis. Behind the scenes, the application uses Amazon Q Business for conversation history management, vectorizing the knowledge base, context creation, and NLP. The integration of these technologies allows for seamless communication between the user and the AI assistant, enabling tasks such as document summarization, question answering, and comparison of multiple documents based on the documents attached in real time.

The code uses Amazon Q Business APIs to interact with Amazon Q Business and send and receive messages within a conversation, specifically the qbusiness client from the boto3 library.

In this use case, we used the German language to test our RAG LLM implementation on 10 different documents and 10 different use cases. Policy documents were preprocessed and stored, enabling accurate retrieval of relevant sections based on input queries. This testing demonstrated the system’s accuracy and effectiveness in handling German language policy comparisons.

The next is a code snippet:
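As a minimal sketch of such a call (an illustration, not the authors’ code), the following assembles the arguments for the chat_sync operation of the qbusiness client and returns the answer together with the IDs needed to continue the conversation. The helper names build_chat_request, ask, and make_client, as well as the default region, are assumptions made for this example. Depending on how the application authenticates users, additional parameters may be required.

```python
from typing import Any, Optional


def make_client(region_name: str = "us-east-1") -> Any:
    """Create the qbusiness client (the region is an assumption for this sketch)."""
    import boto3  # imported lazily so the helpers below work without boto3 installed

    return boto3.client("qbusiness", region_name=region_name)


def build_chat_request(
    application_id: str,
    user_message: str,
    conversation_id: Optional[str] = None,
    parent_message_id: Optional[str] = None,
    attachments: Optional[list] = None,
) -> dict:
    """Assemble keyword arguments for the chat_sync operation."""
    request: dict = {"applicationId": application_id, "userMessage": user_message}
    # A follow-up message passes the conversation ID and the ID of the
    # previous system message so the reply lands in the same conversation.
    if conversation_id:
        request["conversationId"] = conversation_id
    if parent_message_id:
        request["parentMessageId"] = parent_message_id
    if attachments:
        # Each attachment is a dict of the form {"name": str, "data": bytes}.
        request["attachments"] = attachments
    return request


def ask(client: Any, **kwargs: Any) -> dict:
    """Send one message and return the answer plus the IDs for the next turn."""
    response = client.chat_sync(**build_chat_request(**kwargs))
    return {
        "answer": response.get("systemMessage", ""),
        "conversation_id": response.get("conversationId"),
        "parent_message_id": response.get("systemMessageId"),
    }
```

A first turn could then be sent with ask(make_client(), application_id="YOUR_APPLICATION_ID", user_message="..."), where the application ID is a placeholder; the returned conversation_id and parent_message_id are passed back in on the next call.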

The architectural flow of this solution is shown in the following diagram.

The workflow consists of the next steps:

- The LLM wrapper application code is containerized using AWS CodePipeline, a fully managed continuous delivery service that automates the build, test, and deploy phases of the software release process.

- The application is deployed to Amazon Elastic Container Service (Amazon ECS), a highly scalable and reliable container orchestration service that provides optimal resource utilization and high availability. Because we were making the calls from a Flask-based ECS task running Streamlit to Amazon Q Business, we used Amazon Cognito user pools rather than AWS IAM Identity Center to authenticate users for simplicity, and we hadn’t experimented with IAM Identity Center on Amazon Q Business at the time. For instructions to set up IAM Identity Center integration with Amazon Q Business, refer to Setting up Amazon Q Business with IAM Identity Center as identity provider.

- Users authenticate through an Amazon Cognito UI, a secure user directory that scales to millions of users and integrates with various identity providers.

- A Streamlit application running on Amazon ECS receives the authenticated user’s request.

- An instance of the custom AmazonQ class is initiated. If an ongoing Amazon Q Business conversation is present, the correct conversation ID is persisted, providing continuity. If no existing conversation is found, a new conversation is initiated.

- Documents attached to the Streamlit state are passed to the instance of the AmazonQ class, which keeps track of the delta between the documents already attached to the conversation ID and the documents yet to be shared. This approach respects and optimizes the five-attachment limit imposed by Amazon Q Business. To simplify and avoid repetitions in the middleware library code we are maintaining on the Streamlit application, we decided to write a custom wrapper class for the Amazon Q Business calls, which keeps the attachment and conversation history management in itself as class variables (as opposed to state-based management on the Streamlit level).

- Our wrapper Python class encapsulating the Amazon Q Business instance parses and returns the answers based on the conversation ID and the dynamically provided context derived from the user’s question.

- Amazon ECS serves the answer to the authenticated user, providing a secure and scalable delivery of the response.
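The wrapper behavior described in the steps above can be sketched as follows. This is a hypothetical reconstruction under stated assumptions, not the authors’ class: the method names, the choice to track attachments by file name, and the reading of the five-attachment limit as a per-request cap are all assumptions made for illustration. The client argument is expected to behave like the boto3 qbusiness client.

```python
from typing import Any, Dict, Optional

MAX_ATTACHMENTS = 5  # the five-attachment limit, assumed here to apply per request


class AmazonQ:
    """Hypothetical wrapper that remembers the conversation ID and sent attachments."""

    def __init__(self, client: Any, application_id: str) -> None:
        self.client = client  # for example, boto3.client("qbusiness")
        self.application_id = application_id
        self.conversation_id: Optional[str] = None
        self.parent_message_id: Optional[str] = None
        self.sent_attachments: set = set()  # names already shared in this conversation

    def pending_attachments(self, documents: Dict[str, bytes]) -> list:
        """Return the documents not yet shared, capped at MAX_ATTACHMENTS."""
        new_names = [name for name in documents if name not in self.sent_attachments]
        return [{"name": n, "data": documents[n]} for n in new_names[:MAX_ATTACHMENTS]]

    def send_message(self, question: str, documents: Optional[Dict[str, bytes]] = None) -> str:
        """Send a question, attaching only new documents, and return the answer text."""
        request: dict = {"applicationId": self.application_id, "userMessage": question}
        # Reuse the ongoing conversation if one exists; otherwise a new one starts.
        if self.conversation_id:
            request["conversationId"] = self.conversation_id
        if self.parent_message_id:
            request["parentMessageId"] = self.parent_message_id
        attachments = self.pending_attachments(documents or {})
        if attachments:
            request["attachments"] = attachments

        response = self.client.chat_sync(**request)

        # Persist the IDs and the attachment names for the next turn.
        self.conversation_id = response.get("conversationId", self.conversation_id)
        self.parent_message_id = response.get("systemMessageId", self.parent_message_id)
        self.sent_attachments.update(a["name"] for a in attachments)
        return response.get("systemMessage", "")
```

Keeping this state on the instance means the calling code only hands over the question and the full set of uploaded documents on every turn; the instance decides what still needs to be sent.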

Prerequisites

This solution has the following prerequisites:

- You must have an AWS account where you will be able to create access keys and configure services like Amazon Simple Storage Service (Amazon S3) and Amazon Q Business

- Python must be installed on the environment, as well as all the necessary libraries such as boto3

- It’s assumed that you have the Streamlit library installed for Python, along with all the necessary settings

Deploy the solution

The deployment process entails provisioning the required AWS infrastructure, configuring environment variables, and deploying the application code. This is accomplished by using AWS services such as CodePipeline and Amazon ECS for container orchestration and Amazon Q Business for NLP.

Additionally, Amazon Cognito is integrated with Amazon ECS using the AWS Cloud Development Kit (AWS CDK) and user pools are used for user authentication and management. After deployment, you can access the application through a web browser. Amazon Q Business is called from the ECS task. It’s crucial to establish proper access permissions and security measures to safeguard user data and uphold the application’s integrity.

We use AWS CDK to deploy a web application using Amazon ECS with AWS Fargate, Amazon Cognito for user authentication, and AWS Certificate Manager for SSL/TLS certificates.

To deploy the infrastructure, run the following commands:

- `npm install` to install dependencies

- `npm run build` to build the TypeScript code

- `npx cdk synth` to synthesize the AWS CloudFormation template

- `npx cdk deploy` to deploy the infrastructure

The following screenshot shows our deployed CloudFormation stack.

UI demonstration

The following screenshot shows the home page when a user opens the application in a web browser.

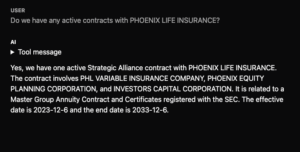

The following screenshot shows an example response from Amazon Q Business when no file was uploaded and no relevant answer to the question was found.

The following screenshot illustrates the entire application flow, where the user asked a question before a file was uploaded, then uploaded a file, and asked the same question again. The response from Amazon Q Business after uploading the file is different from the first query (for testing purposes, we used a very simple file with randomly generated text in PDF format).

Solution benefits

This solution offers the following benefits:

- Efficiency – Automation enhances productivity by streamlining document analysis, saving time, and optimizing resources

- Accuracy – Advanced techniques provide precise data extraction and interpretation, reducing errors and improving reliability

- User-friendly experience – The intuitive interface and conversational design make it accessible to all users, encouraging adoption and straightforward integration into workflows

This containerized architecture allows the solution to scale seamlessly while optimizing request throughput. Persisting the conversation state enhances precision by continuously expanding dialog context. Overall, this solution can help you balance performance with the fidelity of a persistent, context-aware AI assistant through Amazon Q Business.

Clean up

After deployment, you should implement a thorough cleanup plan to maintain efficient resource management and mitigate unnecessary costs, particularly concerning the AWS services used in the deployment process. This plan should include the following key steps:

- Delete AWS resources – Identify and delete any unused AWS resources, such as EC2 instances, ECS clusters, and other infrastructure provisioned for the application deployment. This can be achieved through the AWS Management Console or AWS Command Line Interface (AWS CLI).

- Delete CodeCommit repositories – Remove any CodeCommit repositories created for storing the application’s source code. This helps declutter the repository list and prevents additional charges for unused repositories.

- Review and adjust CodePipeline configuration – Review the configuration of CodePipeline and make sure there are no active pipelines associated with the deployed application. If pipelines are no longer required, consider deleting them to prevent unnecessary runs and associated costs.

- Evaluate Amazon Cognito user pools – Evaluate the user pools configured in Amazon Cognito and remove any unnecessary pools or configurations. Adjust the settings to optimize costs and adhere to the application’s user management requirements.

By diligently implementing these cleanup procedures, you can effectively minimize expenses, optimize resource utilization, and maintain a tidy environment for future development iterations or deployments. Additionally, regular review and adjustment of AWS services and configurations is recommended to provide ongoing cost-effectiveness and operational efficiency.

If the solution runs in AWS Amplify or is provisioned by the AWS CDK, you don’t need to remove everything described in this section individually; deleting the Amplify application or AWS CDK stack is enough to get rid of all of the resources associated with the application.
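For the AWS CDK case, running npx cdk destroy from the project directory removes the stack. As a minimal sketch of the same step from Python, assuming the stack name is known, the deletion can also be requested through the boto3 CloudFormation client. The function name delete_stack and its parameters are hypothetical, and any stack name passed to it is a placeholder.

```python
from typing import Any


def delete_stack(cloudformation: Any, stack_name: str, wait: bool = True) -> None:
    """Request deletion of a CloudFormation stack and optionally wait for it to finish."""
    cloudformation.delete_stack(StackName=stack_name)
    if wait:
        # Blocks until CloudFormation reports that the stack has been deleted.
        waiter = cloudformation.get_waiter("stack_delete_complete")
        waiter.wait(StackName=stack_name)
```

With boto3 installed and credentials configured, the client would be created with boto3.client("cloudformation") and passed in as the first argument.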

Conclusion

In this post, we showcased how Accenture created a custom memory-persistent conversational assistant using AWS generative AI services. The solution can cater to clients developing end-to-end conversational persistent chatbot applications at a large scale following the provided architectural practices and guidelines.

The joint effort between Accenture and AWS builds on the 15-year strategic relationship between the companies and uses the same proven mechanisms and accelerators built by the Accenture AWS Business Group (AABG). Connect with the AABG team at accentureaws@amazon.com to drive business outcomes by transforming to an intelligent data enterprise on AWS.

For further information about generative AI on AWS using Amazon Bedrock or Amazon Q Business, we recommend the following resources:

You can also sign up for the AWS generative AI newsletter, which includes educational resources, blog posts, and service updates.

About the Authors

Dominik Juran works as a full stack developer at Accenture with a focus on AWS technologies and AI. He also has a passion for ice hockey.

Milica Bozic works as a Cloud Engineer at Accenture, specializing in AWS Cloud solutions for the specific needs of clients, with a background in telecommunications, particularly 4G and 5G technologies. Mili is passionate about art, books, and movement training, finding inspiration in creative expression and physical activity.

Zdenko Estok works as a cloud architect and DevOps engineer at Accenture. He works with AABG to develop and implement innovative cloud solutions, and specializes in infrastructure as code and cloud security. Zdenko likes to bike to the office and enjoys pleasant walks in nature.

Selimcan “Can” Sakar is a cloud first developer and solution architect at Accenture with a focus on artificial intelligence and a passion for watching models converge.

Shikhar Kwatra is a Sr. AI/ML Specialist Solutions Architect at Amazon Web Services, working with leading Global System Integrators. He has earned the title of one of the Youngest Indian Master Inventors with over 500 patents in the AI/ML and IoT domains. Shikhar aids in architecting, building, and maintaining cost-efficient, scalable cloud environments for the organization, and supports the GSI partner in building strategic industry solutions on AWS. Shikhar enjoys playing guitar, composing music, and practicing mindfulness in his spare time.
