Duplicate Detection with GenAI. How using LLMs and GenAI techniques can… | by Ian Ormesher | Jul, 2024

Customer data is commonly stored as records in Customer Relationship Management systems (CRMs). Data that is manually entered into such systems by one or more users over time leads to data replication, partial duplication or fuzzy duplication. This in turn means that there is no longer a single source of truth for customers, contacts, accounts, etc. Downstream business processes become increasingly complex and contrived without a unique mapping between a record in a CRM and the target customer. Current methods to detect and de-duplicate records use traditional Natural Language Processing techniques known as Entity Matching. But it is possible to use the latest advancements in Large Language Models and Generative AI to vastly improve the identification and repair of duplicated records. On common benchmark datasets I found an improvement in the accuracy of data de-duplication rates from 30 percent using NLP techniques to almost 60 percent using my proposed method.

I want to explain the technique here in the hope that others will find it helpful and use it for their own de-duplication needs. It’s useful for other scenarios where you need to identify duplicate records, not just for customer data. I also wrote and published a research paper about this, which you can view on arXiv if you want to know more in depth:

The task of identifying duplicate records is often done by pairwise record comparisons and is referred to as “Entity Matching” (EM). Typical steps of this process would be:

- Data Preparation

- Candidate Generation

- Blocking

- Matching

- Clustering

Data Preparation

Data preparation is the cleaning of the data and includes such things as removing non-ASCII characters, normalising capitalisation and tokenising the text. This is an important and necessary step for the NLP matching algorithms later in the process, which don’t work well with different cases or non-ASCII characters.
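A minimal sketch of what such a cleaning step might look like, using only the Python standard library. The function name `prepare`, the choice of lower-casing as the case convention, and the tokenising rule are assumptions made for illustration, not details taken from a specific EM toolkit.

```python
import re
import unicodedata


def prepare(text: str) -> list[str]:
    """Normalise a raw field value and split it into tokens."""
    # Decompose accented characters, then drop anything outside ASCII.
    decomposed = unicodedata.normalize("NFKD", text)
    ascii_only = decomposed.encode("ascii", "ignore").decode("ascii")
    # Use one consistent case so "LONDON" and "London" compare equal
    # (lower-casing is an assumption; upper-casing works equally well).
    lowered = ascii_only.lower()
    # Tokenise on runs of letters and digits, discarding punctuation.
    return re.findall(r"[a-z0-9]+", lowered)
```

For instance, `prepare("José SMITH, 20 Main St.")` yields the tokens `jose`, `smith`, `20`, `main` and `st`.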

Candidate Generation

In the usual EM method, we would produce candidate records by combining all the records in the table with themselves to produce a cartesian product. You would remove all combinations that pair a row with itself. For many of the NLP matching algorithms, comparing row A with row B is equivalent to comparing row B with row A. In those cases you can get away with keeping just one of those pairs. But even after this, you’re still left with a lot of candidate records. In order to reduce this number a technique called “blocking” is often used.
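To make the scale concrete: dropping self-pairs and mirrored pairs from the cartesian product of n records leaves n(n-1)/2 unordered pairs. The sketch below shows this with `itertools.combinations`; the function name `candidate_pairs` is hypothetical.

```python
from itertools import combinations


def candidate_pairs(record_ids):
    """Return every unordered pair of distinct records.

    combinations() never pairs an item with itself and emits (A, B)
    but not (B, A), which covers both reductions described above.
    """
    return list(combinations(record_ids, 2))
```

Even with both reductions, the number of pairs grows quadratically: 100 records give 4,950 pairs, and 200,000 records give roughly 20 billion.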

Blocking

The idea of blocking is to eliminate those records that we know could not be duplicates of each other because they have different values for the “blocked” column. For example, if we were considering customer records, a potential column to block on could be something like “City”. This is because we know that even if all the other details of the record are similar enough, they cannot be the same customer if they’re located in different cities. Once we have generated our candidate records, we then use blocking to eliminate those pairs that have different values for the blocked column.
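A small sketch of blocking, assuming records are dictionaries and the blocked column is a key called `city` (both are illustrative choices). Rather than generating every pair and filtering afterwards, this version groups records by block first and only pairs records within a group, which gives the same surviving pairs with less work.

```python
from collections import defaultdict
from itertools import combinations


def blocked_pairs(records, block_key="city"):
    """Return index pairs of records that share the same blocked value."""
    blocks = defaultdict(list)
    for idx, record in enumerate(records):
        blocks[record[block_key]].append(idx)

    pairs = []
    for indices in blocks.values():
        # Only records inside the same block can be duplicates.
        pairs.extend(combinations(indices, 2))
    return pairs
```

With three London records and one Paris record, only the three London-to-London pairs survive; the Paris record is never compared with anything.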

Matching

Following on from blocking we now examine all the candidate records and calculate traditional NLP similarity-based attribute value metrics with the fields from the two rows. Using these metrics, we can determine if we have a potential match or un-match.
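As an illustration of an attribute-level similarity metric, the sketch below uses `difflib.SequenceMatcher` from the Python standard library and averages the per-field scores. The choice of metric, the averaging, and the 0.85 threshold are all assumptions for the example; real EM pipelines use a variety of metrics (edit distance, Jaccard, Jaro-Winkler and so on) and often a trained classifier on top.

```python
from difflib import SequenceMatcher


def attribute_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two field values, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def is_match(rec_a, rec_b, fields, threshold=0.85):
    """Declare a match when the mean field similarity reaches the threshold.

    The threshold is a hypothetical value and would need tuning per dataset.
    """
    scores = [attribute_similarity(rec_a[f], rec_b[f]) for f in fields]
    return sum(scores) / len(scores) >= threshold
```

Note that the comparison is symmetric in practice for short strings like these, which is why only one pair of each (A, B) and (B, A) needs to be kept.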

Clustering

Now that we have a list of candidate records that match, we can then group them into clusters.
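One common way to turn matched pairs into clusters is to treat each match as an edge and take connected components, so that if A matches B and B matches C, all three land in one cluster. The sketch below does this with a small union-find structure; it is an assumed approach for illustration, and the function name `cluster_matches` is hypothetical.

```python
def cluster_matches(n_records, matched_pairs):
    """Group record indices into clusters via connected components."""
    parent = list(range(n_records))

    def find(i):
        # Follow parent links to the root, shortening the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b in matched_pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two clusters

    clusters = {}
    for i in range(n_records):
        clusters.setdefault(find(i), []).append(i)
    return sorted(clusters.values())
```

Records that matched nothing come back as single-element clusters, so every record appears exactly once in the result.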

There are several steps to the proposed method, but the most important thing to note is that we no longer need to perform the “Data Preparation” or “Candidate Generation” steps of the traditional methods. The new steps become:

- Create Match Sentences

- Create Embedding Vectors of those Match Sentences

- Clustering

Create Match Sentences

First a “Match Sentence” is created by concatenating the attributes we are interested in and separating them with spaces. For example, let’s say we have a customer record which looks like this:

We would create a “Match Sentence” by concatenating with spaces the name1, name2, name3, address and city attributes, which would give us the following:

“John Hartley Smith 20 Major Road London”
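A minimal sketch of building a Match Sentence, assuming each record is a dictionary keyed by attribute name. The function name `match_sentence`, the way the example values are split across fields, and the decision to skip empty attributes are assumptions for illustration.

```python
def match_sentence(record, fields):
    """Join the chosen attribute values with single spaces."""
    parts = []
    for field in fields:
        # Missing or empty attributes are skipped (an assumption) so they
        # do not leave double spaces in the sentence.
        value = str(record.get(field) or "").strip()
        if value:
            parts.append(value)
    return " ".join(parts)


# Hypothetical record reproducing the example sentence above.
record = {
    "name1": "John",
    "name2": "Hartley",
    "name3": "Smith",
    "address": "20 Major Road",
    "city": "London",
}
sentence = match_sentence(record, ["name1", "name2", "name3", "address", "city"])
```

Note that no case-folding, ASCII filtering or tokenising is applied here; the sentence is passed to the embedding model as-is.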

Create Embedding Vectors

Once our “Match Sentence” has been created it is then encoded into vector space using our chosen embedding model. This is achieved by using “Sentence Transformers”. The output of this encoding will be a floating-point vector of pre-defined dimensions. These dimensions relate to the embedding model that is used. I used the all-mpnet-base-v2 embedding model, which has a vector space of 768 dimensions. This embedding vector is then appended to the record. This is done for all the records.
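A rough sketch of this step, assuming the `sentence-transformers` Python package is installed. `SentenceTransformer(...)` and `.encode(...)` are that library's calls; the helper names `embed_match_sentences` and `attach_embeddings`, and the `embedding` key, are hypothetical. The import is deferred into the function so that the second helper can be used on its own.

```python
def embed_match_sentences(sentences, model_name="all-mpnet-base-v2"):
    """Encode a list of Match Sentences into embedding vectors.

    Requires the sentence-transformers package; the model is downloaded
    on first use.
    """
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name)
    # Returns one vector per input sentence.
    return model.encode(sentences)


def attach_embeddings(records, vectors):
    """Return copies of the records with their vector stored alongside."""
    if len(records) != len(vectors):
        raise ValueError("need exactly one vector per record")
    return [
        {**record, "embedding": list(vector)}
        for record, vector in zip(records, vectors)
    ]
```

Calling `embed_match_sentences(["John Hartley Smith 20 Major Road London"])` with the default model should give a single vector of 768 floating-point values, matching the dimensionality mentioned above.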

Clustering

Once embedding vectors have been calculated for all the records, the next step is to create clusters of similar records. To do this I use the DBSCAN technique. DBSCAN works by first selecting a random record and finding records that are close to it using a distance metric. There are 2 different kinds of distance metrics that I’ve found to work:

- L2 Norm distance

- Cosine Similarity

For each of those metrics you choose an epsilon value as a threshold value. All records that are within the epsilon distance and have the same value for the “blocked” column are then added to this cluster. Once that cluster is complete another random record is selected from the unvisited records and a cluster is then created around it. This continues until all the records have been visited.
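The procedure above can be sketched in plain Python as a simplified DBSCAN whose neighbourhood test also requires the blocked value to match. This is an illustrative reconstruction, not the code from the notebooks: the function names, the `min_samples=2` default, visiting records in index order rather than at random, and expressing cosine similarity as a distance (1 minus similarity) so that "within epsilon" means the same thing for both metrics are all assumptions.

```python
import math


def l2_distance(u, v):
    """Euclidean (L2 norm) distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_distance(u, v):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def blocked_dbscan(vectors, blocks, eps, distance=cosine_distance, min_samples=2):
    """Return a cluster label per record; -1 means no near-duplicate found."""
    n = len(vectors)
    labels = [None] * n  # None = not yet visited

    def neighbours(i):
        # Within eps AND sharing the blocked value (includes i itself).
        return [
            j for j in range(n)
            if blocks[j] == blocks[i] and distance(vectors[i], vectors[j]) <= eps
        ]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # nothing close enough: treat as unique for now
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # previously isolated, now reachable
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbours(j)
            if len(more) >= min_samples:
                queue.extend(more)  # keep growing the cluster
    return labels
```

This brute-force version compares every record with every other record, so it is only suitable for small examples; for real datasets a library implementation such as scikit-learn's `DBSCAN` would be the usual starting point.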

I used this approach to identify duplicate records with customer data in my work. It produced some very nice matches. In order to be more objective, I also ran some experiments using a benchmark dataset called “Musicbrainz 200K”. It produced some quantifiable results that were an improvement over standard NLP techniques.

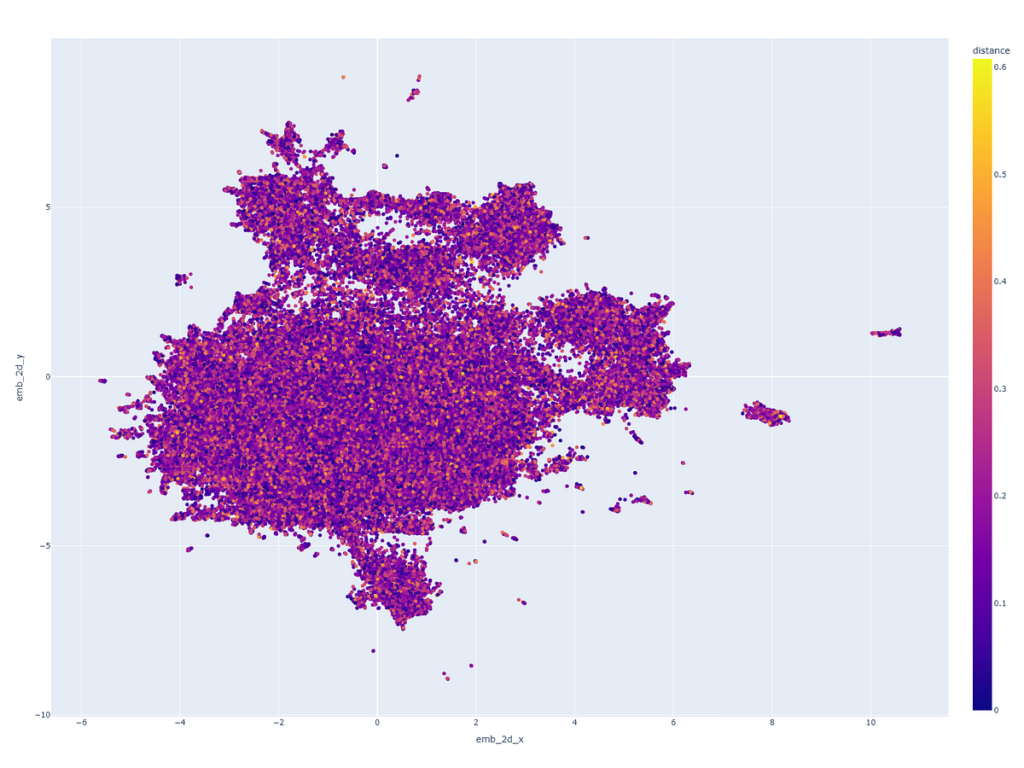

Visualising Clustering

I produced a nearest neighbour cluster map for the Musicbrainz 200K dataset which I then rendered in 2D using the UMAP reduction algorithm:

Resources

I’ve created a number of notebooks that will help with trying the method out for yourselves: