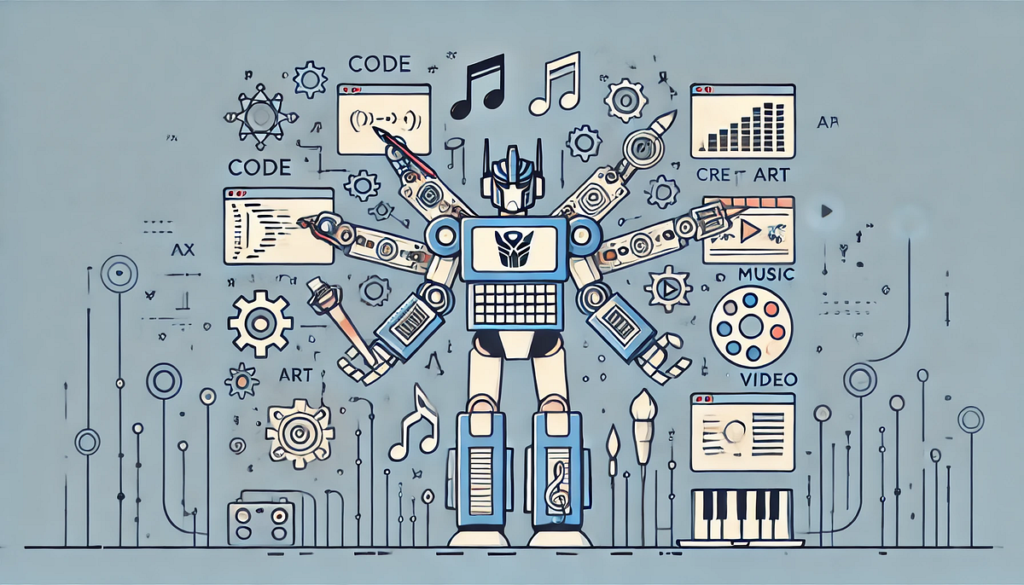

Understanding Transformers. A simple breakdown of… | by Aveek Goswami | Jun, 2024

The transformer came out in 2017. There have been many, many articles explaining how it works, but I often find them either going too deep into the math or too shallow on the details. I end up spending as much time googling (or ChatGPT-ing) as I do reading, which isn’t the best approach to understanding a topic. That brought me to writing this article, where I try to explain the most revolutionary aspects of the transformer while keeping it succinct and simple for anyone to read.

This article assumes a general understanding of machine learning principles.

The ideas behind the Transformer led us to the era of Generative AI

Transformers represented a new architecture of sequence transduction models. A sequence model is a type of model that transforms an input sequence to an output sequence. This input sequence can be of various data types, such as characters, words, tokens, bytes, numbers, phonemes (speech recognition), and may also be multimodal¹.

Before transformers, sequence models were largely based on recurrent neural networks (RNNs), long short-term memory (LSTM), gated recurrent units (GRUs) and convolutional neural networks (CNNs). They often contained some form of an attention mechanism to account for the context provided by items in various positions of a sequence.

- RNNs: The model tackles the data sequentially, so anything learned from the previous computation is accounted for in the next computation². However, its sequential nature causes a few problems: the model struggles to account for long-term dependencies in longer sequences (a consequence of vanishing or exploding gradients), and it prevents parallel processing of the input sequence, because each step depends on the result of the previous step, so you cannot process different chunks of the input at the same time without losing the context of the earlier chunks. This makes it more computationally expensive to train.

- LSTM and GRUs: Made use of gating mechanisms to preserve long-term dependencies³. The model has a cell state which contains the relevant information from the whole sequence. The cell state changes through gates such as the forget, input and output gates (LSTM), and the update and reset gates (GRU). These gates decide, at each sequential iteration, how much information from the previous state should be kept, how much information from the new update should be added, and then which part of the new cell state should be kept overall. While this improves the vanishing gradient issue, the models still work sequentially and hence train slowly due to limited parallelisation, especially when sequences get longer.

- CNNs: Process data in a more parallel fashion, but still technically operate sequentially. They are effective in capturing local patterns but struggle with long-term dependencies due to the way in which convolution works. The number of operations needed to capture relationships between two input positions increases with the distance between the positions.
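To make the sequential bottleneck of recurrent models concrete, here is a minimal sketch of a toy scalar RNN in plain Python. The function name `rnn_forward` and the weights `w_x` and `w_h` are made up for illustration and do not come from any library. The only point is that the hidden state at each step is computed from the hidden state of the step before it.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, h0=0.0):
    """Run a toy scalar RNN over a sequence and return every hidden state."""
    hidden = h0
    states = []
    for x in inputs:
        # Each hidden state depends on the previous one, so this loop
        # cannot be split across time steps and run in parallel.
        hidden = math.tanh(w_x * x + w_h * hidden)
        states.append(hidden)
    return states

# Only the first input is non-zero; later states carry a fading trace of it.
states = rnn_forward([1.0, 0.0, 0.0, 0.0])
print(states)
```

With these particular toy weights, the trace of the first input shrinks at every step, which gives a small-scale feel for why long-range information is hard to keep in a plain recurrent model.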

Hence, introducing the Transformer, which relies entirely on the attention mechanism and does away with recurrence and convolutions. Attention is what the model uses to focus on different parts of the input sequence at each step of generating an output. The Transformer was the first model to use attention without sequential processing, allowing for parallelisation and hence faster training without losing long-term dependencies. It also performs a constant number of operations between input positions, regardless of how far apart they are.

The important features of the transformer are: tokenisation, the embedding layer, the attention mechanism, the encoder and the decoder. Let’s consider an input sequence in French, “Je suis etudiant”, and a target output sequence in English, “I am a student” (I am blatantly copying from this link, which explains the process very descriptively)

Tokenisation

The input sequence of words is converted into tokens, each around 3–4 characters long.

Embeddings

The input and output sequences are mapped to sequences of continuous representations, z, which represent the input and output embeddings. Each token is represented by an embedding that captures some kind of meaning, which helps in computing its relationship to other tokens; this embedding is represented as a vector. To create these embeddings, we use the vocabulary of the training dataset, which contains every unique token that is being used to train the model. We then determine an appropriate embedding dimension, which corresponds to the size of the vector representation for each token; higher embedding dimensions can better capture more complex, diverse and intricate meanings and relationships. The embedding matrix, for vocabulary size V and embedding dimension D, hence has dimensions V x D: one D-dimensional vector for each token in the vocabulary.

At initialisation, these embeddings can be set randomly, and more accurate embeddings are learned during the training process. The embedding matrix is then updated during training.

Positional encodings are added to these embeddings because the transformer does not have a built-in sense of the order of tokens.
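The embedding lookup and the positional encodings can be sketched in a few lines of plain Python. Several things here are assumptions made for illustration: the toy vocabulary treats whole words as tokens, the helper names (`build_embedding_matrix`, `positional_encoding`, `embed`) are hypothetical, and the sinusoidal scheme is the one I understand the original paper to use rather than something spelled out in this article.

```python
import math
import random

def build_embedding_matrix(vocab_size, dim, seed=0):
    """Random V x D matrix; in a real model these values are learned."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]

def positional_encoding(position, dim):
    """Sinusoidal encoding for one position (assumed scheme, see above)."""
    encoding = []
    for i in range(dim):
        angle = position / (10000 ** (2 * (i // 2) / dim))
        # Even indices use sine, odd indices use cosine.
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def embed(token_ids, embedding_matrix):
    """Look up each token's row and add the encoding of its position."""
    dim = len(embedding_matrix[0])
    output = []
    for position, token_id in enumerate(token_ids):
        row = embedding_matrix[token_id]
        pos = positional_encoding(position, dim)
        output.append([e + p for e, p in zip(row, pos)])
    return output

vocab = {"je": 0, "suis": 1, "etudiant": 2}   # toy vocabulary, V = 3
matrix = build_embedding_matrix(vocab_size=len(vocab), dim=4)
token_ids = [vocab[word] for word in "je suis etudiant".split()]
vectors = embed(token_ids, matrix)
print(len(vectors), len(vectors[0]))   # one D-dimensional vector per token
```

The same token would get a different final vector at a different position, which is how the order of the sequence reaches the rest of the model.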

Attention mechanism

Self-attention is the mechanism where each token in a sequence computes attention scores with every other token in the sequence to understand relationships between all tokens, regardless of their distance from each other. I’m going to avoid too much math in this article, but you can read up here about the different matrices formed to compute attention scores and hence capture relationships between each token and every other token.

These attention scores result in a new set of representations⁴ for each token, which is then used in the next layer of processing. During training, the weight matrices are updated through back-propagation, so the model can better account for relationships between tokens.
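For readers who do want a peek at the mechanics, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. The query, key and value weight matrices are set to the identity purely as placeholders (in a real model they are learned), and the helper names are my own.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a sequence of token vectors."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    # One row of weights per token: how much it attends to every token.
    weights = [
        softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
        for qi in q
    ]
    # New representation of each token = weighted sum of the value vectors.
    return weights, matmul(weights, v)

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 3 tokens, dimension 2
identity = [[1.0, 0.0], [0.0, 1.0]]        # placeholder weight matrices
weights, new_x = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Every row of `weights` is computed independently of the others, which is why all tokens can be processed at once instead of one after another.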

Multi-head attention is just an extension of self-attention. Several different sets of attention scores are computed, the results are concatenated and transformed, and the resulting representation enhances the model’s ability to capture various complex relationships between tokens.

Encoder

Input embeddings (built from the input sequence) with positional encodings are fed into the encoder. The encoder is a stack of 6 layers, with each layer containing 2 sub-layers: multi-head attention and a feed-forward network. There’s also a residual connection, which leads to the output of each sub-layer being LayerNorm(x+Sublayer(x)) as shown. The output of the encoder is a sequence of vectors which are contextualised representations of the inputs after accounting for attention scores. These are then fed to the decoder.
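The expression LayerNorm(x+Sublayer(x)) can be illustrated with a small sketch for a single token vector. This version leaves out the learned scale and shift parameters that layer normalisation usually carries, and `toy_feed_forward` is a made-up stand-in for a real sub-layer.

```python
import math

def layer_norm(vector, eps=1e-5):
    """Normalise a vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(vector) / len(vector)
    variance = sum((v - mean) ** 2 for v in vector) / len(vector)
    return [(v - mean) / math.sqrt(variance + eps) for v in vector]

def residual_block(x, sublayer):
    """Compute LayerNorm(x + Sublayer(x)) for a single token vector."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])

def toy_feed_forward(x):
    """Stand-in for a sub-layer: doubles positive values, zeroes negative ones."""
    return [max(0.0, 2.0 * v) for v in x]

out = residual_block([1.0, -2.0, 3.0, 0.5], toy_feed_forward)
print([round(v, 3) for v in out])
```

Adding `x` back before normalising means the sub-layer only has to learn a change to its input rather than a whole new representation.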

Decoder

Output embeddings (generated from the target output sequence) with positional encodings are fed into the decoder. The decoder also contains 6 layers, and there are two differences from the encoder.

First, the output embeddings go through masked multi-head attention, which means that the embeddings from subsequent positions in the sequence are ignored when computing the attention scores. This is because when we generate the current token (in position i), we should ignore all output tokens at positions after i. Moreover, the output embeddings are offset to the right by one position, so that the predicted token at position i only depends on outputs at positions less than it.

For example, let’s say the input was “je suis étudiant à l’école” and the target output is “i am a student in school”. When predicting the token for student, the encoder takes embeddings for “je suis etudiant” while the decoder conceals the tokens after “a” so that the prediction of student only considers the previous tokens in the sentence, namely “I am a”. This trains the model to predict tokens sequentially. Of course, the tokens “in school” provide added context for the model’s prediction, but we are training the model to capture this context from the input token “etudiant” and the subsequent input tokens “à l’école”.
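Here is a small sketch of the masking and the right shift for the shorter target “I am a student”. The start token `<s>` and the helper names are assumptions for illustration. In a real model the mask is applied to the attention scores (masked positions are given a very large negative score before the softmax) rather than by filtering tokens as done here.

```python
def causal_mask(length):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]

def shift_right(target_tokens, start_token="<s>"):
    """Decoder input: the target sequence offset to the right by one position."""
    return [start_token] + target_tokens[:-1]

target = ["I", "am", "a", "student"]
decoder_input = shift_right(target)
mask = causal_mask(len(target))

for i, wanted in enumerate(target):
    # Keep only the decoder inputs that position i is allowed to see.
    visible = [tok for tok, ok in zip(decoder_input, mask[i]) if ok]
    print(f"predict {wanted!r} from {visible}")
```

The last line printed shows “student” being predicted from the start token plus “I am a”, which matches the example above.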

How is the decoder getting this context? Well, that brings us to the second difference: the second multi-head attention layer in the decoder takes in the contextualised representations of the inputs before its result is passed into the feed-forward network, to ensure that the output representations capture the full context of the input tokens and prior outputs. This gives us a sequence of vectors corresponding to each target token, which are contextualised target representations.

The prediction using the Linear and Softmax layers

Now, we want to use these contextualised target representations to figure out what the next token is. Using the contextualised target representations from the decoder, the linear layer projects each vector into a much larger logits vector which is the same length as our model’s vocabulary, let’s say of length L. The linear layer contains a weight matrix which, when multiplied with the decoder output and added to a bias vector, produces a logits vector of size 1 x L. Each cell is the score of a unique token, and the softmax layer then normalises this vector so that the entire vector sums to 1; each cell now represents the probability of its token. The highest-probability token is chosen, and voila! We have our predicted token.
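As a rough sketch of this last step, the snippet below projects one decoder output vector to logits, applies softmax and picks the most probable token. The vocabulary, weight values and bias are all made up for illustration.

```python
import math

def linear(vector, weights, bias):
    """Project a D-dimensional vector to L logits: vector @ weights + bias."""
    return [
        sum(v * w for v, w in zip(vector, column)) + b
        for column, b in zip(zip(*weights), bias)
    ]

def softmax(logits):
    """Normalise logits into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

vocabulary = ["I", "am", "a", "student"]   # toy vocabulary, L = 4
decoder_output = [0.2, -0.1, 0.4]          # one contextualised vector, D = 3
weights = [[0.1, 0.3, -0.2, 0.5],          # D x L weight matrix (made up)
           [0.0, -0.4, 0.2, 0.1],
           [0.3, 0.1, 0.0, 0.9]]
bias = [0.0, 0.0, 0.0, 0.0]

logits = linear(decoder_output, weights, bias)
probabilities = softmax(logits)
predicted = vocabulary[probabilities.index(max(probabilities))]
print(predicted)
```

Picking the single most probable token is the simplest choice; it is what the paragraph above describes.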

Training the model

Next, we compare the predicted token probabilities to the actual token probabilities (which will just be a vector of 0 for every token except the target token, which has probability 1.0). We calculate an appropriate loss function for each token prediction and average this loss over the entire target sequence. We then back-propagate this loss through all the model’s parameters to calculate the gradients, and use an appropriate optimisation algorithm to update the model parameters. Hence, for the classic transformer architecture, this leads to updates of

1. The embedding matrix

2. The different matrices used to compute attention scores

3. The matrices associated with the feed-forward neural networks

4. The linear matrix used to make the logits vector

Matrices in 2–4 are weight matrices, and there are additional bias terms associated with each output, which are also updated during training.

Note: The linear matrix and embedding matrix are often transposes of each other. This is the case for the Attention is All You Need paper; the technique is called “weight-tying”. The number of parameters to train is thus reduced.

Doing this for every example in the training dataset represents one epoch of training. Training comprises multiple epochs, with the number depending on the size of the datasets, the size of the models, and the model’s task.
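As a rough illustration of the loss step in this section, the sketch below uses cross-entropy, which is my assumption for the “appropriate loss function”. Against a target vector that is 1.0 for the correct token and 0 elsewhere, cross-entropy reduces to the negative log of the probability the model gave to the correct token. The function names and numbers are made up.

```python
import math

def cross_entropy(predicted_probs, target_index):
    """Loss against a one-hot target: -log of the probability of the right token."""
    return -math.log(predicted_probs[target_index])

def sequence_loss(all_predicted_probs, target_indices):
    """Average the per-token losses over the whole target sequence."""
    losses = [cross_entropy(p, t) for p, t in zip(all_predicted_probs, target_indices)]
    return sum(losses) / len(losses)

# Toy predictions over a 3-token vocabulary for a 2-token target sequence.
predictions = [[0.7, 0.2, 0.1],
               [0.1, 0.1, 0.8]]
targets = [0, 2]
loss = sequence_loss(predictions, targets)
print(round(loss, 4))
```

The more probability the model puts on the correct tokens, the closer this loss gets to zero; back-propagation and the optimiser then adjust the parameters to push it in that direction.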

As we mentioned earlier, the problems with RNNs, CNNs, LSTMs and the like include the lack of parallel processing, their sequential architecture, and inadequate capturing of long-term dependencies. The transformer architecture above solves these problems as…

- The attention mechanism allows the entire sequence to be processed in parallel rather than sequentially. With self-attention, each token in the input sequence attends to every other token in the input sequence (of that mini-batch, explained next). This captures all relationships at the same time, rather than in a sequential manner.

- Mini-batching of input within each epoch allows parallel processing, faster training, and easier scalability of the model. In a large text full of examples, mini-batches represent a smaller collection of these examples. The examples in the dataset are shuffled before being put into mini-batches, and reshuffled at the beginning of each epoch. All the examples in a mini-batch are passed into the model at the same time.

- By using positional encodings and batch processing, the order of tokens in a sequence is accounted for. Distances between tokens are also accounted for equally, regardless of how far apart they are, and the mini-batch processing further ensures this.
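The shuffling and mini-batching described in the list above can be sketched as follows. The dataset, batch size and the use of the epoch number as the shuffle seed are arbitrary choices for illustration.

```python
import random

def mini_batches(examples, batch_size, seed):
    """Shuffle the examples, then yield them in groups of batch_size."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]

dataset = [f"example {i}" for i in range(10)]
for epoch in range(2):
    # Reshuffle at the start of every epoch by changing the seed.
    batches = list(mini_batches(dataset, batch_size=4, seed=epoch))
    print(epoch, [len(batch) for batch in batches])
```

Every example still appears exactly once per epoch; only the grouping changes from one epoch to the next.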

As shown in the paper, the results were fantastic.

Welcome to the world of transformers.

The transformer architecture was introduced by the researcher Ashish Vaswani and his co-authors in 2017 while he was working at Google Brain. The Generative Pre-trained Transformer (GPT) was introduced by OpenAI in 2018. The primary difference is that GPTs do not contain an encoder stack in their architecture. The encoder-decoder makeup is useful when we’re directly converting one sequence into another sequence. The GPT was designed to focus on generative capabilities, and it did away with the encoder while keeping the rest of the components similar.

The GPT model is pre-trained on a large corpus of text, unsupervised, to learn relationships between all words and tokens⁵. After fine-tuning for various use cases (such as a general-purpose chatbot), GPT models have proven to be extremely effective in generative tasks.

Example

When you ask it a question, the steps for prediction are largely the same as a regular transformer. If you ask it the question “How does GPT predict responses”, these words are tokenised, embeddings are generated, attention scores are computed, probabilities of the next word are calculated, and a token is chosen to be the next predicted token. For example, the model might generate the response step by step, starting with “GPT predicts responses by…” and continuing based on probabilities until it forms a complete, coherent response. (Guess what, that last sentence was from ChatGPT.)
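The step-by-step generation can be sketched as a simple loop. Everything model-related here is a hypothetical stand-in: `toy_model` is just a fixed lookup table on the last token, whereas a real GPT would compute the probabilities from the whole sequence using the steps listed above. The `<end>` token and the choice of always taking the most probable token are also assumptions.

```python
def generate(prompt_tokens, next_token_probs, max_new_tokens=10, end_token="<end>"):
    """Repeatedly pick the most probable next token and append it."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)
        if best == end_token:
            break
        tokens.append(best)
    return tokens

# Hypothetical stand-in for a trained model: a fixed lookup on the last token.
TOY_TABLE = {
    "responses": {"GPT": 0.9, "<end>": 0.1},
    "GPT": {"predicts": 0.8, "<end>": 0.2},
    "predicts": {"tokens": 0.7, "<end>": 0.3},
    "tokens": {"<end>": 1.0},
}

def toy_model(tokens):
    """Return next-token probabilities given the tokens so far."""
    return TOY_TABLE.get(tokens[-1], {"<end>": 1.0})

result = generate(["How", "does", "GPT", "predict", "responses"], toy_model)
print(result)
```

Each new token is appended to the sequence and fed back in, so every prediction is made with all the earlier tokens, including the model’s own output, as context.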