Meta Llama 3 fashions at the moment are obtainable in Amazon SageMaker JumpStart

Right this moment, we’re excited to announce that Meta Llama 3 basis fashions can be found by means of Amazon SageMaker JumpStart to deploy and run inference. The Llama 3 fashions are a group of pre-trained and fine-tuned generative textual content fashions.

On this publish, we stroll by means of tips on how to uncover and deploy Llama 3 fashions by way of SageMaker JumpStart.

What’s Meta Llama 3

Llama 3 is available in two parameter sizes — 8B and 70B with 8k context size — that may assist a broad vary of use instances with enhancements in reasoning, code era, and instruction following. Llama 3 makes use of a decoder-only transformer structure and new tokenizer that gives improved mannequin efficiency with 128k measurement. As well as, Meta improved post-training procedures that considerably decreased false refusal charges, improved alignment, and elevated variety in mannequin responses. Now you can derive the mixed benefits of Llama 3 efficiency and MLOps controls with Amazon SageMaker options comparable to SageMaker Pipelines, SageMaker Debugger, or container logs. As well as, the mannequin might be deployed in an AWS safe setting below your VPC controls, serving to present knowledge safety.

What’s SageMaker JumpStart

With SageMaker JumpStart, you possibly can select from a broad number of publicly obtainable basis fashions. ML practitioners can deploy basis fashions to devoted SageMaker situations from a community remoted setting and customise fashions utilizing SageMaker for mannequin coaching and deployment. Now you can uncover and deploy Llama 3 fashions with a number of clicks in Amazon SageMaker Studio or programmatically by means of the SageMaker Python SDK, enabling you to derive mannequin efficiency and MLOps controls with SageMaker options comparable to SageMaker Pipelines, SageMaker Debugger, or container logs. The mannequin is deployed in an AWS safe setting and below your VPC controls, serving to present knowledge safety. Llama 3 fashions can be found at this time for deployment and inferencing in Amazon SageMaker Studio in us-east-1 (N. Virginia), us-east-2 (Ohio), us-west-2 (Oregon), eu-west-1 (Eire) and ap-northeast-1 (Tokyo) AWS Areas.

Uncover fashions

You may entry the muse fashions by means of SageMaker JumpStart within the SageMaker Studio UI and the SageMaker Python SDK. On this part, we go over tips on how to uncover the fashions in SageMaker Studio.

SageMaker Studio is an built-in improvement setting (IDE) that gives a single web-based visible interface the place you possibly can entry purpose-built instruments to carry out all ML improvement steps, from making ready knowledge to constructing, coaching, and deploying your ML fashions. For extra particulars on tips on how to get began and arrange SageMaker Studio, seek advice from Amazon SageMaker Studio.

In SageMaker Studio, you possibly can entry SageMaker JumpStart, which comprises pre-trained fashions, notebooks, and prebuilt options, below Prebuilt and automatic options.

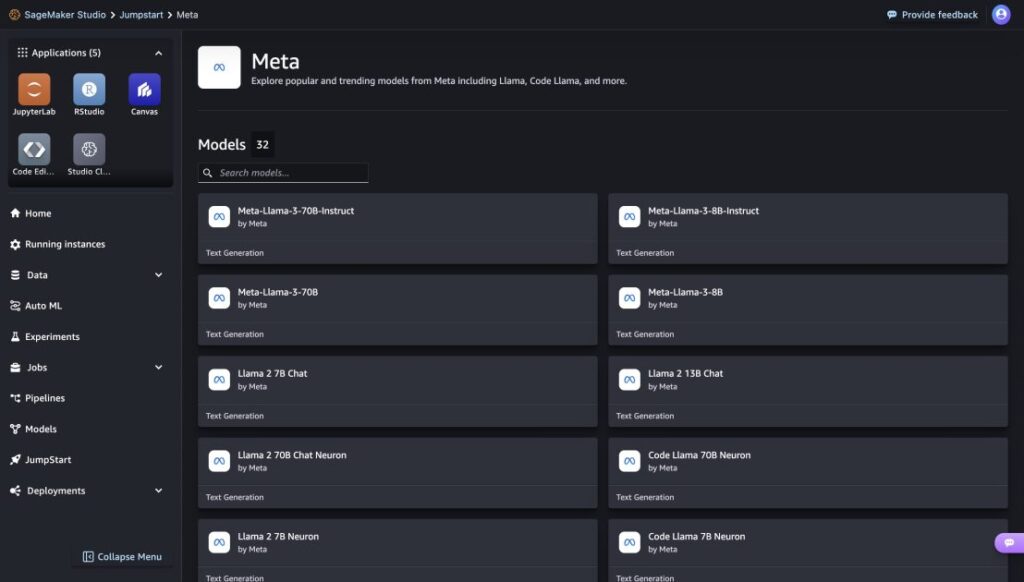

From the SageMaker JumpStart touchdown web page, you possibly can simply uncover numerous fashions by searching by means of completely different hubs that are named after mannequin suppliers. You could find Llama 3 fashions in Meta hub. If you don’t see Llama 3 fashions, please replace your SageMaker Studio model by shutting down and restarting. For extra info, seek advice from Shut down and Update Studio Classic Apps.

You could find Llama 3 fashions by looking for “Meta-llama-3“ from the search field positioned at prime left.

You may uncover all Meta fashions obtainable in SageMaker JumpStart by clicking on Meta hub.

Clicking on a mannequin card opens the corresponding mannequin element web page, from which you’ll be able to simply Deploy the mannequin.

Deploy a mannequin

Once you select Deploy and acknowledge the EULA phrases, deployment will begin.

You may monitor progress of the deployment on the web page that reveals up after clicking the Deploy button.

Alternatively, you possibly can select Open pocket book to deploy by means of the instance pocket book. The instance pocket book gives end-to-end steerage on tips on how to deploy the mannequin for inference and clear up sources.

To deploy utilizing the pocket book, you begin by deciding on an applicable mannequin, specified by the model_id. You may deploy any of the chosen fashions on SageMaker with the next code.

By default accept_eula is about to False. It’s essential to manually settle for the EULA to deploy the endpoint efficiently, By doing so, you settle for the person license settlement and acceptable use coverage. You too can discover the license settlement Llama website. This deploys the mannequin on SageMaker with default configurations together with the default occasion kind and default VPC configurations. You may change these configuration by specifying non-default values in JumpStartModel. To study extra, please seek advice from the next documentation.

The next desk lists all of the Llama 3 fashions obtainable in SageMaker JumpStart together with the model_ids, default occasion varieties and most variety of complete tokens (sum of the variety of enter tokens and variety of generated tokens) supported for every of those fashions.

| Mannequin Identify | Mannequin ID | Max Whole Tokens | Default occasion kind |

| Meta-Llama-3-8B | meta-textgeneration-llama-3-8B | 8192 | ml.g5.12xlarge |

| Meta-Llama-3-8B-Instruct | meta-textgeneration-llama-3-8B-instruct | 8192 | ml.g5.12xlarge |

| Meta-Llama-3-70B | meta-textgeneration-llama-3-70b | 8192 | ml.p4d.24xlarge |

| Meta-Llama-3-70B-Instruct | meta-textgeneration-llama-3-70b-instruct | 8192 | ml.p4d.24xlarge |

Run inference

After you deploy the mannequin, you possibly can run inference in opposition to the deployed endpoint by means of SageMaker predictor. Fantastic-tuned instruct fashions (Llama 3: 8B Instruct and 70B Instruct) settle for a historical past of chats between the person and the chat assistant, and generate the next chat. The pre-trained fashions (Llama 3: 8B and 70B) require a string immediate and carry out textual content completion on the supplied immediate.

Inference parameters management the textual content era course of on the endpoint. The Max new tokens management the dimensions of the output generated by the mannequin. This isn’t identical because the variety of phrases as a result of the vocabulary of the mannequin isn’t the identical because the English language vocabulary, and every token might not be an English language phrase. The temperature parameter controls the randomness within the output. Increased temperature leads to extra inventive and hallucinated outputs. All of the inference parameters are optionally available.

Instance prompts for the 70B mannequin

You should use Llama 3 fashions for textual content completion for any piece of textual content. Via textual content era, you possibly can carry out quite a lot of duties comparable to query answering, language translation, and sentiment evaluation, and extra. The enter payload to the endpoint seems to be like the next code:

The next are some pattern instance prompts and the textual content generated by the mannequin. All outputs are generated with inference parameters {"max_new_tokens":64, "top_p":0.9, "temperature":0.6}.

Within the subsequent instance, we present tips on how to use Llama 3 fashions with few shot in-context studying the place we offer coaching samples obtainable to the mannequin. We solely run inference on the deployed mannequin and through this course of, and mannequin weights don’t change.

Instance prompts for the 70B-Instruct mannequin

With Llama 3 instruct fashions that are optimized for dialogue use instances, the enter to the instruct mannequin endpoints is the earlier historical past between the chat assistant and the person. You may ask questions contextual to the dialog that has occurred to this point. You too can present the system configuration, comparable to personas, which outline the chat assistant’s habits. Whereas the enter payload format is identical as the bottom pre-trained mannequin, the enter textual content needs to be formatted within the following method:

On this instruction template, you possibly can optionally begin with a system function and embody as many alternating roles as desired within the turn-based historical past. The ultimate function ought to all the time be assistant and finish with two new line feeds.

Subsequent, take into account a number of instance prompts and responses from the mannequin. Within the following instance, the person is asking a easy query to the assistant.

Within the following instance, the person has a dialog with the assistant about vacationer websites in Paris. Then the person inquires concerning the first possibility really useful by the chat assistant.

Within the following examples, we set the system’s configuration.

Clear up

After you’re executed working the pocket book, be sure that to delete all of the sources that you simply created within the course of so your billing is stopped. Use the next code:

Conclusion

On this publish, we confirmed you tips on how to get began with Llama 3 fashions in SageMaker Studio. You now have entry to 4 Llama 3 basis fashions that comprise billions of parameters. As a result of basis fashions are pretrained, they will additionally assist decrease coaching and infrastructure prices and allow customization in your use case. Take a look at SageMaker JumpStart in SageMaker Studio now to get began.

About Authors

Kyle Ulrich is an Utilized Scientist II at AWS

Xin Huang is a Senior Utilized Scientist at AWS

Qing Lan is a Senior Software program Developer Engineer at AWS

Haotian An is a Software program Developer Engineer II at AWS

Christopher Whitten is a Software program Improvement Engineer II at AWS

Tyler Osterberg is a Software program Improvement Engineer I at AWS

Manan Shah is a Software program Improvement Supervisor at AWS

Jonathan Guinegagne is a Senior Software program Developer Engineer at AWS

Adriana Simmons is a Senior Product Advertising and marketing Supervisor at AWS

June Gained is a Senior Product Supervisor at AWS

Ashish Khetan is a Senior Utilized Scientist at AWS

Rachna Chadha is a Principal Answer Architect – AI/ML at AWS

Deepak Rupakula is a Principal GTM Specialist at AWS