Speak to your slide deck utilizing multimodal basis fashions hosted on Amazon Bedrock – Half 2

In Part 1 of this sequence, we offered an answer that used the Amazon Titan Multimodal Embeddings mannequin to transform particular person slides from a slide deck into embeddings. We saved the embeddings in a vector database after which used the Large Language-and-Vision Assistant (LLaVA 1.5-7b) mannequin to generate textual content responses to person questions based mostly on essentially the most comparable slide retrieved from the vector database. We used AWS companies together with Amazon Bedrock, Amazon SageMaker, and Amazon OpenSearch Serverless on this resolution.

On this put up, we exhibit a special strategy. We use the Anthropic Claude 3 Sonnet mannequin to generate textual content descriptions for every slide within the slide deck. These descriptions are then transformed into textual content embeddings utilizing the Amazon Titan Text Embeddings mannequin and saved in a vector database. Then we use the Claude 3 Sonnet mannequin to generate solutions to person questions based mostly on essentially the most related textual content description retrieved from the vector database.

You’ll be able to check each approaches on your dataset and consider the outcomes to see which strategy offers you the most effective outcomes. In Half 3 of this sequence, we consider the outcomes of each strategies.

Answer overview

The answer offers an implementation for answering questions utilizing info contained in textual content and visible parts of a slide deck. The design depends on the idea of Retrieval Augmented Era (RAG). Historically, RAG has been related to textual knowledge that may be processed by giant language fashions (LLMs). On this sequence, we lengthen RAG to incorporate photos as nicely. This offers a robust search functionality to extract contextually related content material from visible parts like tables and graphs together with textual content.

This resolution consists of the next parts:

- Amazon Titan Textual content Embeddings is a textual content embeddings mannequin that converts pure language textual content, together with single phrases, phrases, and even giant paperwork, into numerical representations that can be utilized to energy use circumstances resembling search, personalization, and clustering based mostly on semantic similarity.

- Claude 3 Sonnet is the subsequent era of state-of-the-art fashions from Anthropic. Sonnet is a flexible instrument that may deal with a variety of duties, from advanced reasoning and evaluation to speedy outputs, in addition to environment friendly search and retrieval throughout huge quantities of data.

- OpenSearch Serverless is an on-demand serverless configuration for Amazon OpenSearch Service. We use OpenSearch Serverless as a vector database for storing embeddings generated by the Amazon Titan Textual content Embeddings mannequin. An index created within the OpenSearch Serverless assortment serves because the vector retailer for our RAG resolution.

- Amazon OpenSearch Ingestion (OSI) is a totally managed, serverless knowledge collector that delivers knowledge to OpenSearch Service domains and OpenSearch Serverless collections. On this put up, we use an OSI pipeline API to ship knowledge to the OpenSearch Serverless vector retailer.

The answer design consists of two components: ingestion and person interplay. Throughout ingestion, we course of the enter slide deck by changing every slide into a picture, producing descriptions and textual content embeddings for every picture. We then populate the vector knowledge retailer with the embeddings and textual content description for every slide. These steps are accomplished previous to the person interplay steps.

Within the person interplay part, a query from the person is transformed into textual content embeddings. A similarity search is run on the vector database to discover a textual content description similar to a slide that would probably comprise solutions to the person query. We then present the slide description and the person query to the Claude 3 Sonnet mannequin to generate a solution to the question. All of the code for this put up is offered within the GitHub repo.

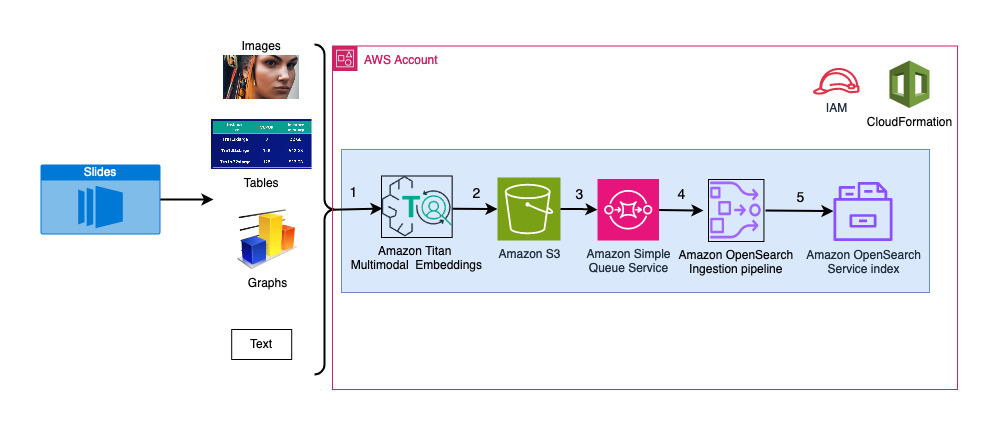

The next diagram illustrates the ingestion structure.

The workflow consists of the next steps:

- Slides are transformed to picture recordsdata (one per slide) in JPG format and handed to the Claude 3 Sonnet mannequin to generate textual content description.

- The information is shipped to the Amazon Titan Textual content Embeddings mannequin to generate embeddings. On this sequence, we use the slide deck Train and deploy Stable Diffusion using AWS Trainium & AWS Inferentia from the AWS Summit in Toronto, June 2023 to exhibit the answer. The pattern deck has 31 slides, due to this fact we generate 31 units of vector embeddings, every with 1536 dimensions. We add further metadata fields to carry out wealthy search queries utilizing OpenSearch’s highly effective search capabilities.

- The embeddings are ingested into an OSI pipeline utilizing an API name.

- The OSI pipeline ingests the info as paperwork into an OpenSearch Serverless index. The index is configured because the sink for this pipeline and is created as a part of the OpenSearch Serverless assortment.

The next diagram illustrates the person interplay structure.

The workflow consists of the next steps:

- A person submits a query associated to the slide deck that has been ingested.

- The person enter is transformed into embeddings utilizing the Amazon Titan Textual content Embeddings mannequin accessed utilizing Amazon Bedrock. An OpenSearch Service vector search is carried out utilizing these embeddings. We carry out a k-nearest neighbor (k-NN) search to retrieve essentially the most related embeddings matching the person question.

- The metadata of the response from OpenSearch Serverless accommodates a path to the picture and outline similar to essentially the most related slide.

- A immediate is created by combining the person query and the picture description. The immediate is offered to Claude 3 Sonnet hosted on Amazon Bedrock.

- The results of this inference is returned to the person.

We talk about the steps for each levels within the following sections, and embody particulars concerning the output.

Stipulations

To implement the answer offered on this put up, you must have an AWS account and familiarity with FMs, Amazon Bedrock, SageMaker, and OpenSearch Service.

This resolution makes use of the Claude 3 Sonnet and Amazon Titan Textual content Embeddings fashions hosted on Amazon Bedrock. Guarantee that these fashions are enabled to be used by navigating to the Mannequin entry web page on the Amazon Bedrock console.

If fashions are enabled, the Entry standing will state Entry granted.

If the fashions will not be obtainable, allow entry by selecting Handle mannequin entry, choosing the fashions, and selecting Request mannequin entry. The fashions are enabled to be used instantly.

Use AWS CloudFormation to create the answer stack

You should use AWS CloudFormation to create the answer stack. When you’ve got created the answer for Half 1 in the identical AWS account, make sure you delete that earlier than creating this stack.

| AWS Area | Hyperlink |

|---|---|

us-east-1 |

|

us-west-2 |

After the stack is created efficiently, navigate to the stack’s Outputs tab on the AWS CloudFormation console and notice the values for MultimodalCollectionEndpoint and OpenSearchPipelineEndpoint. You utilize these within the subsequent steps.

The CloudFormation template creates the next assets:

- IAM roles – The next AWS Identity and Access Management (IAM) roles are created. Replace these roles to use least-privilege permissions, as mentioned in Security best practices.

SMExecutionRolewith Amazon Simple Storage Service (Amazon S3), SageMaker, OpenSearch Service, and Amazon Bedrock full entry.OSPipelineExecutionRolewith entry to the S3 bucket and OSI actions.

- SageMaker pocket book – All code for this put up is run utilizing this pocket book.

- OpenSearch Serverless assortment – That is the vector database for storing and retrieving embeddings.

- OSI pipeline – That is the pipeline for ingesting knowledge into OpenSearch Serverless.

- S3 bucket – All knowledge for this put up is saved on this bucket.

The CloudFormation template units up the pipeline configuration required to configure the OSI pipeline with HTTP as supply and the OpenSearch Serverless index as sink. The SageMaker pocket book 2_data_ingestion.ipynb shows methods to ingest knowledge into the pipeline utilizing the Requests HTTP library.

The CloudFormation template additionally creates network, encryption and data access insurance policies required on your OpenSearch Serverless assortment. Replace these insurance policies to use least-privilege permissions.

The CloudFormation template title and OpenSearch Service index title are referenced within the SageMaker pocket book 3_rag_inference.ipynb. If you happen to change the default names, be sure to replace them within the pocket book.

Check the answer

After you could have created the CloudFormation stack, you’ll be able to check the answer. Full the next steps:

- On the SageMaker console, select Notebooks within the navigation pane.

- Choose

MultimodalNotebookInstanceand select Open JupyterLab.

- In File Browser, traverse to the notebooks folder to see notebooks and supporting recordsdata.

The notebooks are numbered within the sequence through which they run. Directions and feedback in every pocket book describe the actions carried out by that pocket book. We run these notebooks one after the other.

- Select

1_data_prep.ipynbto open it in JupyterLab. - On the Run menu, select Run All Cells to run the code on this pocket book.

This pocket book will obtain a publicly obtainable slide deck, convert every slide into the JPG file format, and add these to the S3 bucket.

- Select

2_data_ingestion.ipynbto open it in JupyterLab. - On the Run menu, select Run All Cells to run the code on this pocket book.

On this pocket book, you create an index within the OpenSearch Serverless assortment. This index shops the embeddings knowledge for the slide deck. See the next code:

You utilize the Claude 3 Sonnet and Amazon Titan Textual content Embeddings fashions to transform the JPG photos created within the earlier pocket book into vector embeddings. These embeddings and extra metadata (such because the S3 path and outline of the picture file) are saved within the index together with the embeddings. The next code snippet exhibits how Claude 3 Sonnet generates picture descriptions:

The picture descriptions are handed to the Amazon Titan Textual content Embeddings mannequin to generate vector embeddings. These embeddings and extra metadata (such because the S3 path and outline of the picture file) are saved within the index together with the embeddings. The next code snippet exhibits the decision to the Amazon Titan Textual content Embeddings mannequin:

The information is ingested into the OpenSearch Serverless index by making an API name to the OSI pipeline. The next code snippet exhibits the decision made utilizing the Requests HTTP library:

- Select

3_rag_inference.ipynbto open it in JupyterLab. - On the Run menu, select Run All Cells to run the code on this pocket book.

This pocket book implements the RAG resolution: you change the person query into embeddings, discover a comparable picture description from the vector database, and supply the retrieved description to Claude 3 Sonnet to generate a solution to the person query. You utilize the next immediate template:

The next code snippet offers the RAG workflow:

Outcomes

The next desk accommodates some person questions and responses generated by our implementation. The Query column captures the person query, and the Reply column is the textual response generated by Claude 3 Sonnet. The Picture column exhibits the k-NN slide match returned by the OpenSearch Serverless vector search.

Multimodal RAG outcomes

Question your index

You should use OpenSearch Dashboards to work together with the OpenSearch API to run fast assessments in your index and ingested knowledge.

Cleanup

To keep away from incurring future expenses, delete the assets. You are able to do this by deleting the stack utilizing the AWS CloudFormation console.

Conclusion

Enterprises generate new content material on a regular basis, and slide decks are a typical solution to share and disseminate info internally throughout the group and externally with clients or at conferences. Over time, wealthy info can stay buried and hidden in non-text modalities like graphs and tables in these slide decks.

You should use this resolution and the facility of multimodal FMs such because the Amazon Titan Textual content Embeddings and Claude 3 Sonnet to find new info or uncover new views on content material in slide decks. You’ll be able to attempt completely different Claude fashions obtainable on Amazon Bedrock by updating the CLAUDE_MODEL_ID within the globals.py file.

That is Half 2 of a three-part sequence. We used the Amazon Titan Multimodal Embeddings and the LLaVA mannequin in Half 1. In Half 3, we’ll evaluate the approaches from Half 1 and Half 2.

Parts of this code are launched underneath the Apache 2.0 License.

In regards to the authors

Amit Arora is an AI and ML Specialist Architect at Amazon Net Companies, serving to enterprise clients use cloud-based machine studying companies to quickly scale their improvements. He’s additionally an adjunct lecturer within the MS knowledge science and analytics program at Georgetown College in Washington D.C.

Amit Arora is an AI and ML Specialist Architect at Amazon Net Companies, serving to enterprise clients use cloud-based machine studying companies to quickly scale their improvements. He’s additionally an adjunct lecturer within the MS knowledge science and analytics program at Georgetown College in Washington D.C.

Manju Prasad is a Senior Options Architect at Amazon Net Companies. She focuses on offering technical steering in a wide range of technical domains, together with AI/ML. Previous to becoming a member of AWS, she designed and constructed options for corporations within the monetary companies sector and likewise for a startup. She is obsessed with sharing data and fostering curiosity in rising expertise.

Manju Prasad is a Senior Options Architect at Amazon Net Companies. She focuses on offering technical steering in a wide range of technical domains, together with AI/ML. Previous to becoming a member of AWS, she designed and constructed options for corporations within the monetary companies sector and likewise for a startup. She is obsessed with sharing data and fostering curiosity in rising expertise.

Archana Inapudi is a Senior Options Architect at AWS, supporting a strategic buyer. She has over a decade of cross-industry experience main strategic technical initiatives. Archana is an aspiring member of the AI/ML technical discipline neighborhood at AWS. Previous to becoming a member of AWS, Archana led a migration from conventional siloed knowledge sources to Hadoop at a healthcare firm. She is obsessed with utilizing expertise to speed up progress, present worth to clients, and obtain enterprise outcomes.

Archana Inapudi is a Senior Options Architect at AWS, supporting a strategic buyer. She has over a decade of cross-industry experience main strategic technical initiatives. Archana is an aspiring member of the AI/ML technical discipline neighborhood at AWS. Previous to becoming a member of AWS, Archana led a migration from conventional siloed knowledge sources to Hadoop at a healthcare firm. She is obsessed with utilizing expertise to speed up progress, present worth to clients, and obtain enterprise outcomes.

Antara Raisa is an AI and ML Options Architect at Amazon Net Companies, supporting strategic clients based mostly out of Dallas, Texas. She additionally has earlier expertise working with giant enterprise companions at AWS, the place she labored as a Companion Success Options Architect for digital-centered clients.

Antara Raisa is an AI and ML Options Architect at Amazon Net Companies, supporting strategic clients based mostly out of Dallas, Texas. She additionally has earlier expertise working with giant enterprise companions at AWS, the place she labored as a Companion Success Options Architect for digital-centered clients.