This AI Research from China Provides Empirical Evidence on the Relationship between Compression and Intelligence

Many people believe that intelligence and compression go hand in hand, and some experts even go so far as to say that the two are essentially the same. Recent advances in LLMs and their impact on AI make this idea even more intriguing, prompting researchers to look at language modeling through the lens of compression. In theory, any prediction model can be converted into a lossless compressor, and vice versa. Since LLMs have proven to be quite effective at compressing data, language modeling can be thought of as a form of compression.
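To make the prediction-compression equivalence concrete, here is a minimal Python sketch. It assumes an ideal entropy coder, such as arithmetic coding, which spends about -log2 p bits on a symbol the model predicted with probability p. The sketch does not implement the coder itself; it simply sums those ideal code lengths. The two toy character models (`uniform_model` and `adaptive_unigram_model`) are hypothetical stand-ins for a language model and are not taken from the study.

```python
import math
from collections import Counter

# Hypothetical 27-symbol alphabet: lowercase letters plus space.
ALPHABET = list("abcdefghijklmnopqrstuvwxyz ")


def ideal_code_length_bits(text, predict):
    """Total bits an ideal entropy coder (e.g. arithmetic coding) would need to
    losslessly encode `text`, given `predict(prefix, ch)` -> P(next char = ch)."""
    total = 0.0
    for i, ch in enumerate(text):
        p = predict(text[:i], ch)
        total += -math.log2(p)  # better prediction (higher p) -> fewer bits
    return total


def uniform_model(prefix, ch):
    # Knows nothing about the data: every symbol is equally likely.
    return 1.0 / len(ALPHABET)


def adaptive_unigram_model(prefix, ch):
    # Laplace-smoothed character frequencies learned from the prefix so far.
    counts = Counter(prefix)
    return (counts[ch] + 1) / (len(prefix) + len(ALPHABET))


text = "abab " * 20
print(f"uniform model:  {ideal_code_length_bits(text, uniform_model):.1f} bits")
print(f"adaptive model: {ideal_code_length_bits(text, adaptive_unigram_model):.1f} bits")
```

The model that predicts the text better yields the shorter lossless encoding. This is the sense in which a stronger language model is also a stronger compressor.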

For the current LLM-based AI paradigm, this makes the argument that compression leads to intelligence all the more compelling. However, despite much theoretical debate on the subject, there is still little empirical evidence linking compression and intelligence. If a language model can losslessly encode a text corpus in fewer bits, is that a sign of intelligence? That is the question a groundbreaking new study by Tencent and The Hong Kong University of Science and Technology sets out to answer empirically. The study takes a pragmatic approach to the concept of "intelligence": rather than straying into philosophical or even contradictory territory, it focuses on a model's ability to perform different downstream tasks. Intelligence is tested along three main abilities: knowledge and common sense, coding, and mathematical reasoning.

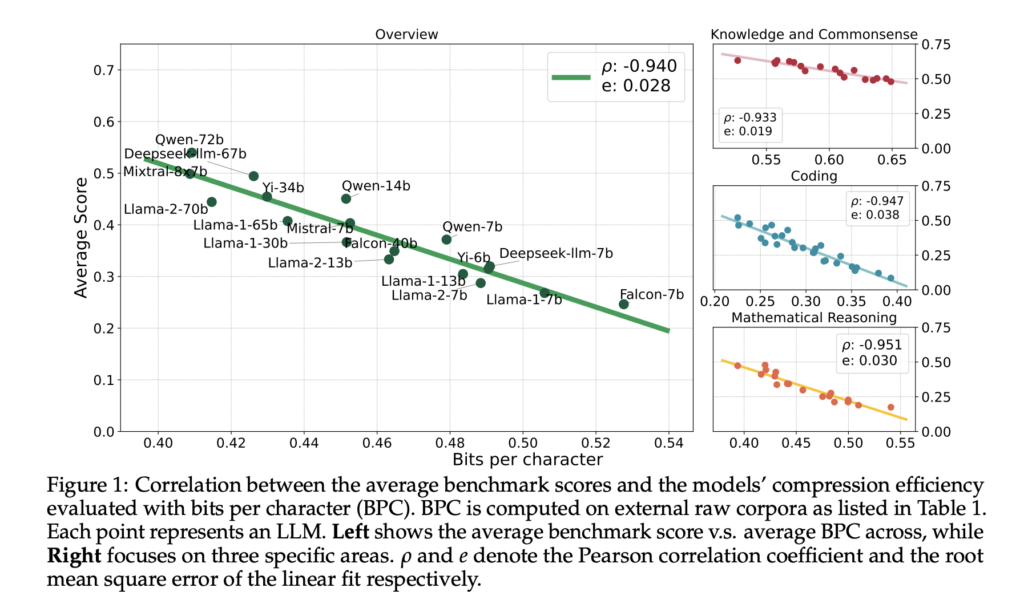

More precisely, the team measured how efficiently different LLMs compress external raw corpora in the relevant domain (e.g., GitHub code for coding skills). They then evaluated the same models on various downstream tasks and used their average benchmark scores as a measure of domain-specific intelligence.
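One common way to express compression efficiency is bits per character (BPC). BPC is the total number of bits the model needs for a text, divided by the number of characters rather than tokens, so that models with different tokenizers can be compared on the same corpus. The sketch below assumes that metric and assumes the model reports natural-log token probabilities. The function name and the per-token numbers are hypothetical, made up for illustration.

```python
import math


def bits_per_character(token_logprobs, text):
    """Compression efficiency as bits per character (BPC); lower is better.

    token_logprobs: natural-log probabilities the model assigned to each
    token of `text`. Normalizing by characters, not tokens, keeps the
    metric comparable across tokenizers.
    """
    total_bits = -sum(token_logprobs) / math.log(2)  # nats -> bits
    return total_bits / len(text)


snippet = "def add(a, b):\n    return a + b\n"
# Made-up numbers: model A splits the snippet into 4 tokens, model B into 8.
model_a = [math.log(0.5)] * 4
model_b = [math.log(0.8)] * 8

print(f"model A: {bits_per_character(model_a, snippet):.4f} BPC")
print(f"model B: {bits_per_character(model_b, snippet):.4f} BPC")
```

Here model B uses more tokens but still compresses the snippet into fewer total bits. It therefore scores a lower BPC, which a per-token loss would not reveal directly.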

Based on experiments with 30 public LLMs and 12 different benchmarks, the researchers establish a striking result: the downstream ability of LLMs is roughly linearly related to their compression efficiency, with a Pearson correlation coefficient of about -0.95 in each assessed intelligence domain. Importantly, the linear relationship also holds for most individual benchmarks. Recent and parallel studies have examined the relationship between benchmark scores and compression-equivalent metrics such as validation loss, but only within a single model series, where the checkpoints share most configurations, including model designs, tokenizers, and data.

This study is the first to show that intelligence in LLMs correlates linearly with compression regardless of model size, tokenizer, context window length, or pretraining data distribution. By demonstrating a general linear association between the two, the research supports the long-standing theory that better compression indicates greater intelligence. Compression efficiency is a useful unsupervised metric for LLMs because the text corpora used to measure it can easily be refreshed to avoid overfitting and test-set contamination. Given its linear correlation with model abilities, the results support compression efficiency as a stable, flexible, and reliable metric for evaluating LLMs. To make it easy for researchers to build and update their own compression corpora in the future, the team has open-sourced its data collection and processing pipelines.

The researchers highlight several caveats to their study. First, fine-tuned models are not suitable as general-purpose text compressors, so they restrict their attention to base models. Nevertheless, they argue that the connection between a base model's compression efficiency and the benchmark scores of the fine-tuned models derived from it is intriguing and deserves further investigation. Second, the results may hold only for fully trained models and may not apply to LMs in which the assessed abilities have not yet emerged. The team's work opens up exciting avenues for future research and invites the research community to delve deeper into these questions.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.

Dhanshree Shenwai is a Computer Science Engineer with solid experience at FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone's life easier in today's evolving world.