Understanding and predicting urban heat islands at Gramener using Amazon SageMaker geospatial capabilities

This is a guest post co-authored by Shravan Kumar and Avirat S from Gramener.

Gramener, a Straive company, contributes to sustainable development by focusing on agriculture, forestry, water management, and renewable energy. By providing authorities with the tools and insights they need to make informed decisions about environmental and social impact, Gramener is playing a vital role in building a more sustainable future.

Urban heat islands (UHIs) are areas within cities that experience significantly higher temperatures than their surrounding rural areas. UHIs are a growing concern because they can lead to various environmental and health issues. To address this challenge, Gramener has developed a solution that uses spatial data and advanced modeling techniques to understand and mitigate the following UHI effects:

- Temperature discrepancy – UHIs can cause urban areas to be hotter than their surrounding rural areas.

- Health impact – Higher temperatures in UHIs contribute to a 10-20% increase in heat-related illnesses and fatalities.

- Energy consumption – UHIs amplify air conditioning demands, resulting in a surge of up to 20% in energy consumption.

- Air quality – UHIs worsen air quality, leading to higher levels of smog and particulate matter, which can increase respiratory problems.

- Economic impact – UHIs can result in billions of dollars in additional energy costs, infrastructure damage, and healthcare expenditures.

Gramener’s GeoBox solution empowers users to effortlessly tap into and analyze public geospatial data through its powerful API, enabling seamless integration into existing workflows. This streamlines exploration and saves valuable time and resources, allowing communities to quickly identify UHI hotspots. GeoBox then transforms raw data into actionable insights presented in user-friendly formats like raster, GeoJSON, and Excel, ensuring clear understanding and immediate implementation of UHI mitigation strategies. This empowers communities to make informed decisions and implement sustainable urban development initiatives, ultimately supporting citizens through improved air quality, reduced energy consumption, and a cooler, healthier environment.

This post demonstrates how Gramener’s GeoBox solution uses Amazon SageMaker geospatial capabilities to perform earth observation analysis and unlock UHI insights from satellite imagery. SageMaker geospatial capabilities make it straightforward for data scientists and machine learning (ML) engineers to build, train, and deploy models using geospatial data. SageMaker geospatial capabilities allow you to efficiently transform and enrich large-scale geospatial datasets, and accelerate product development and time to insight with pre-trained ML models.

Solution overview

GeoBox aims to analyze and predict the UHI effect by harnessing spatial characteristics. It helps in understanding how proposed infrastructure and land use changes can impact UHI patterns and identifies the key factors influencing UHI. This analytical model provides accurate estimates of land surface temperature (LST) at a granular level, allowing Gramener to quantify changes in the UHI effect based on input parameters such as spectral indexes and building data.

GeoBox offers city departments the following benefits:

- Improved climate adaptation planning – Informed decisions reduce the impact of extreme heat events.

- Support for green space expansion – More green spaces enhance air quality and quality of life.

- Enhanced interdepartmental collaboration – Coordinated efforts improve public safety.

- Strategic emergency preparedness – Targeted planning reduces the potential for emergencies.

- Health services collaboration – Cooperation leads to more effective health interventions.

Solution workflow

In this section, we discuss how the different components work together, from data acquisition to spatial modeling and forecasting, serving as the core of the UHI solution. The solution follows a structured workflow, with a primary focus on addressing UHIs in a city in Canada.

Phase 1: Data pipeline

The Landsat 8 satellite captures detailed imagery of the area of interest every 15 days at 11:30 AM, providing a comprehensive view of the city’s landscape and environment. A grid system is established with a 48-meter grid size using Mapbox’s Supermercado Python library at zoom level 19, enabling precise spatial analysis.
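The link between zoom level 19 and a 48-meter cell can be checked with standard Web Mercator tile arithmetic. The sketch below assumes the grid cells are Web Mercator (slippy-map) tiles, which is what Supermercado operates on. It uses a latitude of 51° N purely as an illustrative value for a Canadian city; the function name is hypothetical.

```python
import math

# WGS84 equatorial circumference in meters, as used by Web Mercator tiling.
EARTH_CIRCUMFERENCE_M = 40_075_016.686


def tile_ground_size_m(zoom: int, latitude_deg: float) -> float:
    """Approximate east-west ground width of one Web Mercator tile, in meters."""
    tiles_across = 2 ** zoom  # number of tiles spanning the globe at this zoom
    return EARTH_CIRCUMFERENCE_M * math.cos(math.radians(latitude_deg)) / tiles_across


# Assumed latitude (51 degrees north), chosen only for illustration.
size = tile_ground_size_m(19, 51.0)
print(f"{size:.1f} m")
```

At the equator the same zoom level yields tiles of roughly 76 meters, so the 48-meter figure is specific to the latitude of the study area.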

Phase 2: Exploratory analysis

Integrating infrastructure and population data layers, GeoBox empowers users to visualize the city’s variable distribution and derive urban morphological insights, enabling a comprehensive analysis of the city’s structure and development.

Also, Landsat imagery from phase 1 is used to derive insights like the Normalized Difference Vegetation Index (NDVI) and Normalized Difference Built-up Index (NDBI), with data meticulously scaled to the 48-meter grid for consistency and accuracy.
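These indexes are all normalized differences of two spectral bands. As a minimal sketch, using the standard definitions and the Landsat 8 OLI band assignments (green = band 3, red = band 4, near infrared = band 5, shortwave infrared 1 = band 6), they might be computed per pixel as follows. The function names and the sample reflectance values are hypothetical and not taken from Gramener's code.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def spectral_indices(green: float, red: float, nir: float, swir1: float) -> dict:
    """Compute NDVI, NDBI, and MNDWI from reflectance values of one pixel."""
    return {
        "NDVI": normalized_difference(nir, red),       # high over vegetation
        "NDBI": normalized_difference(swir1, nir),     # high over built-up surfaces
        "MNDWI": normalized_difference(green, swir1),  # high over open water
    }


# Hypothetical reflectance values for a vegetated pixel.
indices = spectral_indices(green=0.08, red=0.05, nir=0.45, swir1=0.20)
print({name: round(value, 3) for name, value in indices.items()})
```

For the vegetated sample pixel, NDVI is strongly positive while NDBI and MNDWI are negative, which is the pattern expected over green cover.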

The following variables are used:

- Land surface temperature

- Building site coverage

- NDVI

- Building block coverage

- NDBI

- Building area

- Albedo

- Building count

- Modified Normalized Difference Water Index (MNDWI)

- Building height

- Number of floors and floor area

- Floor area ratio

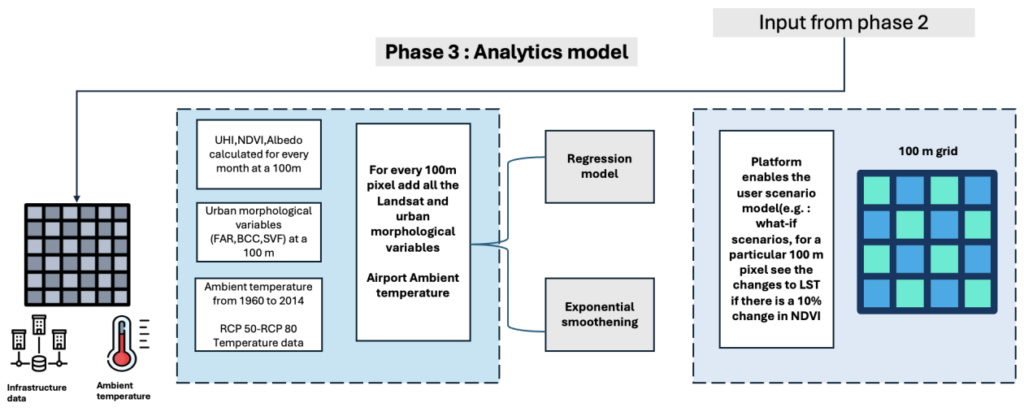

Phase 3: Analytics model

This phase comprises three modules, employing ML models on data to gain insights into LST and its relationship with other influential factors:

- Module 1: Zonal statistics and aggregation – Zonal statistics play a vital role in computing statistics using values from the value raster. This involves extracting statistical data for each zone based on the zone raster. Aggregation is performed at a 100-meter resolution, allowing for a comprehensive analysis of the data.

- Module 2: Spatial modeling – Gramener evaluated three regression models (linear, spatial, and spatial fixed effects) to unravel the correlation between LST and other variables. Among these models, the spatial fixed effects model yielded the highest mean R-squared value, particularly for the timeframe spanning 2014 to 2020.

- Module 3: Variables forecasting – To forecast variables in the short term, Gramener employed exponential smoothing techniques. These forecasts aided in understanding future LST values and their trends. Additionally, Gramener carried out long-term analysis by using Representative Concentration Pathway (RCP8.5) data to predict LST values over extended periods.

Data acquisition and preprocessing

To implement the modules, Gramener used the SageMaker geospatial notebook within Amazon SageMaker Studio. The geospatial notebook kernel is pre-installed with commonly used geospatial libraries, enabling direct visualization and processing of geospatial data within the Python notebook environment.

Gramener employed various datasets to predict LST trends, including building assessment and temperature data, as well as satellite imagery. The key to the UHI solution was using data from the Landsat 8 satellite. This Earth-imaging satellite, a joint venture of USGS and NASA, served as a fundamental component in the project.

With the SearchRasterDataCollection API, SageMaker provides a purpose-built functionality to facilitate the retrieval of satellite imagery. Gramener used this API to retrieve Landsat 8 satellite data for the UHI solution.

The SearchRasterDataCollection API uses the following input parameters:

- Arn – The Amazon Resource Name (ARN) of the raster data collection used in the query

- AreaOfInterest – A GeoJSON polygon representing the area of interest

- TimeRangeFilter – The time range of interest, denoted as {StartTime: <string>, EndTime: <string>}

- PropertyFilters – Supplementary property filters, such as specifications for maximum acceptable cloud cover, can also be incorporated

The following example demonstrates how Landsat 8 data can be queried via the API:
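A minimal sketch of such a query, assuming the boto3 `sagemaker-geospatial` client, might look as follows. The helper names, the polygon coordinates, the dates, and the 20% cloud cover threshold are illustrative assumptions. The collection ARN is left as a placeholder to be looked up for your account and Region.

```python
def build_landsat_query(ring, start_time, end_time, max_cloud_cover=20.0):
    """Assemble the RasterDataCollectionQuery part of a search request."""
    return {
        "AreaOfInterest": {
            "AreaOfInterestGeometry": {
                # One closed ring of [longitude, latitude] pairs.
                "PolygonGeometry": {"Coordinates": [ring]}
            }
        },
        "TimeRangeFilter": {"StartTime": start_time, "EndTime": end_time},
        "PropertyFilters": {
            "Properties": [
                {"Property": {"EoCloudCover": {"LowerBound": 0.0,
                                               "UpperBound": max_cloud_cover}}}
            ],
            "LogicalOperator": "AND",
        },
    }


def search_landsat(collection_arn, query, region_name="us-west-2"):
    """Run the search. Requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the query builder works without AWS access

    client = boto3.client("sagemaker-geospatial", region_name=region_name)
    return client.search_raster_data_collection(
        Arn=collection_arn, RasterDataCollectionQuery=query
    )


# Hypothetical area of interest; replace with the polygon of your study area.
example_ring = [[-114.2, 51.0], [-114.0, 51.0], [-114.0, 51.1],
                [-114.2, 51.1], [-114.2, 51.0]]
query = build_landsat_query(example_ring, "2020-06-01T00:00:00Z", "2020-08-31T23:59:59Z")
# response = search_landsat("<landsat-raster-data-collection-arn>", query)
```

Keeping the query construction separate from the API call makes it possible to inspect or log the request before any AWS resources are touched.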

To process large-scale satellite data, Gramener used Amazon SageMaker Processing with the geospatial container. SageMaker Processing enables the flexible scaling of compute clusters to accommodate tasks of varying sizes, from processing a single city block to managing planetary-scale workloads. Traditionally, manually creating and managing a compute cluster for such tasks was both costly and time-consuming, particularly due to the complexities involved in standardizing an environment suitable for geospatial data handling.

Now, with the specialized geospatial container in SageMaker, managing and running clusters for geospatial processing has become more straightforward. This process requires minimal coding effort: you simply define the workload, specify the location of the geospatial data in Amazon Simple Storage Service (Amazon S3), and select the appropriate geospatial container. SageMaker Processing then automatically provisions the necessary cluster resources, facilitating the efficient run of geospatial tasks on scales that range from city level to continent level.

SageMaker fully manages the underlying infrastructure required for the processing job. It allocates cluster resources for the duration of the job and removes them upon job completion. Finally, the results of the processing job are saved in the designated S3 bucket.

A SageMaker Processing job using the geospatial image can be configured as follows from within the geospatial notebook:
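As a hedged sketch, assuming the SageMaker Python SDK's `ScriptProcessor` class, the configuration could look like the following. The helper names, the job name prefix, and the default instance settings are hypothetical. The execution role ARN and the geospatial image URI are placeholders that depend on your account and Region.

```python
def processor_settings(role_arn, image_uri, instance_count=2,
                       instance_type="ml.m5.xlarge"):
    """Collect the constructor arguments for a ScriptProcessor."""
    return {
        "command": ["python3"],          # interpreter used to launch the script
        "image_uri": image_uri,          # URI of the geospatial container image
        "role": role_arn,                # IAM execution role for the job
        "instance_count": instance_count,
        "instance_type": instance_type,
        "base_job_name": "geospatial-processing",  # hypothetical job name prefix
    }


def create_processor(settings):
    """Instantiate the processor. Requires the SageMaker Python SDK."""
    from sagemaker.processing import ScriptProcessor  # lazy import

    return ScriptProcessor(**settings)


# Placeholders only; substitute the values for your own environment.
settings = processor_settings(
    role_arn="<execution-role-arn>",
    image_uri="<geospatial-image-uri>",
)
# processor = create_processor(settings)
```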

The instance_count parameter defines how many instances the processing job should use, and the instance_type parameter defines what type of instance should be used.

The following example shows how a Python script is run on the processing job cluster. When the run command is invoked, the cluster starts up and automatically provisions the necessary cluster resources:
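A minimal sketch of such a call, assuming a `ScriptProcessor` instance from the SageMaker Python SDK and its `ProcessingInput` and `ProcessingOutput` classes, is shown below. The helper names, script name, and S3 locations are hypothetical placeholders. The container directories follow the usual `/opt/ml/processing` convention.

```python
CONTAINER_INPUT_DIR = "/opt/ml/processing/input"
CONTAINER_OUTPUT_DIR = "/opt/ml/processing/output"


def job_channels(input_s3_uri, output_s3_uri):
    """Map S3 locations to the directories the script sees inside the container."""
    return {
        "input": {"source": input_s3_uri, "destination": CONTAINER_INPUT_DIR},
        "output": {"source": CONTAINER_OUTPUT_DIR, "destination": output_s3_uri},
    }


def run_script(processor, script_path, channels):
    """Start the processing job. Requires the SageMaker Python SDK."""
    from sagemaker.processing import ProcessingInput, ProcessingOutput  # lazy import

    processor.run(
        code=script_path,
        inputs=[ProcessingInput(**channels["input"])],
        outputs=[ProcessingOutput(**channels["output"])],
    )


# Hypothetical S3 locations; replace with your own bucket and prefixes.
channels = job_channels("s3://<bucket>/landsat/input/", "s3://<bucket>/landsat/output/")
# run_script(processor, "compute_indices.py", channels)
```

Files under the input location are copied into the container before the script starts, and anything the script writes to the output directory is uploaded to the output location when the job finishes.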

Spatial modeling and LST predictions

In the processing job, a range of variables, including top-of-atmosphere spectral radiance, brightness temperature, and reflectance from Landsat 8, are computed. Additionally, morphological variables such as floor area ratio (FAR), building site coverage, building block coverage, and Shannon’s entropy value are calculated.

The following code demonstrates how this band arithmetic can be performed:
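As an illustration, the standard Landsat 8 conversions from digital numbers to radiance, brightness temperature, and reflectance can be sketched as below. This is not Gramener's code. The rescaling constants are the values typically published in Landsat 8 Level-1 scene metadata for thermal band 10 and the reflective bands, and should be read from each scene's MTL file in practice. The function names are hypothetical.

```python
import math

# Typical Landsat 8 Level-1 constants (assumed here; read them from the MTL file).
RADIANCE_MULT_B10 = 3.342e-4  # RADIANCE_MULT_BAND_10
RADIANCE_ADD_B10 = 0.1        # RADIANCE_ADD_BAND_10
K1_B10 = 774.8853             # K1_CONSTANT_BAND_10
K2_B10 = 1321.0789            # K2_CONSTANT_BAND_10
REFLECTANCE_MULT = 2.0e-5     # REFLECTANCE_MULT_BAND_x
REFLECTANCE_ADD = -0.1        # REFLECTANCE_ADD_BAND_x


def toa_radiance(dn: float) -> float:
    """Top-of-atmosphere spectral radiance for band 10 from a digital number."""
    return RADIANCE_MULT_B10 * dn + RADIANCE_ADD_B10


def brightness_temperature_k(radiance: float) -> float:
    """At-sensor brightness temperature in kelvin from band 10 radiance."""
    return K2_B10 / math.log(K1_B10 / radiance + 1.0)


def toa_reflectance(dn: float, sun_elevation_deg: float) -> float:
    """Top-of-atmosphere reflectance corrected for the sun elevation angle."""
    uncorrected = REFLECTANCE_MULT * dn + REFLECTANCE_ADD
    return uncorrected / math.sin(math.radians(sun_elevation_deg))


# Hypothetical digital number for a warm urban pixel.
radiance = toa_radiance(30000)
print(f"{brightness_temperature_k(radiance) - 273.15:.1f} degrees Celsius")
```

Brightness temperature is only an intermediate quantity. Deriving LST from it additionally requires an emissivity correction, which is omitted from this sketch.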

After the variables have been calculated, zonal statistics are performed to aggregate data by grid. This involves calculating statistics based on the values of interest within each zone. For these computations, a grid size of approximately 100 meters has been used.
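The idea behind zonal statistics can be sketched in a few lines of plain Python: every cell of the value raster is assigned to the zone given at the same position in the zone raster, and statistics are then computed per zone. The function name and the tiny rasters are hypothetical. A production workflow would typically rely on a raster library instead.

```python
from collections import defaultdict
from statistics import mean


def zonal_stats(value_raster, zone_raster):
    """Return {zone_id: {"count", "mean", "min", "max"}} for two equally shaped rasters."""
    values_by_zone = defaultdict(list)
    for value_row, zone_row in zip(value_raster, zone_raster):
        for value, zone in zip(value_row, zone_row):
            if value is not None:  # None marks no-data cells
                values_by_zone[zone].append(value)
    return {
        zone: {"count": len(v), "mean": mean(v), "min": min(v), "max": max(v)}
        for zone, v in values_by_zone.items()
    }


# Hypothetical 2x4 LST raster (degrees Celsius) and a zone raster with two zones.
lst = [[30.0, 32.0, 25.0, None],
       [31.0, 33.0, 26.0, 27.0]]
zones = [[1, 1, 2, 2],
         [1, 1, 2, 2]]
stats = zonal_stats(lst, zones)
print(stats[1]["mean"], stats[2]["mean"])  # prints "31.5 26.0"
```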

After aggregating the data, spatial modeling is performed. Gramener used spatial regression methods, such as linear regression and spatial fixed effects, to account for spatial dependence in the observations. This approach facilitates modeling the relationship between variables and LST at a micro level.

The following code illustrates how such spatial modeling can be run:
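The exact model specification is not given here, so the following is only a sketch of the simplest spatial fixed effects form: a single predictor with a separate intercept for each spatial unit, estimated by demeaning the data within each unit (the within estimator). The function name, the region labels, and the synthetic NDVI and LST values are all hypothetical. A real analysis would use several predictors and a dedicated statistics library.

```python
from collections import defaultdict


def fixed_effects_slope(x, y, regions):
    """Estimate a common slope of y on x with one intercept per region."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for xi, yi, r in zip(x, y, regions):
        sums[r][0] += xi
        sums[r][1] += yi
        sums[r][2] += 1
    means = {r: (sx / n, sy / n) for r, (sx, sy, n) in sums.items()}

    # Within transformation: subtract each region's mean, then run OLS.
    num = den = 0.0
    for xi, yi, r in zip(x, y, regions):
        dx = xi - means[r][0]
        dy = yi - means[r][1]
        num += dx * dy
        den += dx * dx
    slope = num / den
    intercepts = {r: my - slope * mx for r, (mx, my) in means.items()}
    return slope, intercepts


# Synthetic data: LST falls by 10 degrees per unit NDVI, with region-specific baselines.
ndvi = [0.1, 0.3, 0.5, 0.2, 0.4, 0.6]
lst = [34.0, 32.0, 30.0, 28.0, 26.0, 24.0]
region = ["A", "A", "A", "B", "B", "B"]
slope, intercepts = fixed_effects_slope(ndvi, lst, region)
print(round(slope, 3), {r: round(a, 3) for r, a in intercepts.items()})
```

Because each region gets its own intercept, unobserved differences between regions do not bias the estimated effect of the predictor on LST.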

Gramener used exponential smoothing to predict the LST values. Exponential smoothing is an effective method for time series forecasting that applies weighted averages to past data, with the weights decreasing exponentially over time. This method is particularly effective in smoothing out data to identify trends and patterns. By using exponential smoothing, it becomes possible to visualize and predict LST trends with greater precision, allowing for more accurate predictions of future values based on historical patterns.
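The exponential smoothing variant is not specified here, so the sketch below shows only the simplest form, in which each smoothed value is `alpha * observation + (1 - alpha) * previous smoothed value` and the last smoothed value serves as the one-step-ahead forecast. The function name, the smoothing factor, and the sample series are hypothetical.

```python
def exponential_smoothing(series, alpha):
    """Return the smoothed series; its last value is the one-step-ahead forecast."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [series[0]]  # initialize with the first observation
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical mean summer LST values (degrees Celsius) for consecutive years.
lst_history = [30.0, 32.0, 31.0, 33.0]
levels = exponential_smoothing(lst_history, alpha=0.5)
forecast = levels[-1]
print(levels, forecast)  # prints "[30.0, 31.0, 31.0, 32.0] 32.0"
```

A larger `alpha` makes the forecast react faster to recent observations, while a smaller value gives more weight to the longer history.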

To visualize the predictions, Gramener used the SageMaker geospatial notebook with open-source geospatial libraries to overlay model predictions on a base map and provide layered visualizations of geospatial datasets directly within the notebook.

Conclusion

This post demonstrated how Gramener is empowering clients to make data-driven decisions for sustainable urban environments. With SageMaker, Gramener achieved substantial time savings in UHI analysis, reducing processing time from weeks to hours. This rapid insight generation allows Gramener’s clients to pinpoint areas requiring UHI mitigation strategies, proactively plan urban development and infrastructure projects to minimize UHI, and gain a holistic understanding of environmental factors for comprehensive risk assessment.

Discover the potential of integrating Earth observation data in your sustainability projects with SageMaker. For more information, refer to Get started with Amazon SageMaker geospatial capabilities.

About the Authors

Abhishek Mittal is a Solutions Architect for the worldwide public sector team with Amazon Web Services (AWS), where he primarily works with ISV partners across industries, providing them with architectural guidance for building scalable architecture and implementing strategies to drive adoption of AWS services. He is passionate about modernizing traditional platforms and security in the cloud. Outside work, he is a travel enthusiast.


Janosch Woschitz is a Senior Solutions Architect at AWS, specializing in AI/ML. With over 15 years of experience, he supports customers globally in leveraging AI and ML for innovative solutions and building ML platforms on AWS. His expertise spans machine learning, data engineering, and scalable distributed systems, augmented by a strong background in software engineering and industry expertise in domains such as autonomous driving.


Shravan Kumar is a Senior Director of Client Success at Gramener, with a decade of experience in business analytics, data evangelism, and forging deep client relations. He holds a solid foundation in client management and account management within the realm of data analytics, AI, and ML.


Avirat S is a geospatial data scientist at Gramener, leveraging AI/ML to unlock insights from geographic data. His expertise lies in disaster management, agriculture, and urban planning, where his analysis informs decision-making processes.
