Improve code review and approval efficiency with generative AI using Amazon Bedrock

In the world of software development, code review and approval are important processes for ensuring the quality, security, and functionality of the software being developed. However, managers tasked with overseeing these critical processes often face numerous challenges, such as the following:

- Lack of technical expertise – Managers may not have an in-depth technical understanding of the programming language used, or may not have been involved in software engineering for an extended period. This results in a knowledge gap that can make it difficult for them to accurately assess the impact and soundness of the proposed code changes.

- Time constraints – Code review and approval can be a time-consuming process, especially in larger or more complex projects. Managers have to balance the thoroughness of the review against the pressure to meet project timelines.

- Volume of change requests – Dealing with a high volume of change requests is a common challenge for managers, especially if they’re overseeing multiple teams and projects. As with time constraints, managers need to be able to handle these requests efficiently so as not to hold back project progress.

- Manual effort – Code review requires manual effort by the managers, and the lack of automation can make it difficult to scale the process.

- Documentation – Proper documentation of the code review and approval process is important for transparency and accountability.

With the rise of generative artificial intelligence (AI), managers can now harness this transformative technology and integrate it with the AWS suite of deployment tools and services to streamline the review and approval process in a way that was not previously possible. In this post, we explore a solution that offers an integrated end-to-end deployment workflow that incorporates automated change analysis and summarization together with approval workflow functionality. We use Amazon Bedrock, a fully managed service that makes foundation models (FMs) from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that’s best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using AWS tools without having to manage any infrastructure.

Solution overview

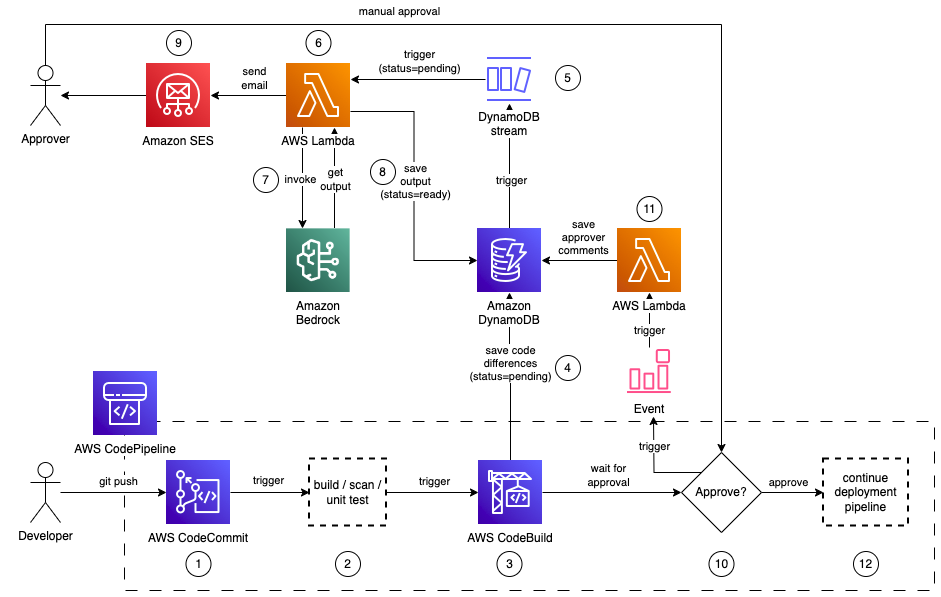

The next diagram illustrates the answer structure.

The workflow consists of the following steps:

- A developer pushes new code changes to their code repository (such as AWS CodeCommit), which automatically triggers the start of an AWS CodePipeline deployment.

- The application code is built, scanned for vulnerabilities, and unit tested using your preferred tools.

- AWS CodeBuild retrieves the repository and runs a git show command to extract the code differences between the current commit and the previous commit. This produces a line-by-line output that indicates the code changes made in this release.

- CodeBuild saves the output to an Amazon DynamoDB table with additional reference information:

  - CodePipeline run ID
  - AWS Region
  - CodePipeline name
  - CodeBuild build number
  - Date and time
  - Status

- Amazon DynamoDB Streams captures the data modifications made to the table.

- An AWS Lambda function is triggered by the DynamoDB stream to process the captured record.

- The function invokes the Anthropic Claude v2 model on Amazon Bedrock through the Amazon Bedrock InvokeModel API call. The code differences, together with a prompt, are provided as input to the model for analysis, and a summary of the code changes is returned as output.

- The output from the model is saved back to the same DynamoDB table.

- The manager is notified through Amazon Simple Email Service (Amazon SES) with the summary of code changes and a notice that their approval is required for the deployment.

- The manager reviews the email and provides their decision (either approve or reject) together with any review comments through the CodePipeline console.

- The approval decision and review comments are captured by Amazon EventBridge, which triggers a Lambda function to save them back to DynamoDB.

- If approved, the pipeline deploys the application code using your preferred tools. If rejected, the workflow ends and the deployment doesn’t proceed further.
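The CodeBuild step that extracts the diff and records it in DynamoDB can be sketched in Python. This is a minimal, hypothetical sketch rather than the solution’s actual buildspec: it assumes the build has a full Git clone of the repository (so that git show works), and the attribute names (executionId, codeDiff, and so on) and the PENDING_SUMMARY status value are illustrative choices. The item uses the DynamoDB low-level attribute-value format that the boto3 DynamoDB client’s put_item call expects.

```python
import subprocess
from datetime import datetime, timezone
from typing import Dict, Optional


def get_commit_diff(repo_dir: str = ".") -> str:
    """Return `git show HEAD` output: the latest commit and its diff against its parent."""
    result = subprocess.run(
        ["git", "show", "HEAD"],
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,  # raise if git fails, for example when .git metadata is missing
    )
    return result.stdout


def build_item(
    diff: str,
    execution_id: str,
    region: str,
    pipeline_name: str,
    build_number: int,
    now: Optional[datetime] = None,
) -> Dict[str, Dict[str, str]]:
    """Build a DynamoDB item (low-level attribute-value format) for one build.

    All attribute names and the status value are hypothetical.
    """
    now = now or datetime.now(timezone.utc)
    return {
        "executionId": {"S": execution_id},       # CodePipeline run ID
        "region": {"S": region},                  # AWS Region
        "pipelineName": {"S": pipeline_name},     # CodePipeline name
        "buildNumber": {"N": str(build_number)},  # DynamoDB numbers are sent as strings
        "timestamp": {"S": now.isoformat()},      # date and time as an ISO 8601 string
        "status": {"S": "PENDING_SUMMARY"},       # hypothetical status value
        "codeDiff": {"S": diff},                  # line-by-line code changes
    }
```

In a build, you might pass the resulting item to the DynamoDB client’s put_item call together with your table name, reading the build number from the CODEBUILD_BUILD_NUMBER environment variable and passing the pipeline execution ID into the build as an environment variable (for example, from the CodePipeline #{codepipeline.PipelineExecutionId} variable). Keep in mind that a DynamoDB item is limited to 400 KB, so very large diffs may need to be truncated or stored elsewhere, such as in Amazon S3.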
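The summarization step (the Lambda function triggered by DynamoDB Streams that calls InvokeModel) can be sketched in Python with boto3. This is a minimal sketch, not the solution’s deployed code: the codeDiff attribute name, the prompt wording, and the max_tokens_to_sample value are assumptions, and it assumes the stream is configured to include new images. It uses the Anthropic Claude v2 text-completions request format, in which the prompt alternates \n\nHuman: and \n\nAssistant: turns and the generated text comes back in the completion field.

```python
import json
from typing import Optional

MODEL_ID = "anthropic.claude-v2"  # the stack's default modelId

# Hypothetical prompt wording; Claude v2 text completions expect Human/Assistant turns.
PROMPT_TEMPLATE = (
    "\n\nHuman: Summarize the following code changes for a manager who must approve "
    "the deployment. Highlight functional, security, and configuration changes."
    "\n\n<diff>\n{diff}\n</diff>\n\nAssistant:"
)


def extract_diff(record: dict) -> Optional[str]:
    """Return the diff from a DynamoDB stream record, or None if it should be skipped.

    Assumes the stream includes new images and that the diff is stored in a
    string attribute named 'codeDiff' (hypothetical name).
    """
    if record.get("eventName") != "INSERT":
        return None  # ignore MODIFY events, such as the summary being written back
    new_image = record.get("dynamodb", {}).get("NewImage", {})
    return new_image.get("codeDiff", {}).get("S")


def build_request_body(diff: str, max_tokens: int = 1000) -> str:
    """Serialize a Claude v2 text-completions request for InvokeModel."""
    return json.dumps({
        "prompt": PROMPT_TEMPLATE.format(diff=diff),
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.0,
    })


def summarize(diff: str, client=None) -> str:
    """Invoke the model on Amazon Bedrock and return the generated summary."""
    if client is None:
        import boto3  # imported lazily so the helpers above need only the stdlib

        client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request_body(diff),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; Claude v2 returns its text in "completion".
    return json.loads(response["body"].read())["completion"]


def handler(event, context):
    for record in event.get("Records", []):
        diff = extract_diff(record)
        if diff:
            summary = summarize(diff)
            # Saving the summary to DynamoDB and notifying via Amazon SES are omitted here.
            print(summary)
```

Filtering on INSERT events is one way to keep the function from re-triggering itself when the summary is written back to the same table, because that write arrives on the stream as a MODIFY event. Passing the client in as a parameter also makes the Bedrock call easy to replace with a stub in unit tests.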

In the following sections, you deploy the solution and verify the end-to-end workflow.

Prerequisites

To follow the instructions in this solution, you need the following prerequisites:

Deploy the solution

To deploy the solution, complete the following steps:

- Choose Launch Stack to launch a CloudFormation stack in us-east-1:

- For EmailAddress, enter an email address that you have access to. The summary of code changes will be sent to this email address.

- For modelId, leave the default value anthropic.claude-v2, which is the Anthropic Claude v2 model.

Deploying the template takes about 4 minutes.

- When you receive an email from Amazon SES asking you to verify your email address, choose the link provided to authorize your email address.

- You’ll receive an email titled “Summary of Changes” for the initial commit of the sample repository into CodeCommit.

- On the AWS CloudFormation console, navigate to the Outputs tab of the deployed stack.

- Copy the value of RepoCloneURL. You need it to access the sample code repository.

Test the solution

You can test the workflow end to end by taking on the role of a developer and pushing some code changes. Sample code has been prepared for you in CodeCommit. To access the CodeCommit repository, enter the following commands in your IDE:

You will find the following directory structure for an AWS Cloud Development Kit (AWS CDK) application that creates a Lambda function to perform a bubble sort on a string of integers. The Lambda function is accessible through a publicly available URL.
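For orientation, the following hypothetical sketch shows the kind of handler that the first change in the list below describes: it sorts a comma-separated string of integers and lets the caller choose between bubble sort and quick sort. It is not the sample repository’s lambda/index.py. The numbers and algorithm query-string parameter names, the default algorithm, and the response shape are assumptions; the sketch relies only on Lambda function URLs delivering query-string parameters in the queryStringParameters field of the event.

```python
import json
from typing import List


def bubble_sort(values: List[int]) -> List[int]:
    """Return a sorted copy using bubble sort (O(n^2) comparisons)."""
    result = list(values)
    n = len(result)
    for i in range(n):
        swapped = False
        # After pass i, the largest i + 1 elements are in their final positions.
        for j in range(n - 1 - i):
            if result[j] > result[j + 1]:
                result[j], result[j + 1] = result[j + 1], result[j]
                swapped = True
        if not swapped:
            break  # no swaps means the list is already sorted
    return result


def quick_sort(values: List[int]) -> List[int]:
    """Return a sorted copy using a simple (not in-place) quick sort."""
    if len(values) <= 1:
        return list(values)
    pivot = values[len(values) // 2]
    less = [v for v in values if v < pivot]
    equal = [v for v in values if v == pivot]
    greater = [v for v in values if v > pivot]
    return quick_sort(less) + equal + quick_sort(greater)


ALGORITHMS = {"bubble": bubble_sort, "quick": quick_sort}


def handler(event, context):
    params = event.get("queryStringParameters") or {}
    algorithm = params.get("algorithm", "bubble")  # hypothetical default
    if algorithm not in ALGORITHMS:
        return {"statusCode": 400,
                "body": json.dumps({"error": f"unsupported algorithm: {algorithm}"})}
    try:
        numbers = [int(x) for x in params.get("numbers", "").split(",") if x.strip()]
    except ValueError:
        return {"statusCode": 400,
                "body": json.dumps({"error": "numbers must be comma-separated integers"})}
    return {
        "statusCode": 200,
        "body": json.dumps({"algorithm": algorithm,
                            "sorted": ALGORITHMS[algorithm](numbers)}),
    }
```

With this sketch, a request with numbers=5,3,9,1 and algorithm=quick returns {"algorithm": "quick", "sorted": [1, 3, 5, 9]} in the response body. Quick sort is written as a list-partitioning version for readability rather than as an in-place implementation.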

You make three changes to the application code.

- To enhance the function so that it supports both the quick sort and bubble sort algorithms, accepts a parameter that selects the algorithm to use, and returns both the algorithm used and the sorted array in the output, replace the entire content of lambda/index.py with the following code:

- To reduce the timeout setting of the function from 10 minutes to 5 seconds (because we don’t expect the function to run longer than a few seconds), update line 47 in my_sample_project/my_sample_project_stack.py as follows:

- To restrict invocation of the function using IAM for added security, update line 56 in my_sample_project/my_sample_project_stack.py as follows:

- Push the code changes by entering the following commands:

This starts the CodePipeline deployment workflow from Steps 1–9 as outlined in the solution overview. When invoking the Amazon Bedrock model, we provided the following prompt:

Xan Huang is a Senior Solutions Architect with AWS and is based in Singapore. He works with major financial institutions to design and build secure, scalable, and highly available solutions in the cloud. Outside of work, Xan spends most of his free time with his family and getting bossed around by his 3-year-old daughter. You can find Xan on
