A framework for health equity assessment of machine learning performance – Google Research Blog

Health equity is a major societal concern worldwide, and disparities have many causes. These include limitations in access to healthcare, differences in clinical treatment, and even fundamental differences in the diagnostic technology. In dermatology, for example, skin cancer outcomes are worse for populations such as minorities, those with lower socioeconomic status, and individuals with limited healthcare access. Recent advances in machine learning (ML) and artificial intelligence (AI) hold great promise for improving healthcare. However, the transition of these technologies from research to bedside must be accompanied by a careful understanding of whether and how they impact health equity.

Public health organizations define health equity as fairness of opportunity for everyone to be as healthy as possible. Importantly, equity may be different from equality. For example, people with greater barriers to improving their health may require more or different effort to experience this fair opportunity. Similarly, equity is not fairness as defined in the AI for healthcare literature. AI fairness often strives for equal performance of an AI technology across different patient populations, but this does not center the goal of prioritizing performance with respect to pre-existing health disparities.

In “Health Equity Assessment of machine Learning performance (HEAL): a framework and dermatology AI model case study”, published in The Lancet eClinicalMedicine, we propose a methodology to quantitatively assess whether ML-based health technologies perform equitably. In other words, does the ML model perform well for those with the worst health outcomes for the condition(s) the model is meant to address? This goal rests on the principle that health equity should prioritize and measure model performance with respect to disparate health outcomes. Those outcomes may be due to a number of factors, including structural inequities (e.g., demographic, social, cultural, political, economic, environmental and geographic).

The health equity framework (HEAL)

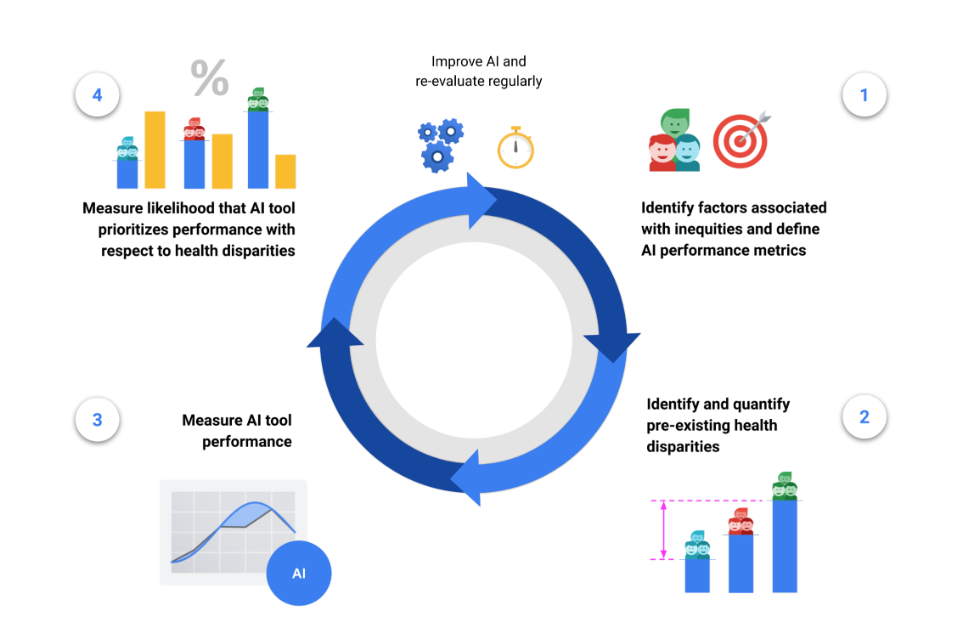

The HEAL framework proposes a 4-step process to estimate the likelihood that an ML-based health technology performs equitably:

- Identify factors associated with health inequities and define tool performance metrics,

- Identify and quantify pre-existing health disparities,

- Measure the performance of the tool for each subpopulation,

- Measure the likelihood that the tool prioritizes performance with respect to health disparities.

The final step’s output is termed the HEAL metric. It quantifies how anticorrelated the ML model’s performance is with health disparities. In other words, does the model perform better for populations that have worse health outcomes?
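The metric can be written as a formula, using notation of our own rather than the paper's. Let h_g be the pre-existing health outcome of subpopulation g, where a higher value means better health. Let p_g be the model's performance for that subpopulation. Let ρ be a rank correlation computed across the G subpopulations. With these conventions, prioritizing the worse-off appears as a negative correlation. Its likelihood can be estimated by recomputing p_g on B bootstrap resamples of the evaluation data. This is a sketch under those assumptions; the paper's exact estimator may differ.

```latex
\mathrm{HEAL} \;=\; \Pr\!\Big(\rho\big(\{h_g\}_{g=1}^{G},\, \{p_g\}_{g=1}^{G}\big) < 0\Big)
\;\approx\; \frac{1}{B}\sum_{b=1}^{B} \mathbf{1}\!\left[\rho\big(h,\, p^{(b)}\big) < 0\right]
```

Here p^{(b)} denotes the per-subpopulation performance recomputed on bootstrap resample b, and 1[·] is the indicator function.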

This 4-step process is designed to inform improvements that make ML model performance more equitable. It is meant to be iterative and re-evaluated on a regular basis. For example, the availability of health outcomes data in step (2) can inform the choice of demographic factors and brackets in step (1). The framework can also be applied again with new datasets, models and populations.

With this work, we take a step towards encouraging explicit assessment of the health equity considerations of AI technologies. We also encourage prioritizing efforts during model development to reduce health inequities for subpopulations exposed to structural inequities that can precipitate disparate outcomes. We should note that the present framework does not model causal relationships. Therefore, it cannot quantify the actual impact a new technology will have on reducing health outcome disparities. However, the HEAL metric may help identify opportunities for improvement where current performance is not prioritized with respect to pre-existing health disparities.

Case study on a dermatology model

As an illustrative case study, we applied the framework to a dermatology model that uses a convolutional neural network similar to the one described in prior work. This example dermatology model was trained to classify 288 skin conditions using a development dataset of 29k cases. The input to the model consists of three photos of a skin concern, along with demographic information and a brief structured medical history. The output is a ranked list of possible matching skin conditions.

Using the HEAL framework, we evaluated whether this model prioritized performance with respect to pre-existing health outcomes. The model was designed to predict possible dermatologic conditions (from a list of hundreds) based on photos of a skin concern and patient metadata. We evaluated the model with a top-3 agreement metric, which quantifies how often the top 3 output conditions include the most likely condition as judged by a dermatologist panel. The HEAL metric is computed as the anticorrelation of this top-3 agreement with health outcome rankings.

We used a dataset of 5,420 teledermatology cases, enriched for diversity in age, sex and race/ethnicity, to retrospectively evaluate the model’s HEAL metric. The dataset consisted of “store-and-forward” cases from patients aged 20 years or older, drawn from primary care providers in the USA and skin cancer clinics in Australia. Based on a review of the literature, we decided to explore race/ethnicity, sex and age as potential factors of inequity. We used sampling techniques to ensure that our evaluation dataset had sufficient representation of all race/ethnicity, sex and age groups. To quantify pre-existing health outcomes for each subgroup, we relied on measurements from public databases endorsed by the World Health Organization, such as Years of Life Lost (YLLs) and Disability-Adjusted Life Years (DALYs; years of life lost plus years lived with disability).
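Step 2 of the framework, quantifying pre-existing disparities, can be sketched with the DALY definition in the paragraph above. A subgroup's burden is its YLLs plus its years lived with disability (YLDs), and subgroups are then ordered from worst to best outcome. In this minimal Python sketch, the function names are hypothetical. It assumes YLL and YLD figures are already available per subgroup on a common scale (e.g., per 100,000 people). The numbers in the usage line are placeholders, not values from the study.

```python
from typing import Dict, List, Tuple


def dalys(yll: float, yld: float) -> float:
    """Disability-Adjusted Life Years: years of life lost plus years lived with disability."""
    return yll + yld


def rank_by_burden(burden: Dict[str, Tuple[float, float]]) -> List[str]:
    """Order subgroups from worst to best outcome, i.e. by descending DALYs.

    burden maps a subgroup name to its (YLL, YLD) pair, assumed to be on a common scale.
    """
    return sorted(burden, key=lambda g: dalys(*burden[g]), reverse=True)


# Hypothetical placeholder rates, not figures from the study.
print(rank_by_burden({"group_a": (120.0, 40.0), "group_b": (90.0, 25.0)}))
# ['group_a', 'group_b']
```

A higher DALY burden means worse outcomes. If a downstream calculation expects "higher is better", the negated DALY value can serve as the outcome score.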

Our analysis estimated that the model was 80.5% likely to perform equitably across race/ethnicity subgroups and 92.1% likely to perform equitably across sexes.

However, while the model was likely to perform equitably across age groups for cancer conditions specifically, we found that it had room for improvement across age groups for non-cancer conditions. For example, people aged 70+ have the poorest health outcomes related to non-cancer skin conditions, yet the model did not prioritize performance for this subgroup.

Putting things in context

For holistic evaluation, the HEAL metric cannot be used in isolation. Instead, it should be contextualized alongside many other factors. These range from computational efficiency and data privacy to ethical values. They also include aspects that may influence the results, such as selection bias or differences in how representative the evaluation data are across demographic groups.

As an adversarial example, the HEAL metric can be artificially improved by deliberately reducing model performance for the most advantaged subpopulation until its performance is worse than that of all others. For illustration, take subpopulations A and B, where A has worse health outcomes than B, and consider a choice between two models. Model 1 (M1) performs 5 percentage points better for subpopulation A than for subpopulation B. Model 2 (M2) performs 5 percentage points worse for subpopulation A than for B. The HEAL metric would be higher for M1 because it prioritizes performance on the subpopulation with worse outcomes. However, M1 may have absolute performances of just 75% and 70% for subpopulations A and B respectively, while M2 has 75% and 80%. Choosing M1 over M2 would lead to worse overall performance: subpopulation B is worse off, while no subpopulation is better off.
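Written out, the arithmetic of this example shows the trade-off. The unweighted mean over the two subpopulations is an averaging choice made here only for illustration:

```latex
\begin{aligned}
\text{M1:}\quad & p_A - p_B = 75 - 70 = +5, & \bar{p} &= \tfrac{75 + 70}{2} = 72.5\\
\text{M2:}\quad & p_A - p_B = 75 - 80 = -5, & \bar{p} &= \tfrac{75 + 80}{2} = 77.5
\end{aligned}
```

M1 favors A by 5 points, but A's performance is 75% under both models. B's performance falls from 80% to 70%, so M1 is worse for B and no better for A.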

Accordingly, the HEAL metric should be used alongside a Pareto condition (discussed further in the paper). This condition restricts model changes so that outcomes for each subpopulation are either unchanged or improved compared with the status quo, and performance does not worsen for any subpopulation.
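Here is a minimal Python sketch of the condition as stated here: a candidate model is acceptable only if no subpopulation's performance falls below the status quo. The function name `satisfies_pareto` is hypothetical, and the paper's formal definition may differ in detail. In particular, this sketch compares point estimates exactly and ignores sampling uncertainty.

```python
from typing import Dict


def satisfies_pareto(candidate: Dict[str, float], status_quo: Dict[str, float]) -> bool:
    """True if no subpopulation's performance is lower under the candidate than the status quo."""
    if candidate.keys() != status_quo.keys():
        raise ValueError("both models must be evaluated on the same subpopulations")
    return all(candidate[g] >= status_quo[g] for g in status_quo)


# The two-model example: M2 as the status quo, M1 as the proposed change.
m1 = {"A": 75.0, "B": 70.0}
m2 = {"A": 75.0, "B": 80.0}
print(satisfies_pareto(m1, status_quo=m2))  # False: B drops from 80 to 70
print(satisfies_pareto(m2, status_quo=m1))  # True: A unchanged, B improves
```

Used as a filter, this check would rule out the switch from M2 to M1 regardless of which model scores higher on the HEAL metric.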

In its current form, the HEAL framework assesses the likelihood that an ML-based model prioritizes performance for subpopulations with respect to their pre-existing health disparities. This differs from the goal of understanding whether ML will actually reduce disparities in outcomes across subpopulations. Modeling improvements in outcomes requires a causal understanding of the steps in the care journey that happen both before and after any given model is used. Future research is needed to address this gap.

Conclusion

The HEAL framework enables a quantitative assessment of the likelihood that health AI technologies prioritize performance with respect to health disparities. The case study demonstrates how to apply the framework in the dermatological domain. It indicates a high likelihood that model performance is prioritized with respect to health disparities across sex and race/ethnicity. It also reveals potential for improvement for non-cancer conditions across age. Finally, the case study illustrates limitations in the ability to apply all recommended aspects of the framework (e.g., mapping societal context, availability of data), highlighting the complexity of health equity considerations for ML-based tools.

This work is a proposed approach to a grand challenge for AI and health equity. It may provide a useful evaluation framework not only during model development, but also during pre-implementation and real-world monitoring, e.g., in the form of health equity dashboards. We hold that the strength of the HEAL framework lies in its future application to various AI tools and use cases, and in its refinement along the way. Finally, we acknowledge that a successful approach to understanding the impact of AI technologies on health equity needs to be more than a set of metrics. It will require a set of goals agreed upon by a community that represents those who will be most impacted by a model.

Acknowledgements

The research described here is joint work across many teams at Google. We are grateful to all our co-authors: Terry Spitz, Malcolm Pyles, Heather Cole-Lewis, Ellery Wulczyn, Stephen R. Pfohl, Donald Martin, Jr., Ronnachai Jaroensri, Geoff Keeling, Yuan Liu, Stephanie Farquhar, Qinghan Xue, Jenna Lester, Cían Hughes, Patricia Strachan, Fraser Tan, Peggy Bui, Craig H. Mermel, Lily H. Peng, Yossi Matias, Greg S. Corrado, Dale R. Webster, Sunny Virmani, Christopher Semturs, Yun Liu, and Po-Hsuan Cameron Chen. We also thank Lauren Winer, Sami Lachgar, Ting-An Lin, Aaron Loh, Morgan Du, Jenny Rizk, Renee Wong, Ashley Carrick, Preeti Singh, Annisah Um’rani, Jessica Schrouff, Alexander Brown, and Anna Iurchenko for their support of this project.