Revolutionizing LLM Training with GaLore: A New Machine Learning Approach to Enhance Memory Efficiency without Compromising Performance

Training large language models (LLMs) is a significant challenge because of how much memory it requires. The conventional way to reduce memory consumption, compressing or constraining the model weights, often degrades performance. A new method, Gradient Low-Rank Projection (GaLore), developed by researchers from the California Institute of Technology, Meta AI, the University of Texas at Austin, and Carnegie Mellon University, offers a fresh perspective. GaLore targets the gradients rather than the model weights, an approach that promises better memory efficiency without compromising model performance.

Rather than constraining the weights, GaLore projects the gradients into a lower-dimensional space. Because only the gradients (and the optimizer state derived from them) are compressed, the weights themselves are still updated in full, so GaLore can explore the entire parameter space while balancing memory efficiency against model performance. The approach has shown promise in matching or surpassing full-rank training, particularly during the pre-training and fine-tuning phases of LLM development.
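To make the projection step concrete, here is a minimal NumPy sketch. It assumes the projector is built from the top-r left singular vectors of the gradient (obtained with an SVD); the helper names `top_r_projector`, `project`, and `project_back` are hypothetical, and for simplicity the sketch always compresses the row dimension of the gradient.

```python
import numpy as np

def top_r_projector(grad: np.ndarray, rank: int) -> np.ndarray:
    """Return an m x r matrix whose columns are the top-r left singular vectors of grad."""
    u, _, _ = np.linalg.svd(grad, full_matrices=False)
    return u[:, :rank]

def project(grad: np.ndarray, p: np.ndarray) -> np.ndarray:
    """Compress an m x n gradient to an r x n representation."""
    return p.T @ grad

def project_back(low_rank: np.ndarray, p: np.ndarray) -> np.ndarray:
    """Lift an r x n update back to the full m x n weight shape."""
    return p @ low_rank

rng = np.random.default_rng(0)
m, n, r = 64, 32, 4
# Build a gradient that is exactly rank r, so the projection loses nothing.
grad = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))
p = top_r_projector(grad, r)
compact = project(grad, p)          # shape (r, n): this is what gets stored
restored = project_back(compact, p)
print(compact.shape, np.allclose(restored, grad))
```

Because the example gradient is exactly rank r, the round trip is lossless and the script prints `(4, 32) True`. A real gradient is only approximately low-rank, so the round trip would discard its smaller singular directions.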

GaLore's core innovation lies in how it handles the gradient projection, reducing memory usage in optimizer states by up to 65.5% without sacrificing training efficiency. Optimizers such as Adam keep per-entry statistics of the gradient, so maintaining those statistics for a compact, projected representation of the gradient instead of the full one yields substantial memory savings while preserving the training dynamics. As a result, GaLore makes it possible to train models with billions of parameters on standard consumer-grade GPUs, which was previously feasible only with complex model parallelism or extensive computational resources.
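The memory argument can be checked with back-of-the-envelope arithmetic. The sketch below assumes Adam, which stores two moment values per weight entry, and assumes a GaLore-style layout in which those moments are kept for the r × n projected gradient while the m × r projector is stored alongside. The layer shape and rank are hypothetical, and this single-layer result is not meant to reproduce the 65.5% figure reported for full training runs.

```python
def adam_state_floats(m: int, n: int) -> int:
    """Adam keeps a first and a second moment estimate for every weight entry."""
    return 2 * m * n

def galore_state_floats(m: int, n: int, r: int) -> int:
    """Assumed layout: Adam moments on the r x n projected gradient plus the m x r projector."""
    return 2 * r * n + m * r

m, n, r = 4096, 4096, 128  # hypothetical layer shape and projection rank
full = adam_state_floats(m, n)
low = galore_state_floats(m, n, r)
print(full, low, f"{1 - low / full:.1%}")
```

For this hypothetical layer the script prints `33554432 1572864 95.3%`: the optimizer state shrinks from 2mn values to 2rn + mr values.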

GaLore's effectiveness extends to its compatibility with various optimization algorithms, which makes it easy to integrate into existing training pipelines. Applied to pre-training and fine-tuning across different benchmarks, GaLore has delivered competitive results with significantly lower memory requirements. For instance, GaLore has enabled pre-training of models with up to 7 billion parameters on consumer GPUs, a milestone in LLM training that underscores the method's potential to transform how models are developed.
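Compatibility with different optimizers follows from the fact that the optimizer only ever sees the compact gradient. The hypothetical loop below illustrates this with two interchangeable update rules, plain SGD and Adam, each holding state sized r × n. The toy least-squares objective, the refresh interval, and all class and function names are assumptions for illustration, and details of the published method are omitted (for example, optimizer moments here are simply carried over when the projector is refreshed).

```python
import numpy as np

class SGD:
    """Stateless update rule: step = lr * g."""
    def __init__(self, shape, lr=0.1):
        self.lr = lr

    def step(self, g):
        return self.lr * g

class Adam:
    """Adam whose moment buffers have the shape of the compact r x n gradient."""
    def __init__(self, shape, lr=0.1, b1=0.9, b2=0.999, eps=1e-8):
        self.m, self.v = np.zeros(shape), np.zeros(shape)
        self.lr, self.b1, self.b2, self.eps, self.t = lr, b1, b2, eps, 0

    def step(self, g):
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * g
        self.v = self.b2 * self.v + (1 - self.b2) * g * g
        m_hat = self.m / (1 - self.b1 ** self.t)
        v_hat = self.v / (1 - self.b2 ** self.t)
        return self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

def galore_style_train(w, grad_fn, rank, steps, refresh_every, opt_cls):
    """Hypothetical loop: any optimizer runs on the projected gradient."""
    opt = opt_cls((rank, w.shape[1]))  # state sized r x n, not m x n
    p = None
    for step in range(steps):
        g = grad_fn(w)
        if step % refresh_every == 0:
            # Periodically recompute the projector from the current gradient.
            u, _, _ = np.linalg.svd(g, full_matrices=False)
            p = u[:, :rank]
        w = w - p @ opt.step(p.T @ g)  # project, take an optimizer step, project back
    return w

# Toy problem (assumption): fit a rank-2 target with loss 0.5 * ||W - target||^2.
rng = np.random.default_rng(0)
target = rng.standard_normal((16, 2)) @ rng.standard_normal((2, 8))

def loss(w):
    return 0.5 * np.sum((w - target) ** 2)

def grad_fn(w):
    return w - target

w0 = np.zeros_like(target)
for opt_cls in (SGD, Adam):
    w = galore_style_train(w0, grad_fn, rank=2, steps=300, refresh_every=100, opt_cls=opt_cls)
    print(opt_cls.__name__, loss(w0), loss(w))
```

Swapping `SGD` for `Adam` changes only the `opt_cls` argument; the projection logic stays untouched, which is the kind of optimizer independence described above.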

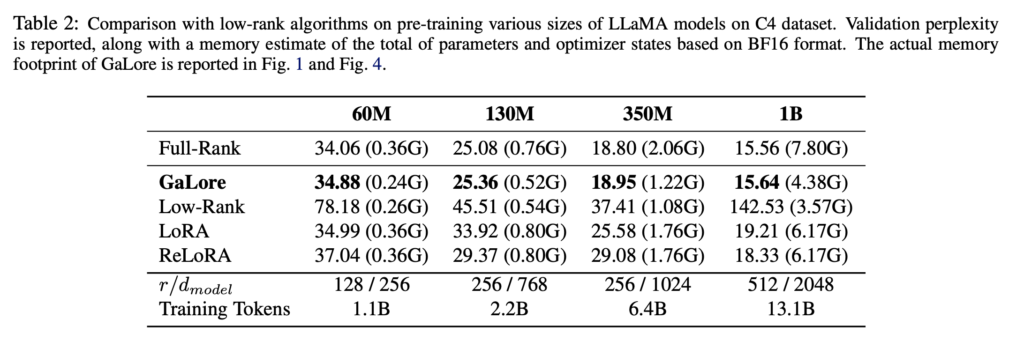

Comprehensive evaluations have shown GaLore outperforming other low-rank adaptation methods. When applied to large-scale language models, GaLore saves memory and achieves comparable or better results, underscoring its effectiveness as a training strategy. This is especially evident in pre-training and fine-tuning on established NLP benchmarks, where GaLore's memory-efficient approach does not compromise the quality of the results.

GaLore represents a significant breakthrough in LLM training, offering a powerful solution to the longstanding problem of memory-intensive model development. Through its gradient projection technique, GaLore achieves exceptional memory efficiency while preserving, and in some cases improving, model performance. Its compatibility with various optimization algorithms further establishes it as a versatile and impactful tool for researchers and practitioners. GaLore marks a pivotal moment in the democratization of LLM training and could accelerate progress in natural language processing and related fields.

In conclusion, key takeaways from the research include:

- GaLore significantly reduces memory usage when training large language models without compromising performance.

- It uses a novel gradient projection method that still lets training explore the full parameter space, improving training efficiency.

- GaLore is compatible with various optimization algorithms and integrates seamlessly into existing model training workflows.

- Comprehensive evaluations have confirmed GaLore's ability to deliver competitive results on pre-training and fine-tuning benchmarks, demonstrating its potential to revolutionize LLM training.

Check out the Paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.