Streamline diarization using AI as an assistive technology: ZOO Digital’s story

ZOO Digital provides end-to-end localization and media services to adapt original TV and movie content to different languages, regions, and cultures. It makes globalization easier for the world’s best content creators. Trusted by the biggest names in entertainment, ZOO Digital delivers high-quality localization and media services at scale, including dubbing, subtitling, scripting, and compliance.

Typical localization workflows require manual speaker diarization, wherein an audio stream is segmented based on the identity of the speaker. This time-consuming process must be completed before content can be dubbed into another language. With manual methods, a 30-minute episode can take between 1 and 3 hours to localize. Through automation, ZOO Digital aims to achieve localization in under 30 minutes.

In this post, we discuss deploying scalable machine learning (ML) models for diarizing media content using Amazon SageMaker, with a focus on the WhisperX model.

Background

ZOO Digital’s vision is to provide a faster turnaround of localized content. This goal is bottlenecked by the manually intensive nature of the exercise, compounded by the small workforce of skilled people who can localize content manually. ZOO Digital works with over 11,000 freelancers and localized over 600 million words in 2022 alone. However, the supply of skilled people is being outstripped by the growing demand for content, requiring automation to assist with localization workflows.

With the aim of accelerating localization workflows through machine learning, ZOO Digital engaged AWS Prototyping, an investment program by AWS to co-build workloads with customers. The engagement focused on delivering a functional solution for the localization process, while providing hands-on training to ZOO Digital developers on SageMaker, Amazon Transcribe, and Amazon Translate.

Customer challenge

After a title (a movie or an episode of a TV series) has been transcribed, speakers must be assigned to each segment of speech so that the segments can be correctly assigned to the voice artists who are cast to play the characters. This process is called speaker diarization. ZOO Digital faces the challenge of diarizing content at scale while remaining economically viable.

Solution overview

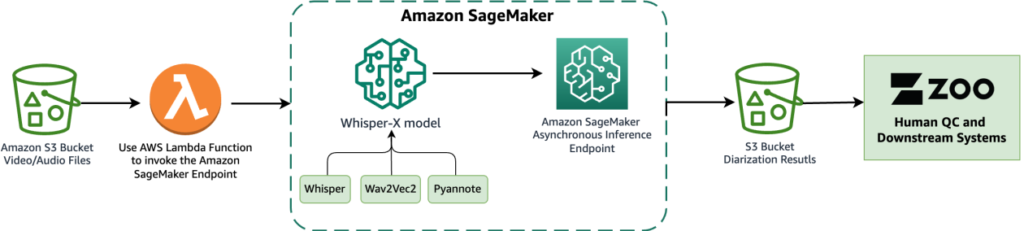

In this prototype, we stored the original media files in a specified Amazon Simple Storage Service (Amazon S3) bucket. This S3 bucket was configured to emit an event when new files are detected within it, triggering an AWS Lambda function. For instructions on configuring this trigger, refer to the tutorial Using an Amazon S3 trigger to invoke a Lambda function. Subsequently, the Lambda function invoked the SageMaker endpoint for inference using the Boto3 SageMaker Runtime client.
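The Lambda step can be sketched as follows. This is a minimal, hypothetical handler rather than the prototype’s actual code: it assumes the endpoint name is supplied through an `ENDPOINT_NAME` environment variable and that the target is an asynchronous endpoint, so it calls `invoke_endpoint_async` with the S3 location of the uploaded file instead of sending the media bytes in the request body. The content type `application/octet-stream` is also an assumption.

```python
import os
import urllib.parse


def s3_uris_from_event(event: dict) -> list:
    """Extract s3://bucket/key URIs from an S3 event notification."""
    uris = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (spaces become '+')
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        uris.append(f"s3://{bucket}/{key}")
    return uris


def lambda_handler(event, context, runtime_client=None):
    """Hypothetical handler: queue one asynchronous inference request per uploaded file."""
    if runtime_client is None:
        import boto3  # imported lazily so the parsing logic can be tested without AWS access
        runtime_client = boto3.client("sagemaker-runtime")

    endpoint_name = os.environ["ENDPOINT_NAME"]  # assumption: configured on the Lambda function
    inference_ids = []
    for input_uri in s3_uris_from_event(event):
        response = runtime_client.invoke_endpoint_async(
            EndpointName=endpoint_name,
            InputLocation=input_uri,
            ContentType="application/octet-stream",
        )
        # The endpoint writes the result to its configured S3 output path later
        inference_ids.append(response["InferenceId"])
    return {"inference_ids": inference_ids}
```

Passing the S3 location rather than the file contents keeps the Lambda payload small, which matters for media files of several hundred megabytes.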

The WhisperX model, based on OpenAI’s Whisper, performs transcription and diarization for media assets. It’s built upon the Faster Whisper reimplementation, offering up to 4 times faster transcription with improved word-level timestamp alignment compared to Whisper. Additionally, it introduces speaker diarization, which is not present in the original Whisper model. WhisperX uses the Whisper model for transcription, the Wav2Vec2 model to enhance timestamp alignment (ensuring synchronization of transcribed text with audio timestamps), and the pyannote model for diarization. FFmpeg is used for loading audio from source media, supporting various media formats. The clean and modular model architecture allows flexibility, because each component of the model can be swapped out as needed in the future. However, it’s essential to note that WhisperX lacks full management features and isn’t an enterprise-level product. Without maintenance and support, it may not be suitable for production deployment.

In this collaboration, we deployed and evaluated WhisperX on SageMaker, using an asynchronous inference endpoint to host the model. SageMaker asynchronous endpoints support upload sizes up to 1 GB and incorporate auto scaling features that efficiently mitigate traffic spikes and save costs during off-peak times. Asynchronous endpoints are particularly well-suited for processing large files, such as the movies and TV series in our use case.
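As a sketch of the auto scaling side, the following builds the request parameters for the Application Auto Scaling API so that an asynchronous endpoint scales on the `ApproximateBacklogSizePerInstance` CloudWatch metric that SageMaker publishes for asynchronous endpoints. The endpoint name, variant name (`AllTraffic`), capacity limits, cooldowns, and target value are illustrative assumptions, not the values used in the prototype.

```python
def autoscaling_requests(endpoint_name: str,
                         variant_name: str = "AllTraffic",
                         min_capacity: int = 1,
                         max_capacity: int = 4,
                         target_backlog_per_instance: float = 2.0) -> tuple:
    """Return kwargs for register_scalable_target and put_scaling_policy."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"

    register_kwargs = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }
    policy_kwargs = {
        "PolicyName": f"{endpoint_name}-backlog-scaling",
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep the number of queued requests per instance near the target
            "TargetValue": target_backlog_per_instance,
            "CustomizedMetricSpecification": {
                "MetricName": "ApproximateBacklogSizePerInstance",
                "Namespace": "AWS/SageMaker",
                "Dimensions": [{"Name": "EndpointName", "Value": endpoint_name}],
                "Statistic": "Average",
            },
            "ScaleInCooldown": 600,
            "ScaleOutCooldown": 300,
        },
    }
    return register_kwargs, policy_kwargs
```

With boto3, these would be passed to an `application-autoscaling` client as `register_scalable_target(**register_kwargs)` followed by `put_scaling_policy(**policy_kwargs)`. Asynchronous endpoints can also scale in to zero instances, but a per-instance target tracking metric cannot scale out from zero, so that setup would additionally need a step scaling policy on the `HasBacklogWithoutCapacity` metric.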

The following diagram illustrates the core components of the experiments we conducted in this collaboration.

In the following sections, we delve into the details of deploying the WhisperX model on SageMaker, and evaluate the diarization performance.

Download the model and its components

WhisperX is a system that includes multiple models for transcription, forced alignment, and diarization. For smooth SageMaker operation without the need to fetch model artifacts during inference, it’s essential to pre-download all model artifacts. These artifacts are then loaded into the SageMaker serving container during initialization. Because these models aren’t directly accessible, we offer descriptions and sample code from the WhisperX source, providing instructions on downloading the model and its components.

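WhisperX uses six models:

- The Faster Whisper large-v2 model (guillaumekln/faster-whisper-large-v2) for transcription
- The voice activity detection (VAD) segmentation model, hosted by WhisperX on Amazon S3
- The Wav2Vec2 model (WAV2VEC2_ASR_BASE_960H) for forced alignment
- pyannote’s speaker diarization pipeline (pyannote/speaker-diarization)
- The segmentation model referenced in the diarization pipeline’s config
- The speaker embedding model referenced in the diarization pipeline’s config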

Most of these models can be obtained from Hugging Face using the huggingface_hub library. We use the following download_hf_model() function to retrieve these model artifacts. A Hugging Face access token, generated after accepting the user agreements for the pyannote models used here, is required:

import os
import urllib.request

import huggingface_hub
import torchaudio
import yaml

# Path where SageMaker extracts model.tar.gz inside the serving container
CONTAINER_MODEL_DIR = "/opt/ml/model"
WHISPERX_MODEL = "guillaumekln/faster-whisper-large-v2"
VAD_MODEL_URL = "https://whisperx.s3.eu-west-2.amazonaws.com/model_weights/segmentation/0b5b3216d60a2d32fc086b47ea8c67589aaeb26b7e07fcbe620d6d0b83e209ea/pytorch_model.bin"
WAV2VEC2_MODEL = "WAV2VEC2_ASR_BASE_960H"
DIARIZATION_MODEL = "pyannote/speaker-diarization"


def download_hf_model(model_name: str, hf_token: str, local_model_dir: str) -> str:
    """
    Fetches the given model from Hugging Face and returns the subdirectory it is downloaded to

    :param model_name: Hugging Face model name (and an optional version, appended with @[version])
    :param hf_token: Hugging Face access token authorized to access the requested model
    :param local_model_dir: The local directory to download the model to
    :return: The subdirectory within local_model_dir that the model is downloaded to
    """
    # Split "org/name@version" into the repo ID and the optional revision (branch, tag, or commit)
    model_subdir, _, revision = model_name.partition('@')
    huggingface_hub.snapshot_download(model_subdir,
                                      revision=revision or None,
                                      token=hf_token,
                                      local_dir=f"{local_model_dir}/{model_subdir}",
                                      local_dir_use_symlinks=False)
    return model_subdir

The VAD model is fetched from Amazon S3, and the Wav2Vec2 model is retrieved from the torchaudio.pipelines module. Using the following code, we can retrieve all the models’ artifacts, including those from Hugging Face, and save them to the specified local model directory:

def fetch_models(hf_token: str, local_model_dir="./models"):
    """
    Fetches all required models to run WhisperX locally without downloading models each time

    :param hf_token: A Hugging Face access token to download the models
    :param local_model_dir: The directory to download the models to
    """
    # Fetch Faster Whisper's Large V2 model from Hugging Face
    download_hf_model(model_name=WHISPERX_MODEL, hf_token=hf_token, local_model_dir=local_model_dir)

    # Fetch WhisperX's VAD segmentation model from S3
    vad_model_dir = "whisperx/vad"
    if not os.path.exists(f"{local_model_dir}/{vad_model_dir}"):
        os.makedirs(f"{local_model_dir}/{vad_model_dir}")
    urllib.request.urlretrieve(VAD_MODEL_URL, f"{local_model_dir}/{vad_model_dir}/pytorch_model.bin")

    # Fetch the Wav2Vec2 alignment model
    torchaudio.pipelines.__dict__[WAV2VEC2_MODEL].get_model(dl_kwargs={"model_dir": f"{local_model_dir}/wav2vec2/"})

    # Fetch pyannote's Speaker Diarization model from Hugging Face
    download_hf_model(model_name=DIARIZATION_MODEL,
                      hf_token=hf_token,
                      local_model_dir=local_model_dir)

    # Read in the Speaker Diarization model config to fetch its sub-models and point it at their container paths
    with open(f"{local_model_dir}/{DIARIZATION_MODEL}/config.yaml", 'r') as file:
        diarization_config = yaml.safe_load(file)

    embedding_model = diarization_config['pipeline']['params']['embedding']
    embedding_model_dir = download_hf_model(model_name=embedding_model,
                                            hf_token=hf_token,
                                            local_model_dir=local_model_dir)
    diarization_config['pipeline']['params']['embedding'] = f"{CONTAINER_MODEL_DIR}/{embedding_model_dir}"

    segmentation_model = diarization_config['pipeline']['params']['segmentation']
    segmentation_model_dir = download_hf_model(model_name=segmentation_model,
                                               hf_token=hf_token,
                                               local_model_dir=local_model_dir)
    diarization_config['pipeline']['params']['segmentation'] = f"{CONTAINER_MODEL_DIR}/{segmentation_model_dir}/pytorch_model.bin"

    with open(f"{local_model_dir}/{DIARIZATION_MODEL}/config.yaml", 'w') as file:
        yaml.safe_dump(diarization_config, file)

    # Read in the Speaker Embedding model config to update it with its container path
    speechbrain_hyperparams_path = f"{local_model_dir}/{embedding_model_dir}/hyperparams.yaml"
    with open(speechbrain_hyperparams_path, 'r') as file:
        speechbrain_hyperparams = file.read()

    speechbrain_hyperparams = speechbrain_hyperparams.replace(embedding_model_dir, f"{CONTAINER_MODEL_DIR}/{embedding_model_dir}")

    with open(speechbrain_hyperparams_path, 'w') as file:
        file.write(speechbrain_hyperparams)

Select the appropriate AWS Deep Learning Container for serving the model

After the model artifacts are saved using the preceding sample code, you can choose a pre-built AWS Deep Learning Container (DLC) from the following GitHub repo. When selecting the Docker image, consider the following settings: framework (Hugging Face), task (inference), Python version, and hardware (for example, GPU). We recommend using the following image: 763104351884.dkr.ecr.[REGION].amazonaws.com/huggingface-pytorch-inference:2.0.0-transformers4.28.1-gpu-py310-cu118-ubuntu20.04. This image has all the necessary system packages, such as ffmpeg, pre-installed. Remember to replace [REGION] with the AWS Region you are using.

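For other required Python packages, create a requirements.txt file that lists the packages and their versions. The SageMaker Hugging Face inference container installs the packages listed in this file when the endpoint container starts, on top of what the AWS DLC already provides. The file should list the additional packages needed to host the WhisperX model on SageMaker, such as the whisperx package that the inference script imports.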

Create an inference script to load the models and run inference

Next, we create a custom inference.py script to define how the WhisperX model and its components are loaded into the container and how the inference process should be run. The script contains two functions: model_fn and transform_fn. The model_fn function is invoked to load the models from their respective locations. These models are then passed to the transform_fn function during inference, where transcription, alignment, and diarization are performed. The following is a code sample for inference.py:

import json
import logging
import os
import tempfile
import time

import torch
import whisperx

DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'
# float16 requires a GPU; fall back to int8 so the CPU path can still load the model
COMPUTE_TYPE = 'float16' if DEVICE == 'cuda' else 'int8'


def model_fn(model_dir: str) -> dict:
    """
    Deserialize and return the models
    """
    logging.info("Loading WhisperX model")
    model = whisperx.load_model(whisper_arch=f"{model_dir}/guillaumekln/faster-whisper-large-v2",
                                device=DEVICE,
                                language="en",
                                compute_type=COMPUTE_TYPE,
                                vad_options={'model_fp': f"{model_dir}/whisperx/vad/pytorch_model.bin"})

    logging.info("Loading alignment model")
    align_model, metadata = whisperx.load_align_model(language_code="en",
                                                      device=DEVICE,
                                                      model_name="WAV2VEC2_ASR_BASE_960H",
                                                      model_dir=f"{model_dir}/wav2vec2")

    logging.info("Loading diarization model")
    diarization_model = whisperx.DiarizationPipeline(model_name=f"{model_dir}/pyannote/speaker-diarization/config.yaml",
                                                     device=DEVICE)

    return {
        'model': model,
        'align_model': align_model,
        'metadata': metadata,
        'diarization_model': diarization_model
    }


def transform_fn(model: dict, request_body: bytes, request_content_type: str, response_content_type="application/json") -> tuple:
    """
    Load in audio from the request, transcribe and diarize, and return JSON output
    """
    # Start a timer so that we can log how long inference takes
    start_time = time.time()

    # Unpack the models
    whisperx_model = model['model']
    align_model = model['align_model']
    metadata = model['metadata']
    diarization_model = model['diarization_model']

    # Write the media file (the request_body as bytes) to a temporary file, then use WhisperX (FFmpeg) to load the audio from it
    logging.info("Loading audio")
    with tempfile.NamedTemporaryFile(delete=False) as tfile:
        tfile.write(request_body)  # the file is flushed and closed when the block exits
    try:
        audio = whisperx.load_audio(tfile.name)
    finally:
        # Remove the temporary file once the audio has been decoded
        os.remove(tfile.name)

    # Run transcription
    logging.info("Transcribing audio")
    result = whisperx_model.transcribe(audio, batch_size=16)

    # Align the outputs for better timings
    logging.info("Aligning outputs")
    result = whisperx.align(result["segments"], align_model, metadata, audio, DEVICE, return_char_alignments=False)

    # Run diarization and attach speaker labels to the aligned words and segments
    logging.info("Running diarization")
    diarize_segments = diarization_model(audio)
    result = whisperx.assign_word_speakers(diarize_segments, result)

    # Calculate the time it took to perform the transcription and diarization
    elapsed_time = time.time() - start_time
    logging.info(f"Transcription and Diarization took {int(elapsed_time)} seconds")

    # Return the results to be saved in S3
    return json.dumps(result), response_content_type

Within the model’s directory, place inference.py in a code subdirectory, alongside the requirements.txt file. The models directory should contain the downloaded model artifacts together with this code subdirectory.
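Because the expected layout follows from the paths used in `fetch_models()` and `model_fn()`, a small check like the following can catch mistakes before packaging. The helper name and the list of paths are assumptions derived from those two code samples. The segmentation and speaker embedding model directories are named after the repositories referenced in the downloaded pyannote config, so they are deliberately not hardcoded here.

```python
import os

# Paths (relative to the model directory) that the sample code reads from.
# This list is an assumption based on fetch_models() and model_fn().
EXPECTED_PATHS = [
    "code/inference.py",
    "code/requirements.txt",
    "guillaumekln/faster-whisper-large-v2",
    "whisperx/vad/pytorch_model.bin",
    "wav2vec2",
    "pyannote/speaker-diarization/config.yaml",
]


def missing_model_paths(model_dir: str, expected=EXPECTED_PATHS) -> list:
    """Return the expected paths that do not exist under model_dir."""
    return [p for p in expected if not os.path.exists(os.path.join(model_dir, p))]
```

For example, `missing_model_paths("./models")` should return an empty list before the directory is compressed.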

Create a tarball of the models

After you create the models and code directories, you can compress the model directory into a tarball (.tar.gz file) and upload it to Amazon S3. At the time of writing, using the faster-whisper Large V2 model, the resulting tarball representing the SageMaker model is 3 GB in size. For more information, refer to Model hosting patterns in Amazon SageMaker, Part 2: Getting started with deploying real time models on SageMaker.
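As a Python alternative to shell commands, the following sketch packages the directory with the standard library `tarfile` module so that `code/` and the model folders sit at the root of the archive (SageMaker extracts the archive into `/opt/ml/model`), then uploads it with boto3. The function names, bucket, and key are hypothetical placeholders.

```python
import os
import tarfile


def create_model_tarball(model_dir: str, output_path: str = "model.tar.gz") -> str:
    """Compress the contents of model_dir into a .tar.gz with paths relative to model_dir.

    output_path should be outside model_dir, otherwise the archive would include itself.
    """
    with tarfile.open(output_path, "w:gz") as tar:
        for entry in sorted(os.listdir(model_dir)):
            # arcname keeps entries at the archive root (for example, "code/inference.py")
            tar.add(os.path.join(model_dir, entry), arcname=entry)
    return output_path


def upload_to_s3(local_path: str, bucket: str, key: str) -> str:
    """Upload the tarball and return its S3 URI (requires AWS credentials)."""
    import boto3  # imported lazily so packaging works without AWS dependencies

    # upload_file switches to multipart uploads for large files such as a 3 GB archive
    boto3.client("s3").upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

Gzip-compressing several gigabytes of weights takes a while; the resulting S3 URI is what the SageMaker model definition points to.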

Create a SageMaker model and deploy an endpoint with an asynchronous predictor

Now you can create the SageMaker model, endpoint config, and asynchronous endpoint with AsyncPredictor using the model tarball created in the previous step. For instructions, refer to Create an Asynchronous Inference Endpoint.
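For reference, the same three resources can also be created with the low-level SageMaker API instead of the SageMaker Python SDK’s `AsyncPredictor`. The following sketch only assembles the request parameters for boto3’s `create_model`, `create_endpoint_config`, and `create_endpoint` calls. The resource names, IAM role ARN, instance type (`ml.g5.2xlarge`), concurrency setting, and S3 output path are hypothetical placeholders; the image URI is the recommended DLC with the Region filled in.

```python
def async_endpoint_requests(name: str, role_arn: str, model_data_url: str,
                            output_s3_path: str, region: str,
                            instance_type: str = "ml.g5.2xlarge") -> dict:
    """Build kwargs for create_model, create_endpoint_config, and create_endpoint."""
    image = (f"763104351884.dkr.ecr.{region}.amazonaws.com/huggingface-pytorch-inference:"
             "2.0.0-transformers4.28.1-gpu-py310-cu118-ubuntu20.04")
    config_name = f"{name}-config"
    return {
        "create_model": {
            "ModelName": name,
            "ExecutionRoleArn": role_arn,
            # The container extracts the tarball into /opt/ml/model and uses code/inference.py
            "PrimaryContainer": {"Image": image, "ModelDataUrl": model_data_url},
        },
        "create_endpoint_config": {
            "EndpointConfigName": config_name,
            "ProductionVariants": [{
                "VariantName": "AllTraffic",
                "ModelName": name,
                "InstanceType": instance_type,
                "InitialInstanceCount": 1,
            }],
            # AsyncInferenceConfig is what makes this an asynchronous endpoint
            "AsyncInferenceConfig": {
                "OutputConfig": {"S3OutputPath": output_s3_path},
                "ClientConfig": {"MaxConcurrentInvocationsPerInstance": 1},
            },
        },
        "create_endpoint": {"EndpointName": name, "EndpointConfigName": config_name},
    }
```

Each entry would be passed to the boto3 `sagemaker` client method of the same name, for example `sm.create_model(**requests["create_model"])`. Limiting concurrency to one invocation per instance is a cautious choice for a large model on a single GPU.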

Evaluate diarization performance

To assess the diarization performance of the WhisperX model in different scenarios, we selected three episodes each from two English titles: one drama title consisting of 30-minute episodes, and one documentary title consisting of 45-minute episodes. We used pyannote’s metrics toolkit, pyannote.metrics, to calculate the diarization error rate (DER). In the evaluation, manually transcribed and diarized transcripts provided by ZOO served as the ground truth.

We defined the DER as follows:

$$\mathrm{DER} = \frac{\mathrm{FA} + \mathrm{Miss} + \mathrm{Error}}{\mathrm{Total}}$$

Total is the total duration of speech in the ground truth, rather than the full running time of the video. FA (False Alarm) is the length of segments that are considered speech in the prediction, but not in the ground truth. Miss is the length of segments that are considered speech in the ground truth, but not in the prediction. Error, also called Confusion, is the length of segments that are assigned to different speakers in the prediction and the ground truth. All units are in seconds. Typical DER values vary depending on the specific application, the dataset, and the quality of the diarization system. Note that DER can be larger than 1.0, because false alarm time is not bounded by the duration of reference speech. A lower DER is better.
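As a worked check of this definition, the following computes DER from its components. Assuming the Miss, Error, and False Alarm percentages in the results table are expressed as fractions of Total (which is consistent with the reported numbers), the drama row gives 0.218 + 0.333 + 0.187 = 0.738, matching its DER. The 100-second reference duration is purely illustrative.

```python
def diarization_error_rate(total: float, false_alarm: float, miss: float, confusion: float) -> float:
    """DER = (FA + Miss + Error) / Total, with all durations in seconds."""
    if total <= 0:
        raise ValueError("total reference speech duration must be positive")
    return (false_alarm + miss + confusion) / total


# Drama row on a hypothetical 100 seconds of reference speech:
# 18.7 s false alarm, 21.8 s missed, 33.3 s confused
print(round(diarization_error_rate(100.0, 18.7, 21.8, 33.3), 3))  # 0.738

# Documentary row: false alarm alone exceeds Total, so DER goes above 1.0
print(round(diarization_error_rate(100.0, 123.4, 5.3, 0.2), 3))  # 1.289
```

The documentary result (1.289, reported as 1.29) shows how a large false alarm component can push DER past 1.0 even when miss and confusion are small.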

To calculate the DER for a piece of media, both a ground truth diarization and the WhisperX transcribed and diarized output are required. These must be parsed into lists of tuples containing a speaker label, the speech segment start time, and the speech segment end time for each segment of speech in the media. The speaker labels don’t need to match between the WhisperX and ground truth diarizations, because pyannote.metrics maps hypothesis speakers to reference speakers before scoring; the results depend primarily on the timing of the segments. pyannote.metrics takes these tuples of ground truth diarizations and output diarizations (referred to in the pyannote.metrics documentation as reference and hypothesis) to calculate the DER. The following table summarizes our results.

| Video Type | DER | Correct | Miss | Error | False Alarm |
|---|---|---|---|---|---|
| Drama | 0.738 | 44.80% | 21.80% | 33.30% | 18.70% |
| Documentary | 1.29 | 94.50% | 5.30% | 0.20% | 123.40% |
| Average | 0.901 | 71.40% | 13.50% | 15.10% | 61.50% |

These results reveal a significant performance difference between the drama and documentary titles, with the model achieving notably better results (using DER as an aggregate metric) for the drama episodes than for the documentary title. The documentary’s DER is driven almost entirely by false alarms (123.4%), while its miss and confusion rates are low (5.3% and 0.2%), which indicates that non-speech audio is being labeled as speech. A closer analysis of the titles provides insights into potential factors contributing to this performance gap. One key factor could be the frequent presence of background music overlapping with speech in the documentary title. Although preprocessing media to enhance diarization accuracy, such as removing background noise to isolate speech, was beyond the scope of this prototype, it opens avenues for future work that could potentially improve the performance of WhisperX.
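The parsing and scoring steps described earlier in this section can be sketched as follows. This is an illustrative sketch, not ZOO’s evaluation code: it assumes the WhisperX output is the JSON returned by `transform_fn` (a dict whose `segments` carry `start`, `end`, and, when one was assigned, `speaker`), and that the ground truth has already been parsed into the same `(speaker, start, end)` tuples. The pyannote imports are deferred so the parsing helper can be used on its own.

```python
def whisperx_to_tuples(result: dict) -> list:
    """Convert WhisperX output into (speaker, start, end) tuples, skipping unlabeled segments."""
    tuples = []
    for segment in result.get("segments", []):
        speaker = segment.get("speaker")
        if speaker is None or "start" not in segment or "end" not in segment:
            continue  # diarization can leave some segments without a speaker label
        tuples.append((speaker, float(segment["start"]), float(segment["end"])))
    return tuples


def compute_der(reference: list, hypothesis: list, detailed: bool = False):
    """Score hypothesis tuples against reference tuples with pyannote.metrics."""
    from pyannote.core import Annotation, Segment
    from pyannote.metrics.diarization import DiarizationErrorRate

    def to_annotation(tuples):
        annotation = Annotation()
        for speaker, start, end in tuples:
            annotation[Segment(start, end)] = speaker
        return annotation

    # DiarizationErrorRate maps hypothesis labels to reference labels before scoring,
    # so "SPEAKER_00" does not need to match the ground truth character names
    metric = DiarizationErrorRate()
    return metric(to_annotation(reference), to_annotation(hypothesis), detailed=detailed)
```

With `detailed=True`, pyannote.metrics returns a dictionary of the individual components (false alarm, missed detection, confusion, and total) alongside the DER, which correspond to the columns in the results table.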

Conclusion

In this post, we explored the collaborative partnership between AWS and ZOO Digital, employing machine learning techniques with SageMaker and the WhisperX model to enhance the diarization workflow. The AWS team played a pivotal role in assisting ZOO in prototyping, evaluating, and understanding the effective deployment of custom ML models designed specifically for diarization. This included incorporating auto scaling for scalability using SageMaker.

Harnessing AI for diarization can lead to substantial savings in both cost and time when producing localized content for ZOO. By helping transcribers swiftly and precisely identify speakers, this technology addresses the traditionally time-consuming and error-prone nature of the task. The conventional process often involves multiple passes through the video and additional quality control steps to minimize errors. Adopting AI for diarization enables a more targeted and efficient approach, thereby increasing productivity within a shorter timeframe.

We’ve outlined key steps to deploy the WhisperX model on a SageMaker asynchronous endpoint, and encourage you to try it yourself using the provided code. For further insights into ZOO Digital’s services and technology, visit ZOO Digital’s official site. For details on deploying the OpenAI Whisper model on SageMaker and various inference options, refer to Host the Whisper Model on Amazon SageMaker: exploring inference options. Feel free to share your thoughts in the comments.

About the Authors

Ying Hou, PhD, is a Machine Learning Prototyping Architect at AWS. Her primary areas of interest include Deep Learning, with a focus on GenAI, Computer Vision, NLP, and time series data prediction. In her spare time, she relishes spending quality moments with her family, immersing herself in novels, and hiking in the national parks of the UK.

Ethan Cumberland is an AI Research Engineer at ZOO Digital, where he works on using AI and Machine Learning as assistive technologies to improve workflows in speech, language, and localisation. He has a background in software engineering and research in the security and policing domain, focusing on extracting structured information from the web and leveraging open-source ML models for analysing and enriching collected data.

Gaurav Kaila leads the AWS Prototyping team for UK & Ireland. His team works with customers across diverse industries to ideate and co-develop business-critical workloads with a mandate to accelerate adoption of AWS services.
