Run ML inference on unplanned and spiky traffic using Amazon SageMaker multi-model endpoints

Amazon SageMaker multi-model endpoints (MMEs) are a fully managed capability of SageMaker inference that allows you to deploy thousands of models on a single endpoint. Previously, MMEs statically allocated CPU compute to models regardless of each model’s traffic load, using Multi Model Server (MMS) as the model server. In this post, we discuss a solution in which an MME can dynamically adjust the compute assigned to each model based on the model’s traffic pattern. This solution enables you to use the underlying compute of MMEs more efficiently and save costs.

MMEs dynamically load and unload models based on incoming traffic to the endpoint. When using MMS as the model server, MMEs allocate a fixed number of model workers for each model. For more information, refer to Model hosting patterns in Amazon SageMaker, Part 3: Run and optimize multi-model inference with Amazon SageMaker multi-model endpoints.

However, this can lead to a few issues when your traffic pattern is variable. Let’s say you have one or a few models receiving a large amount of traffic. You can configure MMS to allocate a high number of workers for these models, but because this is a static configuration, the same number is assigned to every model behind the MME. This leads to a large number of workers consuming hardware compute, even for idle models. The opposite problem can happen if you set a small value for the number of workers: the popular models won’t have enough workers at the model server level to use enough of the hardware behind the endpoint. The main issue is that it’s difficult to remain traffic pattern agnostic if you can’t dynamically scale your workers at the model server level to allocate the necessary amount of compute.

The solution we discuss in this post uses DJLServing as the model server, which can help mitigate some of the issues that we discussed, enable per-model scaling, and allow MMEs to be traffic pattern agnostic.

MME architecture

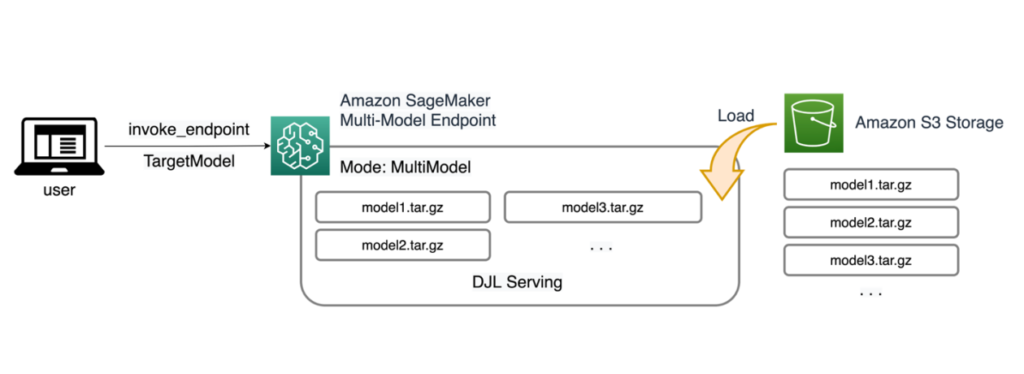

SageMaker MMEs enable you to deploy multiple models behind a single inference endpoint that may contain multiple instances. Each instance is designed to load and serve multiple models up to its memory and CPU/GPU capacity. With this architecture, a software as a service (SaaS) business can break the linearly increasing cost of hosting multiple models and achieve reuse of infrastructure consistent with the multi-tenancy model applied elsewhere in the application stack. The following diagram illustrates this architecture.

A SageMaker MME dynamically loads models from Amazon Simple Storage Service (Amazon S3) when invoked, instead of downloading all the models when the endpoint is first created. As a result, an initial invocation to a model might see higher inference latency than subsequent inferences, which are completed with low latency. If the model is already loaded on the container when invoked, the download step is skipped and the model returns the inferences with low latency. For example, assume you have a model that is only used a few times a day. It’s automatically loaded on demand, whereas frequently accessed models are retained in memory and invoked with consistently low latency.

Behind each MME are model hosting instances, as depicted in the following diagram. These instances load and evict multiple models to and from memory based on the traffic patterns to the models.
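The load-on-demand and eviction behavior can be pictured with a small least-recently-used cache. This is only an illustrative sketch: `ModelCache`, `capacity`, and the `loader` callback are hypothetical names, and the eviction policy SageMaker actually applies is not specified in this post.

```python
from collections import OrderedDict


class ModelCache:
    """Toy in-memory model cache with least-recently-used eviction (illustrative only)."""

    def __init__(self, capacity, loader):
        self.capacity = capacity      # hypothetical: max models held in memory
        self.loader = loader          # hypothetical: callable that fetches a model by name
        self.loaded = OrderedDict()   # model name -> model object, least recently used first
        self.cold_loads = 0           # number of invocations that had to load a model

    def invoke(self, name, payload):
        if name in self.loaded:
            # Warm path: the model is already in memory, so no download is needed.
            self.loaded.move_to_end(name)
        else:
            # Cold path: load the model, evicting the least recently used one if full.
            if len(self.loaded) >= self.capacity:
                self.loaded.popitem(last=False)
            self.loaded[name] = self.loader(name)
            self.cold_loads += 1
        return self.loaded[name](payload)


def fake_loader(name):
    # Stand-in for downloading an artifact from storage and deserializing it.
    return lambda x: f"{name}:{x}"


cache = ModelCache(capacity=2, loader=fake_loader)
for model_name in ["a", "a", "b", "c", "a"]:
    cache.invoke(model_name, 1)
```

In this toy run, the second call to model `a` takes the warm path, while the final call pays the load cost again because `a` was evicted to make room for `c`.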

SageMaker continues to route inference requests for a model to the instance where the model is already loaded so that the requests are served from a cached model copy (see the following diagram, which shows the request path for the first prediction request vs. the cached prediction request path). However, if the model receives many invocation requests, and there are additional instances for the MME, SageMaker routes some requests to another instance to accommodate the increase. To take advantage of automated model scaling in SageMaker, make sure you have instance auto scaling set up to provision additional instance capacity. Set up your endpoint-level scaling policy with either custom parameters or invocations per minute (recommended) to add more instances to the endpoint fleet.
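As a rough sketch of what an invocations-based scaling policy could look like, the following helper builds the two request payloads that Application Auto Scaling expects. The endpoint name, variant name, capacity limits, and target value are placeholders chosen for illustration, not values from this post.

```python
def build_scaling_requests(endpoint_name, variant_name, min_instances,
                           max_instances, target_invocations):
    """Build request payloads for a target-tracking policy on an endpoint variant."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    register_request = {
        **common,
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }
    policy_request = {
        **common,
        "PolicyName": f"{endpoint_name}-invocations-target-tracking",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Average invocations per instance per minute to aim for (assumed value).
            "TargetValue": float(target_invocations),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    }
    return register_request, policy_request


# Placeholder names and numbers, for illustration only.
register_request, policy_request = build_scaling_requests(
    "example-djl-mme", "AllTraffic", 1, 20, 1000
)
```

With AWS credentials configured, these dictionaries would be passed to `boto3.client("application-autoscaling")` as `register_scalable_target(**register_request)` and `put_scaling_policy(**policy_request)`.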

Model server overview

A model server is a software component that provides a runtime environment for deploying and serving machine learning (ML) models. It acts as an interface between the trained models and client applications that want to make predictions using those models.

The primary purpose of a model server is to allow easy integration and efficient deployment of ML models into production systems. Instead of embedding the model directly into an application or a specific framework, the model server provides a centralized platform where multiple models can be deployed, managed, and served.

Model servers typically offer the following functionalities:

- Model loading – The server loads the trained ML models into memory, making them ready for serving predictions.

- Inference API – The server exposes an API that allows client applications to send input data and receive predictions from the deployed models.

- Scaling – Model servers are designed to handle concurrent requests from multiple clients. They provide mechanisms for parallel processing and managing resources efficiently to ensure high throughput and low latency.

- Integration with backend engines – Model servers have integrations with backend frameworks like DeepSpeed and FasterTransformer to partition large models and run highly optimized inference.

DJL architecture

DJL Serving is an open source, high performance, universal model server. DJL Serving is built on top of DJL, a deep learning library written in the Java programming language. It can take a deep learning model, several models, or workflows and make them available through an HTTP endpoint. DJL Serving supports deploying models from multiple frameworks like PyTorch, TensorFlow, Apache MXNet, ONNX, TensorRT, Hugging Face Transformers, DeepSpeed, FasterTransformer, and more.

DJL Serving offers many features that allow you to deploy your models with high performance:

- Ease of use – DJL Serving can serve most models out of the box. Just bring the model artifacts, and DJL Serving can host them.

- Multiple device and accelerator support – DJL Serving supports deploying models on CPU, GPU, and AWS Inferentia.

- Performance – DJL Serving runs multithreaded inference in a single JVM to boost throughput.

- Dynamic batching – DJL Serving supports dynamic batching to increase throughput.

- Auto scaling – DJL Serving automatically scales workers up and down based on the traffic load.

- Multi-engine support – DJL Serving can simultaneously host models using different frameworks (such as PyTorch and TensorFlow).

- Ensemble and workflow models – DJL Serving supports deploying complex workflows comprised of multiple models, and runs parts of the workflow on CPU and parts on GPU. Models within a workflow can use different frameworks.

In particular, the auto scaling feature of DJL Serving makes it straightforward to ensure the models are scaled appropriately for the incoming traffic. By default, DJL Serving determines the maximum number of workers for a model that can be supported based on the hardware available (CPU cores, GPU devices). You can set lower and upper bounds for each model to make sure that a minimum traffic level can always be served, and that a single model doesn’t consume all available resources.

DJL Serving uses a Netty frontend on top of backend worker thread pools. The frontend uses a single Netty setup with multiple HttpRequestHandlers. Different request handlers provide support for the Inference API, Management API, or other APIs available from various plugins.

The backend is based around the WorkLoadManager (WLM) module. The WLM takes care of multiple worker threads for each model along with the batching and request routing to them. When multiple models are served, WLM checks the inference request queue size of each model first. If the queue size is greater than two times a model’s batch size, WLM scales up the number of workers assigned to that model.
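The scale-up rule can be written down in a few lines. The sketch below is a simplified illustration, not DJL Serving’s actual implementation: the function name is hypothetical, and adding one worker at a time up to a `max_workers` cap is an assumption, because the post only states the queue-size condition.

```python
def next_worker_count(queue_size, batch_size, current_workers, max_workers):
    """Return the worker count after applying the queue-size rule once.

    Scale up when the queue holds more than twice the batch size.
    The single-worker step and the max_workers cap are assumptions.
    """
    if queue_size > 2 * batch_size and current_workers < max_workers:
        return current_workers + 1
    return current_workers


# A queue of 5 requests with batch size 2 exceeds the 2x threshold (4).
scaled = next_worker_count(queue_size=5, batch_size=2, current_workers=1, max_workers=4)
# A queue of exactly 4 does not exceed the threshold, so nothing changes.
unchanged = next_worker_count(queue_size=4, batch_size=2, current_workers=1, max_workers=4)
```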

Solution overview

The implementation of DJL with an MME differs from the default MMS setup. For DJL Serving with an MME, we compress the following files in the model.tar.gz format that SageMaker Inference is expecting:

- model.joblib – For this implementation, we directly push the model metadata into the tarball. In this case, we’re working with a .joblib file, so we provide that file in our tarball for our inference script to read. If the artifact is too large, you can also push it to Amazon S3 and point towards that in the serving configuration you define for DJL.

- serving.properties – Here you can configure any model server-related environment variables. The power of DJL here is that you can configure minWorkers and maxWorkers for each model tarball. This allows each model to scale up and down at the model server level. For instance, if a single model is receiving the majority of the traffic for an MME, the model server scales the workers up dynamically. In this example, we don’t configure these variables and let DJL determine the necessary number of workers depending on our traffic pattern.

- model.py – This is the inference script for any custom preprocessing or postprocessing you would like to implement. The model.py expects your logic to be encapsulated in a handle method by default.

- requirements.txt (optional) – By default, DJL comes installed with PyTorch, but any additional dependencies you need can be pushed here.

For this example, we showcase the power of DJL with an MME by taking a sample SKLearn model. We run a training job with this model and then create 1,000 copies of this model artifact to back our MME. We then showcase how DJL can dynamically scale to handle any type of traffic pattern that your MME may receive. This can include an even distribution of traffic across all models or even a few popular models receiving the majority of the traffic. You can find all the code in the following GitHub repo.

Prerequisites

For this example, we use a SageMaker notebook instance with a conda_python3 kernel and ml.c5.xlarge instance. To perform the load tests, you can use an Amazon Elastic Compute Cloud (Amazon EC2) instance or a larger SageMaker notebook instance. In this example, we scale to over a thousand transactions per second (TPS), so we suggest testing on a heavier EC2 instance such as an ml.c5.18xlarge so that you have more compute to work with.

Create a model artifact

We first need to create our model artifact and data that we use in this example. For this case, we generate some artificial data with NumPy and train using an SKLearn linear regression model with the following code snippet:

After you run the preceding code, you should have a model.joblib file created in your local environment.
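A minimal sketch of such a training step is shown here. The data shape, the underlying relationship (y = 2x + 1 plus noise), and the random seed are assumptions made for illustration; refer to the GitHub repo for the exact code used in the demo.

```python
import joblib
import numpy as np
from sklearn.linear_model import LinearRegression

# Assumed synthetic data: y = 2x + 1 plus a little Gaussian noise.
rng = np.random.default_rng(0)
X = rng.random((100, 1))
y = 2 * X[:, 0] + 1 + 0.1 * rng.standard_normal(100)

# Fit an ordinary least squares linear regression.
model = LinearRegression()
model.fit(X, y)

# Serialize the trained model so the inference script can load it later.
joblib.dump(model, "model.joblib")
```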

Pull the DJL Docker image

The Docker image djl-inference:0.23.0-cpu-full-v1.0 is our DJL Serving container used in this example. You can adjust the following URL depending on your Region:

inference_image_uri = "474422712127.dkr.ecr.us-east-1.amazonaws.com/djl-serving-cpu:latest"

Optionally, you can also use this image as a base image and extend it to build your own Docker image on Amazon Elastic Container Registry (Amazon ECR) with any other dependencies you need.

Create the model file

First, we create a file called serving.properties. This instructs DJLServing to use the Python engine. We also define the max_idle_time of a worker to be 600 seconds. This makes sure that we take longer to scale down the number of workers we have per model. We don’t adjust minWorkers and maxWorkers, which we could define, and instead let DJL dynamically compute the number of workers needed depending on the traffic each model is receiving. The resulting serving.properties therefore contains only the engine and max_idle_time settings. To see the complete list of configuration options, refer to Engine Configuration.
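One way to produce this file from a notebook is sketched here. The helper name is hypothetical, and the two keys follow the settings described in the preceding paragraph; check the DJL Serving configuration reference before relying on any additional keys.

```python
from pathlib import Path


def write_serving_properties(path="serving.properties"):
    """Write a minimal serving.properties: Python engine, 600-second worker idle time."""
    lines = [
        "engine=Python",
        "max_idle_time=600",
        # minWorkers and maxWorkers are intentionally left unset so that
        # DJL Serving decides the worker count from the traffic it sees.
    ]
    Path(path).write_text("\n".join(lines) + "\n")
    return Path(path)


properties_file = write_serving_properties()
```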

Next, we create our model.py file, which defines the model loading and inference logic. For MMEs, each model.py file is specific to a model. Models are stored in their own paths under the model store (usually /opt/ml/model/). When loading models, they are loaded under the model store path in their own directory. The full model.py example in this demo can be seen in the GitHub repo.

We create a model.tar.gz file that includes our model (model.joblib), model.py, and serving.properties:
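The packaging step can be done with the Python standard library, as in the following sketch. The helper name `build_model_tarball` is hypothetical; it places the named files at the top level of a gzip-compressed tarball.

```python
import tarfile
from pathlib import Path


def build_model_tarball(source_dir, output_path, file_names):
    """Pack the named files from source_dir at the top level of a .tar.gz archive."""
    source_dir = Path(source_dir)
    with tarfile.open(output_path, "w:gz") as tar:
        for name in file_names:
            # arcname keeps the archive flat, without the local directory prefix.
            tar.add(source_dir / name, arcname=name)
    return Path(output_path)
```

For this example, the call would look like `build_model_tarball(".", "model.tar.gz", ["model.joblib", "model.py", "serving.properties"])`, assuming the three files exist in the current directory.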

For demonstration purposes, we make 1,000 copies of the same model.tar.gz file to represent the large number of models to be hosted. In production, you need to create a model.tar.gz file for each of your models.

Finally, we upload these models to Amazon S3.

Create a SageMaker model

We now create a SageMaker model. We use the ECR image defined earlier and the model artifact from the previous step to create the SageMaker model. In the model setup, we configure Mode as MultiModel. This tells DJLServing that we’re creating an MME.
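Duplicating the artifact locally could be sketched as follows. The helper name and the `model-<index>.tar.gz` naming scheme are assumptions for illustration; the repo may name the copies differently.

```python
import shutil
from pathlib import Path


def replicate_artifact(source_tarball, dest_dir, copies):
    """Copy one tarball `copies` times into dest_dir and return the new paths."""
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for i in range(copies):
        target = dest_dir / f"model-{i}.tar.gz"  # hypothetical naming scheme
        shutil.copyfile(source_tarball, target)
        created.append(target)
    return created
```

Calling `replicate_artifact("model.tar.gz", "models", 1000)` would produce the 1,000 copies, which can then be uploaded under a single S3 prefix.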
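The model definition for an MME can be sketched as a request payload for the SageMaker `create_model` API. The model name, role ARN, and S3 prefix in this example are placeholders; the important detail is `Mode` set to `MultiModel`, with `ModelDataUrl` pointing at the S3 prefix that holds the model tarballs rather than at a single file.

```python
def build_mme_model_request(model_name, role_arn, image_uri, model_data_prefix):
    """Build a create_model payload for a multi-model endpoint."""
    return {
        "ModelName": model_name,
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {
            "Image": image_uri,
            # For an MME, this is the S3 prefix containing all model tarballs.
            "ModelDataUrl": model_data_prefix,
            "Mode": "MultiModel",
        },
    }


# Placeholder values for illustration only.
model_request = build_mme_model_request(
    model_name="example-djl-mme-model",
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    image_uri="474422712127.dkr.ecr.us-east-1.amazonaws.com/djl-serving-cpu:latest",
    model_data_prefix="s3://example-bucket/djl-mme/",
)
```

With credentials configured, the payload would be passed as `boto3.client("sagemaker").create_model(**model_request)`.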

Create a SageMaker endpoint

In this demo, we use 20 ml.c5d.18xlarge instances to scale to a TPS in the thousands range. Make sure to get a limit increase on your instance type, if necessary, to achieve the TPS you are targeting.
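A sketch of the matching endpoint configuration payload is shown here. The configuration and model names are placeholders, and the variant name `AllTraffic` is an assumption; the instance type and count come from the demo setup described in this section.

```python
def build_endpoint_config_request(config_name, model_name,
                                  instance_type="ml.c5d.18xlarge",
                                  instance_count=20):
    """Build a create_endpoint_config payload with a single production variant."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",  # assumed variant name
                "ModelName": model_name,
                "InstanceType": instance_type,
                "InitialInstanceCount": instance_count,
            }
        ],
    }


# Placeholder names for illustration only.
endpoint_config_request = build_endpoint_config_request(
    "example-djl-mme-config", "example-djl-mme-model"
)
```

The payload would be passed to `create_endpoint_config(**endpoint_config_request)` on the SageMaker client, followed by `create_endpoint` with the same `EndpointConfigName`.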

Load testing

At the time of writing, the SageMaker in-house load testing tool Amazon SageMaker Inference Recommender doesn’t natively support testing for MMEs. Therefore, we use the open source Python tool Locust. Locust is straightforward to set up and can track metrics such as TPS and end-to-end latency. For a full understanding of how to set it up with SageMaker, see Best practices for load testing Amazon SageMaker real-time inference endpoints.

In this use case, we have three different traffic patterns we want to simulate with MMEs, so we have the following three Python scripts that align with each pattern. Our goal here is to prove that, regardless of what our traffic pattern is, we can achieve the same target TPS and scale appropriately.

We can specify a weight in our Locust script to assign traffic across different portions of our models. For instance, with our single hot model, we implement two methods as follows:

We can then assign a certain weight to each method, which determines the percentage of traffic that method receives:
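To see how weights translate into a traffic split, the following standard-library sketch simulates weighted selection between two request methods. It does not use Locust itself, and the 9:1 split and method names are hypothetical; in a Locust script, the weight is typically given as the argument to the `@task` decorator on each method.

```python
import random
from collections import Counter


def simulate_weighted_traffic(weights, total_requests, seed=0):
    """Pick a method for each request in proportion to its weight and count the picks."""
    rng = random.Random(seed)
    names = list(weights)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=total_requests)
    return Counter(picks)


# Hypothetical split: the hot model gets nine times the weight of all other models.
counts = simulate_weighted_traffic({"hot_model": 9, "other_models": 1}, 10_000)
hot_share = counts["hot_model"] / 10_000
```

With these weights, roughly 90% of the simulated requests go to the hot model, which mirrors the single hot model traffic pattern.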

For 20 ml.c5d.18xlarge instances, we see the following invocation metrics on the Amazon CloudWatch console. These values remain fairly consistent across all three traffic patterns. To understand CloudWatch metrics for SageMaker real-time inference and MMEs better, refer to SageMaker Endpoint Invocation Metrics.

You can find the rest of the Locust scripts in the locust-utils directory in the GitHub repository.

Summary

In this post, we discussed how an MME can dynamically adjust the compute assigned to each model based on the model’s traffic pattern. This newly launched feature is available in all AWS Regions where SageMaker is available. Note that at the time of announcement, only CPU instances are supported. To learn more, refer to Supported algorithms, frameworks, and instances.

About the Authors

Ram Vegiraju is a ML Architect with the SageMaker Service team. He focuses on helping customers build and optimize their AI/ML solutions on Amazon SageMaker. In his spare time, he loves traveling and writing.

Qingwei Li is a Machine Learning Specialist at Amazon Web Services. He received his Ph.D. in Operations Research after he broke his advisor’s research grant account and failed to deliver the Nobel Prize he promised. Currently he helps customers in the financial service and insurance industry build machine learning solutions on AWS. In his spare time, he likes reading and teaching.

James Wu is a Senior AI/ML Specialist Solution Architect at AWS, helping customers design and build AI/ML solutions. James’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. Prior to joining AWS, James was an architect, developer, and technology leader for over 10 years, including 6 years in engineering and 4 years in marketing & advertising industries.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and is motivated by the goal of democratizing machine learning. He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Xu Deng is a Software Engineer Manager with the SageMaker team. He focuses on helping customers build and optimize their AI/ML inference experience on Amazon SageMaker. In his spare time, he loves traveling and snowboarding.

Siddharth Venkatesan is a Software Engineer in AWS Deep Learning. He currently focuses on building solutions for large model inference. Prior to AWS he worked in the Amazon Grocery org building new payment features for customers world-wide. Outside of work, he enjoys snowboarding, the outdoors, and watching sports.

Rohith Nallamaddi is a Software Development Engineer at AWS. He works on optimizing deep learning workloads on GPUs, building high performance ML inference and serving solutions. Prior to this, he worked on building microservices based on AWS for Amazon F3 business. Outside of work he enjoys playing and watching sports.
