The Shift from Models to Compound AI Systems – The Berkeley Artificial Intelligence Research Blog

AI caught everyone’s attention in 2023 with Large Language Models (LLMs) that can be instructed to perform general tasks, such as translation or coding, just by prompting. This naturally led to an intense focus on models as the primary ingredient in AI application development, with everyone wondering what capabilities new LLMs will bring.

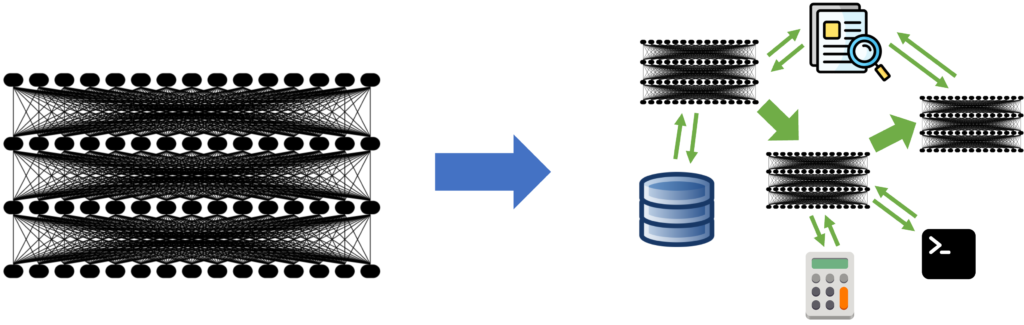

As more developers begin to build using LLMs, however, we believe that this focus is rapidly changing: state-of-the-art AI results are increasingly obtained by compound systems with multiple components, not just monolithic models.

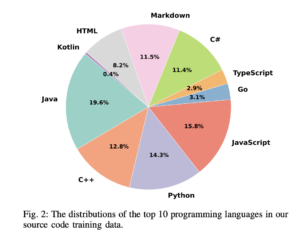

For example, Google’s AlphaCode 2 set state-of-the-art results in programming through a carefully engineered system that uses LLMs to generate up to 1 million possible solutions for a task and then filter down the set. AlphaGeometry, likewise, combines an LLM with a traditional symbolic solver to tackle olympiad problems. In enterprises, our colleagues at Databricks found that 60% of LLM applications use some form of retrieval-augmented generation (RAG), and 30% use multi-step chains.

Even researchers working on traditional language model tasks, who used to report results from a single LLM call, are now reporting results from increasingly complex inference strategies: Microsoft wrote about a chaining strategy that exceeded GPT-4’s accuracy on medical exams by 9%, and Google’s Gemini launch post measured its MMLU benchmark results using a new CoT@32 inference strategy that calls the model 32 times, which raised questions about its comparison to just a single call to GPT-4. This shift to compound systems opens many interesting design questions, but it is also exciting, because it means leading AI results can be achieved through clever engineering, not just scaling up training.

In this post, we analyze the trend toward compound AI systems and what it means for AI developers. Why are developers building compound systems? Is this paradigm here to stay as models improve? And what are the emerging tools for developing and optimizing such systems, an area that has received far less research than model training? We argue that compound AI systems will likely be the best way to maximize AI results in the future, and might be one of the most impactful trends in AI in 2024.

Increasingly many new AI results are from compound systems.

We define a Compound AI System as a system that tackles AI tasks using multiple interacting components, including multiple calls to models, retrievers, or external tools. In contrast, an AI Model is simply a statistical model, e.g., a Transformer that predicts the next token in text.

Our observation is that even though AI models are continually getting better, and there is no clear end in sight to their scaling, more and more state-of-the-art results are obtained using compound systems. Why is that? We have seen several distinct reasons:

- Some tasks are easier to improve via system design. While LLMs appear to follow remarkable scaling laws that predictably yield better results with more compute, in many applications, scaling offers lower returns-vs-cost than building a compound system. For example, suppose that the current best LLM can solve coding contest problems 30% of the time, and tripling its training budget would increase this to 35%; this is still not reliable enough to win a coding contest! In contrast, engineering a system that samples from the model multiple times, tests each sample, etc. might increase performance to 80% with today’s models, as shown in work like AlphaCode. Even more importantly, iterating on a system design is often much faster than waiting for training runs. We believe that in any high-value application, developers will want to use every tool available to maximize AI quality, so they will use system ideas in addition to scaling. We frequently see this with LLM users, where an LLM creates a compelling but frustratingly unreliable first demo, and engineering teams then go on to systematically raise quality.

- Systems can be dynamic. Machine learning models are inherently limited because they’re trained on static datasets, so their “knowledge” is fixed. Therefore, developers need to combine models with other components, such as search and retrieval, to incorporate timely data. In addition, training lets a model “see” the whole training set, so more complex systems are needed to build AI applications with access controls (e.g., answer a user’s questions based only on data the user has access to).

- Improving control and trust is easier with systems. Neural network models alone are hard to control: while training will influence them, it’s nearly impossible to guarantee that a model will avoid certain behaviors. Using an AI system instead of a model can help developers control behavior more tightly, e.g., by filtering model outputs. Likewise, even the best LLMs still hallucinate, but a system combining, say, LLMs with retrieval can increase user trust by providing citations or automatically verifying facts.

- Performance goals vary widely. Each AI model has a fixed quality level and cost, but applications often need to vary these parameters. In some applications, such as inline code suggestions, the best AI models are too expensive, so tools like GitHub Copilot use carefully tuned smaller models and various search heuristics to provide results. In other applications, even the largest models, like GPT-4, are too cheap! Many users would be willing to pay a few dollars for a correct legal opinion, instead of the few cents it takes to ask GPT-4, but a developer would need to design an AI system to utilize this larger budget.
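The first reason above, sampling a model several times and testing each sample, can be sketched in a few lines. This is a minimal illustration and not AlphaCode's actual pipeline: `generate` and `passes_tests` are hypothetical callables standing in for an LLM call and a test harness, and `success_probability` assumes that samples are independent and that the tests never accept a wrong solution.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def success_probability(p_single: float, num_samples: int) -> float:
    """Chance that at least one of num_samples independent samples is correct.

    Assumes independence between samples and a perfect test filter.
    """
    return 1.0 - (1.0 - p_single) ** num_samples


def sample_and_filter(
    generate: Callable[[], T],
    passes_tests: Callable[[T], bool],
    num_samples: int,
) -> Optional[T]:
    """Draw up to num_samples candidates and return the first one that passes."""
    for _ in range(num_samples):
        candidate = generate()  # hypothetical: one call to the model
        if passes_tests(candidate):  # hypothetical: run the candidate on tests
            return candidate
    return None  # no candidate survived the filter
```

Under these idealized assumptions, a model that is right 30% of the time per sample succeeds with probability 1 - 0.7^5, roughly 83%, after five filtered samples, which is the kind of jump the coding-contest example describes. Real samples are correlated and real tests are imperfect, so actual gains will be smaller.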

The shift to compound systems in Generative AI also matches the industry trends in other AI fields, such as self-driving cars: most of the state-of-the-art implementations are systems with multiple specialized components (more discussion here). For these reasons, we believe compound AI systems will remain a leading paradigm even as models improve.

While compound AI systems can offer clear benefits, the art of designing, optimizing, and operating them is still emerging. On the surface, an AI system is a combination of traditional software and AI models, but there are many interesting design questions. For example, should the overall “control logic” be written in traditional code (e.g., Python code that calls an LLM), or should it be driven by an AI model (e.g., LLM agents that call external tools)? Likewise, in a compound system, where should a developer invest resources? For example, in a RAG pipeline, is it better to spend more FLOPS on the retriever or the LLM, or even to call an LLM multiple times? Finally, how can we optimize an AI system with discrete components end-to-end to maximize a metric, the same way we can train a neural network? In this section, we detail a few example AI systems, then discuss these challenges and recent research on them.

The AI System Design Space

Below are a few recent compound AI systems to show the breadth of design choices:

| AI System | Components | Design | Results |
|---|---|---|---|
| AlphaCode 2 | | Generates up to 1 million solutions for a coding problem then filters and scores them | Matches 85th percentile of humans on coding contests |
| AlphaGeometry | | Iteratively suggests constructions in a geometry problem via LLM and checks deduced facts produced by symbolic engine | Between silver and gold International Math Olympiad medalists on timed test |
| Medprompt | | Answers medical questions by searching for similar examples to construct a few-shot prompt, adding model-generated chain-of-thought for each example, and generating and judging up to 11 solutions | Outperforms specialized medical models like Med-PaLM used with simpler prompting strategies |
| Gemini on MMLU | | Gemini’s CoT@32 inference strategy for the MMLU benchmark samples 32 chain-of-thought answers from the model, and returns the top choice if enough of them agree, or uses generation without chain-of-thought if not | 90.04% on MMLU, compared to 86.4% for GPT-4 with 5-shot prompting or 83.7% for Gemini with 5-shot prompting |
| ChatGPT Plus | | The ChatGPT Plus offering can call tools such as web browsing to answer questions; the LLM determines when and how to call each tool as it responds | Popular consumer AI product with millions of paid subscribers |
| RAG, ORQA, Bing, Baleen, etc. | | Combine LLMs with retrieval systems in various ways, e.g., asking an LLM to generate a search query, or directly searching for the current context | Widely used technique in search engines and enterprise apps |
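The table's description of CoT@32 (sample many chain-of-thought answers, return the top choice if enough agree, otherwise fall back to generation without chain-of-thought) can be sketched as follows. This is only a reading of that one-sentence description, not Google's implementation: `sample_cot_answer` and `answer_without_cot` are hypothetical callables wrapping model calls, and the `agreement_threshold` value is an assumption, since the description only says "enough".

```python
from collections import Counter
from typing import Callable


def vote_with_fallback(
    sample_cot_answer: Callable[[], str],
    answer_without_cot: Callable[[], str],
    num_samples: int = 32,
    agreement_threshold: float = 0.5,  # assumed value, not from the source
) -> str:
    """Majority vote over sampled answers, with a non-CoT fallback."""
    # Each call stands in for one sampled chain-of-thought completion,
    # reduced to its final answer (e.g. a multiple-choice letter).
    answers = [sample_cot_answer() for _ in range(num_samples)]
    top_answer, votes = Counter(answers).most_common(1)[0]
    if votes / num_samples >= agreement_threshold:
        return top_answer
    # Too little agreement among samples: use a single non-CoT answer.
    return answer_without_cot()
```

Note that one call to this function costs up to `num_samples + 1` model calls, which is why results obtained this way are hard to compare directly with a single-call baseline.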

Key Challenges in Compound AI Systems

Compound AI systems pose new challenges in design, optimization and operation compared to AI models.

Design Space

The range of possible system designs for a given task is vast. For example, even in the simple case of retrieval-augmented generation (RAG) with a retriever and language model, there are: (i) many retrieval and language models to choose from, (ii) other techniques to improve retrieval quality, such as query expansion or reranking models, and (iii) techniques to improve the LLM’s generated output (e.g., running another LLM to check that the output relates to the retrieved passages). Developers have to explore this vast space to find a good design.

In addition, developers need to allocate limited resources, like latency and cost budgets, among the system components. For example, if you want to answer RAG questions in 100 milliseconds, should you budget to spend 20 ms on the retriever and 80 on the LLM, or the other way around?
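One simple way to approach the latency question is to enumerate the candidate configurations that fit the budget and measure which pair does best. The sketch below assumes you already have per-configuration latency measurements and an `evaluate` function that scores a (retriever, LLM) pair on a validation set; both are hypothetical inputs, and exhaustive search is only practical when the number of options is small.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

# (configuration name, measured latency in milliseconds)
Option = Tuple[str, float]


def best_allocation(
    retriever_options: Sequence[Option],
    llm_options: Sequence[Option],
    budget_ms: float,
    evaluate: Callable[[str, str], float],
) -> Optional[Tuple[str, str]]:
    """Return the (retriever, llm) pair with the best score within budget."""
    best_pair = None
    best_quality = float("-inf")
    for (r_name, r_ms), (l_name, l_ms) in product(retriever_options, llm_options):
        if r_ms + l_ms > budget_ms:
            continue  # this combination would exceed the latency budget
        quality = evaluate(r_name, l_name)  # hypothetical validation score
        if quality > best_quality:
            best_pair, best_quality = (r_name, l_name), quality
    return best_pair  # None if nothing fits the budget
```

The answer to "20/80 or 80/20" then depends entirely on what `evaluate` measures for your task, which is the point: the split is an empirical question about the whole system, not a property of either component.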

Optimization

Often in ML, maximizing the quality of a compound system requires co-optimizing the components to work well together. For example, consider a simple RAG application where an LLM sees a user question, generates a search query to send to a retriever, and then generates an answer. Ideally, the LLM would be tuned to generate queries that work well for that particular retriever, and the retriever would be tuned to prefer answers that work well for that LLM.
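The simple RAG application described here has three steps, which can be written down as a small pipeline. This is a structural sketch only: `write_query`, `retrieve`, and `write_answer` are hypothetical callables standing in for two LLM calls and a retriever, and no particular library is assumed.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RagPipeline:
    """Question -> search query -> passages -> answer."""

    write_query: Callable[[str], str]  # hypothetical LLM call
    retrieve: Callable[[str], List[str]]  # hypothetical retriever
    write_answer: Callable[[str, List[str]], str]  # hypothetical LLM call

    def answer(self, question: str) -> str:
        # Step 1: the LLM turns the user question into a search query.
        query = self.write_query(question)
        # Step 2: the retriever returns passages for that query.
        passages = self.retrieve(query)
        # Step 3: the LLM answers using the question and the passages.
        return self.write_answer(question, passages)
```

Writing it this way makes the co-optimization problem visible: the quality of `answer` depends on how well the output of `write_query` suits `retrieve`, and how well the output of `retrieve` suits `write_answer`, so tuning any one step in isolation can miss the best overall configuration.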

In single model development a la PyTorch, users can easily optimize a model end-to-end because the whole model is differentiable. However, new compound AI systems contain non-differentiable components like search engines or code interpreters, and thus require new methods of optimization. Optimizing these compound AI systems is still a new research area; for example, DSPy offers a general optimizer for pipelines of pretrained LLMs and other components, while other systems, like LaMDA, Toolformer and AlphaGeometry, use tool calls during model training to optimize models for those tools.

Operation

Machine learning operations (MLOps) become more challenging for compound AI systems. For example, while it is easy to track success rates for a traditional ML model like a spam classifier, how should developers track and debug the performance of an LLM agent for the same task, which might use a variable number of “reflection” steps or external API calls to classify a message? We believe that a new generation of MLOps tools will be developed to tackle these problems. Interesting problems include:

- Monitoring: How can developers most efficiently log, analyze, and debug traces from complex AI systems?

- DataOps: Because many AI systems involve data serving components like vector DBs, and their behavior depends on the quality of data served, any focus on operations for these systems should additionally span data pipelines.

- Security: Research has shown that compound AI systems, such as an LLM chatbot with a content filter, can create unforeseen security risks compared to individual models. New tools will be required to secure these systems.

Emerging Paradigms

To tackle the challenges of building compound AI systems, multiple new approaches are arising in the industry and in research. We highlight a few of the most widely used ones and examples from our research on tackling these challenges.

Designing AI Systems: Composition Frameworks and Strategies. Many developers are now using “language model programming” frameworks that let them build applications out of multiple calls to AI models and other components. These include component libraries like LangChain and LlamaIndex that developers call from traditional programs, agent frameworks like AutoGPT and BabyAGI that let an LLM drive the application, and tools for controlling LM outputs, like Guardrails, Outlines, LMQL and SGLang. In parallel, researchers are developing numerous new inference strategies to generate better outputs using calls to models and tools, such as chain-of-thought, self-consistency, WikiChat, RAG and others.

Automatically Optimizing Quality: DSPy. Coming from academia, DSPy is the first framework that aims to optimize a system composed of LLM calls and other tools to maximize a target metric. Users write an application out of calls to LLMs and other tools, and provide a target metric such as accuracy on a validation set, and then DSPy automatically tunes the pipeline by creating prompt instructions, few-shot examples, and other parameter choices for each module to maximize end-to-end performance. The effect is similar to end-to-end optimization of a multi-layer neural network in PyTorch, except that the modules in DSPy are not always differentiable layers. To do that, DSPy leverages the linguistic abilities of LLMs in a clean way: to specify each module, users write a natural language signature, such as user_question -> search_query, where the names of the input and output fields are meaningful, and DSPy automatically turns this into suitable prompts with instructions, few-shot examples, or even weight updates to the underlying language models.
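To make the idea of metric-driven tuning concrete without a differentiable model, here is a deliberately tiny sketch. It is not DSPy's API or algorithm: it only shows the general shape of searching over one discrete choice (a prompt instruction) to maximize accuracy on a validation set. `run_module` is a hypothetical callable that runs the module with a given instruction on one input.

```python
from typing import Callable, Sequence, Tuple


def tune_instruction(
    candidate_instructions: Sequence[str],
    run_module: Callable[[str, str], str],
    validation_set: Sequence[Tuple[str, str]],
) -> Tuple[str, float]:
    """Pick the instruction with the highest validation accuracy."""

    def accuracy(instruction: str) -> float:
        # Fraction of validation inputs whose output matches the label.
        correct = sum(
            run_module(instruction, example_input) == expected
            for example_input, expected in validation_set
        )
        return correct / len(validation_set)

    scores = {ins: accuracy(ins) for ins in candidate_instructions}
    best = max(scores, key=scores.get)  # ties go to the earliest candidate
    return best, scores[best]
```

A real optimizer searches a much larger space (instructions, few-shot examples, and parameters for every module at once) and has to be economical with model calls, but the contract is the same: a program, a metric, and a validation set go in, and a tuned configuration comes out.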

Optimizing Cost: FrugalGPT and AI Gateways. The wide range of AI models and services available makes it challenging to pick the right one for an application. Moreover, different models may perform better on different inputs. FrugalGPT is a framework to automatically route inputs to different AI model cascades to maximize quality subject to a target budget. Based on a small set of examples, it learns a routing strategy that can outperform the best LLM services by up to 4% at the same cost, or reduce cost by up to 90% while matching their quality. FrugalGPT is an example of a broader emerging concept of AI gateways or routers, implemented in software like Databricks AI Gateway, OpenRouter, and Martian, to optimize the performance of each component of an AI application. These systems work even better when an AI task is broken into smaller modular steps in a compound system, and the gateway can optimize routing separately for each step.
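A model cascade can be sketched as trying models from cheapest to most expensive and stopping as soon as an answer looks good enough. The code below illustrates that general pattern under simplifying assumptions and is not FrugalGPT's implementation: each hypothetical model callable returns its own confidence score, and the threshold is fixed by hand, whereas a learned router would fit the scoring and thresholds from examples.

```python
from typing import Callable, Sequence, Tuple

# (model name, callable returning (answer, confidence), cost per call)
Model = Tuple[str, Callable[[str], Tuple[str, float]], float]


def cascade(
    query: str,
    models: Sequence[Model],
    threshold: float,
) -> Tuple[str, str, float]:
    """Return (answer, model used, total cost) for a cheapest-first cascade."""
    if not models:
        raise ValueError("cascade needs at least one model")
    total_cost = 0.0
    for name, call, cost in models:  # assumed ordered cheapest first
        answer, confidence = call(query)
        total_cost += cost  # every attempted call is paid for
        if confidence >= threshold:
            break  # good enough: skip the more expensive models
    # If no model cleared the threshold, this is the last model's answer.
    return answer, name, total_cost
```

The trade-off is visible in `total_cost`: easy queries stop at the cheap model, while hard ones pay for every model they pass through, so a cascade only saves money when enough of the traffic is easy.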

Operation: LLMOps and DataOps. AI applications have always required careful monitoring of both model outputs and data pipelines to run reliably. With compound AI systems, however, the behavior of the system on each input can be considerably more complex, so it is important to track all the steps taken by the application and intermediate outputs. Software like LangSmith, Phoenix Traces, and Databricks Inference Tables can track, visualize and evaluate these outputs at a fine granularity, in some cases also correlating them with data pipeline quality and downstream metrics. In the research world, DSPy Assertions seeks to leverage feedback from monitoring checks directly in AI systems to improve outputs, and AI-based quality evaluation methods like MT-Bench, FAVA and ARES aim to automate quality monitoring.
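Tracking every step and its intermediate output can start very simply. The following sketch shows the basic idea of a per-request trace using only the standard library; it does not reflect the API of any of the tools named above, and the `Trace` class and its field names are made up for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Trace:
    """Collects one record per step executed while handling a request."""

    steps: List[Dict[str, Any]] = field(default_factory=list)

    def record(self, name: str, fn: Callable[..., Any], *args: Any) -> Any:
        """Run fn(*args), log its inputs, output, and duration, return output."""
        start = time.perf_counter()
        output = fn(*args)
        self.steps.append(
            {
                "step": name,
                "inputs": args,
                "output": output,
                "seconds": time.perf_counter() - start,
            }
        )
        return output
```

For example, wrapping each call as `trace.record("retrieve", retriever, query)` leaves behind an ordered list of steps for that request, which is what makes a variable number of agent steps or tool calls debuggable after the fact.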

Generative AI has excited every developer by unlocking a wide range of capabilities through natural language prompting. As developers aim to move beyond demos and maximize the quality of their AI applications, however, they’re increasingly turning to compound AI systems as a natural way to control and enhance the capabilities of LLMs. Figuring out the best practices for developing compound AI systems is still an open question, but there are already exciting approaches to aid with design, end-to-end optimization, and operation. We believe that compound AI systems will remain the best way to maximize the quality and reliability of AI applications going forward, and may be one of the most important trends in AI in 2024.