Code Llama 70B is now available in Amazon SageMaker JumpStart

Today, we’re excited to announce that Code Llama foundation models, developed by Meta, are available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. Code Llama is a state-of-the-art large language model (LLM) capable of generating code and natural language about code from both code and natural language prompts. You can try out this model with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms, models, and ML solutions so you can quickly get started with ML. In this post, we walk through how to discover and deploy the Code Llama model through SageMaker JumpStart.

Code Llama

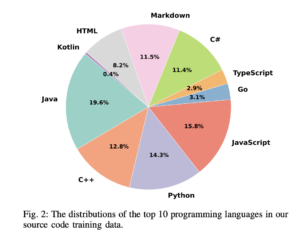

Code Llama is a model released by Meta that’s built on top of Llama 2. This state-of-the-art model is designed to improve productivity for programming tasks for developers by helping them create high-quality, well-documented code. The models excel in Python, C++, Java, PHP, C#, TypeScript, and Bash, and have the potential to save developers’ time and make software workflows more efficient.

It comes in three variants, engineered to cover a wide variety of applications: the foundational model (Code Llama), a Python specialized model (Code Llama Python), and an instruction-following model for understanding natural language instructions (Code Llama Instruct). All Code Llama variants come in four sizes: 7B, 13B, 34B, and 70B parameters. The 7B and 13B base and instruct variants support infilling based on surrounding content, making them ideal for code assistant applications. The models were designed using Llama 2 as the base and then trained on 500 billion tokens of code data, with the Python specialized version trained on an incremental 100 billion tokens. The Code Llama models provide stable generations with up to 100,000 tokens of context. All models are trained on sequences of 16,000 tokens and show improvements on inputs with up to 100,000 tokens.

The model is made available under the same community license as Llama 2.

Foundation models in SageMaker

SageMaker JumpStart provides access to a range of models from popular model hubs, including Hugging Face, PyTorch Hub, and TensorFlow Hub, which you can use within your ML development workflow in SageMaker. Recent advances in ML have given rise to a new class of models known as foundation models, which typically have billions of parameters and are adaptable to a wide category of use cases, such as text summarization, digital art generation, and language translation. Because these models are expensive to train, customers want to use existing pre-trained foundation models and fine-tune them as needed, rather than train these models themselves. SageMaker provides a curated list of models that you can choose from on the SageMaker console.

You can find foundation models from different model providers within SageMaker JumpStart, enabling you to get started with foundation models quickly. You can find foundation models based on different tasks or model providers, and easily review model characteristics and usage terms. You can also try out these models using a test UI widget. When you want to use a foundation model at scale, you can do so without leaving SageMaker by using pre-built notebooks from model providers. Because the models are hosted and deployed on AWS, you can rest assured that your data, whether used for evaluating or using the model at scale, isn’t shared with third parties.

Discover the Code Llama model in SageMaker JumpStart

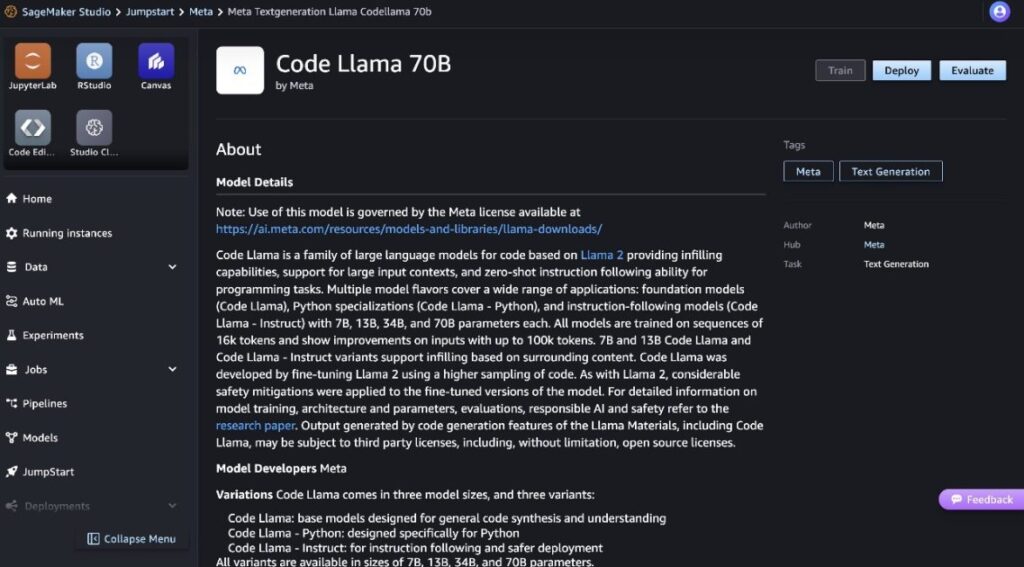

To deploy the Code Llama 70B model, complete the following steps in Amazon SageMaker Studio:

- On the SageMaker Studio home page, choose JumpStart in the navigation pane.

- Search for Code Llama models and choose the Code Llama 70B model from the list of models shown.

You can find more information about the model on the Code Llama 70B model card.

The following screenshot shows the endpoint settings. You can change the options or use the default ones.

- Accept the End User License Agreement (EULA) and choose Deploy.

This starts the endpoint deployment process, as shown in the following screenshot.

Deploy the model with the SageMaker Python SDK

Alternatively, you can deploy through the example notebook by choosing Open Notebook on the model detail page in Classic Studio. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

To deploy using a notebook, we start by selecting an appropriate model, specified by the model_id. You can deploy any of the selected models on SageMaker with the following code:
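A minimal sketch of this deployment, assuming the sagemaker Python SDK is installed and AWS credentials are configured. The model ID shown is an assumption about the JumpStart catalog entry for Code Llama 70B, so confirm it on the model card. The helper name deploy_code_llama is hypothetical, and its early EULA check is a convenience of this sketch rather than SDK behavior.

```python
def deploy_code_llama(
    model_id="meta-textgeneration-llama-codellama-70b",  # assumed ID; verify on the model card
    accept_eula=False,
):
    """Deploy a JumpStart model to a real-time endpoint and return its predictor."""
    # Fail fast locally instead of waiting for the service to reject the request.
    if not accept_eula:
        raise ValueError("Set accept_eula=True to accept the EULA and deploy.")

    # Imported lazily so the helper can be defined without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # Uses the default instance type and VPC configuration for this model.
    return model.deploy(accept_eula=accept_eula)
```

Calling predictor = deploy_code_llama(accept_eula=True) creates a billable endpoint, so run it only when you are ready to test the model.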

This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. Note that by default, accept_eula is set to False. You need to set accept_eula=True to deploy the endpoint successfully. By doing so, you accept the user license agreement and acceptable use policy as mentioned earlier. You can also download the license agreement.

Invoke a SageMaker endpoint

After the endpoint is deployed, you can carry out inference by using Boto3 or the SageMaker Python SDK. In the following code, we use the SageMaker Python SDK to call the model for inference and print the response:
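A minimal sketch of such a call, assuming predictor is the object returned by the SDK deployment and that the endpoint returns a list of dictionaries with a generated_text key. That response shape is an assumption, so inspect the raw response for your model version. The helper name invoke is hypothetical.

```python
def print_response(payload, response):
    """Print the prompt followed by the first generated completion."""
    print(payload["inputs"])
    print(f"> {response[0]['generated_text']}")
    print("\n==================================\n")


def invoke(predictor, prompt, **parameters):
    """Send a prompt with optional inference parameters and print the result."""
    payload = {"inputs": prompt, "parameters": parameters}
    response = predictor.predict(payload)
    print_response(payload, response)
    return response
```

For example, invoke(predictor, "def fibonacci(n):", max_new_tokens=128) sends a single prompt and prints the completion.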

The function print_response takes the payload and the model response and prints the output. Code Llama supports many parameters while performing inference:

- max_length – The model generates text until the output length (which includes the input context length) reaches max_length. If specified, it must be a positive integer.

- max_new_tokens – The model generates text until the output length (excluding the input context length) reaches max_new_tokens. If specified, it must be a positive integer.

- num_beams – This specifies the number of beams used in beam search. If specified, it must be an integer greater than or equal to num_return_sequences.

- no_repeat_ngram_size – The model ensures that a sequence of words of length no_repeat_ngram_size is not repeated in the output sequence. If specified, it must be a positive integer greater than 1.

- temperature – This controls the randomness in the output. Higher temperature results in an output sequence with low-probability words, and lower temperature results in an output sequence with high-probability words. As temperature approaches 0, it results in greedy decoding. If specified, it must be a positive float.

- early_stopping – If True, text generation is finished when all beam hypotheses reach the end of sentence token. If specified, it must be Boolean.

- do_sample – If True, the model samples the next word as per the likelihood. If specified, it must be Boolean.

- top_k – In each step of text generation, the model samples from only the top_k most likely words. If specified, it must be a positive integer.

- top_p – In each step of text generation, the model samples from the smallest possible set of words with cumulative probability top_p. If specified, it must be a float between 0 and 1.

- return_full_text – If True, the input text will be part of the output generated text. If specified, it must be Boolean. The default value is False.

- stop – If specified, it must be a list of strings. Text generation stops if any one of the specified strings is generated.

You can specify any subset of these parameters while invoking an endpoint. Next, we show an example of how to invoke an endpoint with these arguments.
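Some of the parameter rules above can be checked before a request is sent. The following is a minimal sketch of a payload builder that validates a few of them. The helper name build_payload and the choice of which rules to enforce are assumptions of this sketch, and the endpoint remains the authority on what it accepts.

```python
ALLOWED_PARAMETERS = {
    "max_length", "max_new_tokens", "num_beams", "no_repeat_ngram_size",
    "temperature", "early_stopping", "do_sample", "top_k", "top_p",
    "return_full_text", "stop",
}
POSITIVE_INT_PARAMETERS = ("max_length", "max_new_tokens", "top_k")


def build_payload(prompt, **parameters):
    """Return a request payload after checking a subset of the parameter rules."""
    unknown = set(parameters) - ALLOWED_PARAMETERS
    if unknown:
        raise ValueError(f"Unsupported parameters: {sorted(unknown)}")

    for name in POSITIVE_INT_PARAMETERS:
        value = parameters.get(name)
        if value is None:
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, int) or value <= 0:
            raise ValueError(f"{name} must be a positive integer")

    top_p = parameters.get("top_p")
    if top_p is not None and not 0 <= top_p <= 1:
        raise ValueError("top_p must be a float between 0 and 1")

    stop = parameters.get("stop")
    if stop is not None and not (
        isinstance(stop, list) and all(isinstance(s, str) for s in stop)
    ):
        raise ValueError("stop must be a list of strings")

    return {"inputs": prompt, "parameters": dict(parameters)}
```

A payload built this way, for example build_payload("def add(a, b):", max_new_tokens=64, temperature=0.2, top_p=0.9), can be passed directly to the predict method of the predictor.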

Code completion

The following examples demonstrate how to perform code completion where the expected endpoint response is the natural continuation of the prompt.

We first run the following code:
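A minimal sketch of a completion request, assuming a predictor returned by the SageMaker Python SDK and a response shaped as a list of dictionaries with a generated_text key. The prompt and parameter values are illustrative rather than taken from a recorded run, and the helper name complete is hypothetical.

```python
# An unfinished function: the model is expected to continue from the signature.
COMPLETION_PROMPT = '''\
import socket

def ping_exponential_backoff(host: str):'''


def complete(predictor, prompt, max_new_tokens=256, temperature=0.2, top_p=0.9):
    """Ask the endpoint to continue the prompt and return prompt plus continuation."""
    payload = {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
            "top_p": top_p,
        },
    }
    response = predictor.predict(payload)
    # return_full_text defaults to False, so only the continuation comes back;
    # prepend the prompt to reconstruct the whole snippet.
    return prompt + response[0]["generated_text"]
```

Running print(complete(predictor, COMPLETION_PROMPT)) prints the prompt followed by whatever body the model proposes for the function.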

We get the following output:

For our next example, we run the following code:

We get the following output:

Code generation

The following examples show Python code generation using Code Llama.

We first run the following code:
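A minimal sketch of a generation request driven by a natural language task, assuming the same predictor and response shape as in the SDK deployment. Phrasing the task as a leading Python comment is a prompting choice of this sketch, not a documented requirement. The helper name generate_code and the parameter values are hypothetical.

```python
def generate_code(predictor, task, stop=None, max_new_tokens=512):
    """Turn a task description into a comment prompt and return the generated code."""
    prompt = f"# {task}\n"
    parameters = {"max_new_tokens": max_new_tokens, "temperature": 0.2, "top_p": 0.9}
    if stop:
        # Generation halts as soon as any of these strings is produced.
        parameters["stop"] = list(stop)
    response = predictor.predict({"inputs": prompt, "parameters": parameters})
    return response[0]["generated_text"]
```

For example, generate_code(predictor, "Write a Python function that reverses a string", stop=["\n\n\n"]) asks for a single function and stops at the first run of blank lines.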

We get the following output:

For our next example, we run the following code:

We get the following output:

These are some examples of code-related tasks using Code Llama 70B. You can use the model to generate even more complicated code. We encourage you to try it using your own code-related use cases and examples!

Clean up

After you have tested the endpoints, make sure you delete the SageMaker inference endpoints and the model to avoid incurring charges. Use the following code:
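A minimal sketch of the cleanup, assuming predictor is the object returned when the model was deployed with the SageMaker Python SDK. The wrapper name clean_up is hypothetical; delete_model and delete_endpoint are methods of the SDK predictor.

```python
def clean_up(predictor):
    """Delete the model and the endpoint behind a predictor to stop charges."""
    predictor.delete_model()
    predictor.delete_endpoint()
```

Call clean_up(predictor) once you are finished testing.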

Conclusion

In this post, we introduced Code Llama 70B on SageMaker JumpStart. Code Llama 70B is a state-of-the-art model for generating code from natural language prompts as well as code. You can deploy the model with a few simple steps in SageMaker JumpStart and then use it to carry out code-related tasks such as code generation and code completion. As a next step, try using the model with your own code-related use cases and data.

About the authors

Dr. Kyle Ulrich is an Applied Scientist with the Amazon SageMaker JumpStart team. His research interests include scalable machine learning algorithms, computer vision, time series, Bayesian non-parametrics, and Gaussian processes. His PhD is from Duke University and he has published papers in NeurIPS, Cell, and Neuron.


Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.


June Won is a product manager with SageMaker JumpStart. He focuses on making foundation models easily discoverable and usable to help customers build generative AI applications. His experience at Amazon also includes mobile shopping applications and last mile delivery.
