Build generative AI chatbots using prompt engineering with Amazon Redshift and Amazon Bedrock

With the advent of generative AI solutions, organizations are finding different ways to apply these technologies to gain an edge over their competitors. Intelligent applications, powered by advanced foundation models (FMs) trained on huge datasets, can now understand natural language, interpret meaning and intent, and generate contextually relevant and human-like responses. This is fueling innovation across industries, with generative AI demonstrating immense potential to enhance various business processes, including the following:

- Accelerate research and development through automated hypothesis generation and experiment design

- Uncover hidden insights by identifying subtle trends and patterns in data

- Automate time-consuming documentation processes

- Provide a better customer experience with personalization

- Summarize data from various knowledge sources

- Boost employee productivity by providing software code recommendations

Amazon Bedrock is a fully managed service that makes it straightforward to build and scale generative AI applications. Amazon Bedrock offers a choice of high-performing foundation models from leading AI companies, including AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon, through a single API. It enables you to privately customize the FMs with your data using techniques such as fine-tuning, prompt engineering, and Retrieval Augmented Generation (RAG), and to build agents that run tasks using your enterprise systems and data sources while complying with security and privacy requirements.

In this post, we discuss how to use the comprehensive capabilities of Amazon Bedrock to perform complex business tasks and improve the customer experience by providing personalization using the data stored in a database like Amazon Redshift. We use prompt engineering techniques to develop and optimize prompts with the data stored in a Redshift database so that we use the foundation models efficiently. As an example, we build a personalized generative AI travel itinerary planner and demonstrate how to personalize a travel itinerary for a user based on their booking and user profile data stored in Amazon Redshift.

Prompt engineering

Prompt engineering is the process of creating and designing user inputs that guide generative AI solutions to generate desired outputs. You choose the most appropriate words, formats, phrases, and symbols to guide the foundation models, and in turn help the generative AI applications interact with users more meaningfully. You can use creativity and trial-and-error methods to create a collection of input prompts so the application works as expected. Prompt engineering makes generative AI applications more efficient and effective. You can encapsulate open-ended user input inside a prompt before passing it to the FMs. For example, a user might enter an incomplete problem statement like, “Where to purchase a shirt.” Internally, the application’s code uses an engineered prompt that says, “You are a sales assistant for a clothing company. A user, based in Alabama, United States, is asking you where to purchase a shirt. Respond with the three nearest store locations that currently stock a shirt.” The foundation model then generates more relevant and accurate information.
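As a minimal illustration of that encapsulation, the following Python sketch wraps the raw question in the engineered sales-assistant prompt before it would be sent to an FM. The template wording and the engineer_prompt function are hypothetical, modeled on the example above, and no model is called here.

```python
# Hypothetical template modeled on the clothing-store example in the text.
SALES_ASSISTANT_TEMPLATE = (
    "You are a sales assistant for a clothing company. "
    'A user, based in {location}, is asking you: "{question}". '
    "Respond with the three nearest store locations that currently stock the requested item."
)


def engineer_prompt(question: str, location: str) -> str:
    """Wrap open-ended user input in an engineered prompt before it reaches the FM."""
    cleaned = " ".join(question.split())  # collapse stray whitespace from the chat box
    return SALES_ASSISTANT_TEMPLATE.format(location=location, question=cleaned)
```

The user only types the short question; the location and the role, task, and answer format all come from the application, which is what steers the model toward a specific, useful answer.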

The prompt engineering field is evolving constantly and requires creative expression and natural language skills to tune prompts and obtain the desired output from FMs. A prompt can contain any of the following elements:

- Instruction – A specific task or instruction you want the model to perform

- Context – External information or additional context that can steer the model to better responses

- Input data – The input or question that you want to find a response for

- Output indicator – The type or format of the output
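One way to keep these four elements explicit is to store them separately and render them into a single prompt string, as in the following sketch. The Prompt class and the section labels it emits are illustrative assumptions, not a format that Amazon Bedrock or Claude requires; empty elements are simply left out.

```python
from dataclasses import dataclass


@dataclass
class Prompt:
    """Hypothetical container for the four prompt elements; any may be empty."""
    instruction: str = ""
    context: str = ""
    input_data: str = ""
    output_indicator: str = ""

    def render(self) -> str:
        # Label each non-empty element and separate the sections with blank lines.
        sections = [
            ("Instruction", self.instruction),
            ("Context", self.context),
            ("Input", self.input_data),
            ("Output format", self.output_indicator),
        ]
        return "\n\n".join(
            f"{label}: {text.strip()}" for label, text in sections if text.strip()
        )
```

Keeping the elements separate makes it easy to swap in fresh context, such as rows pulled from a database, while the instruction and output format stay fixed.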

You can use prompt engineering for various business use cases across different industry segments, such as the following:

- Banking and finance – Prompt engineering empowers language models to generate forecasts, conduct sentiment analysis, assess risks, formulate investment strategies, generate financial reports, and support regulatory compliance. For example, you can use large language models (LLMs) for a financial forecast by providing data and market indicators as prompts.

- Healthcare and life sciences – Prompt engineering can help medical professionals optimize AI systems to aid in decision-making processes, such as diagnosis, treatment selection, or risk assessment. You can also engineer prompts to facilitate administrative tasks, such as patient scheduling, record keeping, or billing, thereby increasing efficiency.

- Retail – Prompt engineering can help retailers implement chatbots that address common customer requests, like queries about order status, returns, payments, and more, using natural language interactions. This can increase customer satisfaction and also allow human customer service teams to dedicate their expertise to intricate and sensitive customer issues.

In the following example, we take a use case from the travel and hospitality industry and build a personalized travel itinerary planner for customers who have upcoming travel plans. We demonstrate how to build a generative AI chatbot that interacts with users by enriching the prompts with user profile data stored in the Redshift database. We then send this enriched prompt to an LLM, specifically Anthropic’s Claude on Amazon Bedrock, to obtain a customized travel plan.
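To make the last step concrete, here is a minimal sketch of sending an enriched prompt to Claude through the Amazon Bedrock Runtime InvokeModel API with boto3. It assumes the Anthropic Messages request format (anthropic_version "bedrock-2023-05-31") and an assumed model ID; the post does not say which Claude version or request format the sample app uses, so substitute the model you enabled. The client is passed in so the helpers can be exercised without AWS credentials.

```python
import json

# Assumed model ID: replace it with the Claude model you enabled under Model access.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_request_body(prompt: str, max_tokens: int = 2000, temperature: float = 0.5) -> str:
    """Serialize an Anthropic Messages API request in the shape Bedrock InvokeModel accepts."""
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "temperature": temperature,
            "messages": [
                {"role": "user", "content": [{"type": "text", "text": prompt}]}
            ],
        }
    )


def parse_response_text(raw: bytes) -> str:
    """Concatenate the text blocks from a Messages API response body."""
    payload = json.loads(raw)
    return "".join(
        block["text"] for block in payload.get("content", []) if block.get("type") == "text"
    )


def generate_itinerary(runtime_client, prompt: str) -> str:
    """Send the enriched prompt to Claude through a boto3 'bedrock-runtime' client."""
    response = runtime_client.invoke_model(
        modelId=MODEL_ID,
        body=build_request_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # response["body"] is a streaming body; read() returns the JSON bytes.
    return parse_response_text(response["body"].read())
```

With AWS credentials configured, you would create the client with boto3.client("bedrock-runtime") in a Region where you have model access and pass it to generate_itinerary along with the stitched prompt.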

Amazon Redshift has announced a feature called Amazon Redshift ML that makes it straightforward for data analysts and database developers to create, train, and apply machine learning (ML) models using familiar SQL commands in Redshift data warehouses. However, this post uses LLMs hosted on Amazon Bedrock to demonstrate general prompt engineering techniques and their benefits.

Solution overview

All of us have searched the internet for things to do in a certain place during or before a vacation. In this solution, we demonstrate how to generate a custom, personalized travel itinerary that users can reference, based on their hobbies, interests, favorite foods, and more. The solution uses their booking data to look up the cities they are going to, along with the travel dates, and comes up with a precise, personalized list of things to do. The travel and hospitality industry can use this solution to embed a personalized travel itinerary planner within a travel booking portal.

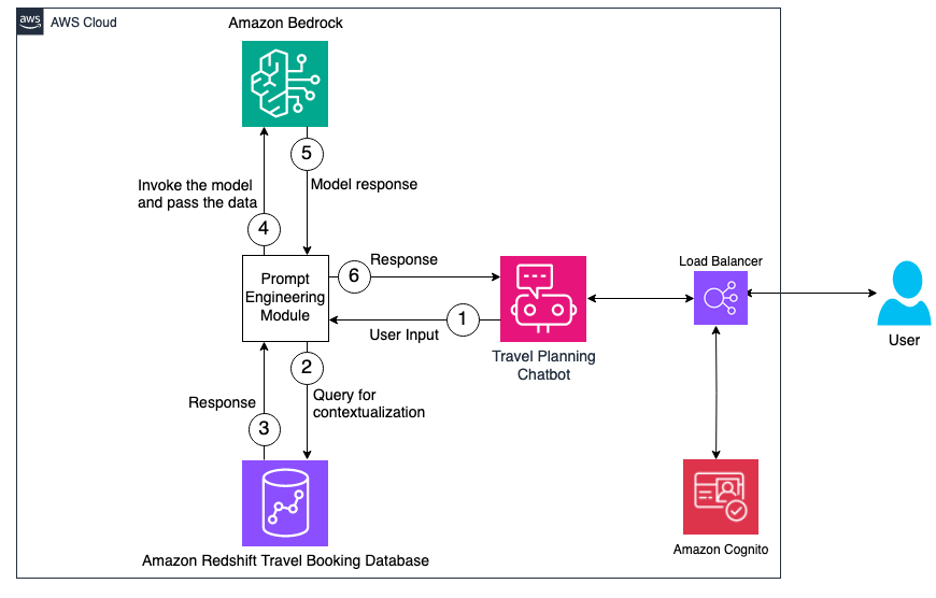

This solution contains two main components. First, we extract the user’s information like name, location, hobbies, interests, and favorite food, along with their upcoming travel booking details. Second, we stitch this information into a user prompt and pass it to Anthropic’s Claude on Amazon Bedrock to obtain a personalized travel itinerary. The following diagram provides a high-level overview of the workflow and the components involved in this architecture.

First, the user logs in to the chatbot application, which is hosted behind an Application Load Balancer and authenticated using Amazon Cognito. The user enters a user ID in the chatbot interface, and this ID is sent to the prompt engineering module. The module extracts the user’s information like name, location, hobbies, interests, and favorite food from the Redshift database, along with their upcoming travel booking details like travel city, check-in date, and check-out date.
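The extraction step can be sketched with the Amazon Redshift Data API, which runs SQL against a Redshift Serverless workgroup using a Secrets Manager secret ARN. This is a minimal sketch, not the repository’s code: the schema name travel, the column names in PROFILE_SQL, and the database name you pass in are all assumptions. The boto3 "redshift-data" client is passed in, so the function can be tested with a stand-in object.

```python
import time
from typing import Any

# Hypothetical query: the schema and column names are assumptions; the post only
# names the user_profile and hotel_booking tables.
PROFILE_SQL = """
SELECT p.name, p.location, p.hobbies, p.interests, p.favorite_food,
       b.city, b.check_in_date, b.check_out_date
FROM travel.user_profile p
JOIN travel.hotel_booking b ON b.user_id = p.user_id
WHERE p.user_id = :user_id
  AND b.check_in_date >= CURRENT_DATE
ORDER BY b.check_in_date
"""


def field_value(field: dict[str, Any]) -> Any:
    """Unwrap one Data API field, for example {'stringValue': 'Paris'} -> 'Paris'."""
    if field.get("isNull"):
        return None
    for key, value in field.items():
        if key != "isNull":
            return value
    return None


def records_to_dicts(result: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn a GetStatementResult response into a list of {column: value} rows."""
    names = [column["name"] for column in result["ColumnMetadata"]]
    return [dict(zip(names, map(field_value, row))) for row in result["Records"]]


def fetch_user_trips(client, workgroup: str, database: str, secret_arn: str,
                     user_id: int, poll_seconds: float = 0.5) -> list[dict[str, Any]]:
    """Run PROFILE_SQL through a boto3 'redshift-data' client and return the rows."""
    statement = client.execute_statement(
        WorkgroupName=workgroup,
        Database=database,
        SecretArn=secret_arn,
        Sql=PROFILE_SQL,
        Parameters=[{"name": "user_id", "value": str(user_id)}],  # values are sent as strings
    )
    # The Data API is asynchronous, so poll until the statement completes.
    while True:
        description = client.describe_statement(Id=statement["Id"])
        if description["Status"] == "FINISHED":
            break
        if description["Status"] in ("FAILED", "ABORTED"):
            raise RuntimeError(description.get("Error", "Redshift statement failed"))
        time.sleep(poll_seconds)
    # Only the first result page is read; large result sets would need NextToken handling.
    return records_to_dicts(client.get_statement_result(Id=statement["Id"]))
```

Using a named parameter (:user_id) rather than string formatting keeps the user-supplied ID out of the SQL text itself.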

Prerequisites

Before you deploy this solution, make sure you have the following prerequisites set up:

Deploy this solution

Use the following steps to deploy this solution in your environment. The code used in this solution is available in the GitHub repo.

The first step is to make sure the account and the AWS Region where the solution is being deployed have access to Amazon Bedrock base models.

- On the Amazon Bedrock console, choose Model access in the navigation pane.

- Choose Manage model access.

- Select the Anthropic Claude model, then choose Save changes.

It may take a few minutes for the access status to change to Access granted.

Next, we use the following AWS CloudFormation template to deploy an Amazon Redshift Serverless cluster along with all the related components, including the Amazon Elastic Compute Cloud (Amazon EC2) instance that hosts the web app.

- Choose Launch Stack to launch the CloudFormation stack:

- Provide a stack name and SSH key pair, then create the stack.

- On the stack’s Outputs tab, save the values for the Redshift database workgroup name, secret ARN, URL, and Amazon Redshift service role ARN.
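If you prefer to collect these values programmatically instead of copying them from the console, the following sketch reads them with the boto3 CloudFormation describe_stacks call. The exact output key names come from the template and are not listed in this post, so treat any key names as assumptions and inspect the returned dictionary to find the ones you need.

```python
def stack_outputs(cfn_client, stack_name: str) -> dict[str, str]:
    """Map each OutputKey of a CloudFormation stack to its OutputValue.

    cfn_client is expected to be a boto3 'cloudformation' client.
    """
    stacks = cfn_client.describe_stacks(StackName=stack_name)["Stacks"]
    return {output["OutputKey"]: output["OutputValue"] for output in stacks[0].get("Outputs", [])}
```

For example, stack_outputs(boto3.client("cloudformation"), "<your stack name>") returns every output of the stack you just launched.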

Now you’re ready to connect to the EC2 instance using SSH.

- Open an SSH client.

- Locate the private key file for the key pair you specified when launching the CloudFormation stack.

- Change the permissions of the private key file to 400 (chmod 400 id_rsa).

- Connect to the instance using its public DNS or IP address. For example:

- Update the configuration file personalized-travel-itinerary-planner/core/data_feed_config.ini with the Region, workgroup name, and secret ARN that you saved earlier.

- Run the following command to create the database objects that contain the user information and travel booking data:

This command creates the travel schema along with the tables named user_profile and hotel_booking.
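The application needs the Region, workgroup name, and secret ARN you wrote to personalized-travel-itinerary-planner/core/data_feed_config.ini in order to reach Redshift. The following sketch reads them with Python’s configparser. The section name redshift and the keys region, workgroup_name, and secret_arn are assumptions, so match them to the keys the file in the repo actually uses.

```python
import configparser
from pathlib import Path

CONFIG_PATH = Path("personalized-travel-itinerary-planner/core/data_feed_config.ini")


def load_redshift_settings(path=CONFIG_PATH, section: str = "redshift") -> dict[str, str]:
    """Read the Region, workgroup name, and secret ARN from the app's .ini file.

    The section and key names are hypothetical placeholders.
    """
    parser = configparser.ConfigParser()
    if not parser.read(path):  # read() returns the list of files it parsed
        raise FileNotFoundError(f"config file not found: {path}")
    settings = parser[section]  # raises KeyError if the section is missing
    return {key: settings[key] for key in ("region", "workgroup_name", "secret_arn")}
```

Failing fast on a missing file or key surfaces configuration mistakes before the first Redshift query runs.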

- Run the following command to launch the web service:

In the next steps, you create a user account to log in to the app.

- On the Amazon Cognito console, choose User pools in the navigation pane.

- Select the user pool that was created as part of the CloudFormation stack (travelplanner-user-pool).

- Choose Create user.

- Enter a user name, email, and password, then choose Create user.

Now you can update the callback URL in Amazon Cognito.

- On the travelplanner-user-pool user pool details page, navigate to the App integration tab.

- In the App client list section, choose the client that you created (travelplanner-client).

- In the Hosted UI section, choose Edit.

- For URL, enter the URL that you copied from the CloudFormation stack output (make sure to use lowercase).

- Choose Save changes.

Test the solution

Now we can test the bot by asking it questions.

- In a new browser window, enter the URL you copied from the CloudFormation stack output and log in using the user name and password that you created. Change the password if prompted.

- Enter the user ID whose information you want to use (for this post, we use user ID 1028169).

- Ask the bot any question.

The following are some example questions:

- Can you plan a detailed itinerary for my July trip?

- Should I carry a jacket for my upcoming trip?

- Can you recommend some places to travel in March?

Using the user ID you provided, the prompt engineering module extracts the user details and designs a prompt that includes the question asked by the user, as shown in the following screenshot.

The highlighted text in the preceding screenshot is the user-specific information that was extracted from the Redshift database and stitched together with some additional instructions. The elements of the prompt, such as instruction, context, input data, and output indicator, are also called out.
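The stitching described above can be approximated as follows. This sketch is not the sample app’s actual prompt: the wording, the labels, and the dictionary keys (hypothetical column names from the user_profile and hotel_booking tables) are assumptions. It labels the instruction, context, input data (the user’s question), and output indicator, and derives the number of nights from the check-in and check-out dates so the model plans for the exact stay.

```python
from datetime import date


def build_itinerary_prompt(profile: dict, booking: dict, question: str) -> str:
    """Stitch hypothetical profile and booking fields into a labeled travel prompt."""
    check_in: date = booking["check_in_date"]
    check_out: date = booking["check_out_date"]
    nights = (check_out - check_in).days
    instruction = ("You are a travel assistant. Answer the traveler's question using "
                   "the trip details and preferences below.")
    context = (
        f"Traveler {profile['name']} lives in {profile['location']}. "
        f"Hobbies: {profile['hobbies']}. Interests: {profile['interests']}. "
        f"Favorite food: {profile['favorite_food']}. "
        f"Upcoming stay: {booking['city']}, {check_in:%B %d, %Y} to {check_out:%B %d, %Y} "
        f"({nights} nights)."
    )
    output_indicator = "Give a day-by-day plan covering each date of the stay."
    # The user's question plays the role of the input data element.
    return (f"Instruction: {instruction}\n\nContext: {context}\n\n"
            f"Question: {question}\n\nOutput format: {output_indicator}")
```

Only the context section changes from user to user; the instruction and output format stay constant, which keeps responses consistent across users.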

When we pass this prompt to the LLM, we get the following output. In this example, the LLM created a personalized travel itinerary for the specific dates of the user’s upcoming booking. It also took into account the user’s hobbies, interests, and favorite food while planning this itinerary.


Clean up

To avoid incurring ongoing charges, clean up your infrastructure.

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Select the stack that you created and choose Delete.

Conclusion

In this post, we demonstrated how to engineer prompts using data stored in Amazon Redshift and pass them to Amazon Bedrock to obtain an optimized response. This solution provides a simplified approach for building a generative AI application using proprietary data residing in your own database. By engineering tailored prompts based on the data in Amazon Redshift and having Amazon Bedrock generate responses, you can take advantage of generative AI in a customized way using your own datasets. This allows for more specific, relevant, and optimized output than would be possible with more generalized prompts. The post shows how you can integrate AWS services to create a generative AI solution that unlocks the full potential of these technologies with your data.

Stay up to date with the latest advancements in generative AI and start building on AWS. If you’re looking for help getting started, check out the Generative AI Innovation Center.

About the Authors

Ravikiran Rao is a Data Architect at AWS and is passionate about solving complex data challenges for various customers. Outside of work, he is a theatre enthusiast and an amateur tennis player.

Jigna Gandhi is a Sr. Solutions Architect at Amazon Web Services, based in the Greater New York City area. She has over 15 years of strong experience in leading several complex, highly robust, and massively scalable software solutions for large-scale enterprise applications.

Jason Pedreza is a Senior Redshift Specialist Solutions Architect at AWS with data warehousing experience handling petabytes of data. Prior to AWS, he built data warehouse solutions at Amazon.com and Amazon Devices. He specializes in Amazon Redshift and helps customers build scalable analytic solutions.

Roopali Mahajan is a Senior Solutions Architect with AWS based out of New York. She thrives on serving as a trusted advisor for her customers, helping them navigate their journey on the cloud. Her day is spent solving complex business problems by designing effective solutions using AWS services. During off-hours, she loves to spend time with her family and travel.
