Skeleton-based pose annotation labeling using Amazon SageMaker Ground Truth

Pose estimation is a computer vision technique that detects a set of points on objects (such as people or vehicles) within images or videos. Pose estimation has real-world applications in sports, robotics, security, augmented reality, media and entertainment, medical applications, and more. Pose estimation models are trained on images or videos that are annotated with a consistent set of points (coordinates) defined by a rig. To train accurate pose estimation models, you first need to acquire a large dataset of annotated images; many datasets have tens or hundreds of thousands of annotated images and take significant resources to build. Identifying and preventing labeling mistakes is important because the performance of pose estimation models is heavily influenced by the quality and volume of the labeled data.

In this post, we show how you can use a custom labeling workflow in Amazon SageMaker Ground Truth specifically designed for keypoint labeling. This custom workflow helps streamline the labeling process and minimize labeling errors, thereby reducing the cost of obtaining high-quality pose labels.

Importance of high-quality data and reducing labeling errors

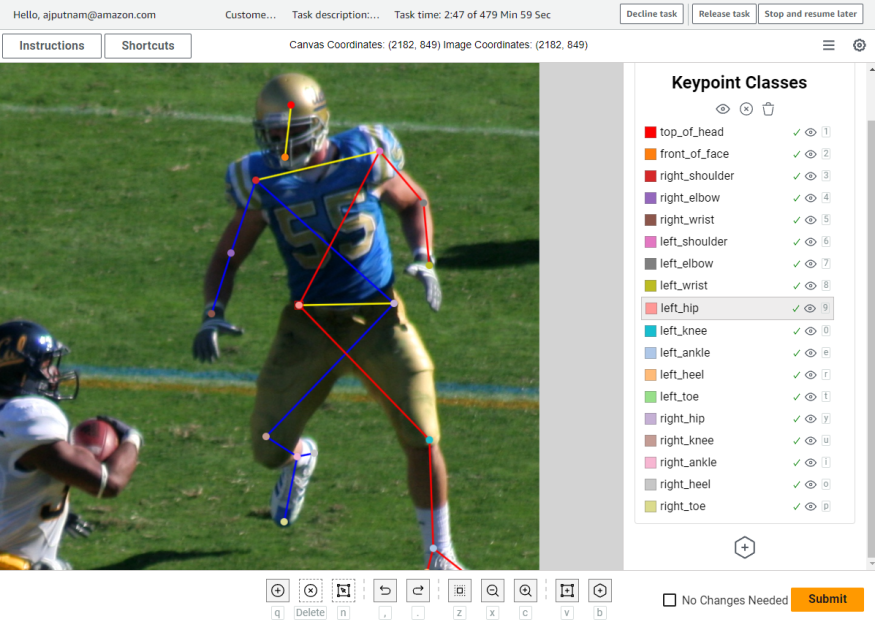

High-quality data is fundamental for training robust and reliable pose estimation models. The accuracy of these models is directly tied to the correctness and precision of the labels assigned to each pose keypoint, which, in turn, depends on the effectiveness of the annotation process. Additionally, a substantial volume of diverse, well-annotated data lets the model learn a broad range of poses, variations, and scenarios, leading to better generalization and performance across different real-world applications. Acquiring these large, annotated datasets involves human annotators who carefully label images with pose information. While labeling points of interest in an image, it’s useful for the annotator to see the skeletal structure of the object as visual guidance. This helps catch labeling errors, such as left-right swaps or mislabels (for example, marking a foot as a shoulder), before they are incorporated into the dataset. For example, a left-right swap like the one in the following example is easy to spot from the crossing skeleton rig lines and the mismatched colors. These visual cues help labelers recognize mistakes and result in a cleaner set of labels.

Due to the manual nature of labeling, obtaining large and accurate labeled datasets can be cost-prohibitive, and even more so with an inefficient labeling system. Therefore, labeling efficiency and accuracy are critical when designing your labeling workflow. In this post, we demonstrate how to use a custom SageMaker Ground Truth labeling workflow to quickly and accurately annotate images, reducing the burden of developing large datasets for pose estimation workflows.

Overview of solution

This solution provides an online web portal where the labeling workforce can use a web browser to log in, access labeling jobs, and annotate images using the crowd-2d-skeleton user interface (UI), a custom UI designed for keypoint and pose labeling using SageMaker Ground Truth. The annotations or labels created by the labeling workforce are then exported to an Amazon Simple Storage Service (Amazon S3) bucket, where they can be used for downstream processes like training deep learning computer vision models. This solution walks you through how to set up and deploy the necessary components to create a web portal, as well as how to create labeling jobs for this labeling workflow.

The following is a diagram of the overall architecture.

This architecture consists of several key components, each of which we explain in more detail in the following sections. The architecture provides the labeling workforce with an online web portal hosted by SageMaker Ground Truth. This portal allows each labeler to log in and see their labeling jobs. After they’ve logged in, the labeler can select a labeling job and begin annotating images using the custom UI hosted by Amazon CloudFront. We use AWS Lambda functions for pre-annotation and post-annotation data processing.

The following screenshot is an example of the UI.

The labeler can mark specific keypoints on the image using the UI. The lines between keypoints are drawn automatically based on the skeleton rig definition that the UI uses. The UI allows many customizations, such as the following:

- Custom keypoint names

- Configurable keypoint colors

- Configurable rig line colors

- Configurable skeleton and rig structures

Each of these is a targeted feature to improve the ease and flexibility of labeling. Specific UI customization details can be found in the GitHub repo and are summarized later in this post. Note that in this post, we use human pose estimation as a baseline task, but you can extend it to labeling object pose with a predefined rig for other objects as well, such as animals or vehicles. In the following example, we show how this can be applied to label the points of a box truck.

SageMaker Ground Truth

In this solution, we use SageMaker Ground Truth to provide the labeling workforce with an online portal and a way to manage labeling jobs. This post assumes that you’re familiar with SageMaker Ground Truth. For more information, refer to Amazon SageMaker Ground Truth.

CloudFront distribution

For this solution, the labeling UI requires a custom-built JavaScript component called the crowd-2d-skeleton component. This component can be found on GitHub as part of Amazon’s open source initiatives. The CloudFront distribution hosts crowd-2d-skeleton.js, which is required by the SageMaker Ground Truth UI. The CloudFront distribution is assigned an origin access identity (OAI), which allows it to access crowd-2d-skeleton.js in the S3 bucket. The S3 bucket remains private, and no other objects in the bucket are available through the CloudFront distribution, because a bucket policy restricts what the origin access identity can read. This is a recommended practice for following the principle of least privilege.
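The least-privilege setup described above can be sketched as a bucket policy document. This is a minimal sketch, not the policy that the repository’s AWS CDK stack generates: the bucket name, the OAI ID `E2EXAMPLE`, and the object key are placeholder assumptions. The important detail is that `Resource` names a single object, so the OAI can read only the UI component and nothing else in the private bucket.

```python
import json


def build_ui_bucket_policy(bucket_name: str, oai_id: str, js_key: str) -> dict:
    """Return an S3 bucket policy letting one CloudFront OAI read exactly one object.

    bucket_name, oai_id, and js_key are caller-supplied placeholders.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontReadOfUiComponentOnly",
                "Effect": "Allow",
                # OAI principals are expressed as a special CloudFront IAM ARN.
                "Principal": {
                    "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {oai_id}"
                },
                "Action": "s3:GetObject",
                # Scoping Resource to a single key keeps every other object private.
                "Resource": f"arn:aws:s3:::{bucket_name}/{js_key}",
            }
        ],
    }


policy = build_ui_bucket_policy("example-labeling-bucket", "E2EXAMPLE", "crowd-2d-skeleton.js")
print(json.dumps(policy, indent=2))
```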

Amazon S3 bucket

We use the S3 bucket to store the SageMaker Ground Truth input and output manifest files, the custom UI template, the images for the labeling jobs, and the JavaScript code needed for the custom UI. This bucket is private and not accessible to the public. The bucket also has a bucket policy that restricts the CloudFront distribution to accessing only the JavaScript code needed for the UI. This prevents the CloudFront distribution from serving any other object in the S3 bucket.

Pre-annotation Lambda function

SageMaker Ground Truth labeling jobs typically use an input manifest file in JSON Lines format. This input manifest file contains metadata for a labeling job, acts as a reference to the data that needs to be labeled, and helps configure how the data should be presented to the annotators. The pre-annotation Lambda function processes items from the input manifest file before the manifest data is passed to the custom UI template. This is where any formatting or special modifications to the items can be done before the data is presented to the annotators in the UI. For more information on pre-annotation Lambda functions, see Pre-annotation Lambda.
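The following is a minimal sketch of a pre-annotation handler, assuming the standard Ground Truth contract in which the manifest line arrives as `event["dataObject"]` and the function returns a `taskInput` object. The `taskInput` key names (`image_s3_uri`, `initial_values`) are illustrative assumptions; they must match whatever variables your UI template actually references, and the repository’s function may differ.

```python
import json


def lambda_handler(event, context):
    """Pre-annotation Lambda sketch: map one input manifest line to a taskInput.

    Ground Truth passes the manifest line as event["dataObject"]; the returned
    taskInput is what the Liquid template can reference when it renders.
    """
    data_object = event["dataObject"]

    # source-ref holds the S3 URI of the image to be labeled.
    image_s3_uri = data_object["source-ref"]

    # Pre-annotations are optional. Accept either a list or a JSON string,
    # since a manifest may store the array in either form (assumption).
    annotations = data_object.get("annotations", [])
    if isinstance(annotations, str):
        annotations = json.loads(annotations) if annotations else []

    return {
        "taskInput": {
            # Hypothetical key names; keep them in sync with the UI template.
            "image_s3_uri": image_s3_uri,
            "initial_values": annotations,
        }
    }


if __name__ == "__main__":
    example_event = {
        "dataObject": {
            "source-ref": "s3://example-labeling-bucket/images/img_0001.jpg",
            "annotations": [],
        }
    }
    print(json.dumps(lambda_handler(example_event, None), indent=2))
```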

Post-annotation Lambda function

Similar to the pre-annotation Lambda function, the post-annotation function handles any additional data processing you may want to do after all the labelers have finished labeling but before the final annotation output is written. This Lambda function is responsible for formatting the data for the labeling job output. In this solution, we simply use it to return the data in our desired output format. For more information on post-annotation Lambda functions, see Post-annotation Lambda.
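A sketch of the consolidation step follows, assuming the documented Ground Truth payload shape (a list of dataset objects, each with `datasetObjectId` and worker `annotations` whose `annotationData.content` is a JSON string) and a single worker per data object. It only passes the worker’s submission through under the label attribute name; it does no multi-worker voting, and it is not the repository’s implementation.

```python
import json


def consolidate(dataset_objects, label_attribute_name):
    """Return one consolidated annotation per dataset object.

    Assumes one worker per data object, so the first worker's submission is
    used as-is. Objects with no submissions are skipped.
    """
    results = []
    for obj in dataset_objects:
        annotations = obj.get("annotations", [])
        if not annotations:
            continue
        first = annotations[0]
        # The UI submission arrives as a JSON string in annotationData.content.
        content = json.loads(first["annotationData"]["content"])
        results.append(
            {
                "datasetObjectId": obj["datasetObjectId"],
                "consolidatedAnnotation": {
                    "content": {label_attribute_name: content}
                },
            }
        )
    return results


# In a real post-annotation Lambda, event["payload"]["s3Uri"] points to a JSON
# file containing dataset_objects; the handler would download it (for example
# with boto3) and return consolidate(dataset_objects, event["labelAttributeName"]).
```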

Post-annotation Lambda function role

We use an AWS Identity and Access Management (IAM) role to give the post-annotation Lambda function access to the S3 bucket. This is needed to read the annotation results and make any modifications before writing the final results to the output manifest file.

SageMaker Ground Truth role

We use this IAM role to give the SageMaker Ground Truth labeling job the ability to invoke the Lambda functions and to read the images, manifest files, and custom UI template in the S3 bucket.

Prerequisites

For this walkthrough, you should have the following prerequisites:

For this solution, we use the AWS CDK to deploy the architecture. Then we create a sample labeling job, use the annotation portal to label the images in the labeling job, and examine the labeling results.

Create the AWS CDK stack

After you complete all the prerequisites, you’re ready to deploy the solution.

Set up your resources

Complete the following steps to set up your resources:

- Download the example stack from the GitHub repo.

- Use the cd command to change into the repository.

- Create your Python environment and install the required packages (see the repository README.md for more details).

- With your Python environment activated, run the following command:

- Run the following command to deploy the AWS CDK stack:

- Run the following command to run the post-deployment script:

Create a labeling job

After you have set up your resources, you’re ready to create a labeling job. For the purposes of this post, we create a labeling job using the example scripts and images provided in the repository.

- cd into the scripts directory in the repository.

- Download the example images from the internet by running the following code:

This script downloads a set of 10 images, which we use in our example labeling job. We review how to use your own custom input data later in this post.

- Create a labeling job by running the following code:

This script takes a SageMaker Ground Truth private workforce ARN as an argument, which should be the ARN of a workforce in the same account you deployed this architecture into. The script creates the input manifest file for our labeling job, uploads it to Amazon S3, and creates a SageMaker Ground Truth custom labeling job. We take a deeper dive into the details of this script later in this post.

Label the dataset

After you have launched the example labeling job, it appears on the SageMaker console as well as in the workforce portal.

In the workforce portal, select the labeling job and choose Start working.

You’ll be presented with an image from the example dataset. At this point, you can use the custom crowd-2d-skeleton UI to annotate the images. You can familiarize yourself with the crowd-2d-skeleton UI by referring to User Interface Overview. We use the rig definition from the COCO keypoint detection dataset challenge as the human pose rig. To reiterate, you can customize this rig with our custom UI component to remove or add points based on your requirements.

When you’re finished annotating an image, choose Submit. This takes you to the next image in the dataset until all images are labeled.

Access the labeling results

When you have finished labeling all the images in the labeling job, SageMaker Ground Truth invokes the post-annotation Lambda function and produces an output.manifest file containing all of the annotations. This output.manifest is stored in the S3 bucket. In our case, the output manifest should be located at the S3 URI s3://<bucket name>/labeling_jobs/output/<labeling job name>/manifests/output/output.manifest. The output.manifest file is a JSON Lines file, where each line corresponds to a single image and its annotations from the labeling workforce. Each JSON Lines item is a JSON object with many fields. The field we’re interested in is called label-results. The value of this field is an object containing the following fields:

- dataset_object_id – The ID or index of the input manifest item

- data_object_s3_uri – The image’s Amazon S3 URI

- image_file_name – The image’s file name

- image_s3_location – The image’s Amazon S3 URL

- original_annotations – The original annotations (only set and used if you’re using a pre-annotation workflow)

- updated_annotations – The annotations for the image

- worker_id – The workforce worker who made the annotations

- no_changes_needed – Whether the no changes needed check box was selected

- was_modified – Whether the annotation data differs from the original input data

- total_time_in_seconds – The time it took the workforce worker to annotate the image

With these fields, you can access the annotation results for each image and compute statistics such as the average time to label an image.
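As a sketch of that kind of calculation, the following function reads output manifest lines and averages `total_time_in_seconds`. It assumes, as described above, that each line is a JSON object whose `label-results` value is an object with that field; the file name in the usage comment is the one from the S3 path above, downloaded locally.

```python
import json
from statistics import mean


def average_labeling_time(manifest_lines):
    """Average total_time_in_seconds across all images in an output manifest.

    Each non-blank line is one JSON object; the per-image details live under
    the "label-results" field. Returns 0.0 when there are no records.
    """
    times = []
    for line in manifest_lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines, such as a trailing newline
        record = json.loads(line)
        times.append(float(record["label-results"]["total_time_in_seconds"]))
    return mean(times) if times else 0.0


# Usage, after downloading output.manifest from the bucket:
# with open("output.manifest", encoding="utf-8") as f:
#     print(average_labeling_time(f))
```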

Create your own labeling jobs

Now that we have created an example labeling job and you understand the overall process, we walk you through the code responsible for creating the manifest file and launching the labeling job. We focus on the key parts of the script that you may want to modify to launch your own labeling jobs.

We cover snippets of code from the create_example_labeling_job.py script located in the GitHub repository. The script starts by setting up variables that are used later in the script. Some of the variables are hard-coded for simplicity, while others, which are stack dependent, are imported dynamically at runtime by fetching the values created by our AWS CDK stack.

The first key section in this script is the creation of the manifest file. Recall that the manifest file is a JSON Lines file that contains the details for a SageMaker Ground Truth labeling job. Each JSON Lines object represents one item (for example, an image) that needs to be labeled. For this workflow, the object should contain the following fields:

- source-ref – The Amazon S3 URI of the image you want to label.

- annotations – A list of annotation objects, which is used for pre-annotation workflows. See the crowd-2d-skeleton documentation for more details on the expected values.

The script creates a manifest line for each image in the image directory using the following section of code:

If you want to use different images or point to a different image directory, you can modify that section of the code. Additionally, if you’re using a pre-annotation workflow, you can update the annotations array with a JSON string consisting of the array and all its annotation objects. The format of this array is documented in the crowd-2d-skeleton documentation.
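The following is a minimal sketch of building manifest lines with the two fields listed above; it is not the repository’s code. The bucket name, image prefix, and `annotations_by_image` mapping are hypothetical inputs, and the sketch stores `annotations` as a list. Check the crowd-2d-skeleton documentation for the exact form your workflow expects.

```python
import json


def build_manifest_lines(bucket, image_prefix, image_names, annotations_by_image=None):
    """Return JSON Lines text with one manifest object per image.

    annotations_by_image optionally maps an image name to a list of
    pre-annotation objects; images without an entry get an empty list.
    """
    annotations_by_image = annotations_by_image or {}
    prefix = image_prefix.strip("/")
    lines = []
    for name in sorted(image_names):  # sorted for a deterministic manifest order
        key = f"{prefix}/{name}" if prefix else name
        item = {
            "source-ref": f"s3://{bucket}/{key}",
            "annotations": annotations_by_image.get(name, []),
        }
        lines.append(json.dumps(item))
    # JSON Lines: one object per line, newline-terminated.
    return ("\n".join(lines) + "\n") if lines else ""


print(build_manifest_lines("example-labeling-bucket", "images/", ["img_0002.jpg", "img_0001.jpg"]))
```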

With the manifest line objects created, you can create the manifest file and upload it to the S3 bucket you created earlier:
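The upload step can be sketched as a small function that takes an S3 client, assumed to be a Boto3 client such as `boto3.client("s3")`, and calls `put_object`. The object key in the usage comment is a hypothetical location; the repository script chooses its own.

```python
def upload_manifest(s3_client, bucket, key, manifest_text):
    """Upload JSON Lines manifest text to S3 and return its s3:// URI.

    s3_client is expected to behave like boto3.client("s3").
    """
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=manifest_text.encode("utf-8"),
    )
    return f"s3://{bucket}/{key}"


# Usage (requires AWS credentials; the key below is hypothetical):
# import boto3
# uri = upload_manifest(boto3.client("s3"), "<bucket name>",
#                       "labeling_jobs/input/my-job/input.manifest", manifest_text)
```

Passing the client in, rather than creating it inside the function, keeps the function easy to test with a stand-in object.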

Now that you have created a manifest file containing the images you want to label, you can create a labeling job. You can create the labeling job programmatically using the AWS SDK for Python (Boto3). The code to create a labeling job is as follows:

The parts of this code you may want to modify are LabelingJobName, TaskTitle, and TaskDescription. LabelingJobName is the unique name of the labeling job that SageMaker uses to reference your job; it is also the name that appears on the SageMaker console. TaskTitle serves a similar purpose, but doesn’t need to be unique; it is the name of the job that appears in the workforce portal. You may want to make these more specific to what you’re labeling or what the labeling job is for. Lastly, the TaskDescription field appears in the workforce portal to give labelers extra context about the task, such as instructions and guidance. For more information on these fields as well as the others, refer to the create_labeling_job documentation.
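The following sketch assembles keyword arguments for Boto3’s `create_labeling_job` so the fields discussed above are easy to see in one place. It is not the repository’s code: every ARN and URI is a caller-supplied placeholder, `LabelAttributeName="label-results"` is an assumption chosen to match the output field described earlier, and the worker count and time limit are illustrative values. The name check mirrors the `LabelingJobName` pattern in the SageMaker API reference (letters, digits, and hyphens, 1 to 63 characters).

```python
import re

# Allowed LabelingJobName: alphanumerics separated by hyphens, 1-63 characters.
JOB_NAME_PATTERN = re.compile(r"[a-zA-Z0-9](-*[a-zA-Z0-9]){0,62}")


def build_labeling_job_request(
    job_name,
    manifest_s3_uri,
    output_s3_path,
    role_arn,
    workteam_arn,
    ui_template_s3_uri,
    pre_annotation_lambda_arn,
    post_annotation_lambda_arn,
    task_title,
    task_description,
):
    """Assemble keyword arguments for SageMaker's create_labeling_job call."""
    if not JOB_NAME_PATTERN.fullmatch(job_name):
        raise ValueError(f"invalid labeling job name: {job_name!r}")
    return {
        "LabelingJobName": job_name,  # unique; shown on the SageMaker console
        "LabelAttributeName": "label-results",  # assumed output field name
        "InputConfig": {"DataSource": {"S3DataSource": {"ManifestS3Uri": manifest_s3_uri}}},
        "OutputConfig": {"S3OutputPath": output_s3_path},
        "RoleArn": role_arn,
        "HumanTaskConfig": {
            "WorkteamArn": workteam_arn,
            "UiConfig": {"UiTemplateS3Uri": ui_template_s3_uri},
            "PreHumanTaskLambdaArn": pre_annotation_lambda_arn,
            "TaskTitle": task_title,  # shown in the workforce portal
            "TaskDescription": task_description,  # instructions for labelers
            "NumberOfHumanWorkersPerDataObject": 1,
            "TaskTimeLimitInSeconds": 3600,
            "AnnotationConsolidationConfig": {
                "AnnotationConsolidationLambdaArn": post_annotation_lambda_arn
            },
        },
    }


# Usage (requires AWS credentials and existing resources):
# import boto3
# request = build_labeling_job_request(...)
# boto3.client("sagemaker").create_labeling_job(**request)
```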

Make adjustments to the UI

In this section, we go over some of the ways you can customize the UI. The following are the most common customizations for adapting the UI to your modeling task:

- You can define which keypoints can be labeled. This includes the name of the keypoint and its color.

- You can change the structure of the skeleton (which keypoints are connected).

- You can change the line colors for specific lines between specific keypoints.

All of these UI customizations are configurable through arguments passed into the crowd-2d-skeleton component, which is the JavaScript component used in this custom workflow template. In this template, you will find the usage of the crowd-2d-skeleton component. A simplified version is shown in the following code:

In the preceding code example, you can see the following attributes on the component: imgSrc, keypointClasses, skeletonRig, skeletonBoundingBox, and initialValues. We describe each attribute’s purpose in the following sections, but customizing the UI is as straightforward as changing the values of these attributes, saving the template, and rerunning the post_deployment_script.py we used previously.

imgSrc attribute

The imgSrc attribute controls which image to show in the UI when labeling. Usually, a different image is used for each manifest line item, so this attribute is often populated dynamically using the built-in Liquid templating language. You can see in the previous code example that the attribute value is a Liquid expression that uses the grant_read_access filter; this expression is replaced with the actual image_s3_uri value when the template is rendered. The rendering process starts when the user opens an image for annotation. This process takes a line item from the input manifest file and sends it to the pre-annotation Lambda function as event.dataObject. The pre-annotation function takes the information it needs from the line item and returns a taskInput dictionary, which is then passed to the Liquid rendering engine, which replaces any Liquid variables in your template. For example, let’s say you have a manifest file with the following line:

This data would be passed to the pre-annotation function. The following code shows how the function extracts the values from the event object:

The object returned from the function in this case would look like the following code:

The data returned from the function is then available to the Liquid template engine, which replaces the template values with the data values returned by the function. The result would be something like the following code:

keypointClasses attribute

The keypointClasses attribute defines which keypoints appear in the UI and are used by the annotators. This attribute takes a JSON string containing a list of objects, where each object represents a keypoint. Each keypoint object should contain the following fields:

- id – A unique value that identifies the keypoint.

- color – The color of the keypoint, represented as an HTML hex color.

- label – The name or keypoint class.

- x – This optional attribute is only needed if you want to use the draw skeleton functionality in the UI. The value is the x position of the keypoint relative to the skeleton’s bounding box, usually obtained with the Skeleton Rig Creator tool. If you’re doing keypoint annotations and don’t need to draw an entire skeleton at once, you can set this value to 0.

- y – This optional attribute is similar to x, but for the vertical dimension.

For more information on the keypointClasses attribute, see the keypointClasses documentation.
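Because keypointClasses is passed as a JSON string, it can help to generate it rather than hand-edit it. The following sketch builds the value from (label, color) pairs and sets x and y to 0, as described above for workflows that don’t use the draw skeleton feature. Using the list index as a string id and accepting only six-digit `#RRGGBB` colors are illustrative choices, not requirements of the component.

```python
import json
import re

HEX_COLOR = re.compile(r"#[0-9a-fA-F]{6}")


def keypoint_classes_json(keypoints):
    """Serialize (label, color) pairs into a keypointClasses attribute value.

    Rejects duplicate labels and colors that are not #RRGGBB hex strings.
    """
    classes = []
    seen = set()
    for index, (label, color) in enumerate(keypoints):
        if label in seen:
            raise ValueError(f"duplicate keypoint label: {label}")
        if not HEX_COLOR.fullmatch(color):
            raise ValueError(f"expected a #RRGGBB hex color, got {color!r}")
        seen.add(label)
        # x and y are 0 because the draw skeleton feature is not used here.
        classes.append({"id": str(index), "color": color, "label": label, "x": 0, "y": 0})
    return json.dumps(classes)


print(keypoint_classes_json([("nose", "#FF0000"), ("left_eye", "#00FF00"), ("right_eye", "#0000FF")]))
```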

skeletonRig attribute

The skeletonRig attribute controls which keypoints have lines drawn between them. This attribute takes a JSON string containing a list of keypoint label pairs, where each pair tells the UI which two keypoints to connect. For example, '[["left_ankle","left_knee"],["left_knee","left_hip"]]' tells the UI to draw a line between "left_ankle" and "left_knee" and another between "left_knee" and "left_hip". This value can be generated with the Skeleton Rig Creator tool.
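If you write the rig by hand instead, a small check that every pair references a defined keypoint label catches typos before they reach the labeling UI. The following is a sketch under that assumption; the function name is hypothetical, and the labels come from the example above.

```python
import json


def skeleton_rig_json(edges, keypoint_labels):
    """Serialize keypoint label pairs into a skeletonRig attribute value.

    Every endpoint must be one of keypoint_labels (the labels defined in
    keypointClasses), and a line must connect two different keypoints.
    """
    known = set(keypoint_labels)
    rig = []
    for start, end in edges:
        undefined = sorted({start, end} - known)
        if undefined:
            raise ValueError(f"rig references undefined keypoints: {undefined}")
        if start == end:
            raise ValueError(f"a rig line needs two different keypoints: {start}")
        rig.append([start, end])
    return json.dumps(rig)


labels = ["left_ankle", "left_knee", "left_hip"]
print(skeleton_rig_json([("left_ankle", "left_knee"), ("left_knee", "left_hip")], labels))
```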

skeletonBoundingBox attribute

The skeletonBoundingBox attribute is optional and only needed if you want to use the draw skeleton functionality in the UI, which lets annotators place an entire skeleton with a single annotation action. We don’t cover this feature in this post. The value of this attribute is the skeleton’s bounding box dimensions. If you’re doing keypoint annotations and don’t need to draw an entire skeleton at once, you can set this value to null. We recommend using the Skeleton Rig Creator tool to obtain this value.

initialValues attribute

The initialValues attribute is used to pre-populate the UI with annotations obtained from another process (such as another labeling job or a machine learning model). This is useful for adjustment or review jobs. The data for this field is usually populated dynamically in the same way as described for the imgSrc attribute. More details can be found in the crowd-2d-skeleton documentation.

Clean up

To avoid incurring future charges, delete the objects in your S3 bucket and delete your AWS CDK stack. You can delete your S3 objects using the Amazon S3 console or the AWS Command Line Interface (AWS CLI). After you have deleted all of the objects in the bucket, you can destroy the AWS CDK stack by running the following code:
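If you prefer to empty the bucket from Python, the following sketch pages through `list_objects_v2` and deletes each page with `delete_objects`. It assumes an unversioned bucket and a client that behaves like `boto3.client("s3")`; a versioned bucket would also need its object versions and delete markers removed, which this sketch does not handle. Running it deletes data irreversibly.

```python
def empty_bucket(s3_client, bucket):
    """Delete every object in an unversioned S3 bucket; return how many were deleted.

    s3_client is expected to behave like boto3.client("s3").
    """
    deleted = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        # Pages hold at most 1,000 keys, which is also the delete_objects limit.
        objects = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if not objects:
            continue
        response = s3_client.delete_objects(Bucket=bucket, Delete={"Objects": objects})
        errors = response.get("Errors", [])
        if errors:
            raise RuntimeError(f"failed to delete {len(errors)} object(s): {errors[:3]}")
        deleted += len(objects)
    return deleted


# Usage (requires AWS credentials; irreversibly deletes data):
# import boto3
# empty_bucket(boto3.client("s3"), "<bucket name>")
```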

This removes the resources you created earlier.

Considerations

Additional steps may be needed to productionize your workflow. Depending on your organization’s risk profile, consider the following:

- Adding access and application logging

- Adding a web application firewall (WAF)

- Adjusting IAM permissions to follow least privilege

Conclusion

In this post, we discussed the importance of labeling efficiency and accuracy in building pose estimation datasets. To help with both, we showed how you can use SageMaker Ground Truth to build custom labeling workflows that support skeleton-based pose labeling tasks, improving efficiency and precision during the labeling process. We also showed how you can extend the code and examples to various custom pose estimation labeling requirements.

We encourage you to use this solution for your labeling tasks and to engage with AWS for support or inquiries related to custom labeling workflows.

About the Authors

Arthur Putnam is a Full-Stack Data Scientist in AWS Professional Services. Arthur’s expertise is centered on developing and integrating front-end and back-end technologies into AI systems. Outside of work, Arthur enjoys exploring the latest advancements in technology, spending time with his family, and enjoying the outdoors.

Ben Fenker is a Senior Data Scientist in AWS Professional Services and has helped customers build and deploy ML solutions in industries ranging from sports to healthcare to manufacturing. He has a Ph.D. in physics from Texas A&M University and 6 years of industry experience. Ben enjoys baseball, reading, and raising his kids.

Jarvis Lee is a Senior Data Scientist with AWS Professional Services. He has been with AWS for over six years, working with customers on machine learning and computer vision problems. Outside of work, he enjoys riding bicycles.
