This AI Paper Unveils Mixed-Precision Training for Fourier Neural Operators: Bridging Efficiency and Precision in High-Resolution PDE Solutions

Neural operators, especially Fourier Neural Operators (FNOs), have revolutionized how researchers approach solving partial differential equations (PDEs), a cornerstone problem in science and engineering. These operators have shown exceptional promise in learning mappings between function spaces, which is pivotal for accurately simulating phenomena such as climate and fluid dynamics. Despite their potential, training these models requires substantial computational resources, especially GPU memory and processing power, which poses significant challenges.

The core problem the research addresses is how to make neural operator training more feasible for real-world applications. Traditional training approaches demand high-resolution data, which in turn requires extensive memory and computation time and limits the scalability of these models. The issue is especially pronounced when neural operators are deployed to solve complex PDEs across various scientific domains.

While effective, existing methods for training neural operators are inefficient in both memory usage and computational speed. These limitations become serious obstacles with high-resolution data, which is necessary to ensure the accuracy and reliability of the solutions neural operators produce. There is therefore a pressing need for approaches that mitigate these costs without compromising model performance.

The research introduces a mixed-precision training technique for neural operators, notably the FNO, that aims to reduce memory requirements and significantly increase training speed. The method leverages the approximation error already inherent in neural operator learning (for example, from representing functions on a finite grid): because that error is present anyway, the additional rounding error introduced by lower precision can be small by comparison, so full precision throughout training is not always necessary. By carefully analyzing both the approximation error and the precision error in FNOs, the researchers establish that a strategic reduction in precision still maintains a tight approximation bound, preserving the model's accuracy while reducing memory use.
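To make the error-budget argument concrete, here is a toy illustration in Python. It is not the paper's analysis and trains no model. It assumes a simple stand-in problem: integrating sin(x) over [0, π] (exact value 2) with a 16-point midpoint rule, where the coarse grid plays the role of approximation error, and then rounding the samples to IEEE half precision (via the standard-library `struct` format `"e"`) to measure the extra precision error. The helper names are hypothetical.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def midpoint_integral(samples: list[float], h: float) -> float:
    """Midpoint-rule estimate of an integral from samples at cell centers."""
    return h * math.fsum(samples)


def error_budget(n: int) -> tuple[float, float]:
    """Compare discretization error with the extra error from fp16 rounding.

    Toy stand-in (an assumption, not the paper's setup): integrate sin(x)
    on [0, pi], whose exact value is 2, using n midpoint samples.
    """
    h = math.pi / n
    samples = [math.sin((i + 0.5) * h) for i in range(n)]
    full = midpoint_integral(samples, h)
    half = midpoint_integral([to_fp16(s) for s in samples], h)
    approximation_error = abs(full - 2.0)  # error from using only n samples
    precision_error = abs(half - full)     # extra error from storing samples in fp16
    return approximation_error, precision_error


if __name__ == "__main__":
    approx_err, prec_err = error_budget(16)
    print(f"approximation error: {approx_err:.2e}")
    print(f"precision error:     {prec_err:.2e}")
```

On this coarse grid the discretization error is about 3e-3, while the fp16 rounding error is bounded by roughly 2^-11 times the integral (under 1e-3). Refining the grid shrinks the discretization error (roughly as 1/n^2 for this rule) while the worst-case rounding bound stays near 2^-11 × 2, so which term dominates depends on resolution.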

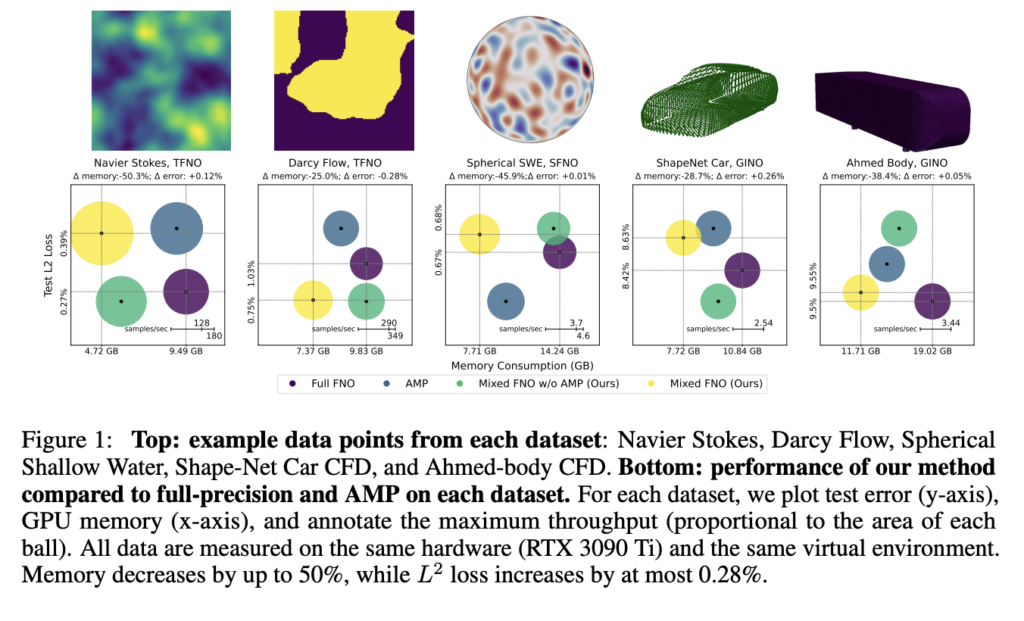

Delving deeper, the proposed method targets tensor contractions, a memory-intensive step in FNO training, and selectively performs them at reduced precision. This addresses limitations of existing mixed-precision methods. In extensive experiments, the approach reduces GPU memory usage by up to 50% and improves training throughput by 58% without a significant loss in accuracy.
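The tensor contraction mentioned above can be sketched in plain Python. In the common FNO formulation, a spectral layer transforms each input channel to Fourier space, keeps a fixed number of low-frequency modes, and mixes channels mode by mode with learned complex weights. The sketch below is a simulation under stated assumptions, not the authors' implementation: it uses a naive DFT, random data and weights, hypothetical helper names (`spectral_contract`, `demo`), and emulates half precision by rounding values to IEEE fp16 with the standard-library `struct` module rather than running on a GPU.

```python
import cmath
import math
import random
import struct


def fp16(x: float) -> float:
    """Round a float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def cfp16(z: complex) -> complex:
    """Simulate a half-precision complex number (fp16 real and imaginary parts)."""
    return complex(fp16(z.real), fp16(z.imag))


def truncated_dft(signal: list[float], modes: int) -> list[complex]:
    """First `modes` Fourier coefficients of a real 1-D signal (naive DFT)."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(modes)
    ]


def spectral_contract(x_hat, weights, half: bool):
    """out[o][k] = sum_i x_hat[i][k] * weights[i][o][k]  (einsum "ik,iok->ok").

    With half=True, the operands, each complex product and each partial sum
    are rounded to simulated fp16; the arithmetic itself still runs in float64.
    """
    cast = cfp16 if half else (lambda z: z)
    in_ch, out_ch, modes = len(weights), len(weights[0]), len(weights[0][0])
    out = []
    for o in range(out_ch):
        row = []
        for k in range(modes):
            acc = 0j
            for i in range(in_ch):
                prod = cast(cast(x_hat[i][k]) * cast(weights[i][o][k]))
                acc = cast(acc + prod)
            row.append(acc)
        out.append(row)
    return out


def relative_error(approx, exact) -> float:
    """Frobenius-norm relative error between two nested lists of complex numbers."""
    num = sum(abs(a - e) ** 2 for row_a, row_e in zip(approx, exact) for a, e in zip(row_a, row_e))
    den = sum(abs(e) ** 2 for row in exact for e in row)
    return math.sqrt(num / den)


def demo(seed: int = 0, in_ch: int = 4, out_ch: int = 4, n: int = 32, modes: int = 8):
    """Hypothetical toy run: returns (relative error, complex64 bytes, fp16-complex bytes)."""
    rng = random.Random(seed)
    signals = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(in_ch)]
    x_hat = [truncated_dft(s, modes) for s in signals]
    weights = [
        [[complex(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(modes)]
         for _ in range(out_ch)]
        for _ in range(in_ch)
    ]
    full = spectral_contract(x_hat, weights, half=False)
    half = spectral_contract(x_hat, weights, half=True)
    # Bytes to store x_hat: complex64 is 2 x 4 bytes, half-precision complex is 2 x 2.
    elements = in_ch * modes
    return relative_error(half, full), elements * 8, elements * 4


if __name__ == "__main__":
    err, full_bytes, half_bytes = demo()
    print(f"relative error of fp16 contraction: {err:.1e}")
    print(f"x_hat storage: {full_bytes} B (complex64) vs {half_bytes} B (fp16 complex)")
```

Storing each complex value as two fp16 numbers instead of two fp32 numbers halves the bytes for any tensor kept in that format, which is the basic source of memory savings in mixed-precision training, while the contraction's relative error stays small on this toy data. Real implementations also have to guard against fp16 overflow (its largest finite value is 65504) and decide which steps keep full precision, which this sketch ignores.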

The results demonstrate the method's effectiveness across various datasets and neural operator models, underscoring its potential to transform neural operator training. By achieving comparable accuracy with significantly fewer computational resources, this mixed-precision training approach paves the way for more scalable and efficient solutions to complex PDE-based problems in science and engineering.

In conclusion, the research offers a compelling solution to the computational challenges of training neural operators to solve PDEs. By introducing a mixed-precision training method, the research team has opened new avenues for making these powerful models more accessible and practical for real-world applications. The approach conserves valuable computational resources while maintaining the high accuracy essential for scientific computing, marking a significant step forward in computational science.

Check out the Paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and will soon be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.