Enhance Amazon Connect and Lex with generative AI capabilities

Effective self-service options are becoming increasingly critical for contact centers, but implementing them well presents unique challenges.

Amazon Lex provides your Amazon Connect contact center with chatbot functionalities such as automatic speech recognition (ASR) and natural language understanding (NLU) capabilities through voice and text channels. The bot takes natural language speech or text input, recognizes the intent behind the input, and fulfills the user’s intent by invoking the appropriate response.

Callers can have diverse accents, pronunciation, and grammar. Combined with background noise, this can make it challenging for speech recognition to accurately understand statements. For example, “I want to track my order” may be misrecognized as “I want to truck my holder.” Failed intents like these frustrate customers who have to repeat themselves, get routed incorrectly, or are escalated to live agents, costing businesses more.

Amazon Bedrock democratizes foundation model (FM) access for developers to effortlessly build and scale generative AI-based applications for the modern contact center. FMs delivered by Amazon Bedrock, such as Amazon Titan and Anthropic Claude, are pretrained on internet-scale datasets that give them strong NLU capabilities such as sentence classification, question answering, and enhanced semantic understanding despite speech recognition errors.

In this post, we explore a solution that uses FMs delivered by Amazon Bedrock to enhance intent recognition of Amazon Lex integrated with Amazon Connect, ultimately delivering an improved self-service experience for your customers.

Overview of solution

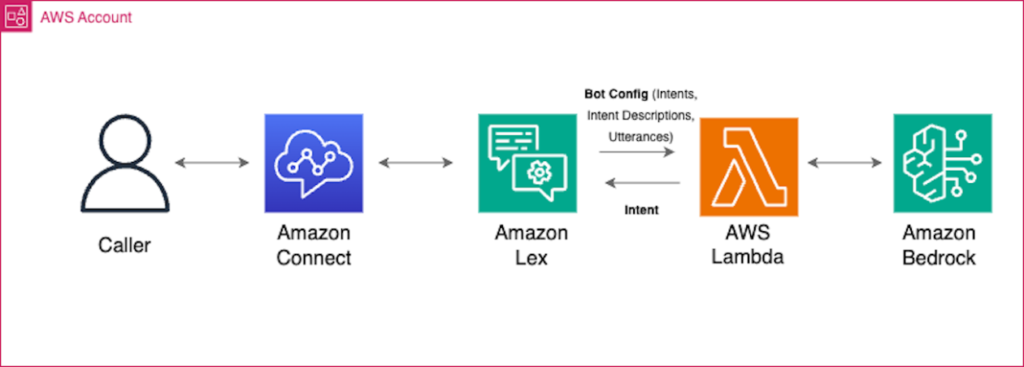

The solution uses Amazon Connect, Amazon Lex, AWS Lambda, and Amazon Bedrock in the following steps:

- An Amazon Connect contact flow integrates with an Amazon Lex bot via the GetCustomerInput block.

- When the bot fails to recognize the caller’s intent and defaults to the fallback intent, a Lambda function is triggered.

- The Lambda function takes the transcript of the customer utterance and passes it to a foundation model in Amazon Bedrock.

- Using its advanced natural language capabilities, the model determines the caller’s intent.

- The Lambda function then directs the bot to route the call to the correct intent for fulfillment.

By using Amazon Bedrock foundation models, the solution enables the Amazon Lex bot to understand intents despite speech recognition errors. This results in smooth routing and fulfillment, preventing escalations to agents and frustrating repetitions for callers.

The diagram above illustrates the solution architecture and workflow.

In the following sections, we look at the key components of the solution in more detail.

Lambda functions and the LangChain Framework

When the Amazon Lex bot invokes the Lambda function, it sends an event message that contains bot information and the transcription of the utterance from the caller. Using this event message, the Lambda function dynamically retrieves the bot’s configured intents, intent descriptions, and intent utterances and builds a prompt using LangChain, an open source machine learning (ML) framework that enables developers to integrate large language models (LLMs), data sources, and applications.
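As a rough illustration of the first part of that step, the sketch below pulls the bot identifiers and the caller's transcript out of an event. It assumes the Lex V2 Lambda event layout (the `bot` object and the `inputTranscript` field); the function name `extract_bot_context` and the sample values are hypothetical and are not taken from the sample repository.

```python
from typing import Any, Dict


def extract_bot_context(event: Dict[str, Any]) -> Dict[str, str]:
    """Collect the fields needed to look up the bot's intents and build a prompt."""
    bot = event["bot"]
    return {
        "bot_id": bot["id"],
        "bot_version": bot["version"],
        "locale_id": bot["localeId"],
        # Transcription of what the caller said or typed; may be empty.
        "utterance": event.get("inputTranscript", ""),
    }


# Trimmed, made-up event used only for illustration.
sample_event = {
    "inputTranscript": "I want to truck my holder",
    "bot": {"id": "BOT123", "version": "1", "localeId": "en_US"},
    "sessionState": {"intent": {"name": "FallbackIntent"}},
}

context = extract_bot_context(sample_event)
print(context["utterance"])
```

The identifiers collected here are what a function would plausibly need in order to query the bot's intents, descriptions, and sample utterances before building the prompt.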

An Amazon Bedrock foundation model is then invoked using the prompt, and a response is received with the predicted intent and confidence level. If the confidence level is greater than a set threshold, for example 80%, the function returns the identified intent to Amazon Lex with an action to delegate. If the confidence level is below the threshold, it defaults back to the default FallbackIntent with an action to close it.
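The threshold check can be sketched as follows. This is a minimal illustration that assumes the model's answer has already been reduced to a JSON string with the hypothetical keys `intent` and `confidence`; the actual output format used by the sample code may differ.

```python
import json
from typing import Tuple

CONFIDENCE_THRESHOLD = 0.8  # the 80% example from the text
FALLBACK_INTENT = "FallbackIntent"


def decide_action(model_output: str,
                  threshold: float = CONFIDENCE_THRESHOLD) -> Tuple[str, str]:
    """Return (dialog action type, intent name) for a model prediction."""
    try:
        prediction = json.loads(model_output)
        intent = prediction["intent"]
        confidence = float(prediction["confidence"])
    except (ValueError, KeyError, TypeError):
        # Unparseable or incomplete output is treated as "no intent recognized".
        return "Close", FALLBACK_INTENT

    if intent and confidence > threshold:
        return "Delegate", intent
    return "Close", FALLBACK_INTENT


print(decide_action('{"intent": "TrackOrder", "confidence": 0.93}'))
print(decide_action('{"intent": "TrackOrder", "confidence": 0.41}'))
```

Treating malformed model output the same way as a low-confidence prediction keeps the caller on the fallback path instead of raising an error mid-call.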

In-context learning, prompt engineering, and model invocation

We use in-context learning to enable a foundation model to accomplish this task. In-context learning is the ability of LLMs to learn a task using just what’s in the prompt, without being pre-trained or fine-tuned for that particular task.

In the prompt, we first provide the instruction detailing what needs to be done. Then, the Lambda function dynamically retrieves and injects the Amazon Lex bot’s configured intents, intent descriptions, and intent utterances into the prompt. Finally, we provide instructions on how the model should output its thinking and final result.

The following prompt template was tested on the text generation models Anthropic Claude Instant v1.2 and Anthropic Claude v2. We use XML tags to improve the performance of the model. We also add room for the model to think before identifying the final intent, which improves its reasoning for choosing the right intent. The {intent_block} contains the intent IDs, intent descriptions, and intent utterances. The {input} block contains the transcribed utterance from the caller. Three backticks are added at the end to help the model output a code block more consistently. A <STOP> sequence is added to stop it from generating further.
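To make these ingredients concrete, here is a hedged sketch of how such a prompt might be assembled. It is not the authors' template: the wording, the XML tag names, the output keys, and the helper names are all assumptions, and plain `str.format` stands in for LangChain's prompt templating so the example has no third-party dependencies. The request body uses the parameter names of the Anthropic Claude text-completion format on Amazon Bedrock.

```python
import json
from typing import Any, Dict, List

CODE_FENCE = "`" * 3      # the three trailing backticks described above
STOP_SEQUENCE = "<STOP>"  # stop sequence that ends generation

# Hypothetical wording; only the {intent_block} and {input} placeholders,
# the XML tags, the trailing backticks and <STOP> come from the text.
PROMPT_TEMPLATE = (
    "\n\nHuman: Classify the caller's utterance into one of the intents "
    "listed inside the <intents> tags.\n"
    "<intents>\n{intent_block}\n</intents>\n"
    "<input>\n{input}\n</input>\n"
    "Reply with a JSON code block whose keys are thinking, intent_id and "
    "confidence, in that order, then write " + STOP_SEQUENCE + ".\n\n"
    "Assistant: " + CODE_FENCE
)


def format_intent_block(intents: List[Dict[str, Any]]) -> str:
    """Render intent IDs, descriptions and sample utterances as XML."""
    lines = []
    for intent in intents:
        lines.append("<intent>")
        lines.append(f"<id>{intent['id']}</id>")
        lines.append(f"<description>{intent['description']}</description>")
        for utterance in intent["utterances"]:
            lines.append(f"<utterance>{utterance}</utterance>")
        lines.append("</intent>")
    return "\n".join(lines)


def build_prompt(intents: List[Dict[str, Any]], utterance: str) -> str:
    return PROMPT_TEMPLATE.format(
        intent_block=format_intent_block(intents), input=utterance
    )


def build_request_body(prompt: str) -> str:
    """JSON body for a Claude text-completion request (values are illustrative)."""
    return json.dumps({
        "prompt": prompt,
        "max_tokens_to_sample": 300,
        "temperature": 0,
        "stop_sequences": [STOP_SEQUENCE],
    })


sample_intents = [{
    "id": "TRACK_ORDER",
    "description": "The caller wants to know where an order is",
    "utterances": ["track my order"],
}]
prompt = build_prompt(sample_intents, "I want to truck my holder")
print(prompt)
```

Placing the `thinking` key first is one way to give the model room to reason before it commits to an intent while still starting its answer inside a code block.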

After the model has been invoked, we receive the following response from the foundation model:

Filter available intents based on contact flow session attributes

When using the solution as part of an Amazon Connect contact flow, you can further enhance the ability of the LLM to identify the correct intent by specifying the session attribute available_intents in the “Get customer input” block with a comma-separated list of intents, as shown in the following screenshot. By doing so, the Lambda function will only include these specified intents as part of the prompt to the LLM, reducing the number of intents that the LLM has to reason through. If the available_intents session attribute is not specified, all intents in the Amazon Lex bot are used by default.
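The filtering rule can be sketched in a few lines. The attribute name `available_intents` comes from the text; the function name and the intent names below are hypothetical, and the sketch assumes the session attributes arrive as a flat string-to-string mapping (in a Lex V2 event they sit under `sessionState.sessionAttributes`).

```python
from typing import Dict, List, Optional


def filter_intents(all_intents: List[str],
                   session_attributes: Optional[Dict[str, str]]) -> List[str]:
    """Keep only the intents named in available_intents, if it is set."""
    raw = (session_attributes or {}).get("available_intents", "")
    allowed = {name.strip() for name in raw.split(",") if name.strip()}
    if not allowed:
        # Attribute missing or empty: fall back to every intent in the bot.
        return list(all_intents)
    return [name for name in all_intents if name in allowed]


bot_intents = ["TrackOrder", "CancelOrder", "Returns"]
print(filter_intents(bot_intents, {"available_intents": "TrackOrder, Returns"}))
print(filter_intents(bot_intents, None))
```

Stripping whitespace around each name makes the comma-separated list forgiving of spaces typed into the contact flow block.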

Lambda function response to Amazon Lex

After the LLM has determined the intent, the Lambda function responds in the specific format required by Amazon Lex to process the response.
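For orientation, the sketch below builds the two kinds of response in the Lex V2 Lambda response shape (`sessionState` with a `dialogAction` and an `intent`). The helper names, the chosen intent `state` values, and the closing message are assumptions made for illustration and may not match the sample repository.

```python
from typing import Any, Dict


def delegate_response(intent_name: str,
                      session_attributes: Dict[str, str]) -> Dict[str, Any]:
    """Hand the predicted intent back to Amazon Lex to continue the dialog."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Delegate"},
            "intent": {"name": intent_name, "state": "InProgress"},
            "sessionAttributes": session_attributes,
        }
    }


def close_response(session_attributes: Dict[str, str],
                   message: str) -> Dict[str, Any]:
    """Close the FallbackIntent so control returns to the contact flow."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            # "Fulfilled" is an assumption; "Failed" is also a valid state.
            "intent": {"name": "FallbackIntent", "state": "Fulfilled"},
            "sessionAttributes": session_attributes,
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


delegated = delegate_response("TrackOrder", {"available_intents": "TrackOrder"})
closed = close_response({}, "Sorry, I could not understand that.")
print(delegated["sessionState"]["dialogAction"]["type"])
print(closed["sessionState"]["dialogAction"]["type"])
```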

If a matching intent is found above the confidence threshold, it returns a dialog action type Delegate to instruct Amazon Lex to use the selected intent and subsequently return the completed intent back to Amazon Connect. The response output is as follows:

If the confidence level is below the threshold or an intent was not recognized, a dialog action type Close is returned to instruct Amazon Lex to close the FallbackIntent and return control back to Amazon Connect. The response output is as follows:

The complete source code for this sample is available in GitHub.

Prerequisites

Before you get started, make sure you have the following prerequisites:

Implement the solution

To implement the solution, complete the following steps:

- Clone the repository

- Run the following command to initialize the environment and create an Amazon Elastic Container Registry (Amazon ECR) repository for our Lambda function’s image. Provide the AWS Region and ECR repository name that you want to create.

- Update the ParameterValue fields in the scripts/parameters.json file:

  - ParameterKey ("AmazonECRImageUri") – Enter the repository URL from the previous step.
  - ParameterKey ("AmazonConnectName") – Enter a unique name.
  - ParameterKey ("AmazonLexBotName") – Enter a unique name.
  - ParameterKey ("AmazonLexBotAliasName") – The default is “prodversion”; you can change it if needed.
  - ParameterKey ("LoggingLevel") – The default is “INFO”; you can change it if required. Valid values are DEBUG, WARN, and ERROR.
  - ParameterKey ("ModelID") – The default is “anthropic.claude-instant-v1”; you can change it if you need to use a different model.
  - ParameterKey ("AmazonConnectName") – The default is “0.75”; you can change it if you need to update the confidence score.

- Run the command to generate the CloudFormation stack and deploy the resources:

If you don’t want to build the contact flow from scratch in Amazon Connect, you can import the sample flow provided with this repository at the file location /contactflowsample/samplecontactflow.json.

- Log in to your Amazon Connect instance. The account must be assigned a security profile that includes edit permissions for flows.

- On the Amazon Connect console, in the navigation pane, under Routing, choose Contact flows.

- Create a new flow of the same type as the one you are importing.

- Choose Save and Import flow.

- Select the file to import and choose Import.

When the flow is imported into an existing flow, the name of the existing flow is updated, too.

- Review and update any resolved or unresolved references as necessary.

- To save the imported flow, choose Save. To publish, choose Save and Publish.

- After you upload the contact flow, update the following configurations:

  - Update the GetCustomerInput blocks with the correct Amazon Lex bot name and version.
  - Under Manage Phone Number, update the number with the contact flow or IVR imported earlier.

Verify the configuration

Verify that the Lambda function created with the CloudFormation stack has an IAM role with permissions to retrieve bots and intent information from Amazon Lex (list and read permissions), and appropriate Amazon Bedrock permissions (list and read permissions).
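As a reference point when reviewing the role, the sketch below prints a policy document with the kind of statements to look for. It is an assumption about what a minimal policy could contain, not the policy created by the CloudFormation stack: the statement IDs are invented, the action list is a plausible subset, and the wildcard resources are placeholders that would normally be narrowed to specific bot and model ARNs.

```python
import json

# Hypothetical minimal policy; compare it against the role's actual policy.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadLexIntents",
            "Effect": "Allow",
            "Action": ["lex:ListIntents", "lex:DescribeIntent"],
            "Resource": "*",  # placeholder; scope to the bot ARN in practice
        },
        {
            "Sid": "InvokeFoundationModel",
            "Effect": "Allow",
            "Action": ["bedrock:InvokeModel"],
            "Resource": "*",  # placeholder; scope to the model ARN in practice
        },
    ],
}

print(json.dumps(example_policy, indent=2))
```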

In your Amazon Lex bot, for your configured alias and language, verify that the Lambda function was set up correctly. For the FallBackIntent, confirm that Fulfillment is set to Active so that the function runs whenever the FallBackIntent is triggered.

At this point, your Amazon Lex bot will automatically run the Lambda function and the solution should work seamlessly.

Test the solution

Let’s look at a sample intent, description, and utterance configuration in Amazon Lex and see how well the LLM performs with sample inputs that contain typos, grammar errors, and even a different language.

The following figure shows screenshots of our example. The left side shows the intent name, its description, and a single-word sample utterance. Without much configuration on Amazon Lex, the LLM is able to predict the correct intent (right side). In this test, we have a simple fulfillment message from the correct intent.

Clean up

To clean up your resources, run the following command to delete the ECR repository and CloudFormation stack:

Conclusion

By using Amazon Lex enhanced with LLMs delivered by Amazon Bedrock, you can improve the intent recognition performance of your bots. This provides a seamless self-service experience for a diverse set of customers, bridging the gap between accents and unique speech characteristics, and ultimately enhancing customer satisfaction.

To dive deeper and learn more about generative AI, check out these additional resources:

For more information on how you can experiment with the generative AI-powered self-service solution, see Deploy self-service question answering with the QnABot on AWS solution powered by Amazon Lex with Amazon Kendra and large language models.

About the Authors

Hamza Nadeem is an Amazon Connect Specialist Solutions Architect at AWS, based in Toronto. He works with customers throughout Canada to modernize their Contact Centers and provide solutions to their unique customer engagement challenges and business requirements. In his spare time, Hamza enjoys traveling, soccer, and trying new recipes with his wife.

Parag Srivastava is a Solutions Architect at Amazon Web Services (AWS), helping enterprise customers with successful cloud adoption and migration. During his professional career, he has been extensively involved in complex digital transformation projects. He is also passionate about building innovative solutions around geospatial aspects of addresses.

Ross Alas is a Solutions Architect at AWS based in Toronto, Canada. He helps customers innovate with AI/ML and generative AI solutions that lead to real business outcomes. He has worked with a variety of customers from retail, financial services, technology, pharmaceutical, and others. In his spare time, he loves the outdoors and enjoying nature with his family.

Sangeetha Kamatkar is a Solutions Architect at Amazon Web Services (AWS), helping customers with successful cloud adoption and migration. She works with customers to craft highly scalable, flexible, and resilient cloud architectures that address customer business problems. In her spare time, she listens to music, watches movies, and enjoys gardening during summer.
