How BigBasket improved AI-enabled checkout at their physical stores using Amazon SageMaker

This post is co-written with Santosh Waddi and Nanda Kishore Thatikonda from BigBasket.

BigBasket is India’s largest online food and grocery store. They operate in multiple ecommerce channels such as quick commerce, slotted delivery, and daily subscriptions. You can also buy from their physical stores and vending machines. They offer a large assortment of over 50,000 products across 1,000 brands, and operate in more than 500 cities and towns. BigBasket serves over 10 million customers.

In this post, we discuss how BigBasket used Amazon SageMaker to train their computer vision model for Fast-Moving Consumer Goods (FMCG) product identification, which helped them reduce training time by approximately 50% and save costs by 20%.

Customer challenges

Today, most supermarkets and physical stores in India provide manual checkout at the checkout counter. This has two issues:

- It requires additional manpower, weight stickers, and repeated training for the in-store operational team as they scale.

- In most stores, the checkout counter is separate from the weighing counters, which adds friction to the customer purchase journey. Customers often lose the weight sticker and have to go back to the weighing counters to collect one again before proceeding with checkout.

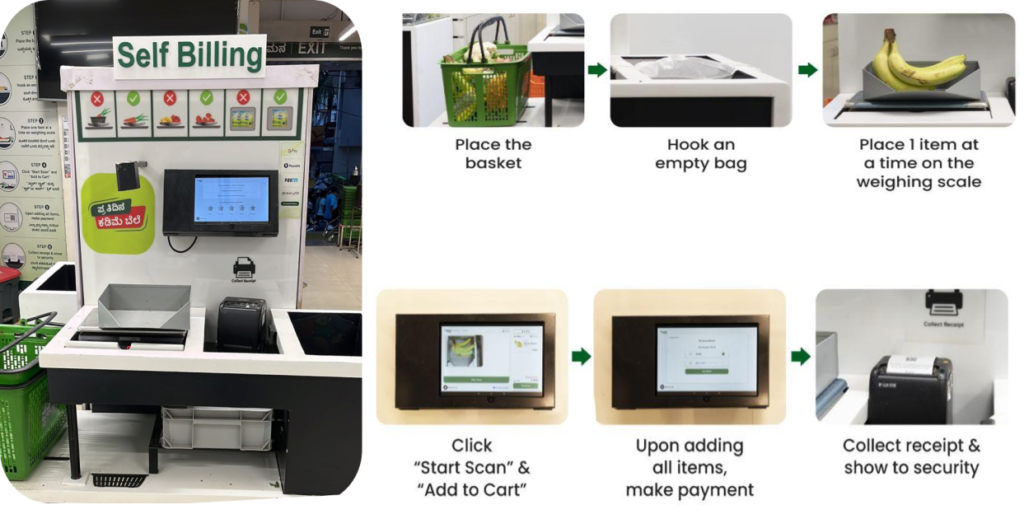

Self-checkout process

BigBasket introduced an AI-powered checkout system in their physical stores that uses cameras to uniquely identify items. The following figure provides an overview of the checkout process.

The BigBasket team was running open source, in-house ML algorithms for computer vision object recognition to power AI-enabled checkout at their Fresho (physical) stores. They faced the following challenges in operating their existing setup:

- With the continuous introduction of new products, the computer vision model needed to keep incorporating new product information. The system needed to handle a large catalog of over 12,000 Stock Keeping Units (SKUs), with new SKUs being added at a rate of over 600 per month.

- To keep pace with new products, a new model was produced every month using the latest training data. Training the models this frequently to adapt to new products was costly and time consuming.

- BigBasket also wanted to reduce the training cycle time to improve time to market. As the number of SKUs grew, training time increased linearly, which hurt their time to market because training ran frequently and each run took a long time.

- Data augmentation for model training and manually managing the complete end-to-end training cycle added significant overhead. BigBasket was running this on a third-party platform, which incurred significant costs.

Solution overview

We recommended that BigBasket rearchitect their existing FMCG product detection and classification solution using SageMaker to address these challenges. Before moving to full-scale production, BigBasket ran a pilot on SageMaker to evaluate performance, cost, and convenience metrics.

Their objective was to fine-tune an existing computer vision machine learning (ML) model for SKU detection. We used a convolutional neural network (CNN) architecture with ResNet152 for image classification. A sizable dataset of around 300 images per SKU was estimated for model training, resulting in over 4 million total training images. For certain SKUs, we augmented data to cover a broader range of environmental conditions.

The following diagram illustrates the solution architecture.

The whole process can be summarized in the following high-level steps:

- Perform data cleansing, annotation, and augmentation.

- Store data in an Amazon Simple Storage Service (Amazon S3) bucket.

- Use SageMaker and Amazon FSx for Lustre for efficient data augmentation.

- Split data into train, validation, and test sets. We used FSx for Lustre and Amazon Relational Database Service (Amazon RDS) for fast parallel data access.

- Use a custom PyTorch Docker container including other open source libraries.

- Use SageMaker Distributed Data Parallelism (SMDDP) for accelerated distributed training.

- Log model training metrics.

- Copy the final model to an S3 bucket.
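The split step above could look something like the following sketch. It is not BigBasket's code: it assumes the annotated images are already available as (image path, SKU) pairs, and the function name `split_by_sku` and the 80/10/10 ratios are hypothetical choices. Splitting within each SKU (a stratified split) keeps every SKU represented in training, which matters when the catalog grows by hundreds of classes a month.

```python
import random
from collections import defaultdict


def split_by_sku(samples, train_frac=0.8, val_frac=0.1, seed=42):
    """Split (image_path, sku) pairs into train/val/test sets, per SKU.

    Splitting inside each SKU keeps every SKU present in the training set.
    The 80/10/10 default is an illustrative assumption.
    """
    by_sku = defaultdict(list)
    for path, sku in samples:
        by_sku[sku].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for sku in sorted(by_sku):
        paths = sorted(by_sku[sku])
        rng.shuffle(paths)
        n_train = int(len(paths) * train_frac)
        n_val = int(len(paths) * val_frac)
        splits["train"] += [(p, sku) for p in paths[:n_train]]
        splits["val"] += [(p, sku) for p in paths[n_train:n_train + n_val]]
        splits["test"] += [(p, sku) for p in paths[n_train + n_val:]]
    return splits


if __name__ == "__main__":
    # Hypothetical data: 2 SKUs with 300 images each (the post's per-SKU estimate).
    data = [(f"sku{s}/img{i}.jpg", f"SKU-{s}") for s in range(2) for i in range(300)]
    parts = split_by_sku(data)
    print({name: len(items) for name, items in parts.items()})
```

With 300 images per SKU, this yields 240 training, 30 validation, and 30 test images for each SKU.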

BigBasket used SageMaker notebooks to train their ML models and was able to easily port their existing open source PyTorch code and other open source dependencies to a SageMaker PyTorch container and run the pipeline seamlessly. This was the first benefit the BigBasket team saw, because hardly any code changes were needed to run it on SageMaker.

The model network consists of a ResNet152 architecture followed by fully connected layers. We froze the low-level feature layers and retained the weights obtained through transfer learning from the ImageNet model. The model had 66 million parameters in total, of which 23 million were trainable. This transfer learning-based approach let them use fewer images for training, and also enabled faster convergence and reduced the total training time.
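To make the total-versus-trainable distinction concrete, the sketch below tallies parameters for a frozen block plus a trainable fully connected head. It is a dependency-free illustration under stated assumptions: apart from the 2048-dimensional pooled features that ResNet152 produces, the layer sizes are hypothetical and are not chosen to reproduce the post's 66 million and 23 million figures. In PyTorch, the same tally is usually computed with `sum(p.numel() for p in model.parameters() if p.requires_grad)`.

```python
def linear_params(in_features, out_features, bias=True):
    """Parameter count of a fully connected layer: weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


def count_params(layers):
    """Return (total, trainable) for an iterable of (name, num_params, trainable) tuples."""
    layers = list(layers)
    total = sum(n for _, n, _ in layers)
    trainable = sum(n for _, n, is_trainable in layers if is_trainable)
    return total, trainable


if __name__ == "__main__":
    # Hypothetical head on top of ResNet152's 2048-dimensional pooled features;
    # the hidden width and class count are illustrative, not BigBasket's values.
    head = [
        ("fc1", linear_params(2048, 1024), True),
        ("fc2", linear_params(1024, 500), True),
    ]
    frozen_stub = [("frozen_backbone_layers", 10_000_000, False)]  # placeholder size
    print(count_params(frozen_stub + head))  # (12610676, 2610676)
```

Only the trainable count drives the size of the optimizer state and the gradient work per step, which is one reason freezing early layers shortens training.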

Building and training the model within Amazon SageMaker Studio provided an integrated development environment (IDE) with everything needed to prepare, build, train, and tune models. Augmenting the training data with techniques such as cropping, rotating, and flipping images helped enrich the training data and improve model accuracy.
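The cropping, rotating, and flipping augmentations can be illustrated without any image library by treating an image as a 2D list of pixel values. This is a hypothetical sketch for intuition only; a production pipeline would apply equivalent transforms (for example, from torchvision or Pillow) to real image tensors, and the helper names here are made up.

```python
import random


def hflip(img):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def crop(img, top, left, height, width):
    """Cut out a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def random_augment(img, crop_size, rng):
    """Random square crop, then an optional flip and a random multiple of 90 degrees."""
    height, width = len(img), len(img[0])
    top = rng.randint(0, height - crop_size)
    left = rng.randint(0, width - crop_size)
    out = crop(img, top, left, crop_size, crop_size)
    if rng.random() < 0.5:
        out = hflip(out)
    for _ in range(rng.randint(0, 3)):  # rotate by 0, 90, 180, or 270 degrees
        out = rotate90(out)
    return out


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(hflip(image))             # [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
    print(rotate90(image))          # [[7, 4, 1], [8, 5, 2], [9, 6, 3]]
    print(crop(image, 1, 1, 2, 2))  # [[5, 6], [8, 9]]
    print(random_augment(image, 2, random.Random(0)))
```

Each call to `random_augment` produces a different view of the same product image, which is how augmentation widens the range of positions and orientations the model sees without collecting new photos.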

Using the SMDDP library, which includes optimized communication algorithms designed specifically for AWS infrastructure, cut model training time by 50%. To improve data read/write performance during model training and data augmentation, we used FSx for Lustre for high-throughput storage.

Their starting training data size was over 1.5 TB. We used two Amazon Elastic Compute Cloud (Amazon EC2) p4d.24xlarge instances, each with 8 NVIDIA A100 GPUs and 40 GB of memory per GPU. For SageMaker distributed training, the instances need to be in the same AWS Region and Availability Zone. Also, training data stored in an S3 bucket needs to be in the same Region. This architecture also allows BigBasket to switch to other instance types or add more instances to cater to significant data growth or to further reduce training time.
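As a sketch of how such a job might be configured with the SageMaker Python SDK, the following builds a two-node `ml.p4d.24xlarge` PyTorch estimator with SMDDP enabled and reads training data from FSx for Lustre. The entry point `train.py`, the framework and Python versions, and all IDs are assumptions for illustration; the `distribution` setting and `FileSystemInput` are SDK features, but check the SMDDP documentation for supported framework versions before relying on them.

```python
"""Hypothetical launcher for a two-node SMDDP training job that reads from FSx for Lustre."""


def smddp_estimator_kwargs(role_arn, subnets, security_group_ids):
    """Build keyword arguments for sagemaker.pytorch.PyTorch.

    The entry point and framework/Python versions are illustrative
    assumptions; verify them against the SMDDP compatibility list.
    """
    return {
        "entry_point": "train.py",  # hypothetical training script
        "role": role_arn,
        "instance_count": 2,  # two p4d.24xlarge nodes, as in the post
        "instance_type": "ml.p4d.24xlarge",
        "framework_version": "2.0.1",  # assumed SMDDP-compatible version
        "py_version": "py310",
        # Turns on the SageMaker distributed data parallel (SMDDP) library.
        "distribution": {"smdistributed": {"dataparallel": {"enabled": True}}},
        # FSx for Lustre is reached over the VPC, so the job needs subnets and
        # security groups; the subnet is assumed to be in the file system's AZ.
        "subnets": subnets,
        "security_group_ids": security_group_ids,
    }


def launch(role_arn, subnets, security_group_ids, fsx_id, fsx_directory):
    """Start the training job; requires the SageMaker Python SDK and AWS credentials."""
    # Imported here so the configuration above can be inspected without the SDK.
    from sagemaker.inputs import FileSystemInput
    from sagemaker.pytorch import PyTorch

    train_input = FileSystemInput(
        file_system_id=fsx_id,
        file_system_type="FSxLustre",
        directory_path=fsx_directory,  # "/<mount-name>/<path>" on the file system
        file_system_access_mode="ro",
    )
    estimator = PyTorch(**smddp_estimator_kwargs(role_arn, subnets, security_group_ids))
    estimator.fit({"train": train_input})
    return estimator
```

On the training-script side, recent SMDDP versions for PyTorch are enabled by importing `smdistributed.dataparallel.torch.torch_smddp` and then calling `torch.distributed.init_process_group(backend="smddp")`; the exact steps depend on the SMDDP version, so treat this as a pointer to the SMDDP documentation rather than a complete script. Scaling out, as described above, would mostly mean changing `instance_count` or `instance_type`.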

How the SMDDP library helped reduce training time, cost, and complexity

In traditional distributed data parallel training, the training framework assigns ranks to GPUs (workers) and creates a replica of your model on each GPU. During each training iteration, the global data batch is divided into pieces (batch shards) and one piece is distributed to each worker. Each worker then runs the forward and backward pass defined in your training script on its GPU. Finally, the gradients from the different model replicas are synchronized at the end of the iteration through a collective communication operation called AllReduce, so every replica applies the same update and the model weights stay in sync. After each worker and GPU has a synced replica of the model, the next iteration begins.
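The data parallel loop described above can be simulated in a few lines of plain Python. This toy sketch uses hypothetical names, a one-parameter linear model, and an in-process stand-in for AllReduce rather than real GPUs. It shows the key property: because each worker's gradient is averaged across workers, every replica applies the same update and stays identical, and with equal-sized shards the averaged gradient equals the full-batch gradient.

```python
def shard(batch, world_size):
    """Split the global batch into equal, contiguous batch shards, one per worker."""
    if len(batch) % world_size:
        raise ValueError("global batch size must be divisible by world_size")
    size = len(batch) // world_size
    return [batch[rank * size:(rank + 1) * size] for rank in range(world_size)]


def local_gradient(w, shard_data):
    """Gradient of the mean squared error of y_hat = w * x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard_data) / len(shard_data)


def all_reduce_mean(values):
    """Stand-in for AllReduce: every worker ends up with the mean of all inputs."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


def train_step(replicas, global_batch, lr):
    """One data parallel iteration over a list of per-worker model replicas."""
    shards = shard(global_batch, len(replicas))
    # Each worker runs its own forward and backward pass on its shard.
    grads = [local_gradient(w, s) for w, s in zip(replicas, shards)]
    # Gradients are synchronized so every worker holds the same averaged value.
    synced = all_reduce_mean(grads)
    # Identical updates keep all replicas identical for the next iteration.
    return [w - lr * g for w, g in zip(replicas, synced)]


if __name__ == "__main__":
    batch = [(x, 3.0 * x) for x in range(1, 9)]  # data generated by y = 3x
    replicas = [0.0] * 4  # four workers, one scalar weight each
    for _ in range(50):
        replicas = train_step(replicas, batch, lr=0.01)
    print(replicas)  # all four values are identical and close to 3.0
```

In a real job, the averaging happens over the network between GPUs, which is exactly the communication cost that SMDDP targets.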

The SMDDP library is a collective communication library that improves the performance of this distributed data parallel training process. It reduces the communication overhead of key collective communication operations such as AllReduce. Its AllReduce implementation is designed for AWS infrastructure and can speed up training by overlapping the AllReduce operation with the backward pass. This approach achieves near-linear scaling efficiency and faster training by optimizing kernel operations between CPUs and GPUs.
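Why overlapping AllReduce with the backward pass helps can be shown with a simple timing model. The sketch below is an illustration under stated assumptions, not SMDDP's actual algorithm: it treats gradients as buckets that become ready one after another during the backward pass, uses made-up timings, and assumes a single communication link that processes buckets in order.

```python
from itertools import accumulate


def sequential_step_time(compute, comm):
    """No overlap: the whole backward pass finishes, then each bucket is all-reduced."""
    return sum(compute) + sum(comm)


def overlapped_step_time(compute, comm):
    """Overlap: a bucket's AllReduce starts once its gradients are ready and the
    previous bucket's AllReduce has finished (a single link, processed in order).

    compute[i] is the backward time that produces bucket i's gradients;
    comm[i] is the time to all-reduce bucket i.
    """
    link_free = 0
    for ready, cost in zip(accumulate(compute), comm):
        link_free = max(link_free, ready) + cost
    return link_free


if __name__ == "__main__":
    compute = [4, 4, 4, 4]  # hypothetical per-bucket backward times (ms)
    comm = [3, 3, 3, 3]     # hypothetical per-bucket AllReduce times (ms)
    print(sequential_step_time(compute, comm))  # 28
    print(overlapped_step_time(compute, comm))  # 19
```

With these made-up timings, overlap hides all but the last bucket's communication. When communication dominates compute, the gain from overlap shrinks, which is why a faster AllReduce implementation also matters.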

Note the following calculations:

- The size of the global batch is (number of nodes in the cluster) * (number of GPUs per node) * (batch shard size per GPU)

- A batch shard (small batch) is a subset of the dataset assigned to each GPU (worker) per iteration
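The global batch formula can be checked with a short calculation. The node and GPU counts come from the post (two p4d.24xlarge instances with 8 GPUs each), and the image count reflects the post's estimate of roughly 4 million training images; the batch shard size of 32 is an illustrative assumption.

```python
def global_batch_size(num_nodes, gpus_per_node, shard_size):
    """Global batch = nodes in the cluster * GPUs per node * batch shard size per GPU."""
    return num_nodes * gpus_per_node * shard_size


def steps_per_epoch(num_samples, batch_size):
    """Iterations needed to see every sample once (the last batch may be partial)."""
    return -(-num_samples // batch_size)  # integer ceiling division


if __name__ == "__main__":
    # Two p4d.24xlarge nodes with 8 GPUs each; the shard size of 32 is assumed.
    gbs = global_batch_size(num_nodes=2, gpus_per_node=8, shard_size=32)
    print(gbs)                              # 512
    print(steps_per_epoch(4_000_000, gbs))  # 7813
```

Adding a third node at the same shard size would raise the global batch to 768 and cut the iterations per epoch accordingly, which is how adding instances shortens training, provided communication scales well.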

BigBasket used the SMDDP library to reduce their overall training time. With FSx for Lustre, we improved data read/write throughput during model training and data augmentation. With data parallelism, BigBasket achieved training that took almost 50% less time and cost 20% less compared to other alternatives, delivering the best performance on AWS. SageMaker automatically shuts down the training instances after the job completes. The project completed successfully, with training time halved (4.5 days on AWS vs. 9 days on their legacy platform).

At the time of writing, BigBasket has been running the complete solution in production for more than 6 months and is scaling the system to new cities, adding new stores every month.

“Our partnership with AWS on migration to distributed training using their SMDDP offering has been a great win. Not only did it cut down our training times by 50%, it was also 20% cheaper. Throughout our partnership, AWS has set the bar on customer obsession and delivering results, working with us the whole way to realize the promised benefits.”

– Keshav Kumar, Head of Engineering at BigBasket.

Conclusion

In this post, we discussed how BigBasket used SageMaker to train their computer vision model for FMCG product identification. The AI-powered automated self-checkout system delivers an improved retail customer experience while eliminating human errors in the checkout process. Accelerating new product onboarding with SageMaker distributed training reduces SKU onboarding time and cost. Integrating FSx for Lustre enables fast parallel data access for efficient model retraining with hundreds of new SKUs every month. Overall, this AI-based self-checkout solution provides an enhanced shopping experience free of frontend checkout errors. The automation and innovation have transformed BigBasket's retail checkout and onboarding operations.

SageMaker provides end-to-end ML development, deployment, and monitoring capabilities, such as a SageMaker Studio notebook environment for writing code, data acquisition, data labeling, model training, model tuning, deployment, monitoring, and much more. If your business is facing any of the challenges described in this post and wants to reduce time to market and cost, reach out to the AWS account team in your Region and get started with SageMaker.

About the Authors

Santosh Waddi is a Principal Engineer at BigBasket and brings over a decade of expertise in solving AI challenges. With a strong background in computer vision, data science, and deep learning, he holds a postgraduate degree from IIT Bombay. Santosh has authored notable IEEE publications and, as a seasoned tech blog author, has also made significant contributions to the development of computer vision solutions during his tenure at Samsung.

Nanda Kishore Thatikonda is an Engineering Manager leading Data Engineering and Analytics at BigBasket. Nanda has built multiple applications for anomaly detection and has a patent filed in a similar space. He has worked on building enterprise-grade applications, data platforms in multiple organizations, and reporting platforms that streamline data-backed decisions. Nanda has over 18 years of experience working with Java/J2EE, Spring technologies, and big data frameworks using Hadoop and Apache Spark.

Sudhanshu Hate is a Principal AI & ML Specialist with AWS and works with clients to advise them on their MLOps and generative AI journey. In his previous role, he conceptualized, created, and led teams to build a ground-up, open source-based AI and gamification platform, and successfully commercialized it with over 100 clients. Sudhanshu has to his credit a couple of patents; has written 2 books, several papers, and blogs; and has presented his point of view in various forums. He has been a thought leader and speaker, and has been in the industry for nearly 25 years. He has worked with Fortune 1000 clients across the globe and is currently working with digital native clients in India.

Ayush Kumar is a Solutions Architect at AWS. He works with a wide variety of AWS customers, helping them adopt the latest modern applications and innovate faster with cloud-native technologies. You’ll find him experimenting in the kitchen in his spare time.
