Deploy large language models for a healthtech use case on Amazon SageMaker

In 2021, the pharmaceutical industry generated $550 billion in US revenue. Pharmaceutical companies sell a variety of different, often novel, drugs on the market, where unintended but serious adverse events can sometimes occur.

These events can be reported from anywhere, whether from hospitals or from home, and must be monitored responsibly and efficiently. Traditional manual processing of adverse events is made challenging by the increasing volume of health data and rising costs. Overall, $384 billion is projected as the cost of pharmacovigilance activities to the healthcare industry by 2022. To support overarching pharmacovigilance activities, our pharmaceutical customers want to use the power of machine learning (ML) to automate adverse event detection from various data sources, such as social media feeds, phone calls, emails, and handwritten notes, and to trigger appropriate actions.

In this post, we show how to develop an ML-driven solution using Amazon SageMaker for detecting adverse events using the publicly available Adverse Drug Reaction Dataset on Hugging Face. In this solution, we fine-tune a variety of models on Hugging Face that were pre-trained on medical data and use the BioBERT model, which was pre-trained on the PubMed dataset and performed the best of those we tried.

We implemented the solution using the AWS Cloud Development Kit (AWS CDK). However, we don’t cover the specifics of building the solution in this post. For more information on the implementation of this solution, refer to Build a system for catching adverse events in real-time using Amazon SageMaker and Amazon QuickSight.

This post covers the following topics:

- The data challenges encountered by AWS Professional Services

- The landscape and application of large language models (LLMs):

  - Transformers, BERT, and GPT

  - Hugging Face

- The fine-tuned LLM solution and its components:

  - Data preparation

  - Model training

Data challenge

Data skew is often a problem in classification tasks. Ideally, you would have a balanced dataset, but this use case, like many others, does not provide one.

We address this skew with generative AI models (Falcon-7B and Falcon-40B), which were prompted to generate event samples based on five examples from the training set, to increase both the semantic diversity and the sample size of labeled adverse events. Using the Falcon models is advantageous here because, unlike some LLMs on Hugging Face, Falcon makes its training dataset available, so you can verify that none of your test set examples are contained in the Falcon training set and avoid data contamination.
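As a rough illustration of this prompting approach, the following sketch assembles a few-shot prompt from five labeled training examples. The instruction wording, the function name `build_few_shot_prompt`, and the toy sentences are assumptions made for illustration; the actual prompts and the call to the Falcon model are not shown in this post.

```python
import random


def build_few_shot_prompt(adverse_examples, k=5, seed=0):
    """Build a prompt that asks an LLM to continue a list of adverse event sentences.

    The wording is hypothetical; only the idea of showing k training
    examples (five in this post) comes from the text.
    """
    rng = random.Random(seed)
    shots = rng.sample(adverse_examples, k)  # pick k distinct training examples
    lines = ["Below are sentences that describe an adverse drug event."]
    for i, text in enumerate(shots, start=1):
        lines.append(f"Example {i}: {text}")
    # Leave the final slot open so that the model writes a new sample.
    lines.append(f"Example {k + 1}:")
    return "\n".join(lines)


# Toy, made-up training sentences (not taken from the real dataset).
toy_adverse_examples = [
    "The patient developed a rash after starting drug A.",
    "Severe nausea was reported following the second dose.",
    "Drug B was associated with dizziness in this case.",
    "Liver enzymes rose sharply after treatment began.",
    "She experienced palpitations shortly after the infusion.",
    "Headache and blurred vision followed the dose increase.",
]

prompt = build_few_shot_prompt(toy_adverse_examples)
print(prompt)
```

Changing the seed selects a different set of five examples, which is one simple way to vary the prompt and encourage more diverse generated samples.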

The other data challenge for healthcare customers is meeting HIPAA compliance requirements. Encryption at rest and in transit has to be incorporated into the solution to meet these requirements.
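One way to apply these encryption requirements to a SageMaker training job is through the encryption-related arguments of the SageMaker Python SDK estimators (`output_kms_key`, `volume_kms_key`, and `encrypt_inter_container_traffic`). The sketch below only assembles those arguments as a dictionary. The helper name, the KMS key ARN, and the S3 path are placeholders, and whether this matches the configuration used in the solution is an assumption.

```python
def encrypted_training_kwargs(kms_key_arn, output_s3_uri):
    """Return encryption-related keyword arguments for a SageMaker estimator."""
    if not output_s3_uri.startswith("s3://"):
        raise ValueError("output_s3_uri must be an S3 URI")
    return {
        "output_path": output_s3_uri,
        "output_kms_key": kms_key_arn,            # encrypt model artifacts at rest
        "volume_kms_key": kms_key_arn,            # encrypt the training volume at rest
        "encrypt_inter_container_traffic": True,  # encrypt traffic between training containers
    }


# Placeholder values for illustration only.
kwargs = encrypted_training_kwargs(
    "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE",
    "s3://example-bucket/adverse-events/output",
)
print(kwargs)
```

These keyword arguments could then be unpacked into an estimator constructor, for example `PyTorch(..., **kwargs)`, alongside the usual role, instance, and entry point settings.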

Transformers, BERT, and GPT

The transformer is a neural network architecture used for natural language processing (NLP) tasks. It was first introduced in the paper “Attention Is All You Need” by Vaswani et al. (2017). The architecture is based on the attention mechanism, which lets the model attend to specific words in the input sequence when generating the output sequence and thereby learn long-range dependencies between words. This is essential for many NLP tasks, such as machine translation and text summarization. Transformers, as laid out in the original paper, consist of two main components: the encoder and the decoder. The encoder takes the input sequence and produces a sequence of hidden states. The decoder then takes these hidden states as input and produces the output sequence. The attention mechanism is used in both the encoder and the decoder.
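To make the attention mechanism more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It omits the learned projection matrices, multiple heads, and masking that a real transformer layer uses, and the function names are chosen for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights
```

Because every query is compared with every key regardless of distance, a word can draw directly on any other word in the sequence, which is how attention captures long-range dependencies.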

One of the more popular and useful transformer architectures, Bidirectional Encoder Representations from Transformers (BERT), is a language representation model that was introduced in 2018. BERT is trained on sequences in which some of the words in a sentence are masked, and it has to fill in those words, taking into account the words both before and after the masked words. BERT can be fine-tuned for a variety of NLP tasks, including question answering, natural language inference, and sentiment analysis.

The other popular transformer architecture that has taken the world by storm is the Generative Pre-trained Transformer (GPT). The first GPT model was introduced in 2018 by OpenAI. It is trained strictly to predict the next word in a sequence, aware only of the context before that word. GPT models are trained on massive datasets of text and code, and they can be fine-tuned for a range of NLP tasks, including text generation, question answering, and summarization.

In general, BERT is better at tasks that require a deeper understanding of the context of words, whereas GPT is better suited for tasks that require generating text.
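The difference between the two training objectives can be sketched by looking at which words each model may use when predicting a given position. The helper below is a simplified illustration with a hypothetical name; real models operate on subword tokens and, in the case of BERT, mask a random subset of positions rather than a single one.

```python
def visible_context(tokens, position, causal):
    """Return the tokens a model may use to predict the token at `position`.

    causal=True  : GPT-style, only the tokens before the position.
    causal=False : BERT-style, every token except the masked one.
    """
    if causal:
        return tokens[:position]
    return tokens[:position] + ["[MASK]"] + tokens[position + 1:]


sentence = ["the", "drug", "caused", "severe", "nausea"]
print(visible_context(sentence, 3, causal=True))
print(visible_context(sentence, 3, causal=False))
```

In the BERT-style case the model also sees the word after the masked position, which helps explain why it tends to do well on classification tasks such as adverse event detection, where the whole sentence is available up front.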

Hugging Face

Hugging Face is an artificial intelligence company that specializes in NLP. It provides a platform with tools and resources that enable developers to build, train, and deploy ML models focused on NLP tasks. One of the key offerings of Hugging Face is its library, Transformers, which includes pre-trained models that can be fine-tuned for various language tasks such as text classification, translation, summarization, and question answering.

Hugging Face integrates seamlessly with SageMaker, which is a fully managed service that enables developers and data scientists to build, train, and deploy ML models at scale. This synergy benefits users by providing a robust and scalable infrastructure to handle NLP tasks with the state-of-the-art models that Hugging Face offers, combined with the powerful and flexible ML services from AWS. You can also access Hugging Face models directly from Amazon SageMaker JumpStart, making it convenient to start with pre-built solutions.

Solution overview

We used the Hugging Face Transformers library to fine-tune transformer models on SageMaker for the task of adverse event classification. The training job is built using the SageMaker PyTorch estimator. SageMaker JumpStart also has some complementary integrations with Hugging Face that make this straightforward to implement. In this section, we describe the major steps involved in data preparation and model training.

Data preparation

We used the Adverse Drug Reaction Data (ade_corpus_v2), available as a Hugging Face dataset, with an 80/20 training/test split. The required data structure for our model training and inference has two columns:

- One column for the text content, used as model input data.

- Another column for the label class. A text has one of two possible classes: Not_AE and Adverse_Event.

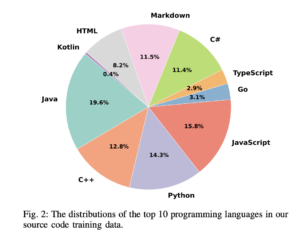
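The two-column structure and the 80/20 split can be sketched in plain Python as follows. The records are toy, made-up examples, and the helper name, shuffling, and seed are assumptions; the Hugging Face `datasets` library offers a comparable `Dataset.train_test_split(test_size=0.2)` method.

```python
import random


def split_train_test(records, test_fraction=0.2, seed=42):
    """Shuffle the records and split them into (train, test) lists."""
    shuffled = list(records)  # copy so that the input is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Toy records with the two required columns: text and label.
toy_records = [
    {"text": f"toy sentence {i}", "label": "Adverse_Event" if i % 3 == 0 else "Not_AE"}
    for i in range(10)
]

train, test = split_train_test(toy_records)
print(len(train), len(test))
```

With a skewed dataset, a stratified split that preserves the label proportions in both parts may be preferable to the purely random split shown here.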

Model training and experimentation

To efficiently explore the space of possible Hugging Face models to fine-tune on our combined data of adverse events, we built a SageMaker hyperparameter optimization (HPO) job and passed in different Hugging Face models as a hyperparameter, along with other important hyperparameters such as training batch size, sequence length, and learning rate. The training jobs used an ml.p3dn.24xlarge instance and took an average of 30 minutes per job on that instance type. Training metrics were captured through the Amazon SageMaker Experiments tool, and each training job ran for 10 epochs.

We specify the following in our code:

- Training batch size – Number of samples that are processed together before the model weights are updated

- Sequence length – Maximum length of the input sequence that BERT can process

- Learning rate – How quickly the model updates its weights during training

- Models – Hugging Face pretrained models
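The idea of treating the model name as one more hyperparameter can be sketched with a small search space and a random sampler. All values below are assumptions for illustration (the post does not list the ranges used), and random sampling stands in for the search strategy of a SageMaker HPO job. In the SageMaker Python SDK, the corresponding building blocks are `CategoricalParameter`, `ContinuousParameter`, and `HyperparameterTuner` in `sagemaker.tuner`.

```python
import random

# Hypothetical search space: lists are categorical choices,
# tuples are continuous (low, high) ranges.
SEARCH_SPACE = {
    "model_name": ["bert-base-uncased", "monologg/biobert_v1.1_pubmed"],
    "train_batch_size": [16, 32, 64],
    "max_seq_length": [128, 256, 512],
    "learning_rate": (1e-5, 1e-4),
}


def sample_configuration(space, rng):
    """Draw one hyperparameter configuration from the search space."""
    config = {}
    for name, choices in space.items():
        if isinstance(choices, tuple):
            low, high = choices
            config[name] = rng.uniform(low, high)
        else:
            config[name] = rng.choice(choices)
    return config


rng = random.Random(0)
for _ in range(3):
    print(sample_configuration(SEARCH_SPACE, rng))
```

Each sampled configuration corresponds to one training job, and the job with the best validation metric identifies both the best model and its best settings.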

Results

The model that performed the best in our use case was the monologg/biobert_v1.1_pubmed model hosted on Hugging Face, which is a version of the BERT architecture that has been pre-trained on the PubMed dataset of biomedical research abstracts. Pre-training BERT on this dataset gives the model extra expertise in identifying context around medically related scientific terms. This boosts the model’s performance on the adverse event detection task because it has been pre-trained on the medically specific syntax that shows up often in our dataset.

The following table summarizes our evaluation metrics.

| Model | Precision | Recall | F1 |
| --- | --- | --- | --- |
| Base BERT | 0.87 | 0.95 | 0.91 |
| BioBERT | 0.89 | 0.95 | 0.92 |
| BioBERT with HPO | 0.89 | 0.96 | 0.929 |
| BioBERT with HPO and synthetically generated adverse events | 0.90 | 0.96 | 0.933 |

Although these are relatively small and incremental improvements over the base BERT model, they nonetheless demonstrate some viable ways to improve model performance. Synthetic data generation with Falcon appears to hold a lot of promise and potential for performance improvements, especially as these generative AI models get better over time.
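For reference, F1 is the harmonic mean of precision and recall. The short sketch below computes it from the rounded precision and recall values in the table. Because those inputs are rounded to two decimals, the third decimal of the result can differ from the F1 values reported in the last two rows.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rounded precision and recall for the first two rows of the table.
print(round(f1_score(0.87, 0.95), 2))  # Base BERT
print(round(f1_score(0.89, 0.95), 2))  # BioBERT
```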

Clean up

To avoid incurring future charges, delete any resources you created, such as the model and model endpoints.
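A minimal cleanup sketch, assuming the resources were created through SageMaker and that you know their names, could look like the following. The function takes a boto3 SageMaker client as an argument; the function name and the resource names in the usage comment are placeholders, not the names used in the solution.

```python
def delete_inference_resources(sm_client, endpoint_name, endpoint_config_name, model_name):
    """Delete an endpoint, its endpoint configuration, and the model, in that order."""
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=endpoint_config_name)
    sm_client.delete_model(ModelName=model_name)


# Example usage (placeholder names; requires AWS credentials):
#
#   import boto3
#   delete_inference_resources(
#       boto3.client("sagemaker"),
#       endpoint_name="adverse-event-endpoint",
#       endpoint_config_name="adverse-event-endpoint-config",
#       model_name="adverse-event-model",
#   )
```

If the solution was deployed with the AWS CDK, destroying the corresponding stack is another way to remove the resources it created.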

Conclusion

Many pharmaceutical companies today would like to automate the process of identifying adverse events from their customer interactions in a systematic way in order to help improve customer safety and outcomes. As we showed in this post, the fine-tuned LLM BioBERT, with synthetically generated adverse events added to the data, classifies adverse events with high F1 scores and can be used to build a HIPAA-compliant solution for our customers.

As always, AWS welcomes your feedback. Please leave your thoughts and questions in the comments section.

About the authors

Zack Peterson is a data scientist in AWS Professional Services. He has been hands-on delivering machine learning solutions to customers for many years and has a master’s degree in Economics.

Dr. Adewale Akinfaderin is a senior data scientist in Healthcare and Life Sciences at AWS. His expertise is in reproducible and end-to-end AI/ML methods, practical implementations, and helping global healthcare customers formulate and develop scalable solutions to interdisciplinary problems. He has two graduate degrees in Physics and a doctorate degree in Engineering.

Ekta Walia Bhullar, PhD, is a senior AI/ML consultant with the AWS Healthcare and Life Sciences (HCLS) Professional Services business unit. She has extensive experience in the application of AI/ML within the healthcare domain, especially in radiology. Outside of work, when not discussing AI in radiology, she likes to run and hike.

Han Man is a Senior Data Science & Machine Learning Manager with AWS Professional Services based in San Diego, CA. He has a PhD in Engineering from Northwestern University and has several years of experience as a management consultant advising clients in manufacturing, financial services, and energy. Today, he is passionately working with key customers from a variety of industry verticals to develop and implement ML and generative AI solutions on AWS.
