Sentiment Analysis in Python: Going Beyond Bag of Words

Image created with DALL-E

Did you know that election results can be predicted, to some extent, with sentiment analysis? Data science can be both fun and very useful when applied to real-life situations rather than mock datasets.

In this article, we will conduct a brief case study using Twitter data. At the end, you will see a study with a significant real-life impact, which will surely pique your curiosity. But first, let’s start with the basics.

Sentiment analysis is a method used to predict feelings, like a digital psychologist. With the psychologist you create, the fate of the text you analyze will be in your hands. You can do it like the famous psychologist Freud, or you can simply be there like a psychologist charging 10 dollars per session.

Just as your psychologist listens to and understands your emotions, sentiment analysis does the same with text such as reviews, comments, or tweets, as we will do in the next section. To do that, let’s start a case study on a ready-made dataset.

To do sentiment analysis, we will use a dataset from Kaggle. This dataset was collected using the Twitter API. Here is the link to the dataset: https://www.kaggle.com/datasets/kazanova/sentiment140

Now, let’s start exploring the dataset.

Explore the Dataset

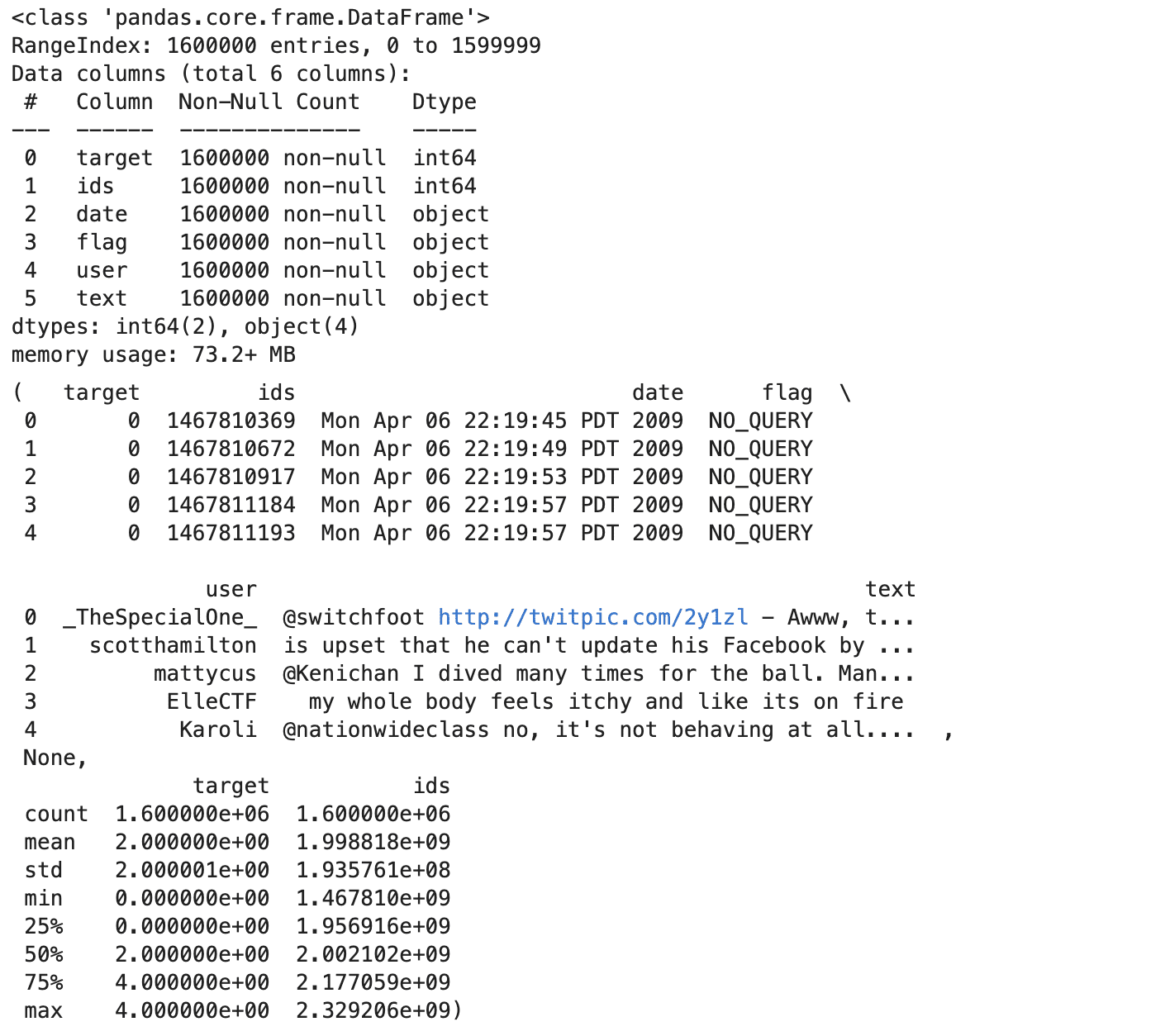

Now, before doing sentiment analysis, let’s explore our dataset. To read the file, we need to specify its encoding, and because it is read without a header row, we will add the column names afterwards. You can extend the exploration with more methods, but the head, info, and describe methods already give a good first overview; let’s see the code.

import pandas as pd

# Read the file without a header row and with an explicit encoding
data = pd.read_csv('training.csv', encoding='ISO-8859-1', header=None)

column_names = ['target', 'ids', 'date', 'flag', 'user', 'text']

data.columns = column_names

head = data.head()

# info() prints its summary directly and returns None
info = data.info()

describe = data.describe()

head, info, describe

Here is the output.

Of course, you can run these methods one by one if you don’t have an image limit in your project. Let’s see the insights we collect from the exploration methods above.

Insights

- The dataset has 1.6 million tweets, with no missing values in any column.

- Each tweet has a target sentiment (0 for negative, 2 for neutral, 4 for positive), an ID, a timestamp, a flag (query or ‘NO_QUERY’), the username, and the text.

- The sentiment targets are balanced, with an equal number of positive and negative labels.
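These insights can be checked with two short pandas calls. The following is a minimal sketch, assuming pandas is installed; the tiny `toy` frame is a hypothetical stand-in that reuses two of the column names, and the same calls can be run on the full dataset.

```python
import pandas as pd

# Hypothetical stand-in for the real dataset; it reuses two of the column names.
toy = pd.DataFrame({
    "target": [0, 4, 0, 4],
    "text": ["so tired", "love this", "missed the bus", "great day"],
})

# Count missing values per column (all zeros means nothing is missing).
missing_per_column = toy.isnull().sum()

# Count how many rows carry each sentiment label.
label_counts = toy["target"].value_counts()

print(missing_per_column)
print(label_counts)
```

If every label has the same count, the classes are balanced and plain accuracy is a reasonable metric to report.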

Visualize the Dataset

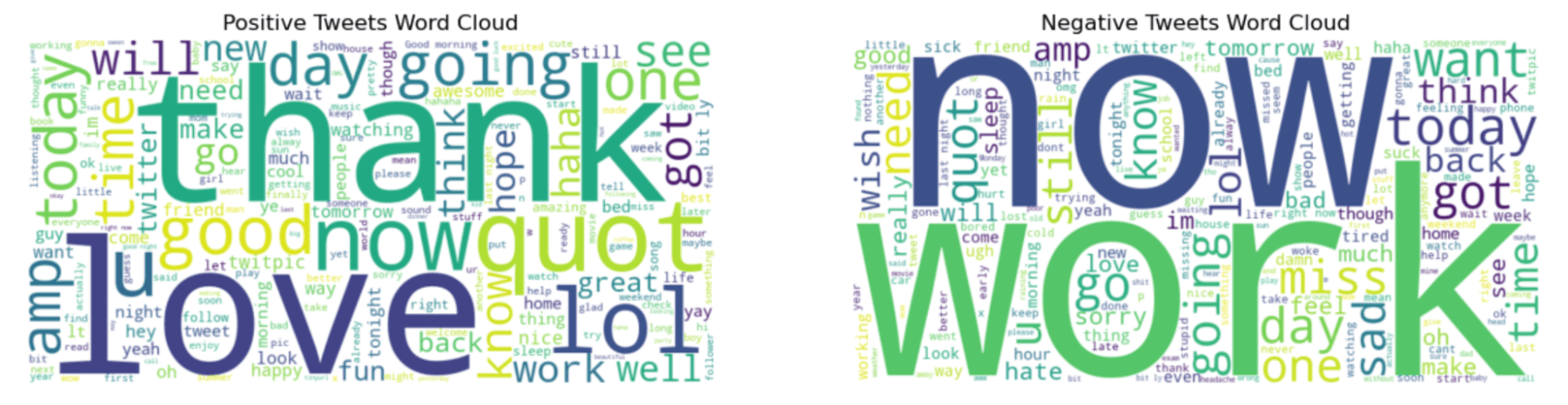

Great, we have both statistical and structural information about our dataset. Now, let’s create some visualizations to picture it. We all know the sharpest sentiments: positive and negative. To see which words are used for each, we will use a Python library called wordcloud.

This library visualizes your dataset according to the frequency of the words in it. If a word is used frequently, you will see it from its size: the relation is positive, so the bigger the word, the more often it is used.
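The idea behind the word sizes can be illustrated with a plain frequency count. This is a minimal sketch using only the standard library; the function name `word_frequencies` and the sample sentence are hypothetical, and the wordcloud library does its own tokenization, so this only shows the principle.

```python
import re
from collections import Counter

def word_frequencies(text):
    """Count how often each lowercase word appears in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words)

# Hypothetical mini-corpus, used only for illustration.
sample_text = "good morning good day thank you good night"
frequencies = word_frequencies(sample_text)

# The most frequent words are the ones a word cloud would draw largest.
print(frequencies.most_common(3))
```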

But first, we should select the positive and negative tweets and combine each group into a single string using Python’s join method. Let’s see the code.

from wordcloud import WordCloud

import matplotlib.pyplot as plt

# Separate positive and negative tweets based on the 'target' column

positive_tweets = data[data['target'] == 4]['text']

negative_tweets = data[data['target'] == 0]['text']

# Sample some positive and negative tweets to create word clouds

sample_positive_text = " ".join(text for text in positive_tweets.sample(frac=0.1, random_state=23))

sample_negative_text = " ".join(text for text in negative_tweets.sample(frac=0.1, random_state=23))

# Generate word cloud images for both positive and negative sentiments

wordcloud_positive = WordCloud(width=800, height=400, max_words=200, background_color="white").generate(sample_positive_text)

wordcloud_negative = WordCloud(width=800, height=400, max_words=200, background_color="white").generate(sample_negative_text)

# Display the generated images using matplotlib

plt.figure(figsize=(15, 7.5))

# Positive word cloud

plt.subplot(1, 2, 1)

plt.imshow(wordcloud_positive, interpolation='bilinear')

plt.title('Positive Tweets Word Cloud')

plt.axis("off")

# Negative word cloud

plt.subplot(1, 2, 2)

plt.imshow(wordcloud_negative, interpolation='bilinear')

plt.title('Negative Tweets Word Cloud')

plt.axis("off")

plt.show()

Here is the output.

The words “thank” and “now” in the left graph sound more positive. However, “work” and “now” look interesting because these words appear to occur often in negative tweets.

Sentiment Analysis

To perform sentiment analysis, here are the steps we will follow:

- Preprocess the text data

- Split the dataset

- Vectorize the dataset

- Data conversion

- Label encoding

- Define a neural network

- Train the model

- Evaluate the model (with plotting)
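The code in this article passes the raw tweets straight to the vectorizer. Below is a minimal sketch of what the first and third steps could look like, assuming the goal is to strip URLs, mentions, and punctuation and then look at word pairs as well as single words. The function names `clean_tweet` and `unigrams_and_bigrams`, the regular expressions, and the sample tweet are illustrative assumptions, not part of the original pipeline.

```python
import re

def clean_tweet(text):
    """Lowercase a tweet and strip URLs, @mentions, and non-letter characters."""
    text = text.lower()
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # remove URLs
    text = re.sub(r"@\w+", " ", text)                   # remove @mentions
    text = re.sub(r"[^a-z\s]", " ", text)               # keep letters only
    return re.sub(r"\s+", " ", text).strip()            # collapse whitespace

def unigrams_and_bigrams(text):
    """List single words and adjacent word pairs, similar in spirit to ngram_range=(1, 2)."""
    words = text.split()
    pairs = [" ".join(pair) for pair in zip(words, words[1:])]
    return words + pairs

# Hypothetical tweet, used only for illustration.
cleaned = clean_tweet("@bob I'm NOT happy with http://example.com today!!!")
print(cleaned)
print(unigrams_and_bigrams(cleaned))
```

Keeping pairs such as "not happy" is what lets a model go beyond a plain bag of words, where "not" and "happy" would be counted separately and the negation would be lost.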

Now, working on 1.6 million tweets would be a heavy workload for your computer or platform; that’s why I selected 50K positive and 50K negative tweets to start with.

# Since we need to use a smaller dataset due to resource constraints, let's sample 100k tweets

# Balanced sampling: 50k positive and 50k negative

sample_size_per_class = 50000

positive_sample = data[data['target'] == 4].sample(n=sample_size_per_class, random_state=23)

negative_sample = data[data['target'] == 0].sample(n=sample_size_per_class, random_state=23)

# Combine the samples into one dataset

balanced_sample = pd.concat([positive_sample, negative_sample])

# Check the balance of the sampled data

balanced_sample['target'].value_counts()

Next, let’s build our neural network.

import tensorflow as tf

import matplotlib.pyplot as plt

from sklearn.preprocessing import LabelEncoder

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.utils import to_categorical

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import TfidfVectorizer

# TF-IDF over single words and word pairs, limited to the 10,000 most frequent features
vectorizer = TfidfVectorizer(max_features=10000, ngram_range=(1, 2))

# Train and validation split

X_train, X_val, y_train, y_val = train_test_split(balanced_sample['text'], balanced_sample['target'], test_size=0.2, random_state=23)

# Vectorize the text data using TF-IDF (fit on the training set only)

X_train_vectorized = vectorizer.fit_transform(X_train)

X_val_vectorized = vectorizer.transform(X_val)

# Convert the sparse matrices to dense NumPy arrays (memory-heavy for large samples)

X_train_vectorized = X_train_vectorized.toarray()

X_val_vectorized = X_val_vectorized.toarray()

# Convert labels to one-hot encoding

encoder = LabelEncoder()

y_train_encoded = to_categorical(encoder.fit_transform(y_train))

y_val_encoded = to_categorical(encoder.transform(y_val))

# Define a simple neural network model

model = Sequential()

model.add(Dense(512, input_shape=(X_train_vectorized.shape[1],), activation='relu'))

model.add(Dense(2, activation='softmax'))  # 2 because we have two classes

# Compile the model

model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=['accuracy'])

# Train the model over epochs

history = model.fit(X_train_vectorized, y_train_encoded, epochs=10, batch_size=128,
                    validation_data=(X_val_vectorized, y_val_encoded), verbose=1)

# Plot the model accuracy over epochs

plt.figure(figsize=(10, 6))

plt.plot(history.history['accuracy'], label="Train Accuracy", marker="o")

plt.plot(history.history['val_accuracy'], label="Validation Accuracy", marker="o")

plt.title('Model Accuracy over Epochs')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend()

plt.grid(True)

plt.show()

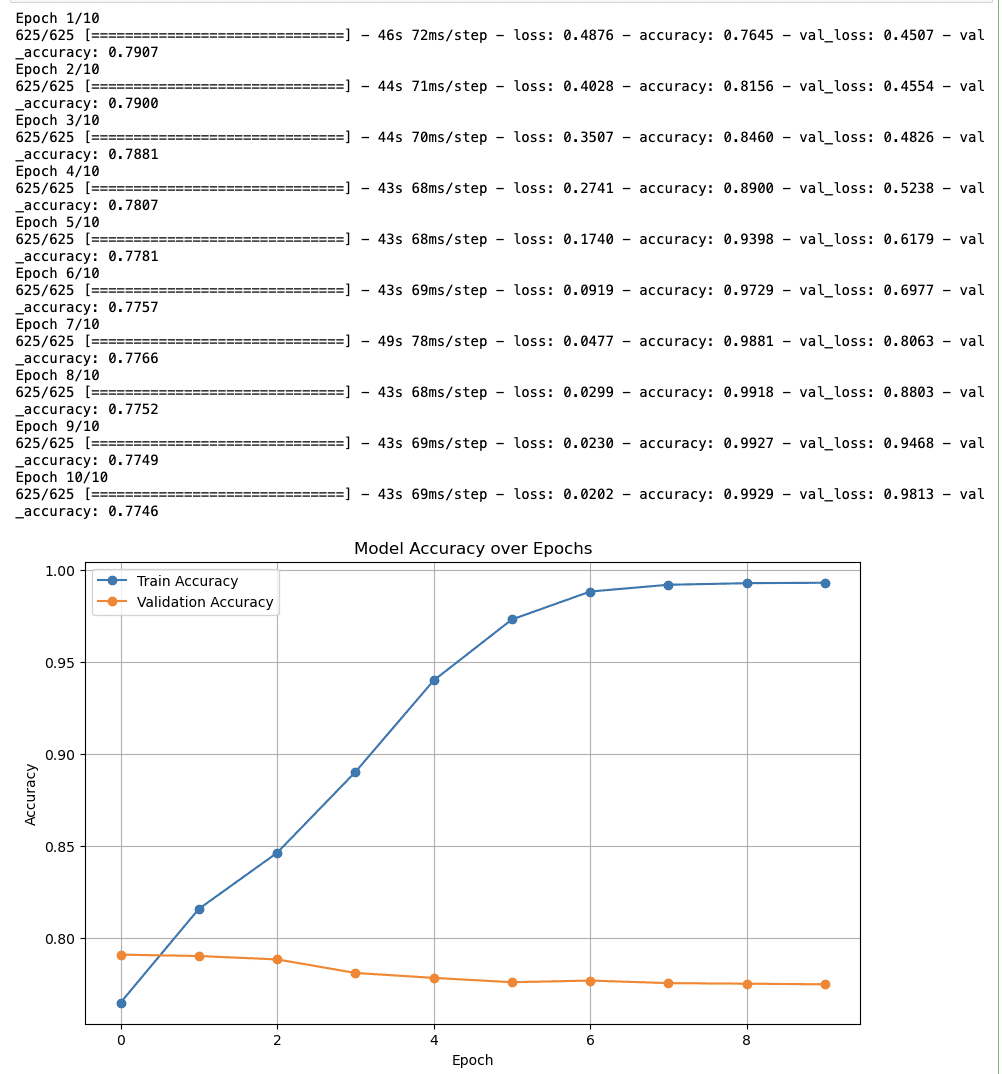

Here is the output.

Final Insights About Sentiment Analysis

- Training Accuracy: The accuracy starts at nearly 80% and increases steadily to nearly 100% by the tenth epoch. So, it looks like the model is learning the training data effectively.

- Validation Accuracy: The validation accuracy also starts at around 80% but then stays roughly steady instead of following the training curve, which might indicate that the model is overfitting and not generalizing to unseen data.
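One way to quantify the gap between the two curves is to compare them epoch by epoch. This is a minimal sketch; the helper name `summarize_history` is hypothetical, and the accuracy values are placeholders shaped like the `history.history` dictionary used in the plotting code, not the results of this run.

```python
def summarize_history(history_dict):
    """Return the best validation epoch (1-based) and the final train/validation gap."""
    train_acc = history_dict["accuracy"]
    val_acc = history_dict["val_accuracy"]
    best_epoch = max(range(len(val_acc)), key=lambda i: val_acc[i]) + 1
    final_gap = train_acc[-1] - val_acc[-1]
    return best_epoch, final_gap

# Hypothetical values for illustration only.
example_history = {
    "accuracy": [0.80, 0.88, 0.94, 0.98],
    "val_accuracy": [0.79, 0.80, 0.79, 0.78],
}

best_epoch, final_gap = summarize_history(example_history)
print(f"Best validation epoch: {best_epoch}, final gap: {final_gap:.2f}")
```

A gap that keeps growing suggests overfitting; stopping training near the best validation epoch or adding regularization such as dropout are common responses.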

At the beginning of this article, we piqued your curiosity. Now let’s explain the real story behind it.

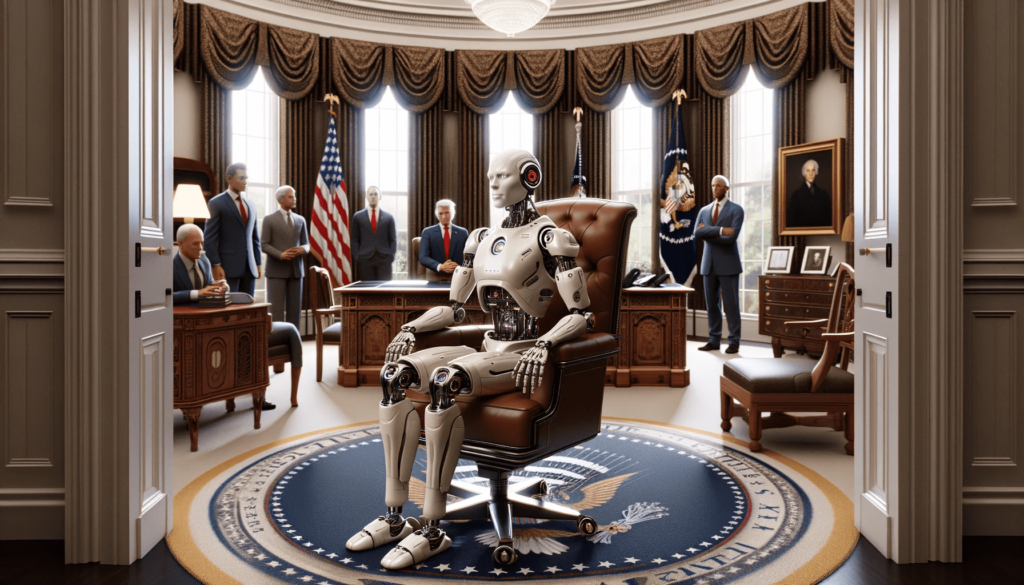

The paper Predicting Election Results from Twitter Using Machine Learning Algorithms, published in “Recent Advances in Computer Science and Communications”, presents a machine learning-based method for predicting election results. The full paper is available to read online.

In summary, the authors did sentiment analysis and achieved 94.2% accuracy on the AP Assembly Election 2019. It looks like they really got close.

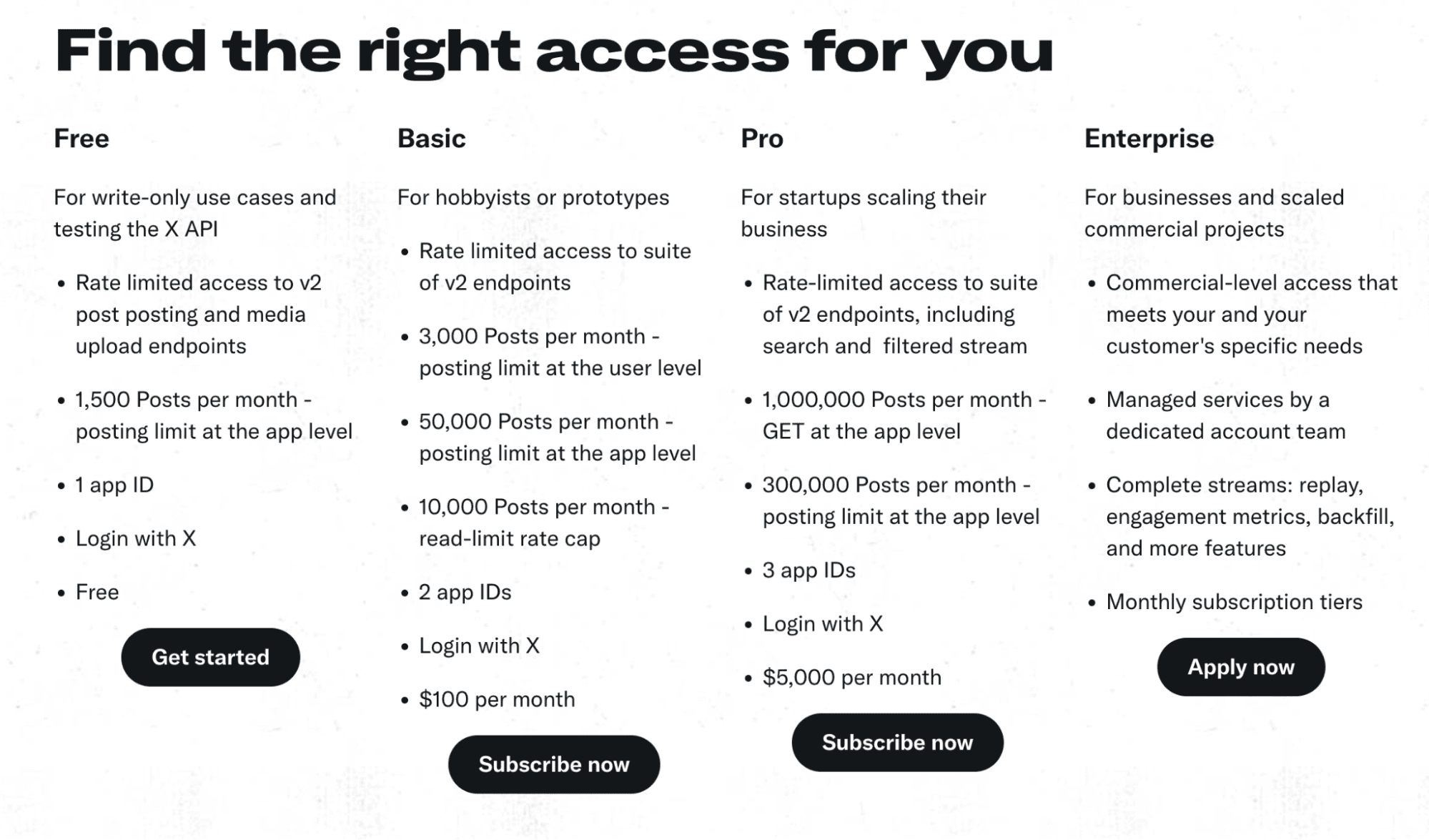

If you plan to do a portfolio project or research like this, or intend to go further with this case study, you can use the Twitter API, or X API. Here are the plans: https://developer.twitter.com/en/products/twitter-api

You can do hashtag sentiment analysis on Twitter after major sports or political events. In 2024, there will be elections in a number of countries, such as the United States, and you can follow the news about them.

The power of data science can really be seen in this example. This year, we will witness numerous elections worldwide, so if you aim to draw attention to your project, this could be a good idea. If you are a beginner looking for ways to learn data science, you can find many real-life projects, data science interview questions, and blog posts featuring data science projects like this on StrataScratch.

Nate Rosidi is a data scientist and works in product strategy. He is also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Connect with him on Twitter: StrataScratch or LinkedIn.