Architect defense-in-depth security for generative AI applications using the OWASP Top 10 for LLMs

Generative artificial intelligence (AI) applications built around large language models (LLMs) have demonstrated the potential to create and accelerate economic value for businesses. Examples of applications include conversational search, customer support agent assistance, customer support analytics, self-service virtual assistants, chatbots, rich media generation, content moderation, coding companions to accelerate secure, high-performance software development, deeper insights from multimodal content sources, acceleration of your organization’s security investigations and mitigations, and much more. Many customers are looking for guidance on how to manage security, privacy, and compliance as they develop generative AI applications. Understanding and addressing LLM vulnerabilities, threats, and risks during the design and architecture phases helps teams focus on maximizing the economic and productivity benefits generative AI can bring. Being aware of risks fosters transparency and trust in generative AI applications, encourages increased observability, helps to meet compliance requirements, and facilitates informed decision-making by leaders.

The goal of this post is to empower AI and machine learning (ML) engineers, data scientists, solutions architects, security teams, and other stakeholders to have a common mental model and framework to apply security best practices, allowing AI/ML teams to move fast without trading off security for speed. Specifically, this post seeks to help AI/ML engineers and data scientists who may not have had previous exposure to security principles gain an understanding of core security and privacy best practices in the context of developing generative AI applications using LLMs. We also discuss common security concerns that can undermine trust in AI, as identified by the Open Worldwide Application Security Project (OWASP) Top 10 for LLM Applications, and show ways you can use AWS to increase your security posture and confidence while innovating with generative AI.

This post provides three guided steps to architect risk management strategies while developing generative AI applications using LLMs. We first delve into the vulnerabilities, threats, and risks that arise from the implementation, deployment, and use of LLM solutions, and provide guidance on how to start innovating with security in mind. We then discuss how building on a secure foundation is essential for generative AI. Lastly, we connect these together with an example LLM workload to describe an approach towards architecting with defense-in-depth security across trust boundaries.

By the end of this post, AI/ML engineers, data scientists, and security-minded technologists will be able to identify strategies to architect layered defenses for their generative AI applications, understand how to map OWASP Top 10 for LLMs security concerns to some corresponding controls, and build foundational knowledge towards answering the following top AWS customer question themes for their applications:

- What are some of the common security and privacy risks with using generative AI based on LLMs in my applications that I can most impact with this guidance?

- What are some ways to implement security and privacy controls in the development lifecycle for generative AI LLM applications on AWS?

- What operational and technical best practices can I integrate into how my organization builds generative AI LLM applications to manage risk and increase confidence in generative AI applications using LLMs?

Improve security outcomes while developing generative AI

Innovation with generative AI using LLMs requires starting with security in mind to develop organizational resiliency, build on a secure foundation, and integrate security with a defense-in-depth security approach. Security is a shared responsibility between AWS and AWS customers. All the principles of the AWS Shared Responsibility Model are applicable to generative AI solutions. Refresh your understanding of the AWS Shared Responsibility Model as it applies to infrastructure, services, and data when you build LLM solutions.

Start with security in mind to develop organizational resiliency

Start with security in mind to develop organizational resiliency for developing generative AI applications that meet your security and compliance objectives. Organizational resiliency draws on and extends the definition of resiliency in the AWS Well-Architected Framework to include and prepare for the ability of an organization to recover from disruptions. Consider your security posture, governance, and operational excellence when assessing overall readiness to develop generative AI with LLMs and your organizational resiliency to any potential impacts. As your organization advances its use of emerging technologies such as generative AI and LLMs, overall organizational resiliency should be considered a cornerstone of a layered defensive strategy to protect assets and lines of business from unintended consequences.

Organizational resiliency matters substantially for LLM applications

Although all risk management programs can benefit from resilience, organizational resiliency matters substantially for generative AI. Five of the OWASP-identified top 10 risks for LLM applications rely on defining architectural and operational controls and enforcing them at an organizational scale in order to manage risk. These five risks are insecure output handling, supply chain vulnerabilities, sensitive information disclosure, excessive agency, and overreliance. Begin increasing organizational resiliency by socializing your teams to consider AI, ML, and generative AI security a core business requirement and top priority throughout the whole lifecycle of the product, from inception of the idea, to research, to the application’s development, deployment, and use. In addition to awareness, your teams should take action to account for generative AI in governance, assurance, and compliance validation practices.

Build organizational resiliency around generative AI

Organizations can start adopting ways to build their capacity and capabilities for AI/ML and generative AI security. You should begin by extending your existing security, assurance, compliance, and development programs to account for generative AI.

The following are the five key areas of interest for organizational AI, ML, and generative AI security:

- Understand the AI/ML security landscape

- Include diverse perspectives in security strategies

- Take action proactively for securing research and development activities

- Align incentives with organizational outcomes

- Prepare for realistic security scenarios in AI/ML and generative AI

Develop a threat model throughout your generative AI lifecycle

Organizations building with generative AI should focus on risk management, not risk elimination, and include threat modeling and business continuity planning in the planning, development, and operations of generative AI workloads. Work backward from production use of generative AI by developing a threat model for each application using traditional security risks as well as generative AI-specific risks. Some risks may be acceptable to your business, and a threat modeling exercise can help your company identify what your acceptable risk appetite is. For example, your business may not require 99.999% uptime on a generative AI application, so the additional recovery time associated with recovery using AWS Backup with Amazon S3 Glacier may be an acceptable risk. Conversely, the data in your model may be extremely sensitive and highly regulated, so deviation from AWS Key Management Service (AWS KMS) customer managed key (CMK) rotation and use of AWS Network Firewall to help enforce Transport Layer Security (TLS) for ingress and egress traffic to protect against data exfiltration may be an unacceptable risk.

Evaluate the risks (inherent vs. residual) of using the generative AI application in a production setting to identify the right foundational and application-level controls. Plan early for rollback and recovery from production security events and service disruptions such as prompt injection, training data poisoning, model denial of service, and model theft, and define the mitigations you will use as you define application requirements. Learning about the risks and controls that need to be put in place will help define the best implementation approach for building a generative AI application, and provide stakeholders and decision-makers with information to make informed business decisions about risk. If you are unfamiliar with the overall AI and ML workflow, start by reviewing 7 ways to improve security of your machine learning workloads to increase familiarity with the security controls needed for traditional AI/ML systems.

Just like building any ML application, building a generative AI application involves going through a set of research and development lifecycle stages. You may want to review the AWS Generative AI Security Scoping Matrix to help build a mental model to understand the key security disciplines that you should consider depending on which generative AI solution you select.

Generative AI applications using LLMs are typically developed and operated following ordered steps:

- Application requirements – Identify use case business objectives, requirements, and success criteria

- Model selection – Select a foundation model that aligns with use case requirements

- Model adaptation and fine-tuning – Prepare data, engineer prompts, and fine-tune the model

- Model evaluation – Evaluate foundation models with use case-specific metrics and select the best-performing model

- Deployment and integration – Deploy the selected foundation model on your optimized infrastructure and integrate with your generative AI application

- Application monitoring – Monitor application and model performance to enable root cause analysis

Ensure teams understand the critical nature of security as part of the design and architecture phases of your software development lifecycle on Day 1. This means discussing security at each layer of your stack and lifecycle, and positioning security and privacy as enablers to achieving business objectives. Architect controls for threats before you launch your LLM application, and consider whether the data and information you will use for model adaptation and fine-tuning warrants controls implementation in the research, development, and training environments. As part of quality assurance tests, introduce synthetic security threats (such as attempting to poison training data, or attempting to extract sensitive data through malicious prompt engineering) to test your defenses and security posture on a regular basis.

Additionally, stakeholders should establish a consistent review cadence for production AI, ML, and generative AI workloads and set organizational priority on understanding trade-offs between human and machine control and error prior to launch. Validating and assuring that these trade-offs are respected in the deployed LLM applications will increase the likelihood of risk mitigation success.

Build generative AI applications on secure cloud foundations

At AWS, security is our top priority. AWS is architected to be the most secure global cloud infrastructure on which to build, migrate, and manage applications and workloads. This is backed by our deep set of over 300 cloud security tools and the trust of our millions of customers, including the most security-sensitive organizations like government, healthcare, and financial services. When building generative AI applications using LLMs on AWS, you gain security benefits from the secure, reliable, and flexible AWS Cloud computing environment.

Use an AWS global infrastructure for security, privacy, and compliance

When you develop data-intensive applications on AWS, you can benefit from an AWS global Region infrastructure, architected to provide capabilities to meet your core security and compliance requirements. This is strengthened by our AWS Digital Sovereignty Pledge, our commitment to offering you the most advanced set of sovereignty controls and features available in the cloud. We are committed to expanding our capabilities to allow you to meet your digital sovereignty needs, without compromising on the performance, innovation, security, or scale of the AWS Cloud. To simplify implementation of security and privacy best practices, consider using reference designs and infrastructure as code resources such as the AWS Security Reference Architecture (AWS SRA) and the AWS Privacy Reference Architecture (AWS PRA). Read more about architecting privacy solutions, sovereignty by design, and compliance on AWS and use services such as AWS Config, AWS Artifact, and AWS Audit Manager to support your privacy, compliance, audit, and observability needs.

Understand your security posture using AWS Well-Architected and Cloud Adoption Frameworks

AWS offers best practice guidance developed from years of experience supporting customers in architecting their cloud environments with the AWS Well-Architected Framework and in evolving to realize business value from cloud technologies with the AWS Cloud Adoption Framework (AWS CAF). Understand the security posture of your AI, ML, and generative AI workloads by performing a Well-Architected Framework review. Reviews can be performed using tools like the AWS Well-Architected Tool, or with the help of your AWS team through AWS Enterprise Support. The AWS Well-Architected Tool automatically integrates insights from AWS Trusted Advisor to evaluate what best practices are in place and what opportunities exist to improve functionality and cost-optimization. The AWS Well-Architected Tool also offers customized lenses with specific best practices such as the Machine Learning Lens for you to regularly measure your architectures against best practices and identify areas for improvement. Checkpoint your journey on the path to value realization and cloud maturity by understanding how AWS customers adopt strategies to develop organizational capabilities in the AWS Cloud Adoption Framework for Artificial Intelligence, Machine Learning, and Generative AI. You might also find benefit in understanding your overall cloud readiness by participating in an AWS Cloud Readiness Assessment. AWS offers additional opportunities for engagement. Ask your AWS account team for more information on how to get started with the Generative AI Innovation Center.

Accelerate your security and AI/ML learning with best practices guidance, training, and certification

AWS also curates recommendations from Best Practices for Security, Identity, & Compliance and AWS Security Documentation to help you identify ways to secure your training, development, testing, and operational environments. If you’re just getting started and want to dive deeper on security training and certification, consider starting with AWS Security Fundamentals and the AWS Security Learning Plan. You can also use the AWS Security Maturity Model to help guide you in finding and prioritizing the best actions at different stages of maturity on AWS, starting with quick wins, through foundational, efficient, and optimized stages. After you and your teams have a basic understanding of security on AWS, we strongly recommend reviewing How to approach threat modeling and then leading a threat modeling exercise with your teams, starting with the Threat Modeling For Builders Workshop training program. There are many other AWS Security training and certification resources available.

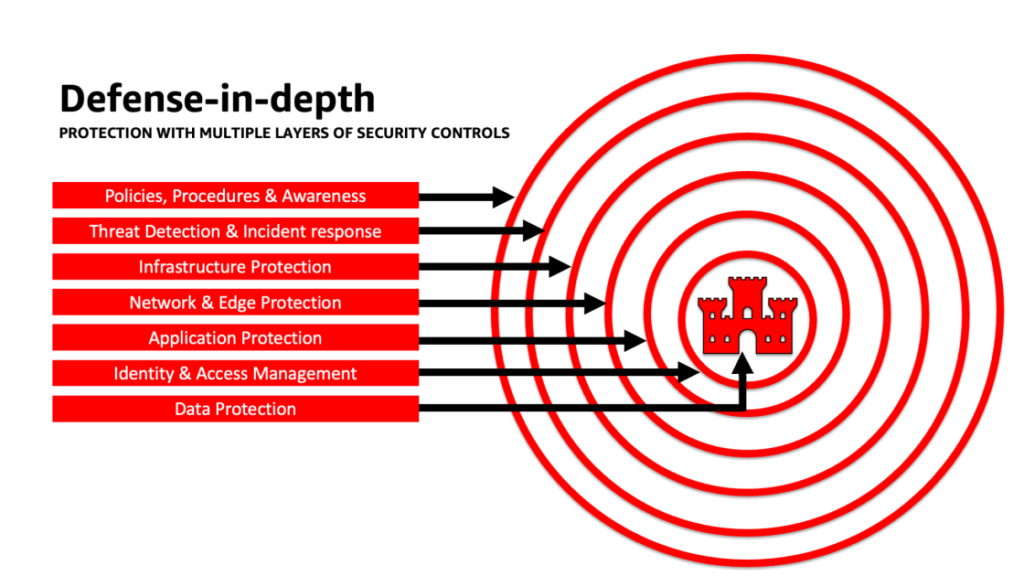

Apply a defense-in-depth approach to secure LLM applications

Applying a defense-in-depth security approach to your generative AI workloads, data, and information can help create the best conditions to achieve your business objectives. Defense-in-depth security best practices mitigate many of the common risks that any workload faces, helping you and your teams accelerate your generative AI innovation. A defense-in-depth security strategy uses multiple redundant defenses to protect your AWS accounts, workloads, data, and assets. It helps make sure that if any one security control is compromised or fails, additional layers exist to help isolate threats and prevent, detect, respond to, and recover from security events. You can use a combination of strategies, including AWS services and solutions, at each layer to improve the security and resiliency of your generative AI workloads.

Many AWS customers align to industry standard frameworks, such as the NIST Cybersecurity Framework. This framework helps ensure that your security defenses have protection across the pillars of Identify, Protect, Detect, Respond, Recover, and, most recently added, Govern. This framework can then map to AWS Security services and those from integrated third parties to help you validate adequate coverage and policies for any security event your organization encounters.

Defense in depth: Secure your environment, then add enhanced AI/ML-specific security and privacy capabilities

A defense-in-depth strategy should start by protecting your accounts and organization first, and then layer on the additional built-in security and privacy enhanced features of services such as Amazon Bedrock and Amazon SageMaker. Amazon has over 30 services in the Security, Identity, and Compliance portfolio which are integrated with AWS AI/ML services, and can be used together to help secure your workloads, accounts, and organization. To properly defend against the OWASP Top 10 for LLM, these should be used together with the AWS AI/ML services.

Start by implementing a policy of least privilege, using services like IAM Access Analyzer to look for overly permissive accounts, roles, and resources to restrict access using short-term credentials. Next, make sure that all data at rest is encrypted with AWS KMS, including considering the use of CMKs, and all data and models are versioned and backed up using Amazon Simple Storage Service (Amazon S3) versioning and applying object-level immutability with Amazon S3 Object Lock. Protect all data in transit between services using AWS Certificate Manager and/or AWS Private CA, and keep it within VPCs using AWS PrivateLink. Define strict data ingress and egress rules to help protect against manipulation and exfiltration using VPCs with AWS Network Firewall policies. Consider inserting AWS Web Application Firewall (AWS WAF) in front to protect web applications and APIs from malicious bots, SQL injection attacks, cross-site scripting (XSS), and account takeovers with Fraud Control. Logging with AWS CloudTrail, Amazon Virtual Private Cloud (Amazon VPC) flow logs, and Amazon Elastic Kubernetes Service (Amazon EKS) audit logs will help provide forensic review of each transaction available to services such as Amazon Detective. You can use Amazon Inspector to automate vulnerability discovery and management for Amazon Elastic Compute Cloud (Amazon EC2) instances, containers, AWS Lambda functions, and identify the network reachability of your workloads. Protect your data and models from suspicious activity using Amazon GuardDuty’s ML-powered threat models and intelligence feeds, and enabling its additional features for EKS Protection, ECS Protection, S3 Protection, RDS Protection, Malware Protection, Lambda Protection, and more. You can use services like AWS Security Hub to centralize and automate your security checks to detect deviations from security best practices and accelerate investigation and automate remediation of security findings with playbooks. You can also consider implementing a zero trust architecture on AWS to further increase fine-grained authentication and authorization controls for what human users or machine-to-machine processes can access on a per-request basis. Also consider using Amazon Security Lake to automatically centralize security data from AWS environments, SaaS providers, on premises, and cloud sources into a purpose-built data lake stored in your account. With Security Lake, you can get a more complete understanding of your security data across your entire organization.
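As one concrete illustration of protecting data in transit, the following minimal Python sketch builds an Amazon S3 bucket policy document that denies any request not sent over TLS, using the `aws:SecureTransport` condition key. The function name and the bucket name are hypothetical placeholders, and the sketch only constructs the JSON document; attaching it to a bucket and combining it with the other controls in this section is left to your own tooling.

```python
import json


def build_tls_only_bucket_policy(bucket_name: str) -> dict:
    """Return an S3 bucket policy document that denies non-TLS requests.

    bucket_name is a hypothetical placeholder supplied by the caller.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" when the request was not sent over TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # "example-llm-training-data" is an illustrative bucket name only.
    policy = build_tls_only_bucket_policy("example-llm-training-data")
    print(json.dumps(policy, indent=2))
```

An explicit deny is used here because it takes precedence over any allow, so the rule holds even if another statement grants broad access.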

After your generative AI workload environment has been secured, you can layer in AI/ML-specific features, such as Amazon SageMaker Data Wrangler to identify potential bias during data preparation and Amazon SageMaker Clarify to detect bias in ML data and models. You can also use Amazon SageMaker Model Monitor to evaluate the quality of SageMaker ML models in production, and notify you when there is drift in data quality, model quality, and feature attribution. These AWS AI/ML services working together (including SageMaker working with Amazon Bedrock) with AWS Security services can help you identify potential sources of natural bias and protect against malicious data tampering. Repeat this process for each of the OWASP Top 10 for LLM vulnerabilities to ensure you’re maximizing the value of AWS services to implement defense in depth to protect your data and workloads.

As AWS Enterprise Strategist Clarke Rodgers wrote in his blog post “CISO Insight: Every AWS Service Is A Security Service”, “I would argue that virtually every service within the AWS cloud either enables a security outcome by itself, or can be used (alone or in conjunction with one or more services) by customers to achieve a security, risk, or compliance objective.” And “Customer Chief Information Security Officers (CISOs) (or their respective teams) may want to take the time to ensure that they are well versed with all AWS services because there may be a security, risk, or compliance objective that can be met, even if a service doesn’t fall into the ‘Security, Identity, and Compliance’ category.”

Layer defenses at trust boundaries in LLM applications

When developing generative AI-based systems and applications, you should consider the same concerns as with any other ML application, as mentioned in the MITRE ATLAS Machine Learning Threat Matrix, such as being mindful of software and data component origins (such as performing an open source software audit, reviewing software bill of materials (SBOMs), and analyzing data workflows and API integrations) and implementing necessary protections against LLM supply chain threats. Include insights from industry frameworks, and be aware of ways to use multiple sources of threat intelligence and risk information to adjust and extend your security defenses to account for AI, ML, and generative AI security risks that are emergent and not included in traditional frameworks. Seek out companion information on AI-specific risks from industry, defense, governmental, international, and academic sources, because new threats emerge and evolve in this space regularly and companion frameworks and guides are updated frequently. For example, when using a Retrieval Augmented Generation (RAG) model, if the model doesn’t include the data it needs, it may request it from an external data source for use during inferencing and fine-tuning. The source that it queries may be outside of your control, and can be a potential source of compromise in your supply chain. A defense-in-depth approach should be extended towards external sources to establish trust, authentication, authorization, access, security, privacy, and accuracy of the data it is accessing. To dive deeper, read “Build a secure enterprise application with Generative AI and RAG using Amazon SageMaker JumpStart”.

Analyze and mitigate risk in your LLM applications

In this section, we analyze and discuss some risk mitigation techniques based on trust boundaries and interactions, or distinct areas of the workload with similar appropriate controls scope and risk profile. In this sample architecture of a chatbot application, there are five trust boundaries where controls are demonstrated, based on how AWS customers commonly build their LLM applications. Your LLM application may have more or fewer definable trust boundaries. In the following sample architecture, these trust boundaries are defined as:

- User interface interactions (request and response)

- Application interactions

- Model interactions

- Data interactions

- Organizational interactions and use

User interface interactions: Develop request and response monitoring

Detect and respond to cyber incidents related to generative AI in a timely manner by evaluating a strategy to address risk from the inputs and outputs of the generative AI application. For example, additional monitoring for behaviors and data outflow may need to be instrumented to detect sensitive information disclosure outside your domain or organization, in the case that it is used in the LLM application.
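To make response monitoring concrete, here is a minimal Python sketch that scans a model response for a few sensitive-looking patterns, redacts them, and reports counts that could be sent to your logging or alerting pipeline. The pattern set, the `ScanResult` type, and the `scan_model_output` function are all illustrative assumptions, not part of any AWS service; production detectors would need patterns tuned to your own data and would typically sit alongside managed controls.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only (assumptions); tune or replace these for your data.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


@dataclass
class ScanResult:
    redacted_text: str
    findings: dict = field(default_factory=dict)  # pattern name -> match count


def scan_model_output(text: str) -> ScanResult:
    """Redact sensitive-looking substrings and count what was found."""
    findings = {}
    redacted = text
    for name, pattern in SENSITIVE_PATTERNS.items():
        # subn returns the new string and the number of substitutions made.
        redacted, count = pattern.subn(f"[REDACTED:{name}]", redacted)
        if count:
            findings[name] = count
    return ScanResult(redacted_text=redacted, findings=findings)


if __name__ == "__main__":
    result = scan_model_output("Contact jane@example.com or 123-45-6789.")
    print(result.redacted_text)
    print(result.findings)
```

A non-empty `findings` dictionary is the signal to monitor: a sudden rise in redactions for one user or one prompt template may indicate an extraction attempt.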

Generative AI applications should still uphold the standard security best practices when it comes to protecting data. Establish a secure data perimeter and secure sensitive data stores. Encrypt data and information used for LLM applications at rest and in transit. Protect data used to train your model from training data poisoning by understanding and controlling which users, processes, and roles are allowed to contribute to the data stores, as well as how data flows in the application, by monitoring for bias deviations, and by using versioning and immutable storage in storage services such as Amazon S3. Establish strict data ingress and egress controls using services like AWS Network Firewall and Amazon VPC to protect against suspicious input and the potential for data exfiltration.

During the training, retraining, or fine-tuning process, you should be aware of any sensitive data that is utilized. After data is used during one of these processes, you should plan for a scenario where any user of your model suddenly becomes able to extract the data or information back out by utilizing prompt injection techniques. Understand the risks and benefits of using sensitive data in your models and inferencing. Implement robust authentication and authorization mechanisms for establishing and managing fine-grained access permissions, which don’t rely on LLM application logic to prevent disclosure. User-controlled input to a generative AI application has been demonstrated under some conditions to be able to provide a vector to extract information from the model or any non-user-controlled parts of the input. This can occur via prompt injection, where the user provides input that causes the output of the model to deviate from the expected guardrails of the LLM application, including providing clues to the datasets that the model was originally trained on.

Implement user-level access quotas for users providing input and receiving output from a model. You should consider approaches that don’t allow anonymous access under conditions where the model training data and information is sensitive, or where there is risk from an adversary training a facsimile of your model based on their input and your aligned model output. In general, if part of the input to a model consists of arbitrary user-provided text, consider the output to be susceptible to prompt injection, and accordingly ensure use of the outputs includes implemented technical and organizational countermeasures to mitigate insecure output handling, excessive agency, and overreliance. In the example earlier related to filtering for malicious input using AWS WAF, consider building a filter in front of your application for such potential misuse of prompts, and develop a policy for how to handle and evolve those as your model and data grows. Also consider a filtered review of the output before it is returned to the user to ensure it meets quality, accuracy, or content moderation standards. You may want to further customize this for your organization’s needs with an additional layer of control on inputs and outputs in front of your models to mitigate suspicious traffic patterns.
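A user-level access quota can be sketched as a sliding-window counter keyed by an authenticated user ID. The Python class below is a minimal, in-memory illustration under stated assumptions: the `UserQuota` name, the limits, and the injectable `clock` parameter are hypothetical, and a real deployment would usually enforce quotas in a shared store or at an API gateway rather than in process memory.

```python
import time
from collections import defaultdict, deque


class UserQuota:
    """In-memory sliding-window quota per authenticated user (illustrative only)."""

    def __init__(self, max_requests: int, window_seconds: float, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock  # injectable so the behavior can be tested deterministically
        self._history = defaultdict(deque)  # user_id -> timestamps of accepted requests

    def allow(self, user_id: str) -> bool:
        # Reject anonymous callers outright: no identity, no quota to charge.
        if not user_id:
            return False
        now = self._clock()
        history = self._history[user_id]
        # Drop timestamps that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False
        history.append(now)
        return True


if __name__ == "__main__":
    quota = UserQuota(max_requests=2, window_seconds=60)
    print([quota.allow("alice") for _ in range(3)])
```

Calling `allow` before every model invocation bounds how many input and output pairs a single identity can collect, which raises the cost of both extraction attempts and training a facsimile model.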

Application interactions: Application security and observability

Review your LLM application with attention to how a user could utilize your model to bypass standard authorization to a downstream tool or toolchain that they don’t have authorization to access or use. Another concern at this layer involves accessing external data stores by using a model as an attack mechanism using unmitigated technical or organizational LLM risks. For example, if your model is trained to access certain data stores that could contain sensitive data, you should ensure that you have proper authorization checks between your model and the data stores. Use immutable attributes about users that don’t come from the model when performing authorization checks. Unmitigated insecure output handling, insecure plugin design, and excessive agency can create conditions where a threat actor may use a model to trick the authorization system into escalating effective privileges, leading to a downstream component believing the user is authorized to retrieve data or take a specific action.
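The guidance to authorize with attributes that don’t come from the model can be sketched as follows. In this minimal Python example, the application holds an `AuthenticatedUser` established at sign-in, treats the model’s proposed tool call as untrusted, and binds the call to the session identity. All names (`AuthenticatedUser`, `authorize_tool_call`, the `tool`, `args`, and `user_id` keys) are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass

# Argument names the model must never be able to set (an illustrative assumption).
IDENTITY_KEYS = frozenset({"user_id", "role", "tenant_id"})


@dataclass(frozen=True)
class AuthenticatedUser:
    """Identity established by the application at sign-in, never by the model."""
    user_id: str
    allowed_tools: frozenset


def authorize_tool_call(user: AuthenticatedUser, model_output: dict) -> dict:
    """Validate a model-proposed tool call against the session user's permissions."""
    tool = model_output.get("tool")
    if tool not in user.allowed_tools:
        raise PermissionError(f"user {user.user_id!r} may not call tool {tool!r}")
    # Strip any identity-like arguments the model (or a prompt injection) supplied.
    args = {k: v for k, v in model_output.get("args", {}).items() if k not in IDENTITY_KEYS}
    # Bind the call to the authenticated identity, ignoring model-claimed identity.
    return {"tool": tool, "args": args, "user_id": user.user_id}


if __name__ == "__main__":
    alice = AuthenticatedUser("alice", frozenset({"search_docs"}))
    proposed = {"tool": "search_docs", "args": {"query": "q3 report", "user_id": "admin"}}
    print(authorize_tool_call(alice, proposed))
```

The downstream tool should then enforce its own access checks using the returned `user_id`, so that a model tricked into claiming elevated privileges cannot change what the caller is allowed to retrieve.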

When implementing any generative AI plugin or tool, it is imperative to examine and comprehend the level of access being granted, as well as scrutinize the access controls that have been configured. Using unmitigated insecure generative AI plugins may render your system susceptible to supply chain vulnerabilities and threats, potentially leading to malicious actions, including running remote code.

Model interactions: Model attack prevention

You should be aware of the origin of any models, plugins, tools, or data you use, in order to evaluate and mitigate against supply chain vulnerabilities. For example, some common model formats permit the embedding of arbitrary runnable code in the models themselves. Use package mirrors, scanning, and additional inspections as relevant to your organization’s security goals.
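One simple additional inspection is to refuse to load any model artifact whose checksum is not on an approved list. The Python sketch below computes a SHA-256 digest with the standard library and compares it against a set of approved digests. The function names and the idea of an approved-digest set are assumptions for illustration; how you record and distribute approved digests (for example, from a reviewed internal registry) is up to your organization, and a checksum match does not by itself prove an artifact is safe.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model artifacts don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, approved_digests: set) -> str:
    """Return the digest if approved; raise ValueError before anything is deserialized."""
    actual = sha256_of_file(path)
    if actual not in approved_digests:
        raise ValueError(f"{path.name}: digest {actual} is not on the approved list")
    return actual


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        artifact = Path(tmp) / "model.bin"  # hypothetical artifact name
        artifact.write_bytes(b"example weights")
        approved = {hashlib.sha256(b"example weights").hexdigest()}
        print(verify_model_artifact(artifact, approved))
```

Running the check before deserialization matters because formats that can embed runnable code may execute it at load time.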

The datasets you train and fine-tune your models on must also be reviewed. If you further automatically fine-tune a model based on user feedback (or other end-user-controllable information), you must consider whether a malicious threat actor could change the model arbitrarily by manipulating their responses and achieve training data poisoning.
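As a hedged illustration of limiting how much any single user can steer automated fine-tuning, the following Python sketch caps the number of feedback examples accepted per user before they enter a training set. This specific mitigation is our own example rather than one named above, the function name and tuple layout are hypothetical, and a per-user cap does not stop an adversary who controls many accounts, so treat it as one layer alongside data review.

```python
from collections import defaultdict


def cap_feedback_per_user(feedback, max_per_user: int):
    """Keep at most max_per_user examples from each user, preserving arrival order.

    feedback is an iterable of (user_id, example) tuples (an assumed layout).
    """
    kept = []
    counts = defaultdict(int)
    for user_id, example in feedback:
        if counts[user_id] < max_per_user:
            counts[user_id] += 1
            kept.append((user_id, example))
    return kept


if __name__ == "__main__":
    incoming = [("u1", "a"), ("u1", "b"), ("u2", "c"), ("u1", "d")]
    print(cap_feedback_per_user(incoming, max_per_user=2))
```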

Data interactions: Monitor data quality and usage

Generative AI models such as LLMs generally work well because they have been trained on a large amount of data. Although this data helps LLMs complete complex tasks, it can also expose your system to risk of training data poisoning, which occurs when inappropriate data is included in or omitted from a training dataset in a way that can alter a model’s behavior. To mitigate this risk, you should look at your supply chain and understand the data review process for your system before data is used inside your model. Although the training pipeline is a prime source for data poisoning, you should also look at how your model gets data, such as in a RAG model or data lake, and whether the source of that data is trusted and protected. Use AWS Security services such as AWS Security Hub, Amazon GuardDuty, and Amazon Inspector to help continuously monitor for suspicious activity in Amazon EC2, Amazon EKS, Amazon S3, Amazon Relational Database Service (Amazon RDS), and network access that may be indicators of emerging threats, and use Detective to visualize security investigations. Also consider using services such as Amazon Security Lake to accelerate security investigations by creating a purpose-built data lake to automatically centralize security data from AWS environments, SaaS providers, on premises, and cloud sources which contribute to your AI/ML workloads.

Organizational interactions: Implement enterprise governance guardrails for generative AI

Identify risks associated with the use of generative AI for your businesses. You should build your organization’s risk taxonomy and conduct risk assessments to make informed decisions when deploying generative AI solutions. Develop a business continuity plan (BCP) that includes AI, ML, and generative AI workloads and that can be enacted quickly to replace the lost functionality of an impacted or offline LLM application to meet your SLAs.

Identify process and resource gaps, inefficiencies, and inconsistencies, and improve awareness and ownership across your business. Threat model all generative AI workloads to identify and mitigate potential security threats that may lead to business-impacting outcomes, including unauthorized access to data, denial of service, and resource misuse. Take advantage of the new AWS Threat Composer Modeling Tool to help reduce time-to-value when performing threat modeling. Later in your development cycles, consider introducing security chaos engineering fault injection experiments to create real-world conditions to understand how your system will react to unknowns and build confidence in the system’s resiliency and security.

Include diverse perspectives in developing security strategies and risk management mechanisms to ensure adherence and coverage for AI/ML and generative AI security across all job roles and functions. Bring a security mindset to the table from the inception and research of any generative AI application to align on requirements. If you need extra assistance from AWS, ask your AWS account manager to make sure that there is equal support by requesting AWS Solutions Architects from AWS Security and AI/ML to help in tandem.

Ensure that your security organization routinely takes actions to foster communication around both risk awareness and risk management understanding among generative AI stakeholders such as product managers, software developers, data scientists, and executive leadership, allowing threat intelligence and controls guidance to reach the teams that may be impacted. Security organizations can support a culture of responsible disclosure and iterative improvement by participating in discussions and bringing new ideas and information to generative AI stakeholders that relate to their business objectives. Learn more about our commitment to Responsible AI and additional responsible AI resources to help our customers.

Gain advantage in enabling better organizational posture for generative AI by unblocking time to value in the existing security processes of your organization. Proactively evaluate where your organization may require processes that are overly burdensome given the generative AI security context and refine these to provide developers and scientists a clear path to launch with the correct controls in place.

Assess where there may be opportunities to align incentives, derisk, and provide a clear line of sight on the desired outcomes. Update controls guidance and defenses to meet the evolving needs of AI/ML and generative AI application development to reduce confusion and uncertainty that can cost development time, increase risk, and increase impact.

Ensure that stakeholders who are not security experts are able both to understand how organizational governance, policies, and risk management steps apply to their workloads and to apply risk management mechanisms. Prepare your organization to respond to realistic events and scenarios that may occur with generative AI applications, and ensure that generative AI builder roles and response teams are aware of escalation paths and actions in case of concern for any suspicious activity.

Conclusion

Successfully commercializing innovation with any new and emerging technology requires starting with a security-first mindset, building on a secure infrastructure foundation, and thinking about how to further integrate security at each level of the technology stack early with a defense-in-depth security approach. This includes interactions at multiple layers of your technology stack, and integration points within your digital supply chain, to ensure organizational resiliency. Although generative AI introduces some new security and privacy challenges, if you follow fundamental security best practices such as using defense-in-depth with layered security services, you can help protect your organization from many common issues and evolving threats. You should implement layered AWS Security services across your generative AI workloads and larger organization, and focus on integration points in your digital supply chains to secure your cloud environments. Then you can use the enhanced security and privacy capabilities in AWS AI/ML services such as Amazon SageMaker and Amazon Bedrock to add further layers of enhanced security and privacy controls to your generative AI applications. Embedding security from the start will make it faster, easier, and more cost-effective to innovate with generative AI, while simplifying compliance. This will help you increase controls, confidence, and observability for your generative AI applications for your employees, customers, partners, regulators, and other concerned stakeholders.

Additional references

- Industry standard frameworks for AI/ML-specific risk management and security:

About the authors

Christopher Rae is a Principal Worldwide Security GTM Specialist focused on developing and executing strategic initiatives that accelerate and scale adoption of AWS security services. He is passionate about the intersection of cybersecurity and emerging technologies, with 20+ years of experience in global strategic leadership roles delivering security solutions to media, entertainment, and telecom customers. He recharges through reading, traveling, food and wine, discovering new music, and advising early-stage startups.

Elijah Winter is a Senior Security Engineer in Amazon Security, holding a BS in Cyber Security Engineering and infused with a love for Harry Potter. Elijah excels in identifying and addressing vulnerabilities in AI systems, blending technical expertise with a touch of wizardry. Elijah designs tailored security protocols for AI ecosystems, bringing a magical flair to digital defenses. Integrity driven, Elijah has a security background in both public and commercial sector organizations focused on protecting trust.

Ram Vittal is a Principal ML Solutions Architect at AWS. He has over 3 decades of experience architecting and building distributed, hybrid, and cloud applications. He is passionate about building secure and scalable AI/ML and big data solutions to help enterprise customers with their cloud adoption and optimization journey to improve their business outcomes. In his spare time, he rides his motorcycle and walks with his 3-year-old Sheepadoodle!

Navneet Tuteja is a Data Specialist at Amazon Web Services. Before joining AWS, Navneet worked as a facilitator for organizations seeking to modernize their data architectures and implement comprehensive AI/ML solutions. She holds an engineering degree from Thapar University, as well as a master’s degree in statistics from Texas A&M University.

Emily Soward is a Data Scientist with AWS Professional Services. She holds a Master of Science with Distinction in Artificial Intelligence from the University of Edinburgh in Scotland, United Kingdom with emphasis on Natural Language Processing (NLP). Emily has served in applied scientific and engineering roles focused on AI-enabled product research and development, operational excellence, and governance for AI workloads running at organizations in the public and private sector. She contributes to customer guidance as an AWS Senior Speaker and, recently, as an author for AWS Well-Architected in the Machine Learning Lens.
