Build an Amazon SageMaker Model Registry approval and promotion workflow with human intervention

This post is co-written with Jayadeep Pabbisetty, Sr. Specialist Data Engineering at Merck, and Prabakaran Mathaiyan, Sr. ML Engineer at Tiger Analytics.

The large machine learning (ML) model development lifecycle requires a scalable model release process similar to that of software development. Model developers often work together in developing ML models and require a robust MLOps platform to work in. A scalable MLOps platform needs to include a process for handling the workflow of ML model registry, approval, and promotion to the next environment level (development, test, UAT, or production).

A model developer typically starts to work in an individual ML development environment within Amazon SageMaker. When a model is trained and ready to be used, it needs to be approved after being registered in the Amazon SageMaker Model Registry. In this post, we discuss how the AWS AI/ML team collaborated with the Merck Human Health IT MLOps team to build a solution that uses an automated workflow for ML model approval and promotion with human intervention in the middle.

Overview of solution

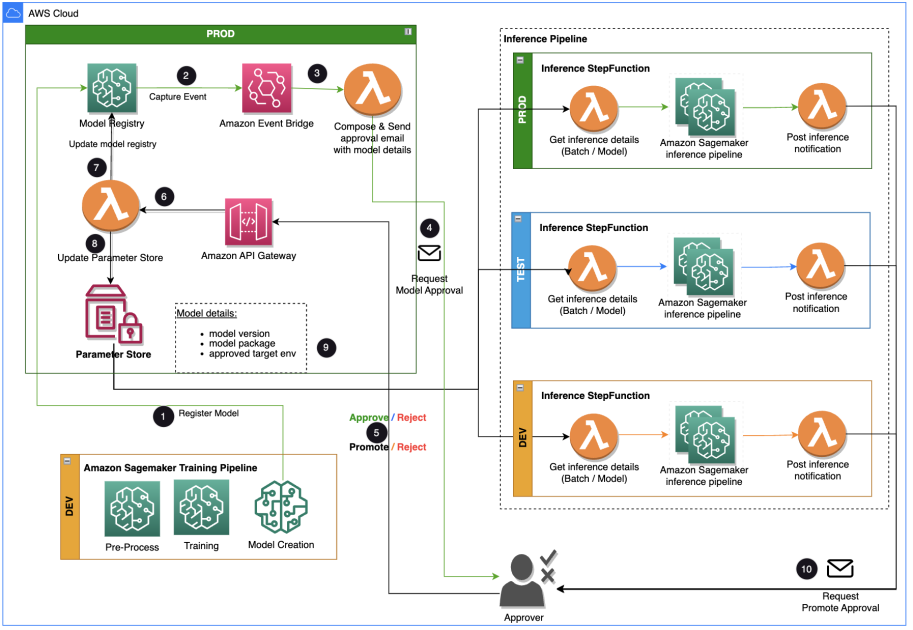

This post focuses on a workflow solution that the ML model development lifecycle can use between the training pipeline and inferencing pipeline. The solution provides a scalable workflow for MLOps in supporting the ML model approval and promotion process with human intervention. An ML model registered by a data scientist needs an approver to review and approve before it’s used for an inference pipeline and in the next environment level (test, UAT, or production). The solution uses AWS Lambda, Amazon API Gateway, Amazon EventBridge, and SageMaker to automate the workflow with human approval intervention in the middle. The architecture diagram shows the overall system design, the AWS services used, and the workflow for approving and promoting ML models with human intervention from development to production.

The workflow includes the following steps:

- The training pipeline develops and registers a model in the SageMaker model registry. At this point, the model status is PendingManualApproval.

- EventBridge monitors status change events to automatically take actions with simple rules.

- The EventBridge model registration event rule invokes a Lambda function that constructs an email with a link to approve or reject the registered model.

- The approver gets an email with the link to review and approve or reject the model.

- The approver approves the model by following the link in the email to an API Gateway endpoint.

- API Gateway invokes a Lambda function to initiate model updates.

- The model registry is updated for the model status (Approved for the dev environment, but PendingManualApproval for test, UAT, and production).

- The model detail is stored in AWS Parameter Store, a capability of AWS Systems Manager, including the model version, approved target environment, and model package.

- The inference pipeline fetches the model approved for the target environment from Parameter Store.

- The post-inference notification Lambda function collects batch inference metrics and sends an email to the approver to promote the model to the next environment.
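The EventBridge rule in the steps above could look roughly like the following Python sketch. It is not the authors' implementation. The rule name, target ID, and model package group are hypothetical, and the `detail` field names reflect our understanding of the SageMaker model package state change event, so check them against a captured event before relying on them. The client is passed in (for example, `boto3.client("events")`) so the pattern can be inspected without AWS access.

```python
import json


def build_registration_event_pattern(model_package_group: str) -> dict:
    """Event pattern matching model versions that are awaiting manual approval.

    Assumption: SageMaker emits events with this source and detail-type, and
    the detail carries ModelPackageGroupName and ModelApprovalStatus.
    """
    return {
        "source": ["aws.sagemaker"],
        "detail-type": ["SageMaker Model Package State Change"],
        "detail": {
            "ModelPackageGroupName": [model_package_group],
            "ModelApprovalStatus": ["PendingManualApproval"],
        },
    }


def create_registration_rule(events_client, rule_name, model_package_group, lambda_arn):
    """Create the rule and point it at the email-building Lambda function.

    The Lambda function also needs a resource policy that lets EventBridge
    invoke it; that step is omitted from this sketch.
    """
    pattern = build_registration_event_pattern(model_package_group)
    events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(pattern),
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        # "approval-email-lambda" is a hypothetical target ID.
        Targets=[{"Id": "approval-email-lambda", "Arn": lambda_arn}],
    )
    return pattern
```

Filtering on the approval status inside the pattern keeps the Lambda function from being invoked again when the same model version later moves to Approved or Rejected.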

Prerequisites

The workflow in this post assumes the environment for the training pipeline is set up in SageMaker, along with other resources. The input to the training pipeline is the features dataset. The feature generation details are not included in this post; instead, it focuses on the registry, approval, and promotion of ML models after they’re trained. The model is registered in the model registry and is governed by a monitoring framework in Amazon SageMaker Model Monitor to detect any drift and proceed to retraining in case of model drift.

Workflow details

The approval workflow starts with a model developed from a training pipeline. When data scientists develop a model, they register it to the SageMaker Model Registry with the model status of PendingManualApproval. EventBridge monitors SageMaker for the model registration event and triggers an event rule that invokes a Lambda function. The Lambda function dynamically constructs an email for an approval of the model with a link to an API Gateway endpoint to another Lambda function. When the approver follows the link to approve the model, API Gateway forwards the approval action to the Lambda function, which updates the SageMaker Model Registry and the model attributes in Parameter Store. The approver must be authenticated and part of the approver group managed by Active Directory. The initial approval marks the model as Approved for dev but PendingManualApproval for test, UAT, and production. The model attributes saved in Parameter Store include the model version, model package, and approved target environment.
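As a minimal sketch of the two Lambda functions described above, the following Python builds the approve/reject links for the email and handles the approval request forwarded by API Gateway. Several details are assumptions for illustration only: the query parameter names (`action`, `model_package_arn`, `environment`), the Parameter Store path `/mlops/models/<environment>/approved`, and reading the version from the last segment of the model package ARN. Authentication of the approver against Active Directory is not shown. The clients are injected (for example, `boto3.client("sagemaker")` and `boto3.client("ssm")`).

```python
import json
from urllib.parse import urlencode

# Maps the action carried in the link to a Model Registry approval status.
STATUS_BY_ACTION = {"approve": "Approved", "reject": "Rejected"}


def build_approval_links(api_base_url, model_package_arn, environment="dev"):
    """Return one link per action for inclusion in the approval email."""
    links = {}
    for action in STATUS_BY_ACTION:
        query = urlencode({
            "action": action,
            "model_package_arn": model_package_arn,
            "environment": environment,
        })
        links[action] = f"{api_base_url}?{query}"
    return links


def handle_approval(event, sagemaker_client, ssm_client):
    """Apply the approver's decision (API Gateway proxy-style event assumed)."""
    params = event.get("queryStringParameters") or {}
    action = params.get("action")
    arn = params.get("model_package_arn")
    environment = params.get("environment", "dev")
    if action not in STATUS_BY_ACTION or not arn:
        return {"statusCode": 400, "body": "Missing or invalid action or model_package_arn"}

    status = STATUS_BY_ACTION[action]
    sagemaker_client.update_model_package(
        ModelPackageArn=arn,
        ModelApprovalStatus=status,
        ApprovalDescription=f"{status} for {environment} via approval link",
    )
    if status == "Approved":
        # Hypothetical parameter layout: one JSON document per environment.
        details = {
            "model_package_arn": arn,
            "model_version": arn.rsplit("/", 1)[-1],
            "approved_environment": environment,
        }
        ssm_client.put_parameter(
            Name=f"/mlops/models/{environment}/approved",
            Value=json.dumps(details),
            Type="String",
            Overwrite=True,
        )
    return {"statusCode": 200, "body": f"Model {status} for {environment}"}
```

Keeping the decision logic in a function that takes its clients as arguments makes it straightforward to exercise with stub clients before wiring it to a real Lambda handler.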

When an inference pipeline needs to fetch a model, it checks Parameter Store for the latest model version approved for the target environment and gets the inference details. When the inference pipeline is complete, a post-inference notification email is sent to a stakeholder requesting an approval to promote the model to the next environment level. The email has the details about the model and metrics as well as an approval link to an API Gateway endpoint for a Lambda function that updates the model attributes.
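The lookup an inference pipeline performs could be sketched as follows. The parameter path and the JSON keys are hypothetical, chosen only to illustrate reading the model version, model package, and approved target environment from Parameter Store. The client is injected (for example, `boto3.client("ssm")`).

```python
import json


def parameter_name(environment: str, prefix: str = "/mlops/models") -> str:
    """Hypothetical naming scheme: one parameter per target environment."""
    return f"{prefix}/{environment}/approved"


def fetch_approved_model(ssm_client, environment: str) -> dict:
    """Return the model details approved for the given environment.

    Raises ValueError if the stored record was approved for a different
    environment, so a pipeline never runs with a model meant for another stage.
    """
    response = ssm_client.get_parameter(Name=parameter_name(environment))
    details = json.loads(response["Parameter"]["Value"])
    if details.get("approved_environment") != environment:
        raise ValueError(
            f"Parameter for {environment!r} holds a model approved for "
            f"{details.get('approved_environment')!r}"
        )
    return details
```

A pipeline step would then pass `details["model_package_arn"]` to whatever code creates the model for batch inference.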

The following is the sequence of events and implementation steps for the ML model approval/promotion workflow from model creation to production. The model is promoted from development to test, UAT, and production environments with an explicit human approval in each step.

We start with the training pipeline, which is ready for model development. The model version starts as 0 in SageMaker Model Registry.

- The SageMaker training pipeline develops and registers a model in SageMaker Model Registry. Model version 1 is registered and starts with Pending Manual Approval status.

The Model Registry metadata has four custom fields for the environments: dev, test, uat, and prod.

- EventBridge monitors the SageMaker Model Registry for the status change to automatically take action with simple rules.

- The model registration event rule invokes a Lambda function that constructs an email with the link to approve or reject the registered model.

- The approver gets an email with the link to review and approve (or reject) the model.

- The approver approves the model by following the link to the API Gateway endpoint in the email.

- API Gateway invokes the Lambda function to initiate model updates.

- The SageMaker Model Registry is updated with the model status.

- The model detail information is stored in Parameter Store, including the model version, approved target environment, and model package.

- The inference pipeline fetches the model approved for the target environment from Parameter Store.

- The post-inference notification Lambda function collects batch inference metrics and sends an email to the approver to promote the model to the next environment.

- The approver approves the model promotion to the next level by following the link to the API Gateway endpoint, which triggers the Lambda function to update the SageMaker Model Registry and Parameter Store.

The complete history of the model versioning and approval is saved for review in Parameter Store.
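The stage-by-stage promotion in the steps above, tracked through the four custom metadata fields (dev, test, uat, prod), can be illustrated with a small pure-Python sketch. The function names are hypothetical, and the rule that a stage can only be approved once every earlier stage is approved is our reading of "explicit human approval in each step", not a documented constraint of the solution.

```python
from typing import Dict, Optional

ENVIRONMENTS = ("dev", "test", "uat", "prod")
PENDING = "PendingManualApproval"
APPROVED = "Approved"


def initial_metadata() -> Dict[str, str]:
    """Metadata for a newly registered model version: every stage is pending."""
    return {env: PENDING for env in ENVIRONMENTS}


def next_environment(current: str) -> Optional[str]:
    """Return the stage that follows `current`, or None after prod."""
    index = ENVIRONMENTS.index(current)
    return ENVIRONMENTS[index + 1] if index + 1 < len(ENVIRONMENTS) else None


def approve_environment(metadata: Dict[str, str], environment: str) -> Dict[str, str]:
    """Return a copy of the metadata with `environment` approved.

    Raises ValueError if an earlier stage has not been approved yet.
    """
    index = ENVIRONMENTS.index(environment)
    skipped = [env for env in ENVIRONMENTS[:index] if metadata.get(env) != APPROVED]
    if skipped:
        raise ValueError(f"Cannot approve {environment}; pending stages: {skipped}")
    updated = dict(metadata)
    updated[environment] = APPROVED
    return updated
```

The resulting dictionary has string keys and values, so it could be written back to the model package as custom metadata (for example, through the `CustomerMetadataProperties` argument of `update_model_package`) by the approval Lambda function.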

Conclusion

The large ML model development lifecycle requires a scalable ML model approval process. In this post, we shared an implementation of an ML model registry, approval, and promotion workflow with human intervention using SageMaker Model Registry, EventBridge, API Gateway, and Lambda. If you are considering a scalable ML model development process for your MLOps platform, you can follow the steps in this post to implement a similar workflow.

About the authors

Tom Kim is a Senior Solution Architect at AWS, where he helps his customers achieve their business objectives by developing solutions on AWS. He has extensive experience in enterprise systems architecture and operations across several industries, particularly in Health Care and Life Science. Tom is always learning new technologies that lead to desired business outcomes for customers, such as AI/ML, GenAI, and Data Analytics. He also enjoys traveling to new places and playing new golf courses whenever he can find time.

Shamika Ariyawansa, serving as a Senior AI/ML Solutions Architect in the Healthcare and Life Sciences division at Amazon Web Services (AWS), specializes in Generative AI, with a focus on Large Language Model (LLM) training, inference optimizations, and MLOps (Machine Learning Operations). He guides customers in embedding advanced Generative AI into their projects, ensuring robust training processes, efficient inference mechanisms, and streamlined MLOps practices for effective and scalable AI solutions. Beyond his professional commitments, Shamika passionately pursues snowboarding and off-roading adventures.

Jayadeep Pabbisetty is a Senior ML/Data Engineer at Merck, where he designs and develops ETL and MLOps solutions to unlock data science and analytics for the business. He is always enthusiastic about learning new technologies, exploring new avenues, and acquiring the skills necessary to evolve with the ever-changing IT industry. In his spare time, he follows his passion for sports and likes to travel and explore new places.

Prabakaran Mathaiyan is a Senior Machine Learning Engineer at Tiger Analytics LLC, where he helps his customers achieve their business objectives by providing solutions for the model building, training, validation, monitoring, CI/CD, and improvement of machine learning solutions on AWS. Prabakaran is always learning new technologies that lead to desired business outcomes for customers, such as AI/ML, GenAI, GPT, and LLM. He also enjoys playing cricket whenever he can find time.
