LLMs and Transformers from Scratch: the Decoder | by Luís Roque

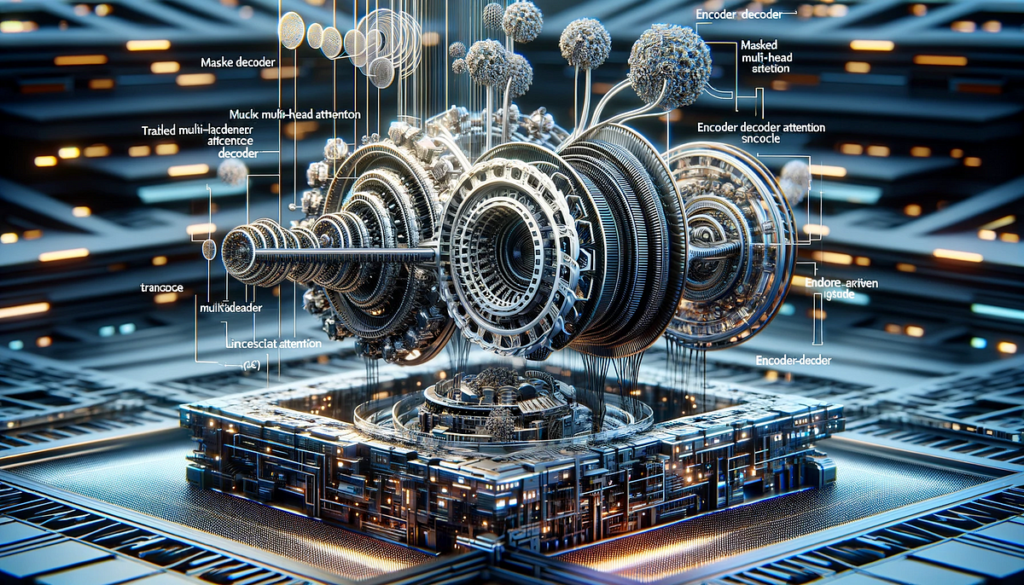

Exploring the Transformer’s Decoder Architecture: Masked Multi-Head Attention, Encoder-Decoder Attention, and Practical Implementation

This post was co-authored with Rafael Nardi.

In this article, we delve into the decoder component of the transformer architecture, focusing on its differences from and similarities with the encoder. The decoder’s distinctive feature is its loop-like, iterative nature, which contrasts with the encoder’s linear processing. Central to the decoder are two modified forms of the attention mechanism: masked multi-head attention and encoder-decoder multi-head attention.
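The loop-like behaviour of the decoder can be illustrated with a greedy decoding loop: the decoder produces one token, appends it to its own input, and runs again until it emits an end-of-sequence token. This is a minimal sketch, assuming greedy (argmax) token selection. The vocabulary size, the `BOS`/`EOS` ids, and `toy_next_token_logits` are hypothetical stand-ins for a real decoder forward pass, not the authors' code.

```python
import numpy as np

# Hypothetical toy vocabulary: id 0 marks the start, id 1 marks the end.
BOS, EOS = 0, 1
VOCAB_SIZE = 6


def toy_next_token_logits(encoder_output, generated):
    """Hypothetical stand-in for a full decoder forward pass.

    A real decoder would attend to `generated` (masked self-attention) and to
    `encoder_output` (encoder-decoder attention). This toy version simply
    favours token id len(generated) + 1, and EOS once the vocabulary runs out.
    """
    logits = np.full(VOCAB_SIZE, -1.0)
    candidate = len(generated) + 1
    logits[candidate if candidate < VOCAB_SIZE else EOS] = 1.0
    return logits


def greedy_decode(encoder_output, next_token_logits, max_len=10):
    """Iteratively extend the output, feeding each prediction back as input."""
    generated = [BOS]
    for _ in range(max_len):
        logits = next_token_logits(encoder_output, generated)
        next_id = int(np.argmax(logits))  # greedy choice: most likely token
        generated.append(next_id)
        if next_id == EOS:  # stop as soon as the end marker is produced
            break
    return generated


encoder_output = np.zeros((3, 4))  # placeholder for the encoder's output
print(greedy_decode(encoder_output, toy_next_token_logits))
# → [0, 2, 3, 4, 5, 1]
```

Each iteration depends on everything generated so far, which is why the decoder cannot process the whole output in a single pass at inference time, unlike the encoder with its input.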

The masked multi-head attention in the decoder enforces sequential processing of tokens: it prevents each generated token from being influenced by the tokens that come after it. This masking is crucial for maintaining the order and coherence of the generated output. The encoder-decoder attention is where the decoder’s output (from the masked attention) interacts with the encoder’s output. This step brings the context of the input sequence into the decoder’s computation.
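Both attention variants can be sketched with plain scaled dot-product attention in NumPy. This is a minimal, single-head sketch under stated assumptions: the projection matrices are random rather than learned, the dimensions are arbitrary, and the residual connections, layer normalization, and multi-head splitting of a full decoder layer are omitted. The names `attention`, `causal_mask`, and the `W_*` matrices are illustrative, not the authors' implementation. The masked step uses a lower-triangular mask so that position *i* can only attend to positions up to *i*; the encoder-decoder step takes its queries from the decoder and its keys and values from the encoder.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(Q, K, V, mask=None):
    """Scaled dot-product attention; positions where mask is False are blocked."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # blocked scores get ~zero weight
    weights = softmax(scores, axis=-1)
    return weights @ V, weights


def causal_mask(n):
    # Lower-triangular boolean matrix: token i may attend to tokens 0..i only.
    return np.tril(np.ones((n, n), dtype=bool))


rng = np.random.default_rng(0)
d_model = 4
decoder_x = rng.normal(size=(3, d_model))    # 3 target tokens generated so far
encoder_out = rng.normal(size=(5, d_model))  # 5 source tokens from the encoder

# Hypothetical projections (random here, learned in a real model); the two
# attention blocks use separate weights.
W_q1, W_k1, W_v1, W_q2, W_k2, W_v2 = (rng.normal(size=(d_model, d_model)) for _ in range(6))

# 1) Masked self-attention: queries, keys, and values all come from the decoder.
masked_out, masked_w = attention(
    decoder_x @ W_q1, decoder_x @ W_k1, decoder_x @ W_v1, mask=causal_mask(3)
)

# 2) Encoder-decoder attention: queries from the decoder, keys/values from the encoder.
cross_out, cross_w = attention(masked_out @ W_q2, encoder_out @ W_k2, encoder_out @ W_v2)

print(np.round(masked_w, 3))  # upper triangle is zero: no peeking at later tokens
print(cross_w.shape)          # (3, 5): each target token weighs all 5 source tokens
```

Note the asymmetry in the shapes: the masked weights are square (target by target), while the encoder-decoder weights are rectangular (target by source), which is how the input sentence's context enters the decoder.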

We will also demonstrate how these concepts are implemented using Python and NumPy. We have created a simple example of translating a sentence from English to Portuguese. This practical approach will help illustrate the inner workings of the decoder in a transformer model and provide a clearer understanding of its role in Large Language Models (LLMs).

As always, the code is available on our GitHub.

Having described the inner workings of the encoder in the transformer architecture in our previous article, we now turn to the next part: the decoder. When comparing the two parts of the transformer, we believe it is instructive to emphasize their main similarities and differences. The attention mechanism is at the core of both. In the decoder, it appears in two places, and both have important modifications compared with the simpler version present in the encoder: masked multi-head attention and encoder-decoder multi-head attention. Regarding the differences, we point out the…