Meet gigaGPT: Cerebras’ Implementation of Andrej Karpathy’s nanoGPT that Trains GPT-3-Sized AI Models in Just 565 Lines of Code

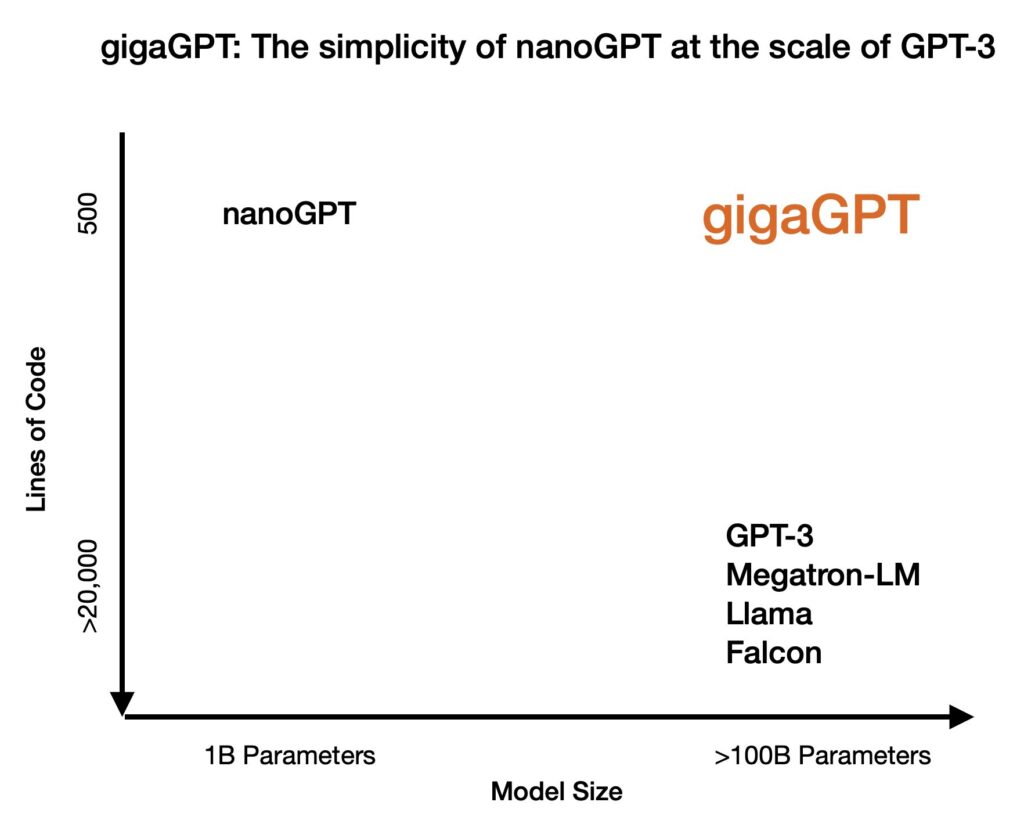

Training large transformer models poses significant challenges, especially for models with billions or even trillions of parameters. The main hurdle is distributing the workload efficiently across many GPUs while working around each GPU's limited memory. The current landscape relies on complex Large Language Model (LLM) scaling frameworks such as Megatron, DeepSpeed, NeoX, Fairscale, and Mosaic Foundry. However, these frameworks add considerable complexity as model sizes grow. Cerebras’ gigaGPT offers an alternative approach that removes the need for intricate parallelization techniques.

For training large transformer models, the prevailing methods, exemplified by frameworks like Megatron and DeepSpeed, rely on distributed computing across multiple GPUs. However, once models grow beyond a few billion parameters, they no longer fit in a single GPU's memory, and these methods have to split the model across devices with intricate parallelization schemes. gigaGPT takes a different path. It is an implementation of nanoGPT with a remarkably compact codebase of only 565 lines, and it can train models with well over 100 billion parameters without additional code or third-party frameworks. It achieves this by relying on the large memory and compute capacity of Cerebras hardware. Unlike its counterparts, it adds no extra parallelization machinery, offering the best of both worlds: a concise, hackable codebase and the ability to train GPT-3-sized models.
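To see why models beyond a few billion parameters outgrow a single GPU, a rough estimate of the training state helps. The sketch below assumes mixed-precision training with the Adam optimizer, which needs about 16 bytes per parameter (fp16 weights and gradients plus fp32 master weights and two Adam moments), and a hypothetical 80 GB accelerator. It deliberately ignores activations and temporary buffers, so real requirements are higher.

```python
# Rough training-state memory for mixed-precision Adam (assumption: 16 bytes/param,
# activations and buffers ignored, so this is a lower bound).
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}

def training_state_bytes(n_params: int) -> int:
    """Bytes for weights, gradients, and optimizer state (no activations)."""
    return n_params * sum(BYTES_PER_PARAM.values())

def min_devices(n_params: int, device_mem_gb: float) -> int:
    """Lower bound on devices needed just to hold the training state."""
    total = training_state_bytes(n_params)
    per_device = int(device_mem_gb * 1e9)
    return -(-total // per_device)  # ceiling division on integers

for n in (1_000_000_000, 13_000_000_000, 175_000_000_000):
    tb = training_state_bytes(n) / 1e12
    print(f"{n / 1e9:>6.1f}B params: {tb:6.2f} TB, >= {min_devices(n, 80)} x 80 GB devices")
```

For a 175B-parameter model this comes to 2.8 TB of state, so at least 35 devices of 80 GB each before a single activation is stored, which is why GPU-based frameworks must shard the model.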

At its core, gigaGPT implements the basic GPT-2 architecture and closely follows nanoGPT’s design. It uses learned position embeddings, standard attention, and biases throughout the model, along with other choices that mirror nanoGPT’s structure. Notably, the implementation is not tied to a particular model size: gigaGPT demonstrates its versatility by training models with 111M, 13B, 70B, and 175B parameters.
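One way to make the GPT-2 architecture concrete is to count its parameters. The sketch below assumes a nanoGPT-style block (fused QKV attention projection, a 4x MLP, LayerNorms with biases) and an output head whose weights are tied to the token embedding, as in nanoGPT; the `GPT2Shape` class and `count_params` function are hypothetical names, not taken from the gigaGPT source.

```python
from dataclasses import dataclass

@dataclass
class GPT2Shape:
    # Illustrative hyperparameters, loosely named after nanoGPT's config fields.
    n_layer: int
    n_embd: int
    block_size: int          # context length = number of learned position embeddings
    vocab_size: int = 50257  # GPT-2 BPE vocabulary size

def count_params(cfg: GPT2Shape) -> int:
    d = cfg.n_embd
    # Token + learned position embeddings; the LM head is assumed tied to the token embedding.
    embeddings = cfg.vocab_size * d + cfg.block_size * d
    attn = (d * 3 * d + 3 * d) + (d * d + d)     # fused QKV projection + output projection, with biases
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)  # expand to 4d, project back to d, with biases
    layer_norms = 2 * (2 * d)                    # two LayerNorms per block, weight + bias each
    per_block = attn + mlp + layer_norms         # = 12*d^2 + 13*d
    final_ln = 2 * d
    return embeddings + cfg.n_layer * per_block + final_ln

gpt2_small = GPT2Shape(n_layer=12, n_embd=768, block_size=1024)
print(count_params(gpt2_small))  # 124439808
```

Plugging in the GPT-3 175B shape published in the GPT-3 paper (96 layers, model width 12288, context 2048) gives about 174.6B parameters, showing that the same block definition covers both ends of the size range; only the configuration numbers change.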

The OpenWebText dataset, together with the GPT-2 tokenizer and the preprocessing code from nanoGPT, serves as the testing ground. GigaGPT’s key strength is that the same code scales from models with millions of parameters to models with hundreds of billions without any specialized parallelization techniques. The 565 lines of code cover the entire repository, underscoring its simplicity.
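nanoGPT’s OpenWebText preparation tokenizes text with the GPT-2 BPE and stores the ids in `train.bin`/`val.bin` as 16-bit unsigned integers, and its training loop samples random fixed-length windows from those files, with targets shifted by one token. The sketch below mimics that layout using only the Python standard library (nanoGPT itself uses NumPy memmaps and PyTorch tensors). The function names are hypothetical, the ids are stand-ins rather than real tokenized text, and whether gigaGPT’s loader works exactly this way is an assumption.

```python
import array
import os
import random
import tempfile

def write_tokens(path: str, token_ids: list[int]) -> None:
    """Store token ids as unsigned 16-bit integers (every GPT-2 id fits in 16 bits)."""
    with open(path, "wb") as f:
        array.array("H", token_ids).tofile(f)  # native byte order, 2 bytes per id on common platforms

def read_tokens(path: str) -> array.array:
    """Load the whole token file back into a flat array of ids."""
    data = array.array("H")
    with open(path, "rb") as f:
        data.frombytes(f.read())
    return data

def get_batch(data, batch_size: int, block_size: int, rng: random.Random):
    """Sample random windows; each target row is its input row shifted by one token."""
    xs, ys = [], []
    for _ in range(batch_size):
        i = rng.randrange(len(data) - block_size)  # leaves room for the shifted target
        xs.append(list(data[i : i + block_size]))
        ys.append(list(data[i + 1 : i + 1 + block_size]))
    return xs, ys

# Demo with stand-in ids 0..99 instead of real tokenized OpenWebText.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "train.bin")
    write_tokens(path, list(range(100)))
    tokens = read_tokens(path)
    x, y = get_batch(tokens, batch_size=4, block_size=8, rng=random.Random(0))
    assert all(row_y[:-1] == row_x[1:] for row_x, row_y in zip(x, y))
```

Storing ids as 16-bit integers halves the file size compared with 32-bit storage, which matters at OpenWebText scale, and random window sampling keeps the loader trivial regardless of model size.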

Specific model configurations illustrate the implementation further. The 111M configuration matches Cerebras-GPT, using the same model dimensions, learning rate, batch size, and training schedule. Similarly, the 13B configuration closely matches the Cerebras-GPT configuration of that size, and the 70B configuration is modeled on Llama-2 70B. The 70B model remains stable during training, showcasing gigaGPT’s scalability. After validating the 70B model, the researchers pushed further and configured a 175B model based on the GPT-3 paper. Its initial training steps show that the model handles the increased scale without memory issues, suggesting that gigaGPT could scale to models exceeding 1 trillion parameters.
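Matching a reference configuration means reproducing its hyperparameters, including how the learning rate changes over training. As an illustration only, the sketch below implements a linear-warmup-then-cosine-decay schedule of the kind nanoGPT uses; it is an assumption, not the schedule that Cerebras-GPT or gigaGPT actually specify for any given size, and the function name and example values are hypothetical.

```python
import math

def lr_at(step: int, max_lr: float, min_lr: float, warmup_steps: int, decay_steps: int) -> float:
    """Linear warmup to max_lr, cosine decay to min_lr, then hold at min_lr."""
    if decay_steps <= warmup_steps:
        raise ValueError("decay_steps must be larger than warmup_steps")
    if step < warmup_steps:
        return max_lr * (step + 1) / warmup_steps   # ramps up to max_lr at the last warmup step
    if step >= decay_steps:
        return min_lr                                # floor after the decay phase
    progress = (step - warmup_steps) / (decay_steps - warmup_steps)  # in [0, 1)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))              # goes from 1 down to 0
    return min_lr + cosine * (max_lr - min_lr)

# Illustrative values only.
for s in (0, 1_999, 2_000, 301_000, 600_000):
    print(s, lr_at(s, max_lr=6e-4, min_lr=6e-5, warmup_steps=2_000, decay_steps=600_000))
```

Keeping the schedule as a pure function of the step count means a configuration can be reproduced exactly by copying four numbers, which fits gigaGPT’s goal of swapping model sizes by changing configuration rather than code.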

In conclusion, gigaGPT offers a notably simple answer to the challenges of training large transformer models. The Cerebras team’s implementation not only simplifies the process with a concise, hackable codebase but also makes it possible to train GPT-3-sized models. By relying on the large memory and compute capacity of Cerebras hardware, it makes large-scale model training more accessible and removes much of the engineering overhead of distributed training. This approach offers a promising avenue for machine learning researchers and practitioners seeking to tackle the complexities of training massive language models.

Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his B.Tech in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for Machine Learning and enjoys exploring the latest advancements in technologies and their practical applications. With a keen interest in artificial intelligence and its diverse applications, Madhur is determined to contribute to the field of Data Science and leverage its potential impact across various industries.