Create a web UI to interact with LLMs using Amazon SageMaker JumpStart

The launch of ChatGPT and the rise in popularity of generative AI have captured the imagination of customers who are curious about how they can use this technology to create new products and services on AWS, such as more conversational enterprise chatbots. This post shows you how to create a web UI, which we call Chat Studio, to start a conversation and interact with foundation models available in Amazon SageMaker JumpStart, such as Llama 2, Stable Diffusion, and other models available on Amazon SageMaker. After you deploy this solution, users can get started quickly and experience the capabilities of multiple foundation models in conversational AI through a web interface.

Chat Studio can also optionally invoke the Stable Diffusion model endpoint to return a collage of relevant images and videos if the user requests media to be displayed. This feature can enhance the user experience by using media as accompanying assets to the response. This is just one example of how you can enrich Chat Studio with additional integrations to meet your goals.

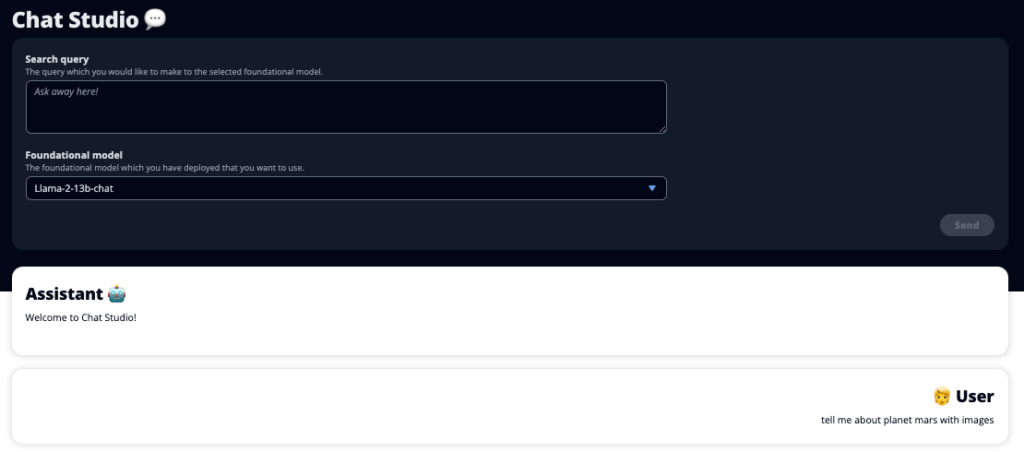

The following screenshots show examples of what a user query and response look like.

Large language models

Generative AI chatbots such as ChatGPT are powered by large language models (LLMs), which are based on a deep learning neural network that can be trained on large quantities of unlabeled text. The use of LLMs allows for a better conversational experience that closely resembles interactions with real humans, fostering a sense of connection and improved user satisfaction.

SageMaker foundation models

In 2021, the Stanford Institute for Human-Centered Artificial Intelligence termed some LLMs as foundation models. Foundation models are pre-trained on a large and broad set of general data and are meant to serve as the foundation for further optimizations in a wide range of use cases, from generating digital art to multilingual text classification. These foundation models are popular with customers because training a new model from scratch takes time and can be expensive. SageMaker JumpStart provides access to hundreds of foundation models maintained by third-party open source and proprietary providers.

Solution overview

This post walks through a low-code workflow for deploying pre-trained and custom LLMs through SageMaker, and creating a web UI to interface with the deployed models. We cover the following steps:

- Deploy SageMaker foundation models.

- Deploy AWS Lambda and AWS Identity and Access Management (IAM) permissions using AWS CloudFormation.

- Set up and run the user interface.

- Optionally, add other SageMaker foundation models. This step extends Chat Studio’s capability to interact with additional foundation models.

- Optionally, deploy the application using AWS Amplify. This step deploys Chat Studio to the web.

Refer to the following diagram for an overview of the solution architecture.

Prerequisites

To walk through the solution, you must have the following prerequisites:

- An AWS account with sufficient IAM user privileges.

- npm installed in your local environment. For instructions on how to install npm, refer to Downloading and installing Node.js and npm.

- A service quota of 1 for the corresponding SageMaker endpoints. For Llama 2 13b Chat, we use an ml.g5.48xlarge instance and for Stable Diffusion 2.1, we use an ml.p3.2xlarge instance.

To request a service quota increase, on the AWS Service Quotas console, navigate to AWS services, SageMaker, and request a service quota raise to a value of 1 for ml.g5.48xlarge for endpoint usage and ml.p3.2xlarge for endpoint usage.

The service quota request may take a few hours to be approved, depending on the instance type availability.
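To see where your account currently stands before filing a request, a sketch along the following lines may help. It assumes boto3 is installed and AWS credentials are configured. Both helper names are hypothetical, and matching quotas by the label used in this post ("ml.g5.48xlarge for endpoint usage") is an assumption about how the Service Quotas API names them in your Region.

```python
def find_endpoint_quotas(quotas, instance_types):
    """Return {quota name: value} for the endpoint-usage quotas of the given instance types.

    `quotas` is a list of quota dicts as returned by the Service Quotas API.
    The "<instance type> for endpoint usage" label is an assumption taken from this post.
    """
    wanted = {f"{itype} for endpoint usage" for itype in instance_types}
    return {q["QuotaName"]: q["Value"] for q in quotas if q["QuotaName"] in wanted}


def fetch_sagemaker_quotas():
    """List every SageMaker quota visible to the current credentials."""
    import boto3  # imported lazily so the pure helper above works without AWS access

    client = boto3.client("service-quotas")
    paginator = client.get_paginator("list_service_quotas")
    quotas = []
    for page in paginator.paginate(ServiceCode="sagemaker"):
        quotas.extend(page["Quotas"])
    return quotas
```

Calling `find_endpoint_quotas(fetch_sagemaker_quotas(), ["ml.g5.48xlarge", "ml.p3.2xlarge"])` should return the current values for both instance types; a value below 1 means a quota increase is still needed.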

Deploy SageMaker foundation models

SageMaker is a fully managed machine learning (ML) service for developers to quickly build and train ML models with ease. Complete the following steps to deploy the Llama 2 13b Chat and Stable Diffusion 2.1 foundation models using Amazon SageMaker Studio:

- Create a SageMaker domain. For instructions, refer to Onboard to Amazon SageMaker Domain using Quick setup.

A domain sets up all the storage and allows you to add users to access SageMaker.

- On the SageMaker console, choose Studio in the navigation pane, then choose Open Studio.

- Upon launching Studio, under SageMaker JumpStart in the navigation pane, choose Models, notebooks, solutions.

- In the search bar, search for Llama 2 13b Chat.

- Under Deployment Configuration, for SageMaker hosting instance, choose ml.g5.48xlarge and for Endpoint name, enter meta-textgeneration-llama-2-13b-f.

- Choose Deploy.

After the deployment succeeds, you should be able to see the In Service status.

- On the Models, notebooks, solutions page, search for Stable Diffusion 2.1.

- Under Deployment Configuration, for SageMaker hosting instance, choose ml.p3.2xlarge and for Endpoint name, enter jumpstart-dft-stable-diffusion-v2-1-base.

- Choose Deploy.

After the deployment succeeds, you should be able to see the In Service status.
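If you prefer to confirm the status from a script rather than the Studio UI, the following sketch polls both endpoints by the names entered above. It relies on the boto3 SageMaker `describe_endpoint` call, whose `EndpointStatus` field reads `InService` once an endpoint is ready. The function names are hypothetical, and the client is passed in so that you can supply your own session or Region.

```python
# Endpoint names as entered in the deployment steps above.
ENDPOINT_NAMES = [
    "meta-textgeneration-llama-2-13b-f",
    "jumpstart-dft-stable-diffusion-v2-1-base",
]


def endpoint_statuses(sagemaker_client, endpoint_names):
    """Return {endpoint name: status string} using describe_endpoint."""
    return {
        name: sagemaker_client.describe_endpoint(EndpointName=name)["EndpointStatus"]
        for name in endpoint_names
    }


def all_in_service(statuses):
    """True only when every endpoint reports InService."""
    return all(status == "InService" for status in statuses.values())
```

For example, `all_in_service(endpoint_statuses(boto3.client("sagemaker"), ENDPOINT_NAMES))` returns `True` only after both deployments have finished.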

Deploy Lambda and IAM permissions using AWS CloudFormation

This section describes how to launch a CloudFormation stack that deploys a Lambda function, which processes your user request and calls the SageMaker endpoint that you deployed, along with all the necessary IAM permissions. Complete the following steps:

- Navigate to the GitHub repository and download the CloudFormation template (lambda.cfn.yaml) to your local machine.

- On the CloudFormation console, choose the Create stack drop-down menu and choose With new resources (standard).

- On the Specify template page, select Upload a template file and Choose file.

- Choose the lambda.cfn.yaml file that you downloaded, then choose Next.

- On the Specify stack details page, enter a stack name and the API key that you obtained in the prerequisites, then choose Next.

- On the Configure stack options page, choose Next.

- Review and acknowledge the changes and choose Submit.
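To give a feel for what the deployed Lambda function does when it calls the Llama 2 endpoint, here is a minimal sketch. It is not the code shipped in the CloudFormation template. The request and response shapes are assumptions based on the JumpStart example notebook for Llama 2 chat models, the generation parameters are illustrative, and all function names are hypothetical. The `invoke_endpoint` call and its `CustomAttributes` parameter belong to the boto3 SageMaker Runtime client.

```python
import json


def build_llama2_chat_payload(user_message, max_new_tokens=256):
    """Build a single-turn chat request (shape assumed from the JumpStart notebook)."""
    return {
        "inputs": [[{"role": "user", "content": user_message}]],
        "parameters": {"max_new_tokens": max_new_tokens, "top_p": 0.9, "temperature": 0.6},
    }


def parse_llama2_chat_response(raw_body):
    """Extract the assistant text from the assumed response shape."""
    data = json.loads(raw_body)
    return data[0]["generation"]["content"]


def ask_llama2(runtime_client, endpoint_name, user_message):
    """Send one message to the endpoint and return the model's reply as text."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        CustomAttributes="accept_eula=true",  # Llama 2 endpoints expect EULA acceptance
        Body=json.dumps(build_llama2_chat_payload(user_message)),
    )
    return parse_llama2_chat_response(response["Body"].read())
```

With a real client, this would be called as `ask_llama2(boto3.client("sagemaker-runtime"), "meta-textgeneration-llama-2-13b-f", "Hello")`. Keeping payload construction and response parsing in separate functions makes it easier to swap in a different model format later.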

Set up the web UI

This section describes the steps to run the web UI (created using Cloudscape Design System) on your local machine:

- On the IAM console, navigate to the user functionUrl.

- On the Security Credentials tab, choose Create access key.

- On the Access key best practices & alternatives page, select Command Line Interface (CLI) and choose Next.

- On the Set description tag page, choose Create access key.

- Copy the access key and secret access key.

- Choose Done.

- Navigate to the GitHub repository and download the react-llm-chat-studio code.

- Launch the folder in your preferred IDE and open a terminal.

- Navigate to src/configs/aws.json and enter the access key and secret access key you obtained.

- Enter the following commands in the terminal:

- Open http://localhost:3000 in your browser and start interacting with your models!

To use Chat Studio, choose a foundation model on the drop-down menu and enter your query in the text box. To get AI-generated images along with the response, add the phrase “with images” to the end of your query.
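The post does not show how Chat Studio detects the trigger phrase, so the following is only an illustrative sketch of one way such a check could work. The function name and the decision to ignore case and trailing punctuation are assumptions.

```python
IMAGE_TRIGGER = "with images"


def split_image_request(query):
    """Return (text_query, wants_images).

    The trigger phrase is matched case-insensitively at the end of the query,
    ignoring trailing punctuation, and is removed from the text sent to the LLM.
    """
    stripped = query.strip()
    trimmed = stripped.rstrip(".!? ")
    if trimmed.lower().endswith(IMAGE_TRIGGER):
        text = trimmed[: -len(IMAGE_TRIGGER)].rstrip(" ,")
        return text, True
    return stripped, False
```

A caller would send the first element to the text model and, when the second element is `True`, also invoke the Stable Diffusion endpoint with the same text.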

Add other SageMaker foundation models

You can further extend the capability of this solution to include additional SageMaker foundation models. Because every model expects different input and output formats when invoking its SageMaker endpoint, you will need to write some transformation code in the callSageMakerEndpoints Lambda function to interface with the model.

This section describes the general steps and code changes required to implement an additional model of your choice. Note that basic knowledge of the Python language is required for Steps 6–8.

- In SageMaker Studio, deploy the SageMaker foundation model of your choice.

- Choose SageMaker JumpStart and Launch JumpStart assets.

- Choose your newly deployed model endpoint and choose Open Notebook.

- On the notebook console, find the payload parameters.

These are the fields that the new model expects when invoking its SageMaker endpoint. The following screenshot shows an example.

- On the Lambda console, navigate to callSageMakerEndpoints.

- Add a custom input handler for your new model.

In the following screenshot, we transformed the input for Falcon 40B Instruct BF16 and GPT NeoXT Chat Base 20B FP16. You can insert your custom parameter logic as indicated to add the input transformation logic with reference to the payload parameters that you copied.

- Return to the notebook console and locate query_endpoint.

This function gives you an idea of how to transform the output of the models to extract the final text response.

- With reference to the code in query_endpoint, add a custom output handler for your new model.

- Choose Deploy.

- Open your IDE, launch the react-llm-chat-studio code, and navigate to src/configs/models.json.

- Add your model name and model endpoint, and enter the payload parameters from Step 4 under payload using the following format:

- Refresh your browser to start interacting with your new model!
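The custom input and output handlers from the Lambda steps above are only shown as screenshots, so here is a rough sketch of what such a pair might look like for a Hugging Face text-generation model such as Falcon. The registry key, the function names, and the dispatch structure are all hypothetical and are not the layout of the actual callSageMakerEndpoints function. The request shape (`inputs` plus `parameters`) and the response shape (a list containing `generated_text`) follow the standard Hugging Face text-generation container format; confirm both against your model's notebook.

```python
import json


def falcon_input_handler(prompt, payload):
    """Wrap the prompt and payload parameters in the request body the model expects."""
    return {"inputs": prompt, "parameters": dict(payload)}


def falcon_output_handler(raw_body):
    """Extract the final text response from the endpoint's raw JSON body."""
    return json.loads(raw_body)[0]["generated_text"]


# Hypothetical registry mapping a model name to its (input, output) handlers.
HANDLERS = {
    "falcon-40b-instruct-bf16": (falcon_input_handler, falcon_output_handler),
}


def transform_input(model_name, prompt, payload):
    """Serialize the request body for the given model."""
    input_handler, _ = HANDLERS[model_name]
    return json.dumps(input_handler(prompt, payload))


def transform_output(model_name, raw_body):
    """Turn the endpoint response for the given model into plain text."""
    _, output_handler = HANDLERS[model_name]
    return output_handler(raw_body)
```

Adding another model then amounts to writing one more handler pair and registering it, which leaves the code that invokes the endpoint unchanged.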

Deploy the application using Amplify

Amplify is a complete solution that allows you to quickly and efficiently deploy your application. This section describes the steps to deploy Chat Studio to an Amazon CloudFront distribution using Amplify if you wish to share your application with other users.

- Navigate to the react-llm-chat-studio code folder you created earlier.

- Enter the following commands in the terminal and follow the setup instructions:

- Initialize a new Amplify project by using the following command. Provide a project name, accept the default configurations, and choose AWS access keys when prompted to select the authentication method.

- Host the Amplify project by using the following command. Choose Amazon CloudFront and S3 when prompted to select the plugin mode.

- Finally, build and deploy the project with the following command:

- After the deployment succeeds, open the URL provided in your browser and start interacting with your models!

Clean up

To avoid incurring future charges, complete the following steps:

- Delete the CloudFormation stack. For instructions, refer to Deleting a stack on the AWS CloudFormation console.

- Delete the SageMaker JumpStart endpoint. For instructions, refer to Delete Endpoints and Resources.

- Delete the SageMaker domain. For instructions, refer to Delete an Amazon SageMaker Domain.
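The first two cleanup steps can also be scripted. The sketch below uses the boto3 `delete_stack` and `delete_endpoint` calls; the function name is hypothetical, and the stack name is whatever you entered when creating the stack. It does not remove the SageMaker domain, and endpoint configurations and models are separate resources that `delete_endpoint` leaves in place.

```python
def clean_up(cloudformation_client, sagemaker_client, stack_name, endpoint_names):
    """Delete the CloudFormation stack and the listed SageMaker endpoints.

    Returns the endpoint names for which deletion was requested.
    """
    cloudformation_client.delete_stack(StackName=stack_name)
    deleted = []
    for name in endpoint_names:
        sagemaker_client.delete_endpoint(EndpointName=name)
        deleted.append(name)
    return deleted
```

For example, pass `boto3.client("cloudformation")` and `boto3.client("sagemaker")` together with your stack name and the two endpoint names used in this post.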

Conclusion

In this post, we explained how to create a web UI for interfacing with LLMs deployed on AWS.

With this solution, you can interact with your LLM and hold a conversation in a user-friendly manner to test or ask the LLM questions, and get a collage of images and videos if required.

You can extend this solution in various ways, such as integrating additional foundation models, integrating with Amazon Kendra to enable ML-powered intelligent search for understanding enterprise content, and more!

We invite you to experiment with different pre-trained LLMs available on AWS, or build on top of or even create your own LLMs in SageMaker. Let us know your questions and findings in the comments, and have fun!

About the authors

Jarrett Yeo Shan Wei is an Associate Cloud Architect in AWS Professional Services covering the Public Sector across ASEAN and is an advocate for helping customers modernize and migrate into the cloud. He has attained five AWS certifications, and has also published a research paper on gradient boosting machine ensembles in the 8th International Conference on AI. In his free time, Jarrett focuses on and contributes to the generative AI scene at AWS.


Tammy Lim Lee Xin is an Associate Cloud Architect at AWS. She uses technology to help customers deliver their desired outcomes in their cloud adoption journey and is passionate about AI/ML. Outside of work she loves travelling, hiking, and spending time with family and friends.
