Reduce model deployment costs by 50% on average using the latest features of Amazon SageMaker

As organizations deploy models to production, they are constantly looking for ways to optimize the performance of their foundation models (FMs) running on the latest accelerators, such as AWS Inferentia and GPUs, so they can reduce their costs and decrease response latency to provide the best experience to end-users. However, some FMs don’t fully utilize the accelerators available with the instances they’re deployed on, leading to an inefficient use of hardware resources. Some organizations deploy multiple FMs to the same instance to better utilize all of the available accelerators, but this requires complex infrastructure orchestration that is time consuming and difficult to manage. When multiple FMs share the same instance, each FM has its own scaling needs and usage patterns, making it challenging to predict when you need to add or remove instances. For example, one model may be used to power a user application where usage can spike during certain hours, whereas another model may have a more consistent usage pattern.

In addition to optimizing costs, customers want to provide the best end-user experience by reducing latency. To do this, they often deploy multiple copies of an FM to field requests from users in parallel. Because FM outputs can range from a single sentence to multiple paragraphs, the time it takes to complete the inference request varies significantly, leading to unpredictable spikes in latency if the requests are routed randomly between instances.

Amazon SageMaker now supports new inference capabilities that help you reduce deployment costs and latency.

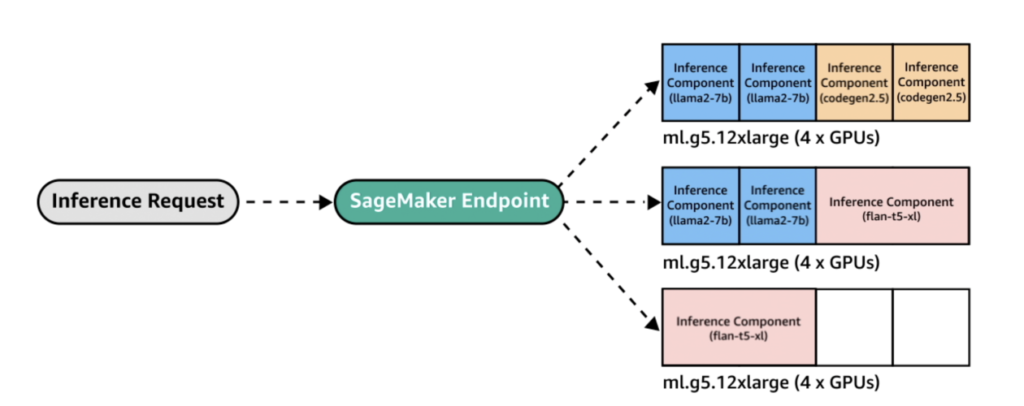

You can now create inference component-based endpoints and deploy machine learning (ML) models to a SageMaker endpoint. An inference component (IC) abstracts your ML model and enables you to assign CPUs, GPUs, or AWS Neuron accelerators, and scaling policies per model. Inference components offer the following benefits:

- SageMaker will optimally place and pack models onto ML instances to maximize utilization, leading to cost savings.

- SageMaker will scale each model up and down based on your configuration to meet your ML application requirements.

- SageMaker will add and remove instances dynamically to ensure capacity is available while keeping idle compute to a minimum.

- You can scale down to zero copies of a model to free up resources for other models. You can also specify that important models stay loaded and ready to serve traffic at all times.

With these capabilities, you can reduce model deployment costs by 50% on average. The cost savings will vary depending on your workload and traffic patterns. Let’s take a simple example to illustrate how packing multiple models on a single endpoint can maximize utilization and save costs. Let’s say you have a chat application that helps tourists understand local customs and best practices, built using two variants of Llama 2: one fine-tuned for European visitors and the other fine-tuned for American visitors. We expect traffic for the European model between 00:01–11:59 UTC and the American model between 12:00–23:59 UTC. Instead of deploying these models on their own dedicated instances where they will sit idle half the time, you can now deploy them on a single endpoint to save costs. You can scale down the American model to zero when it isn’t needed to free up capacity for the European model and vice versa. This allows you to utilize your hardware efficiently and avoid waste. This is a simple example using two models, but you can easily extend this idea to pack hundreds of models onto a single endpoint that automatically scales up and down with your workload.
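To make the arithmetic in this example concrete, the following sketch compares instance-hours per day for the two layouts. It rests on assumptions that are ours, not measured results: each model fits on one instance, and a single instance can serve whichever model is active at the time. The function name is illustrative only.

```python
def instance_hours_per_day(instance_count: int, hours: float = 24.0) -> float:
    """Instance-hours billed per day for instances that stay up all day."""
    return instance_count * hours


# Dedicated layout: one always-on instance per model (European + American).
dedicated = instance_hours_per_day(2)

# Shared layout: both models packed on one instance, each scaled to zero
# copies while the other is serving traffic.
shared = instance_hours_per_day(1)

savings = 1 - shared / dedicated
print(f"dedicated={dedicated:.0f}h shared={shared:.0f}h savings={savings:.0%}")
```

Real savings depend on how much the traffic windows overlap and on whether one instance has enough accelerator memory for the active copies.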

In this post, we show you the new capabilities of IC-based SageMaker endpoints. We also walk you through deploying multiple models using inference components and APIs. Lastly, we detail some of the new observability capabilities and how to set up auto scaling policies for your models and manage instance scaling for your endpoints. You can also deploy models through our new simplified, interactive user experience. We also support advanced routing capabilities to optimize the latency and performance of your inference workloads.

Building blocks

Let’s take a deeper look and understand how these new capabilities work. The following is some new terminology for SageMaker hosting:

- Inference component – A SageMaker hosting object that you can use to deploy a model to an endpoint. You can create an inference component by supplying the following:

  - The SageMaker model or specification of a SageMaker-compatible image and model artifacts.

  - Compute resource requirements, which specify the needs of each copy of your model, including CPU cores, host memory, and number of accelerators.

- Model copy – A runtime copy of an inference component that is capable of serving requests.

- Managed instance auto scaling – A SageMaker hosting capability to scale up or down the number of compute instances used for an endpoint. Instance scaling reacts to the scaling of inference components.

To create a new inference component, you can specify a container image and a model artifact, or you can use SageMaker models that you may have already created. You also need to specify the compute resource requirements such as the number of host CPU cores, host memory, or the number of accelerators your model needs to run.

When you deploy an inference component, you can specify MinCopies to ensure that the model is already loaded in the quantity that you require, ready to serve requests.

You also have the option to set your policies so that inference component copies scale to zero. For example, if you have no load running against an IC, the model copy will be unloaded. This frees up resources that active workloads can then use, which improves the utilization and efficiency of your endpoint.

As inference requests increase or decrease, the number of copies of your ICs can also scale up or down based on your auto scaling policies. SageMaker will handle the placement to optimize the packing of your models for availability and cost.

In addition, if you enable managed instance auto scaling, SageMaker will scale compute instances according to the number of inference components that need to be loaded at a given time to serve traffic. SageMaker will scale up the instances and pack your instances and inference components to optimize for cost while preserving model performance. Although we recommend the use of managed instance scaling, you also have the option to manage the scaling yourself, should you choose to, through application auto scaling.

SageMaker will rebalance inference components and scale down the instances if they are no longer needed by inference components, which saves you costs.

Walkthrough of APIs

SageMaker has introduced a new entity called the InferenceComponent. This decouples the details of hosting the ML model from the endpoint itself. The InferenceComponent allows you to specify key properties for hosting the model, such as the SageMaker model you want to use or the container details and model artifacts. You also specify the number of copies of the component itself to deploy, and the number of accelerators (GPUs, Inf, or Trn accelerators) or CPUs (vCPUs) required. This provides more flexibility for you to use a single endpoint for any number of models you plan to deploy to it in the future.

Let’s look at the Boto3 API calls to create an endpoint with an inference component. Note that there are some parameters that we address later in this post.

The following is example code for CreateEndpointConfig:
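A minimal sketch of such a request might look like the following. The helper name, the default variant name, and the instance type are illustrative assumptions; only the parameter keys are taken from the Boto3 SageMaker client. The request is built as a plain dictionary so that it can be inspected before it is sent. Note that the production variant carries no model name, because models are attached later as inference components.

```python
from typing import Any, Dict


def build_endpoint_config_request(
    endpoint_config_name: str,
    execution_role_arn: str,
    variant_name: str = "AllTraffic",        # assumed name, pick your own
    instance_type: str = "ml.g5.12xlarge",   # assumed instance type
    initial_instance_count: int = 1,
) -> Dict[str, Any]:
    """Build keyword arguments for create_endpoint_config (IC-based endpoint)."""
    return {
        "EndpointConfigName": endpoint_config_name,
        # For IC-based endpoints the execution role is set on the endpoint config.
        "ExecutionRoleArn": execution_role_arn,
        "ProductionVariants": [
            {
                "VariantName": variant_name,
                "InstanceType": instance_type,
                "InitialInstanceCount": initial_instance_count,
            }
        ],
    }


# Usage (requires boto3 and AWS credentials, so it is left as a comment):
#   import boto3
#   boto3.client("sagemaker").create_endpoint_config(
#       **build_endpoint_config_request("my-config", "arn:aws:iam::...:role/...")
#   )
```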

The following is example code for CreateEndpoint:
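As a sketch, the call can be wrapped in a small helper that also waits for the endpoint to come into service. The helper name and the `wait` flag are our own; `sagemaker_client` is assumed to be a `boto3.client("sagemaker")` instance passed in by the caller.

```python
def create_endpoint(sagemaker_client, endpoint_name: str,
                    endpoint_config_name: str, wait: bool = True) -> str:
    """Create an endpoint from an existing endpoint config and return its ARN."""
    response = sagemaker_client.create_endpoint(
        EndpointName=endpoint_name,
        EndpointConfigName=endpoint_config_name,
    )
    if wait:
        # Block until the endpoint reaches InService; inference components
        # can only be attached to an endpoint that is up.
        waiter = sagemaker_client.get_waiter("endpoint_in_service")
        waiter.wait(EndpointName=endpoint_name)
    return response["EndpointArn"]
```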

The following is example code for CreateInferenceComponent:
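The sketch below builds a request for this call under a few assumptions: the helper name and default values are illustrative, and the component is described either by an existing SageMaker model name or by a container image plus artifact location, mirroring the two options described earlier. The compute resource requirements apply to each copy of the model.

```python
from typing import Any, Dict, Optional


def build_inference_component_request(
    inference_component_name: str,
    endpoint_name: str,
    variant_name: str,
    model_name: Optional[str] = None,
    image_uri: Optional[str] = None,
    artifact_url: Optional[str] = None,
    accelerators: int = 1,        # accelerator devices per copy (assumed default)
    min_memory_mb: int = 1024,    # host memory per copy (assumed default)
    copy_count: int = 1,          # initial number of model copies
) -> Dict[str, Any]:
    """Build keyword arguments for create_inference_component."""
    if model_name is not None:
        spec: Dict[str, Any] = {"ModelName": model_name}
    elif image_uri is not None:
        container = {"Image": image_uri}
        if artifact_url is not None:
            container["ArtifactUrl"] = artifact_url
        spec = {"Container": container}
    else:
        raise ValueError("Provide either model_name or image_uri")

    spec["ComputeResourceRequirements"] = {
        "NumberOfAcceleratorDevicesRequired": accelerators,
        "MinMemoryRequiredInMb": min_memory_mb,
    }
    return {
        "InferenceComponentName": inference_component_name,
        "EndpointName": endpoint_name,
        "VariantName": variant_name,
        "Specification": spec,
        "RuntimeConfig": {"CopyCount": copy_count},
    }


# Usage (requires boto3 and AWS credentials):
#   boto3.client("sagemaker").create_inference_component(**request)
```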

This decoupling of InferenceComponent from an endpoint provides flexibility. You can host multiple models on the same infrastructure, adding or removing them as your requirements change. Each model can be updated independently as needed. Additionally, you can scale models according to your business needs. InferenceComponent also allows you to control capacity per model. In other words, you can determine how many copies of each model to host. This predictable scaling helps you meet the specific latency requirements for each model. Overall, InferenceComponent gives you much more control over your hosted models.

In the following table, we show a side-by-side comparison of the high-level approach to creating and invoking an endpoint without InferenceComponent and with InferenceComponent. Note that CreateModel() is now optional for IC-based endpoints.

| Step | Model-Based Endpoints | Inference Component-Based Endpoints |
| --- | --- | --- |
| 1 | CreateModel(…) | CreateEndpointConfig(…) |
| 2 | CreateEndpointConfig(…) | CreateEndpoint(…) |
| 3 | CreateEndpoint(…) | CreateInferenceComponent(…) |
| 4 | InvokeEndpoint(…) | InvokeEndpoint(InferenceComponentName='value'…) |

The introduction of InferenceComponent allows you to scale at a model level. See the section on model-level auto scaling later in this post for more details on how InferenceComponent works with auto scaling.

When invoking the SageMaker endpoint, you can now specify the new parameter InferenceComponentName to target the desired inference component. SageMaker will handle routing the request to the instance hosting the requested InferenceComponentName. See the following code:
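A hedged sketch of such an invocation follows. The helper name is ours, `runtime_client` is assumed to be a `boto3.client("sagemaker-runtime")` instance, and the sketch assumes the container accepts and returns JSON, which depends on the model container you deploy.

```python
import json


def invoke_inference_component(runtime_client, endpoint_name: str,
                               inference_component_name: str,
                               payload: dict) -> dict:
    """Send a JSON payload to one inference component on a shared endpoint."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        # Selects which model on the endpoint should serve this request.
        InferenceComponentName=inference_component_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    # The response body is a stream; read it fully and decode the JSON.
    return json.loads(response["Body"].read())
```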

By default, SageMaker uses random routing of the requests to the instances backing your endpoint. If you want to enable least outstanding requests routing, you can set the routing strategy in the endpoint config’s RoutingConfig:
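As an illustration, the helper below (a hypothetical name) returns a copy of a production variant dictionary with `RoutingConfig` set. The variant shown in the usage lines is a placeholder; the resulting dictionary would go into the `ProductionVariants` list of the endpoint configuration request.

```python
import copy

# Routing strategies accepted by the endpoint configuration.
_ALLOWED_STRATEGIES = {"LEAST_OUTSTANDING_REQUESTS", "RANDOM"}


def with_routing_strategy(production_variant: dict,
                          strategy: str = "LEAST_OUTSTANDING_REQUESTS") -> dict:
    """Return a copy of the variant with the given routing strategy applied."""
    if strategy not in _ALLOWED_STRATEGIES:
        raise ValueError(f"Unsupported routing strategy: {strategy}")
    variant = copy.deepcopy(production_variant)  # leave the caller's dict untouched
    variant["RoutingConfig"] = {"RoutingStrategy": strategy}
    return variant


base_variant = {
    "VariantName": "AllTraffic",          # placeholder values
    "InstanceType": "ml.g5.12xlarge",
    "InitialInstanceCount": 1,
}
routed_variant = with_routing_strategy(base_variant)
print(routed_variant["RoutingConfig"])
```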

Least outstanding requests routing sends each request to the instances that have more capacity to process requests. This provides more uniform load balancing and resource utilization.

In addition to CreateInferenceComponent, the following APIs are now available:

- DescribeInferenceComponent

- DeleteInferenceComponent

- UpdateInferenceComponent

- ListInferenceComponents
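The following sketch shows how these calls might be combined for two common tasks: changing the copy count of one component, and removing every component from an endpoint before deleting it. The helper names are ours, `sagemaker_client` is assumed to be a `boto3.client("sagemaker")` instance, and pagination of the list call is ignored for brevity.

```python
from typing import List


def set_copy_count(sagemaker_client, inference_component_name: str,
                   copy_count: int) -> str:
    """Update the number of copies and return the component's current status."""
    sagemaker_client.update_inference_component(
        InferenceComponentName=inference_component_name,
        RuntimeConfig={"CopyCount": copy_count},
    )
    description = sagemaker_client.describe_inference_component(
        InferenceComponentName=inference_component_name
    )
    return description["InferenceComponentStatus"]


def delete_all_components(sagemaker_client, endpoint_name: str) -> List[str]:
    """Delete every inference component on an endpoint and return their names.

    Only the first page of results is handled here; a production version
    would follow NextToken.
    """
    listing = sagemaker_client.list_inference_components(
        EndpointNameEquals=endpoint_name
    )
    names = [c["InferenceComponentName"] for c in listing["InferenceComponents"]]
    for name in names:
        sagemaker_client.delete_inference_component(InferenceComponentName=name)
    return names
```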

InferenceComponent logs and metrics

InferenceComponent logs are located in /aws/sagemaker/InferenceComponents/<InferenceComponentName>. All logs sent to stderr and stdout in the container are sent to these logs in Amazon CloudWatch.

With the introduction of IC-based endpoints, you now have the ability to view additional instance metrics, inference component metrics, and invocation metrics.

For SageMaker instances, you can now track the GPUReservation and CPUReservation metrics to see the resources reserved for an endpoint based on the inference components that you have deployed. These metrics can help you size your endpoint and auto scaling policies. You can also view the aggregate metrics associated with all models deployed to an endpoint.

SageMaker also exposes metrics at an inference component level, which give a more granular view of resource utilization for the inference components that you have deployed. For each inference component, which may have zero or many copies, you can view aggregate resource utilization metrics such as GPUUtilizationNormalized and GPUMemoryUtilizationNormalized.

Lastly, SageMaker provides invocation metrics, which now track invocations for inference components in aggregate (Invocations) or per copy instantiated (InvocationsPerCopy).

For a comprehensive list of metrics, refer to SageMaker Endpoint Invocation Metrics.

Model-level auto scaling

To implement the auto scaling behavior we described, when creating the SageMaker endpoint configuration and inference component, you define the initial instance count and initial model copy count, respectively. After you create the endpoint and corresponding ICs, to apply auto scaling at the IC level, you need to first register the scaling target and then associate the scaling policy to the IC.

When implementing the scaling policy, we use SageMakerInferenceComponentInvocationsPerCopy, which is a new metric introduced by SageMaker. It captures the average number of invocations per model copy per minute.
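The two steps can be sketched as follows. The helper names, the policy name, the target value, and the cool-down values are illustrative assumptions; `aas_client` is assumed to be a `boto3.client("application-autoscaling")` instance. `MinCapacity` and `MaxCapacity` here bound the number of model copies, not instances.

```python
from typing import Any, Dict, Tuple


def build_ic_scaling_requests(
    inference_component_name: str,
    min_copies: int = 1,
    max_copies: int = 4,
    target_invocations_per_copy: float = 5.0,  # assumed target, tune per model
) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Build the scalable-target and scaling-policy requests for one IC."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"inference-component/{inference_component_name}",
        "ScalableDimension": "sagemaker:inference-component:DesiredCopyCount",
    }
    target = dict(common, MinCapacity=min_copies, MaxCapacity=max_copies)
    policy = dict(
        common,
        PolicyName=f"{inference_component_name}-invocations-per-copy",
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerInferenceComponentInvocationsPerCopy",
            },
            "TargetValue": target_invocations_per_copy,
            "ScaleInCooldown": 300,   # seconds, assumed value
            "ScaleOutCooldown": 300,  # seconds, assumed value
        },
    )
    return target, policy


def apply_ic_scaling(aas_client, target: Dict[str, Any], policy: Dict[str, Any]) -> None:
    """Register the scalable target first, then attach the policy to it."""
    aas_client.register_scalable_target(**target)
    aas_client.put_scaling_policy(**policy)
```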

After you set the scaling policy, SageMaker creates two CloudWatch alarms for each auto scaling target: one to trigger scale-out if in alarm for 3 minutes (three 1-minute data points) and one to trigger scale-in if in alarm for 15 minutes (15 1-minute data points), as shown in the following screenshot. The time to trigger the scaling action is usually 1–2 minutes longer than these periods because it takes time for the endpoint to publish metrics to CloudWatch, and it also takes time for auto scaling to react. The cool-down period is the amount of time, in seconds, after a scale-in or scale-out activity completes before another scale-out activity can start. If the scale-out cool-down is shorter than the endpoint update time, then it has no effect, because it’s not possible to update a SageMaker endpoint when it is in Updating status.

Note that, when setting up IC-level auto scaling, you need to make sure that the MaxInstanceCount parameter is equal to or smaller than the maximum number of ICs this endpoint can handle. For example, if your endpoint is only configured to have one instance in the endpoint configuration and this instance can only host a maximum of four copies of the model, then the MaxInstanceCount should be equal to or smaller than 4. However, you can also use the managed auto scaling capability provided by SageMaker to automatically scale the instance count based on the required number of model copies to meet the need for more compute resources. The following code snippet demonstrates how to set up managed instance scaling during the creation of the endpoint configuration. This way, when the IC-level auto scaling requires more instances to host the model copies, SageMaker will automatically scale out the instance count to allow the IC-level scaling to succeed.
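A sketch of that setup follows, under stated assumptions: the helper names and the sample variant are ours, and `instances_needed` is only a back-of-the-envelope estimate that assumes every instance can hold the same number of copies. The returned variant would be placed in the `ProductionVariants` list of the endpoint configuration request.

```python
import copy
import math


def with_managed_instance_scaling(production_variant: dict,
                                  min_instances: int,
                                  max_instances: int) -> dict:
    """Return a copy of the variant with managed instance scaling enabled."""
    if min_instances > max_instances:
        raise ValueError("min_instances must not exceed max_instances")
    variant = copy.deepcopy(production_variant)
    variant["ManagedInstanceScaling"] = {
        "Status": "ENABLED",
        "MinInstanceCount": min_instances,
        "MaxInstanceCount": max_instances,
    }
    return variant


def instances_needed(copy_count: int, copies_per_instance: int) -> int:
    """Rough estimate of instances required to host a number of model copies."""
    return math.ceil(copy_count / copies_per_instance)


# Example from the text: one instance hosts at most four copies of the model.
# Allowing up to 10 copies would then need room for three instances.
max_instances = instances_needed(copy_count=10, copies_per_instance=4)
scaled_variant = with_managed_instance_scaling(
    {"VariantName": "AllTraffic", "InstanceType": "ml.g5.12xlarge",
     "InitialInstanceCount": 1},
    min_instances=1,
    max_instances=max_instances,
)
print(scaled_variant["ManagedInstanceScaling"])
```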

You can apply multiple auto scaling policies against the same endpoint, which means you can apply the traditional auto scaling policy to endpoints created with ICs and scale up and down based on the other endpoint metrics. For more information, refer to Optimize your machine learning deployments with auto scaling on Amazon SageMaker. However, although this is possible, we still recommend using managed instance scaling over managing the scaling yourself.

Conclusion

In this post, we introduced a new feature in SageMaker inference that will help you maximize the utilization of compute instances, scale to hundreds of models, and optimize costs, while providing predictable performance. Furthermore, we provided a walkthrough of the APIs and showed you how to configure and deploy inference components for your workloads.

We also support advanced routing capabilities to optimize the latency and performance of your inference workloads. SageMaker can help you optimize your inference workloads for cost and performance and give you model-level granularity for management. We have created a set of notebooks in GitHub that show you how to deploy three different models, using different containers and applying auto scaling policies. We encourage you to start with notebook 1 and get hands-on with the new SageMaker hosting capabilities today!

About the authors

James Park is a Solutions Architect at Amazon Web Services. He works with Amazon.com to design, build, and deploy technology solutions on AWS, and has a particular interest in AI and machine learning. In his spare time he enjoys seeking out new cultures, new experiences, and staying up to date with the latest technology trends. You can find him on LinkedIn.

Melanie Li, PhD, is a Senior AI/ML Specialist TAM at AWS based in Sydney, Australia. She helps enterprise customers build solutions using state-of-the-art AI/ML tools on AWS and provides guidance on architecting and implementing ML solutions with best practices. In her spare time, she loves to explore nature and spend time with family and friends.

Marc Karp is an ML Architect with the Amazon SageMaker Service team. He focuses on helping customers design, deploy, and manage ML workloads at scale. In his spare time, he enjoys traveling and exploring new places.

Alan Tan is a Senior Product Manager with SageMaker, leading efforts on large model inference. He’s passionate about applying machine learning to the area of analytics. Outside of work, he enjoys the outdoors.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and is motivated by the goal of democratizing machine learning. He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Raj Vippagunta is a Principal Engineer on the Amazon SageMaker Machine Learning (ML) platform team at AWS. He uses his vast experience of 18+ years in large-scale distributed systems and his passion for machine learning to build practical service offerings in the AI and ML space. He has helped build various at-scale solutions for AWS and Amazon. In his spare time, he likes reading books, long-distance running, and exploring new places with his family.

Lakshmi Ramakrishnan is a Senior Principal Engineer on the Amazon SageMaker Machine Learning (ML) platform team at AWS, providing technical leadership for the product. He has worked in several engineering roles at Amazon for over 9 years. He has a Bachelor of Engineering degree in Information Technology from National Institute of Technology, Karnataka, India and a Master of Science degree in Computer Science from the University of Minnesota Twin Cities.

David Nigenda is a Senior Software Development Engineer on the Amazon SageMaker team, currently working on improving production machine learning workflows, as well as launching new inference features. In his spare time, he tries to keep up with his kids.

Raghu Ramesha is a Senior ML Solutions Architect with the Amazon SageMaker Service team. He focuses on helping customers build, deploy, and migrate ML production workloads to SageMaker at scale. He specializes in machine learning, AI, and computer vision domains, and holds a master’s degree in Computer Science from UT Dallas. In his free time, he enjoys traveling and photography.

Rupinder Grewal is a Sr AI/ML Specialist Solutions Architect with AWS. He currently focuses on serving of models and MLOps on SageMaker. Prior to this role, he worked as a Machine Learning Engineer building and hosting models. Outside of work, he enjoys playing tennis and biking on mountain trails.

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including NLP and computer vision domains. He helps customers achieve high-performance model inference on SageMaker.