Boosting RAG-based clever doc assistants utilizing entity extraction, SQL querying, and brokers with Amazon Bedrock

Conversational AI has come a great distance in recent times due to the fast developments in generative AI, particularly the efficiency enhancements of enormous language fashions (LLMs) launched by coaching strategies reminiscent of instruction fine-tuning and reinforcement studying from human suggestions. When prompted appropriately, these fashions can carry coherent conversations with none task-specific coaching knowledge. Nevertheless, they’ll’t generalize effectively to enterprise-specific questions as a result of, to generate a solution, they depend on the general public knowledge they have been uncovered to throughout pre-training. Such knowledge usually lacks the specialised information contained in inner paperwork obtainable in trendy companies, which is usually wanted to get correct solutions in domains reminiscent of pharmaceutical analysis, monetary investigation, and buyer help.

To create AI assistants which are able to having discussions grounded in specialised enterprise information, we have to join these highly effective however generic LLMs to inner information bases of paperwork. This methodology of enriching the LLM era context with info retrieved out of your inner knowledge sources is known as Retrieval Augmented Era (RAG), and produces assistants which are area particular and extra reliable, as proven by Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. One other driver behind RAG’s recognition is its ease of implementation and the existence of mature vector search options, reminiscent of these provided by Amazon Kendra (see Amazon Kendra launches Retrieval API) and Amazon OpenSearch Service (see k-Nearest Neighbor (k-NN) search in Amazon OpenSearch Service), amongst others.

Nevertheless, the favored RAG design sample with semantic search can’t reply all varieties of questions which are attainable on paperwork. That is very true for questions that require analytical reasoning throughout a number of paperwork. For instance, think about that you’re planning subsequent 12 months’s technique of an funding firm. One important step could be to investigate and evaluate the monetary outcomes and potential dangers of candidate corporations. This job entails answering analytical reasoning questions. As an example, the question “Give me the highest 5 corporations with the very best income within the final 2 years and establish their foremost dangers” requires a number of steps of reasoning, a few of which might use semantic search retrieval, whereas others require analytical capabilities.

On this put up, we present the way to design an clever doc assistant able to answering analytical and multi-step reasoning questions in three components. In Half 1, we evaluation the RAG design sample and its limitations on analytical questions. Then we introduce you to a extra versatile structure that overcomes these limitations. Half 2 helps you dive deeper into the entity extraction pipeline used to arrange structured knowledge, which is a key ingredient for analytical query answering. Half 3 walks you thru the way to use Amazon Bedrock LLMs to question that knowledge and construct an LLM agent that enhances RAG with analytical capabilities, thereby enabling you to construct clever doc assistants that may reply advanced domain-specific questions throughout a number of paperwork.

Half 1: RAG limitations and answer overview

On this part, we evaluation the RAG design sample and focus on its limitations on analytical questions. We additionally current a extra versatile structure that overcomes these limitations.

Overview of RAG

RAG options are impressed by representation learning and semantic search concepts which have been steadily adopted in rating issues (for instance, advice and search) and pure language processing (NLP) duties since 2010.

The favored method used at present is shaped of three steps:

- An offline batch processing job ingests paperwork from an enter information base, splits them into chunks, creates an embedding for every chunk to characterize its semantics utilizing a pre-trained embedding mannequin, reminiscent of Amazon Titan embedding fashions, then makes use of these embeddings as enter to create a semantic search index.

- When answering a brand new query in actual time, the enter query is transformed to an embedding, which is used to seek for and extract probably the most comparable chunks of paperwork utilizing a similarity metric, reminiscent of cosine similarity, and an approximate nearest neighbors algorithm. The search precision will also be improved with metadata filtering.

- A immediate is constructed from the concatenation of a system message with a context that’s shaped of the related chunks of paperwork extracted in step 2, and the enter query itself. This immediate is then introduced to an LLM mannequin to generate the ultimate reply to the query from the context.

With the best underlying embedding mannequin, able to producing correct semantic representations of the enter doc chunks and the enter questions, and an environment friendly semantic search module, this answer is ready to reply questions that require retrieving existent info in a database of paperwork. For instance, in case you have a service or a product, you could possibly begin by indexing its FAQ part or documentation and have an preliminary conversational AI tailor-made to your particular providing.

Limitations of RAG primarily based on semantic search

Though RAG is an integral part in trendy domain-specific AI assistants and a wise start line for constructing a conversational AI round a specialised information base, it will probably’t reply questions that require scanning, evaluating, and reasoning throughout all paperwork in your information base concurrently, particularly when the augmentation is predicated solely on semantic search.

To grasp these limitations, let’s take into account once more the instance of deciding the place to speculate primarily based on monetary studies. If we have been to make use of RAG to converse with these studies, we might ask questions reminiscent of “What are the dangers that confronted firm X in 2022,” or “What’s the internet income of firm Y in 2022?” For every of those questions, the corresponding embedding vector, which encodes the semantic that means of the query, is used to retrieve the top-Ok semantically comparable chunks of paperwork obtainable within the search index. That is usually achieved by using an approximate nearest neighbors answer reminiscent of FAISS, NMSLIB, pgvector, or others, which attempt to strike a stability between retrieval pace and recall to realize real-time efficiency whereas sustaining passable accuracy.

Nevertheless, the previous method can’t precisely reply analytical questions throughout all paperwork, reminiscent of “What are the highest 5 corporations with the very best internet revenues in 2022?”

It is because semantic search retrieval makes an attempt to search out the Ok most comparable chunks of paperwork to the enter query. However as a result of not one of the paperwork comprise complete summaries of revenues, it’s going to return chunks of paperwork that merely comprise mentions of “internet income” and presumably “2022,” with out fulfilling the important situation of specializing in corporations with the very best income. If we current these retrieval outcomes to an LLM as context to reply the enter query, it could formulate a deceptive reply or refuse to reply, as a result of the required appropriate info is lacking.

These limitations come by design as a result of semantic search doesn’t conduct an intensive scan of all embedding vectors to search out related paperwork. As a substitute, it makes use of approximate nearest neighbor strategies to keep up affordable retrieval pace. A key technique for effectivity in these strategies is segmenting the embedding area into teams throughout indexing. This permits for shortly figuring out which teams could comprise related embeddings throughout retrieval, with out the necessity for pairwise comparisons. Moreover, even conventional nearest neighbors strategies like KNN, which scan all paperwork, solely compute primary distance metrics and aren’t appropriate for the advanced comparisons wanted for analytical reasoning. Due to this fact, RAG with semantic search is just not tailor-made for answering questions that contain analytical reasoning throughout all paperwork.

To beat these limitations, we suggest an answer that mixes RAG with metadata and entity extraction, SQL querying, and LLM brokers, as described within the following sections.

Overcoming RAG limitations with metadata, SQL, and LLM brokers

Let’s study extra deeply a query on which RAG fails, in order that we are able to hint again the reasoning required to reply it successfully. This evaluation ought to level us in direction of the best method that would complement RAG within the total answer.

Think about the query: “What are the highest 5 corporations with the very best income in 2022?”

To have the ability to reply this query, we would want to:

- Establish the income for every firm.

- Filter right down to hold the revenues of 2022 for every of them.

- Kind the revenues in descending order.

- Slice out the highest 5 revenues alongside the corporate names.

Usually, these analytical operations are achieved on structured knowledge, utilizing instruments reminiscent of pandas or SQL engines. If we had entry to a SQL desk containing the columns firm, income, and 12 months, we might simply reply our query by working a SQL question, much like the next instance:

SELECT firm, income FROM table_name WHERE 12 months = 2022 ORDER BY income DESC LIMIT 5;Storing structured metadata in a SQL desk that accommodates details about related entities allows you to reply many varieties of analytical questions by writing the proper SQL question. That is why we complement RAG in our answer with a real-time SQL querying module in opposition to a SQL desk, populated by metadata extracted in an offline course of.

However how can we implement and combine this method to an LLM-based conversational AI?

There are three steps to have the ability to add SQL analytical reasoning:

- Metadata extraction – Extract metadata from unstructured paperwork right into a SQL desk

- Textual content to SQL – Formulate SQL queries from enter questions precisely utilizing an LLM

- Device choice – Establish if a query have to be answered utilizing RAG or a SQL question

To implement these steps, first we acknowledge that info extraction from unstructured paperwork is a conventional NLP job for which LLMs present promise in attaining excessive accuracy by zero-shot or few-shot studying. Second, the power of those fashions to generate SQL queries from pure language has been confirmed for years, as seen within the 2020 release of Amazon QuickSight Q. Lastly, robotically deciding on the best device for a particular query enhances the person expertise and permits answering advanced questions by multi-step reasoning. To implement this characteristic, we delve into LLM brokers in a later part.

To summarize, the answer we suggest consists of the next core parts:

- Semantic search retrieval to reinforce era context

- Structured metadata extraction and querying with SQL

- An agent able to utilizing the best instruments to reply a query

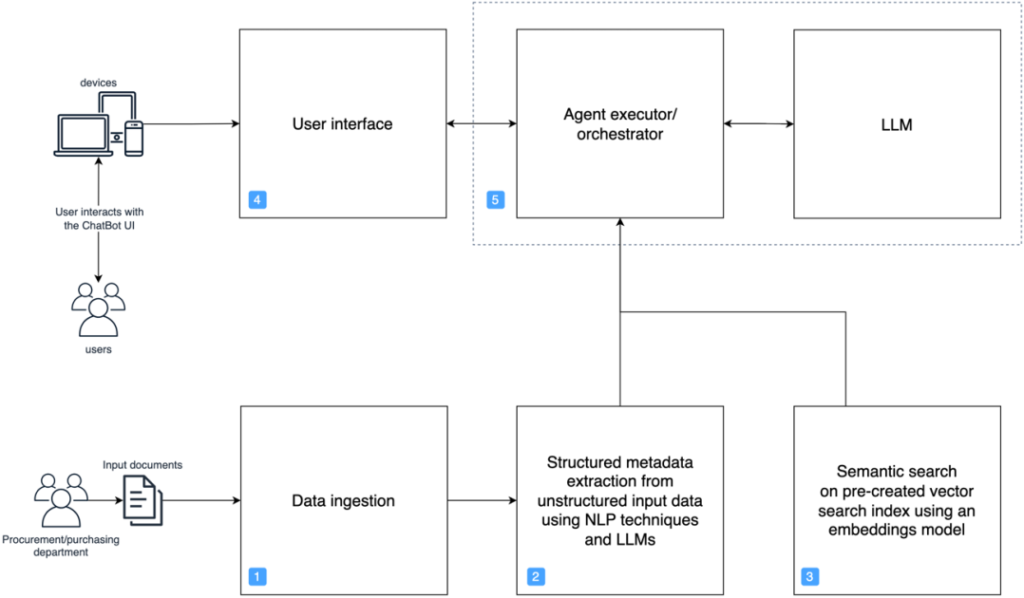

Resolution overview

The next diagram depicts a simplified structure of the answer. It helps you establish and perceive the position of the core parts and the way they work together to implement the complete LLM-assistant habits. The numbering aligns with the order of operations when implementing this answer.

In apply, we applied this answer as outlined within the following detailed structure.

For this structure, we suggest an implementation on GitHub, with loosely coupled parts the place the backend (5), knowledge pipelines (1, 2, 3) and entrance finish (4) can evolve individually. That is to simplify the collaboration throughout competencies when customizing and bettering the answer for manufacturing.

Deploy the answer

To put in this answer in your AWS account, full the next steps:

- Clone the repository on GitHub.

- Set up the backend AWS Cloud Development Kit (AWS CDK) app:

- Open the

backendfolder. - Run

npm set upto put in the dependencies. - When you have by no means used the AWS CDK within the present account and Area, run bootstrapping with

npx cdk bootstrap. - Run

npx cdk deployto deploy the stack.

- Open the

- Optionally, run the

streamlit-uias follows:- We advocate cloning this repository into an Amazon SageMaker Studio atmosphere. For extra info, seek advice from Onboard to Amazon SageMaker Domain using Quick setup.

- Contained in the

frontend/streamlit-uifolder, runbash run-streamlit-ui.sh. - Select the hyperlink with the next format to open the demo:

https://{domain_id}.studio.{area}.sagemaker.aws/jupyter/default/proxy/{port_number}/.

- Lastly, you’ll be able to run the Amazon SageMaker pipeline outlined within the

data-pipelines/04-sagemaker-pipeline-for-documents-processing.ipynbpocket book to course of the enter PDF paperwork and put together the SQL desk and the semantic search index utilized by the LLM assistant.

In the remainder of this put up, we concentrate on explaining crucial parts and design decisions, to hopefully encourage you when designing your personal AI assistant on an inner information base. We assume that parts 1 and 4 are simple to grasp, and concentrate on the core parts 2, 3, and 5.

Half 2: Entity extraction pipeline

On this part, we dive deeper into the entity extraction pipeline used to arrange structured knowledge, which is a key ingredient for analytical query answering.

Textual content extraction

Paperwork are usually saved in PDF format or as scanned photos. They might be shaped of easy paragraph layouts or advanced tables, and comprise digital or handwritten textual content. To extract info appropriately, we have to rework these uncooked paperwork into plain textual content, whereas preserving their unique construction. To do that, you need to use Amazon Textract, which is a machine studying (ML) service that gives mature APIs for textual content, tables, and kinds extraction from digital and handwritten inputs.

In element 2, we extract textual content and tables as follows:

- For every doc, we name Amazon Textract to extract the textual content and tables.

- We use the next Python script to recreate tables as pandas DataFrames.

- We consolidate the outcomes right into a single doc and insert tables as markdown.

This course of is printed by the next move diagram and concretely demonstrated in notebooks/03-pdf-document-processing.ipynb.

Entity extraction and querying utilizing LLMs

To reply analytical questions successfully, it’s essential to extract related metadata and entities out of your doc’s information base to an accessible structured knowledge format. We propose utilizing SQL to retailer this info and retrieve solutions because of its recognition, ease of use, and scalability. This selection additionally advantages from the confirmed language fashions’ capability to generate SQL queries from pure language.

On this part, we dive deeper into the next parts that allow analytical questions:

- A batch course of that extracts structured knowledge out of unstructured knowledge utilizing LLMs

- An actual-time module that converts pure language inquiries to SQL queries and retrieves outcomes from a SQL database

You possibly can extract the related metadata to help analytical questions as follows:

- Outline a JSON schema for info it’s essential to extract, which accommodates an outline of every subject and its knowledge kind, and consists of examples of the anticipated values.

- For every doc, immediate an LLM with the JSON schema and ask it to extract the related knowledge precisely.

- When the doc size is past the context size, and to scale back the extraction price with LLMs, you need to use semantic search to retrieve and current the related chunks of paperwork to the LLM throughout extraction.

- Parse the JSON output and validate the LLM extraction.

- Optionally, again up the outcomes on Amazon S3 as CSV recordsdata.

- Load into the SQL database for later querying.

This course of is managed by the next structure, the place the paperwork in textual content format are loaded with a Python script that runs in an Amazon SageMaker Processing job to carry out the extraction.

For every group of entities, we dynamically assemble a immediate that features a clear description of the data extraction job, and features a JSON schema that defines the anticipated output and consists of the related doc chunks as context. We additionally add a couple of examples of enter and proper output to enhance the extraction efficiency with few-shot studying. That is demonstrated in notebooks/05-entities-extraction-to-structured-metadata.ipynb.

Half 3: Construct an agentic doc assistant with Amazon Bedrock

On this part, we exhibit the way to use Amazon Bedrock LLMs to question knowledge and construct an LLM agent that enhances RAG with analytical capabilities, thereby enabling you to construct clever doc assistants that may reply advanced domain-specific questions throughout a number of paperwork. You possibly can seek advice from the Lambda function on GitHub for the concrete implementation of the agent and instruments described on this half.

Formulate SQL queries and reply analytical questions

Now that we now have a structured metadata retailer with the related entities extracted and loaded right into a SQL database that we are able to question, the query that is still is the way to generate the best SQL question from the enter pure language questions?

Fashionable LLMs are good at producing SQL. As an example, should you request from the Anthropic Claude LLM by Amazon Bedrock to generate a SQL question, you will note believable solutions. Nevertheless, we have to abide by a couple of guidelines when writing the immediate to achieve extra correct SQL queries. These guidelines are particularly vital for advanced queries to scale back hallucination and syntax errors:

- Describe the duty precisely inside the immediate

- Embrace the schema of the SQL tables inside the immediate, whereas describing every column of the desk and specifying its knowledge kind

- Explicitly inform the LLM to solely use present column names and knowledge varieties

- Add a couple of rows of the SQL tables

You would additionally postprocess the generated SQL question utilizing a linter reminiscent of sqlfluff to appropriate formatting, or a parser reminiscent of sqlglot to detect syntax errors and optimize the question. Furthermore, when the efficiency doesn’t meet the requirement, you could possibly present a couple of examples inside the immediate to steer the mannequin with few-shot studying in direction of producing extra correct SQL queries.

From an implementation perspective, we use an AWS Lambda operate to orchestrate the next course of:

- Name an Anthropic Claude mannequin in Amazon Bedrock with the enter query to get the corresponding SQL question. Right here, we use the SQLDatabase class from LangChain so as to add schema descriptions of related SQL tables, and use a customized immediate.

- Parse, validate, and run the SQL question in opposition to the Amazon Aurora PostgreSQL-Compatible Edition database.

The structure for this a part of the answer is highlighted within the following diagram.

Safety issues to forestall SQL injection assaults

As we allow the AI assistant to question a SQL database, we now have to verify this doesn’t introduce safety vulnerabilities. To realize this, we suggest the next safety measures to forestall SQL injection assaults:

- Apply least privilege IAM permissions – Restrict the permission of the Lambda operate that runs the SQL queries utilizing an AWS Identity and Access Management (IAM) coverage and position that follows the least privilege principle. On this case, we grant read-only entry.

- Restrict knowledge entry – Solely present entry to the naked minimal of tables and columns to forestall info disclosure assaults.

- Add a moderation layer – Introduce a moderation layer that detects immediate injection makes an attempt early on and prevents them from propagating to the remainder of the system. It might take the type of rule-based filters, similarity matching in opposition to a database of identified immediate injection examples, or an ML classifier.

Semantic search retrieval to reinforce era context

The answer we suggest makes use of RAG with semantic search in element 3. You possibly can implement this module utilizing knowledge bases for Amazon Bedrock. Moreover, there are a selection of others choices to implement RAG, such because the Amazon Kendra Retrieval API, Amazon OpenSearch vector database, and Amazon Aurora PostgreSQL with pgvector, amongst others. The open supply bundle aws-genai-llm-chatbot demonstrates the way to use many of those vector search choices to implement an LLM-powered chatbot.

On this answer, as a result of we’d like each SQL querying and vector search, we determined to make use of Amazon Aurora PostgreSQL with the pgvector extension, which helps each options. Due to this fact, we implement the semantic-search RAG element with the next structure.

The method of answering questions utilizing the previous structure is finished in two foremost phases.

First, an offline-batch course of, run as a SageMaker Processing job, creates the semantic search index as follows:

- Both periodically, or upon receiving new paperwork, a SageMaker job is run.

- It masses the textual content paperwork from Amazon S3 and splits them into overlapping chunks.

- For every chunk, it makes use of an Amazon Titan embedding mannequin to generate an embedding vector.

- It makes use of the PGVector class from LangChain to ingest the embeddings, with their doc chunks and metadata, into Amazon Aurora PostgreSQL and create a semantic search index on all of the embedding vectors.

Second, in actual time and for every new query, we assemble a solution as follows:

- The query is acquired by the orchestrator that runs on a Lambda operate.

- The orchestrator embeds the query with the identical embedding mannequin.

- It retrieves the top-Ok most related paperwork chunks from the PostgreSQL semantic search index. It optionally makes use of metadata filtering to enhance precision.

- These chunks are inserted dynamically in an LLM immediate alongside the enter query.

- The immediate is introduced to Anthropic Claude on Amazon Bedrock, to instruct it to reply the enter query primarily based on the obtainable context.

- Lastly, the generated reply is shipped again to the orchestrator.

An agent able to utilizing instruments to cause and act

Thus far on this put up, we now have mentioned treating questions that require both RAG or analytical reasoning individually. Nevertheless, many real-world questions demand each capabilities, generally over a number of steps of reasoning, to be able to attain a last reply. To help these extra advanced questions, we have to introduce the notion of an agent.

LLM brokers, such because the agents for Amazon Bedrock, have emerged lately as a promising answer able to utilizing LLMs to cause and adapt utilizing the present context and to decide on acceptable actions from a listing of choices, which presents a normal problem-solving framework. As mentioned in LLM Powered Autonomous Agents, there are a number of prompting methods and design patterns for LLM brokers that help advanced reasoning.

One such design sample is Cause and Act (ReAct), launched in ReAct: Synergizing Reasoning and Acting in Language Models. In ReAct, the agent takes as enter a objective that may be a query, identifies the items of knowledge lacking to reply it, and proposes iteratively the best device to collect info primarily based on the obtainable instruments’ descriptions. After receiving the reply from a given device, the LLM reassesses whether or not it has all the data it wants to completely reply the query. If not, it does one other step of reasoning and makes use of the identical or one other device to collect extra info, till a last response is prepared or a restrict is reached.

The next sequence diagram explains how a ReAct agent works towards answering the query “Give me the highest 5 corporations with the very best income within the final 2 years and establish the dangers related to the highest one.”

The main points of implementing this method in Python are described in Custom LLM Agent. In our answer, the agent and instruments are applied with the next highlighted partial structure.

To reply an enter query, we use AWS providers as follows:

- A person inputs their query by a UI, which calls an API on Amazon API Gateway.

- API Gateway sends the query to a Lambda operate implementing the agent executor.

- The agent calls the LLM with a immediate that accommodates an outline of the instruments obtainable, the ReAct instruction format, and the enter query, after which parses the subsequent motion to finish.

- The motion accommodates which device to name and what the motion enter is.

- If the device to make use of is SQL, the agent executor calls SQLQA to transform the query to SQL and run it. Then it provides the outcome to the immediate and calls the LLM once more to see if it will probably reply the unique query or if extra actions are wanted.

- Equally, if the device to make use of is semantic search, then the motion enter is parsed out and used to retrieve from the PostgreSQL semantic search index. It provides the outcomes to the immediate and checks if the LLM is ready to reply or wants one other motion.

- In any case the data to reply a query is obtainable, the LLM agent formulates a last reply and sends it again to the person.

You possibly can lengthen the agent with additional instruments. Within the implementation obtainable on GitHub, we exhibit how one can add a search engine and a calculator as additional instruments to the aforementioned SQL engine and semantic search instruments. To retailer the continuing dialog historical past, we use an Amazon DynamoDB desk.

From our expertise thus far, we now have seen that the next are keys to a profitable agent:

- An underlying LLM able to reasoning with the ReAct format

- A transparent description of the obtainable instruments, when to make use of them, and an outline of their enter arguments with, probably, an instance of the enter and anticipated output

- A transparent define of the ReAct format that the LLM should observe

- The correct instruments for fixing the enterprise query made obtainable to the LLM agent to make use of

- Appropriately parsing out the outputs from the LLM agent responses because it causes

To optimize prices, we advocate caching the most typical questions with their solutions and updating this cache periodically to scale back calls to the underlying LLM. As an example, you’ll be able to create a semantic search index with the most typical questions as defined beforehand, and match the brand new person query in opposition to the index first earlier than calling the LLM. To discover different caching choices, seek advice from LLM Caching integrations.

Supporting different codecs reminiscent of video, picture, audio, and 3D recordsdata

You possibly can apply the identical answer to varied varieties of info, reminiscent of photos, movies, audio, and 3D design recordsdata like CAD or mesh recordsdata. This entails utilizing established ML strategies to explain the file content material in textual content, which might then be ingested into the answer that we explored earlier. This method allows you to conduct QA conversations on these numerous knowledge varieties. As an example, you’ll be able to develop your doc database by creating textual descriptions of photos, movies, or audio content material. You can too improve the metadata desk by figuring out properties by classification or object detection on parts inside these codecs. After this extracted knowledge is listed in both the metadata retailer or the semantic search index for paperwork, the general structure of the proposed system stays largely constant.

Conclusion

On this put up, we confirmed how utilizing LLMs with the RAG design sample is critical for constructing a domain-specific AI assistant, however is inadequate to achieve the required stage of reliability to generate enterprise worth. Due to this, we proposed extending the favored RAG design sample with the ideas of brokers and instruments, the place the pliability of instruments permits us to make use of each conventional NLP strategies and trendy LLM capabilities to allow an AI assistant with extra choices to hunt info and help customers in fixing enterprise issues effectively.

The answer demonstrates the design course of in direction of an LLM assistant in a position to reply numerous varieties of retrieval, analytical reasoning, and multi-step reasoning questions throughout your whole information base. We additionally highlighted the significance of considering backward from the varieties of questions and duties that your LLM assistant is predicted to assist customers with. On this case, the design journey led us to an structure with the three parts: semantic search, metadata extraction and SQL querying, and LLM agent and instruments, which we expect is generic and versatile sufficient for a number of use instances. We additionally consider that by getting inspiration from this answer and diving deep into your customers’ wants, it is possible for you to to increase this answer additional towards what works finest for you.

In regards to the authors

Mohamed Ali Jamaoui is a Senior ML Prototyping Architect with 10 years of expertise in manufacturing machine studying. He enjoys fixing enterprise issues with machine studying and software program engineering, and serving to prospects extract enterprise worth with ML. As a part of AWS EMEA Prototyping and Cloud Engineering, he helps prospects construct enterprise options that leverage improvements in MLOPs, NLP, CV and LLMs.

Mohamed Ali Jamaoui is a Senior ML Prototyping Architect with 10 years of expertise in manufacturing machine studying. He enjoys fixing enterprise issues with machine studying and software program engineering, and serving to prospects extract enterprise worth with ML. As a part of AWS EMEA Prototyping and Cloud Engineering, he helps prospects construct enterprise options that leverage improvements in MLOPs, NLP, CV and LLMs.

Giuseppe Hannen is a ProServe Affiliate Marketing consultant. Giuseppe applies his analytical abilities together with AI&ML to develop clear and efficient options for his prospects. He likes to give you easy options to sophisticated issues, particularly those who contain the newest technological developments and analysis.

Giuseppe Hannen is a ProServe Affiliate Marketing consultant. Giuseppe applies his analytical abilities together with AI&ML to develop clear and efficient options for his prospects. He likes to give you easy options to sophisticated issues, particularly those who contain the newest technological developments and analysis.

Laurens ten Cate is a Senior Information Scientist. Laurens works with enterprise prospects in EMEA serving to them speed up their enterprise outcomes utilizing AWS AI/ML applied sciences. He focuses on NLP options and focusses on the Provide Chain & Logistics trade. In his free time he enjoys studying and artwork.

Laurens ten Cate is a Senior Information Scientist. Laurens works with enterprise prospects in EMEA serving to them speed up their enterprise outcomes utilizing AWS AI/ML applied sciences. He focuses on NLP options and focusses on the Provide Chain & Logistics trade. In his free time he enjoys studying and artwork.

Irina Radu is a Prototyping Engagement Supervisor, a part of AWS EMEA Prototyping and Cloud Engineering. She helps prospects get one of the best out of the newest tech, innovate quicker and suppose larger.

Irina Radu is a Prototyping Engagement Supervisor, a part of AWS EMEA Prototyping and Cloud Engineering. She helps prospects get one of the best out of the newest tech, innovate quicker and suppose larger.