WTF is the Difference Between GBM and XGBoost?

Image by upklyak on Freepik

I'm sure everyone knows about the GBM and XGBoost algorithms. They are go-to algorithms for many real-world use cases and competitions because they often score better on the target metric than other models.

For those who don't know them, GBM (Gradient Boosting Machine) and XGBoost (eXtreme Gradient Boosting) are ensemble learning methods. Ensemble learning is a machine learning technique in which multiple "weak" models (often decision trees) are trained and combined into a single, stronger model.

As their names suggest, both algorithms are based on the boosting ensemble technique. Boosting combines multiple weak learners sequentially, with each one correcting its predecessor: every new learner learns from the errors of the previous models and tries to correct them.

That's the fundamental similarity between GBM and XGBoost, but what about the differences? We'll discuss them in this article, so let's get into it.

As mentioned above, GBM is based on boosting, which trains weak learners one after another so that each learns from the previous errors and, together, they form a robust model. GBM builds a better model at each iteration by minimizing a loss function using gradient descent. Gradient descent is a method for iteratively finding the minimum of a function, such as the loss function; in GBM, each new tree is fit to the negative gradient of the loss with respect to the current predictions, so adding that tree moves the model downhill. The iterations continue until a stopping criterion is reached, such as a fixed number of trees.
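In the usual formulation, the "gradient descent" in GBM happens in function space: each new weak learner h_m is fit to the negative gradient of the loss L (the pseudo-residuals), and the model takes a small step in that direction, scaled by a learning rate ν. A common simplified form (the notation here is ours, and the line-search step of the original formulation is folded into ν) is:

```latex
% Pseudo-residuals: negative gradient of the loss, evaluated at the current model F_{m-1}
r_{im} = -\left[ \frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)} \right]_{F = F_{m-1}}

% Fit the weak learner h_m to the pairs (x_i, r_{im}), then update the model
F_m(x) = F_{m-1}(x) + \nu \, h_m(x)

% Example: with squared error L = \tfrac{1}{2}\,(y - F)^2 the pseudo-residual is the ordinary residual
r_{im} = y_i - F_{m-1}(x_i)
```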

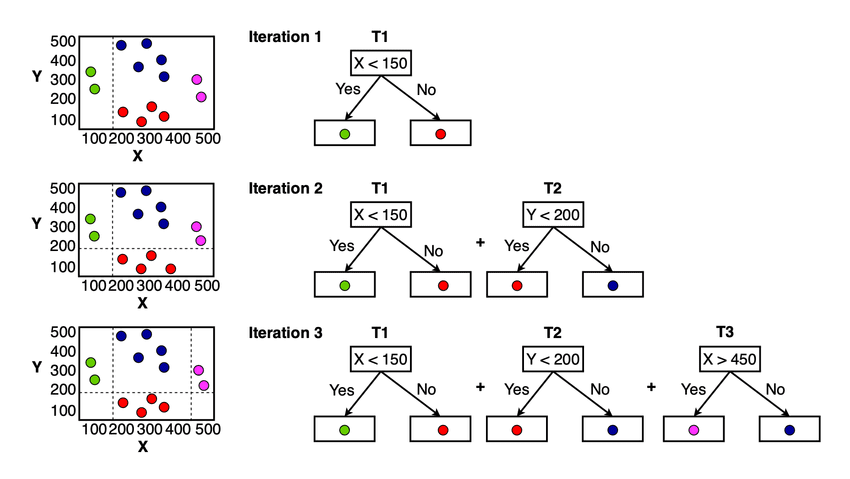

For the GBM ideas, you may see it within the picture under.

GBM Mannequin Idea (Chhetri et al. (2022))

As the image above shows, at each iteration the model tries to minimize the loss function and learn from the previous mistakes. The final model is the sum of the predictions of all the weak learners.
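To make the loop concrete, here is a minimal from-scratch sketch in Python. It is an illustration rather than how any library implements GBM, and it assumes squared-error loss, a single numeric feature, and one-split trees ("stumps") as the weak learners. The names `fit_stump`, `gbm_fit`, and `gbm_predict` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree (a "stump") to the residuals.

    Needs at least two distinct x values. Returns (threshold, left_value,
    right_value); each value is the mean residual on that side of the split.
    """
    best = None
    for t in sorted(set(xs))[:-1]:  # candidate thresholds: every x but the largest
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def gbm_fit(xs, ys, n_rounds=100, learning_rate=0.1):
    """Gradient boosting for squared-error loss on a single feature."""
    base = sum(ys) / len(ys)  # F_0: the constant that minimises squared error
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For L = 1/2 (y - F)^2 the negative gradient is just the residual y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lv, rv = fit_stump(xs, residuals)
        stumps.append((t, lv, rv))
        # F_m(x) = F_{m-1}(x) + learning_rate * h_m(x)
        preds = [p + learning_rate * (lv if x <= t else rv) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps


def gbm_predict(model, x):
    """The final model: base value plus the scaled sum of every stump's output."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(lv if x <= t else rv for t, lv, rv in stumps)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]
model = gbm_fit(xs, ys)
print([round(gbm_predict(model, x), 2) for x in xs])
```

Each round fits a stump to whatever error is left over, and the prediction is the base value plus the learning-rate-scaled sum of all stumps, which is the "sum of weak learners" described above.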

XGBoost, or eXtreme Gradient Boosting, is a machine learning algorithm based on gradient boosting, described by Tianqi Chen and Carlos Guestrin in their 2016 paper. At a basic level, the algorithm still follows the same sequential strategy of improving the next model based on gradient descent. However, a few differences make XGBoost one of the best models in terms of performance and speed.

1. Regularization

Regularization is a machine learning technique for avoiding overfitting. It is a collection of methods that keep the model from becoming overcomplicated and generalizing poorly. It has become an important technique because many models fit the training data too well.

Classic GBM does not add an explicit regularization term to its objective (it relies on indirect controls such as the learning rate, tree depth, and subsampling), so its optimization focuses only on minimizing the loss function. XGBoost, in contrast, adds a regularization term to its objective that penalizes overly complex models.

XGBoost can apply two types of regularization: L1 regularization (Lasso) and L2 regularization (Ridge). In linear models, L1 regularization pushes coefficients to exactly zero (effectively performing feature selection), while L2 regularization shrinks all coefficients smoothly (which helps with multicollinearity). With XGBoost's default tree booster, the same penalties act on the leaf weights, the values each leaf outputs, rather than on feature coefficients. By applying both, XGBoost can control overfitting more directly than GBM.
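For the tree booster, the XGBoost paper writes the penalty for a tree with T leaves and leaf weights w_j as gamma * T + 0.5 * lambda * sum(w_j^2), and the library also offers an L1 term alpha * sum(|w_j|) (the `alpha`/`reg_alpha` parameter, next to `lambda`/`reg_lambda` for L2). If G and H are the sums of the first and second derivatives of the loss over the rows in a leaf, the best weight with only the L2 term is w* = -G / (H + lambda); minimizing G*w + 0.5*(H + lambda)*w^2 + alpha*|w| instead gives a soft-thresholded version of that formula. The sketch below (helper names are hypothetical) shows how each penalty shrinks a leaf's output.

```python
def soft_threshold(g, alpha):
    """Move g toward zero by alpha; anything inside [-alpha, alpha] becomes 0."""
    if g > alpha:
        return g - alpha
    if g < -alpha:
        return g + alpha
    return 0.0


def leaf_weight(grad_sum, hess_sum, reg_lambda=0.0, reg_alpha=0.0):
    """Minimiser of  G*w + 0.5*(H + lambda)*w**2 + alpha*|w|  for one leaf.

    Equivalent to -soft_threshold(G, alpha) / (H + lambda); written with -G
    so a fully shrunk weight comes out as 0.0 rather than -0.0.
    """
    return soft_threshold(-grad_sum, reg_alpha) / (hess_sum + reg_lambda)


# A leaf of five rows, target 2, current prediction 0, squared-error loss:
# g = prediction - target = -2 per row, h = 1 per row, so G = -10 and H = 5.
G, H = -10.0, 5.0
print(round(leaf_weight(G, H), 4))                                  # 2.0 (no penalty)
print(round(leaf_weight(G, H, reg_lambda=1.0), 4))                  # 1.6667 (L2 shrinks)
print(round(leaf_weight(G, H, reg_lambda=1.0, reg_alpha=2.0), 4))   # 1.3333 (L1 shrinks more)
print(round(leaf_weight(G, H, reg_lambda=1.0, reg_alpha=12.0), 4))  # 0.0 (L1 zeroes it out)
```

L2 divides by a larger denominator, so every leaf is pulled toward zero; L1 subtracts a fixed amount first, so leaves with weak evidence end up at exactly zero.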

2. Parallelization

GBM tends to train more slowly than XGBoost because the latter parallelizes parts of the training process. The trees are still built one after another, as boosting requires, but the work inside each tree can be parallelized.

Parallelization is aimed at speeding up tree building, mainly the search for the best split. By using all available processor cores, XGBoost can shorten training time.
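XGBoost does this in native code with OpenMP threads (controlled by `nthread`, or `n_jobs` in the scikit-learn wrapper). The Python sketch below only mirrors the structure: within one node, each feature's candidate splits are scored independently, so features can be handed to different workers and the best result kept. It assumes the second-order split gain from the XGBoost paper without the gamma term; the function names are hypothetical, and because of Python's global interpreter lock, the threads here illustrate the pattern rather than deliver a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def best_split_for_feature(column, grads, hess, reg_lambda=1.0):
    """Scan one feature's sorted values; return (gain, threshold) of its best split."""
    order = sorted(range(len(column)), key=lambda i: column[i])
    G, H = sum(grads), sum(hess)
    parent_score = G * G / (H + reg_lambda)
    best = (float("-inf"), None)
    gl = hl = 0.0
    for pos in range(len(order) - 1):
        i, nxt = order[pos], order[pos + 1]
        gl += grads[i]
        hl += hess[i]
        if column[i] == column[nxt]:
            continue  # cannot place a threshold between equal values
        gr, hr = G - gl, H - hl
        # Gain = 1/2 [G_L^2/(H_L+lambda) + G_R^2/(H_R+lambda) - G^2/(H+lambda)]
        gain = 0.5 * (gl * gl / (hl + reg_lambda) + gr * gr / (hr + reg_lambda) - parent_score)
        if gain > best[0]:
            best = (gain, (column[i] + column[nxt]) / 2)
    return best


def find_best_split(columns, grads, hess, n_workers=4):
    """Score every feature concurrently, then keep the overall best split."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(lambda col: best_split_for_feature(col, grads, hess), columns))
    best_feature = max(range(len(columns)), key=lambda f: results[f][0])
    gain, threshold = results[best_feature]
    return best_feature, threshold, gain


# Squared-error gradients for targets [0, 0, 0, 1, 1, 1] with current prediction 0.
grads = [0.0, 0.0, 0.0, -1.0, -1.0, -1.0]
hess = [1.0] * 6
columns = [[5, 1, 4, 2, 6, 3],      # feature 0: unrelated to the target
           [1, 2, 3, 10, 11, 12]]   # feature 1: separates the two groups
print(find_best_split(columns, grads, hess))
```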

Speaking of speeding up XGBoost, the developers also created their own data structure, DMatrix, into which the data is loaded for memory efficiency and faster training.

3. Missing Data Handling

Our training dataset may contain missing data, which we usually must handle explicitly before passing it to the algorithm. XGBoost, however, has its own built-in missing data handling, whereas classic GBM does not.

XGBoost implements its own technique for handling missing data, called Sparsity-aware Split Finding. Whenever XGBoost encounters sparse data (missing values, frequent zero entries, or one-hot encoded features), it learns from the data where those entries should go: during splitting, it tries sending them in each direction and assigns them the default direction that minimizes the loss.
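A simplified sketch of that idea, assuming the same second-order gain as the XGBoost paper (gamma omitted) and `None` as the missing-value marker: only rows that have a value are sorted and scanned, and at each candidate threshold the missing rows are tried on the left and on the right as a group, keeping whichever default direction gives the larger gain. The function name is hypothetical.

```python
def sparsity_aware_split(column, grads, hess, reg_lambda=1.0):
    """Best split on one feature whose missing entries are None.

    Returns (gain, threshold, default_direction), where default_direction is
    where rows with a missing value are sent ("left" or "right").
    """
    def score(g, h):
        return g * g / (h + reg_lambda)

    G, H = sum(grads), sum(hess)
    # Only rows with a value are sorted and scanned; missing rows move as one block.
    present = sorted((x, g, h) for x, g, h in zip(column, grads, hess) if x is not None)
    g_miss = G - sum(g for _, g, _ in present)
    h_miss = H - sum(h for _, _, h in present)

    best = (float("-inf"), None, None)
    gl = hl = 0.0
    for pos in range(len(present) - 1):
        x, g, h = present[pos]
        gl, hl = gl + g, hl + h
        x_next = present[pos + 1][0]
        if x == x_next:
            continue
        threshold = (x + x_next) / 2
        # Try both default directions for the block of missing rows.
        candidates = (("right", gl, hl), ("left", gl + g_miss, hl + h_miss))
        for direction, left_g, left_h in candidates:
            gain = 0.5 * (score(left_g, left_h) + score(G - left_g, H - left_h) - score(G, H))
            if gain > best[0]:
                best = (gain, threshold, direction)
    return best


# Targets [0, 0, 0, 1, 1, 1], current prediction 0; two of the high-target rows are missing.
column = [1, 2, 3, None, 10, None]
grads = [0.0, 0.0, 0.0, -1.0, -1.0, -1.0]
hess = [1.0] * 6
print(sparsity_aware_split(column, grads, hess))
```

The learned direction is stored with the split, so at prediction time a row with a missing value simply follows it.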

4. Tree Pruning

GBM's growth strategy is to stop splitting a node as soon as the algorithm encounters a split with negative gain, that is, one that does not reduce the loss. This strategy can lead to suboptimal results because it relies only on local decisions and may miss the bigger picture.

XGBoost avoids this greedy strategy: it grows the tree up to the depth set by the max_depth parameter and then prunes backward. Splits with negative gain are pruned, with one exception: if a split has negative gain but a split below it has positive gain, it can still be retained when the combined gain is positive.
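The sketch below models the backward pruning rule as described in this section, not XGBoost's actual pruner: each node stores the gain of its split, the tree is walked bottom-up, and a split is collapsed into a leaf when the total gain of the splits kept beneath it (including its own) is not positive. The `Node` class and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A tree node; `gain` is the loss reduction of its split (unused for leaves)."""
    gain: float = 0.0
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def prune(node: Node) -> float:
    """Prune bottom-up; return the total gain of the splits kept under `node`.

    A split is removed when the summed gain of its subtree is not positive,
    so a negative split survives if deeper splits more than make up for it.
    """
    if node.is_leaf():
        return 0.0
    total = node.gain + prune(node.left) + prune(node.right)
    if total <= 0.0:
        node.left = node.right = None  # collapse this split into a leaf
        return 0.0
    return total


def count_splits(node: Node) -> int:
    return 0 if node.is_leaf() else 1 + count_splits(node.left) + count_splits(node.right)


# A -2 split whose child split gains +10: combined +8, so both splits are kept.
tree_a = Node(gain=-2.0, left=Node(gain=10.0, left=Node(), right=Node()), right=Node())
# A lone -2 split with only leaves below it: pruned away.
tree_b = Node(gain=-2.0, left=Node(), right=Node())
print(prune(tree_a), count_splits(tree_a))  # 8.0 2
print(prune(tree_b), count_splits(tree_b))  # 0.0 0
```

A greedy grower that stopped at the first negative split would never have reached the +10 split in `tree_a`.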

5. Built-In Cross-Validation

Cross-validation is a technique for assessing a model's generalization and robustness by systematically splitting the data into training and validation parts across multiple iterations. Together, the results show whether the model is overfitting.

Normally, a machine learning algorithm needs external tools to run cross-validation, but XGBoost has built-in cross-validation that can be used during training. The cross-validation is evaluated at every boosting iteration, which shows whether each additional tree still improves performance on held-out data.
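In the Python package this is the `xgboost.cv` function, which reports the mean and standard deviation of the evaluation metric across folds for each boosting round. To show what "at every boosting iteration" means without depending on the library, the sketch below rebuilds the idea from scratch with a hypothetical toy booster (one-feature stumps, squared-error loss): for each fold it trains on the remaining data, records the validation RMSE after every round, and averages across folds.

```python
import random


def fit_stump(xs, residuals):
    """One-split regression tree on a 1-D feature; returns (threshold, left, right)."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def cv_per_round(xs, ys, n_rounds=30, n_folds=3, learning_rate=0.3, seed=0):
    """Mean validation RMSE across k folds, recorded after every boosting round."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[k::n_folds] for k in range(n_folds)]
    history = [0.0] * n_rounds
    for valid in folds:
        held_out = set(valid)
        train = [i for i in idx if i not in held_out]
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        vx, vy = [xs[i] for i in valid], [ys[i] for i in valid]
        base = sum(ty) / len(ty)
        train_pred, valid_pred = [base] * len(tx), [base] * len(vx)
        for r in range(n_rounds):
            # Fit the next stump on the training fold's residuals only.
            t, lv, rv = fit_stump(tx, [y - p for y, p in zip(ty, train_pred)])
            train_pred = [p + learning_rate * (lv if x <= t else rv) for x, p in zip(tx, train_pred)]
            valid_pred = [p + learning_rate * (lv if x <= t else rv) for x, p in zip(vx, valid_pred)]
            # Score the held-out fold after this round and add its share of the average.
            rmse = (sum((y - p) ** 2 for y, p in zip(vy, valid_pred)) / len(vy)) ** 0.5
            history[r] += rmse / n_folds
    return history


xs = list(range(30))
ys = [0.0 if x < 15 else 1.0 for x in xs]
history = cv_per_round(xs, ys)
best_round = min(range(len(history)), key=history.__getitem__) + 1
print(f"best round: {best_round}, mean validation RMSE: {history[best_round - 1]:.3f}")
```

Picking the round with the lowest mean validation error is the same idea as combining cross-validation with early stopping.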

GBM and XGBoost are popular algorithms in many real-world cases and competitions. Conceptually, both are boosting algorithms that use weak learners to build better models. However, they differ in a few aspects of their implementation. XGBoost enhances the algorithm by embedding regularization, performing parallelization, handling missing data better, using a different tree pruning strategy, and offering built-in cross-validation.

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media.