A Guide on 12 Tuning Strategies for Production-Ready RAG Applications | by Leonie Monigatti | Dec, 2023

How to improve the performance of your Retrieval-Augmented Generation (RAG) pipeline with these “hyperparameters” and tuning strategies

Data Science is an experimental science. It starts with the “No Free Lunch Theorem,” which states that there is no one-size-fits-all algorithm that works best for every problem. And it results in data scientists using experiment tracking systems to help them tune the hyperparameters of their Machine Learning (ML) projects to achieve the best performance.

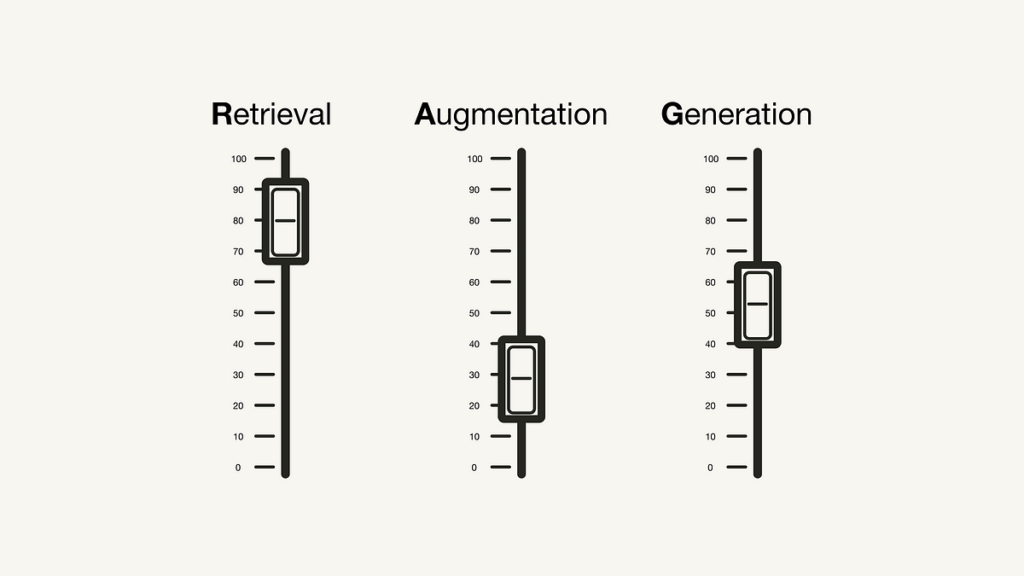

This article looks at a Retrieval-Augmented Generation (RAG) pipeline through the eyes of a data scientist. It discusses potential “hyperparameters” you can experiment with to improve your RAG pipeline’s performance. Similar to experimentation in Deep Learning, where, e.g., data augmentation techniques are not a hyperparameter but a knob you can tune and experiment with, this article will also cover different strategies you can apply, which are not per se hyperparameters.

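This article covers the following “hyperparameters” sorted by their relevant stage. In the ingestion stage of a RAG pipeline, you can achieve performance improvements by tuning:

- Data cleaning

- Chunking

- Embedding models

- Metadata

- Multi-indexing

- Indexing algorithms

And in the inferencing stage (retrieval and generation), you can tune:

- Query transformations

- Retrieval parameters

- Advanced retrieval strategies

- Re-ranking models

- LLMs

- Prompt engineering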

Note that this article covers text use cases of RAG. For multimodal RAG applications, different considerations may apply.

The ingestion stage is a preparation step for building a RAG pipeline, similar to the data cleaning and preprocessing steps in an ML pipeline. Usually, the ingestion stage consists of the following steps:

- Collect data

- Chunk data

- Generate vector embeddings of chunks

- Store vector embeddings and chunks in a vector database
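The four ingestion steps above can be sketched end to end in a few lines of Python. This is a minimal toy with two stand-ins. A hypothetical bag-of-words `embed` function replaces a real embedding model, and plain in-memory lists replace a vector database. The names `embed`, `InMemoryVectorStore`, and `ingest` are illustrative only and do not come from any library.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str, vocab: list[str]) -> list[float]:
    """Hypothetical stand-in for an embedding model: an L2-normalized
    bag-of-words vector over a fixed vocabulary."""
    counts = Counter(re.findall(r"\w+", text.lower()))
    vec = [float(counts[word]) for word in vocab]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero
    return [v / norm for v in vec]


@dataclass
class InMemoryVectorStore:
    """Toy stand-in for a vector database: parallel lists of chunks and vectors."""
    chunks: list[str] = field(default_factory=list)
    vectors: list[list[float]] = field(default_factory=list)

    def add(self, chunk: str, vector: list[float]) -> None:
        self.chunks.append(chunk)
        self.vectors.append(vector)


def ingest(documents: list[str], chunk_size: int, vocab: list[str]) -> InMemoryVectorStore:
    store = InMemoryVectorStore()
    for doc in documents:                               # 1. collected data
        for start in range(0, len(doc), chunk_size):    # 2. fixed-size chunks
            chunk = doc[start:start + chunk_size]
            store.add(chunk, embed(chunk, vocab))       # 3. embed + 4. store
    return store


docs = ["RAG pipelines retrieve context.", "Chunking splits documents."]
store = ingest(docs, chunk_size=16, vocab=["rag", "context", "chunking", "documents"])
print(store.chunks)
```

Each later step in this article swaps one of these toy pieces for a tunable choice: the chunker, the embedding model, the metadata stored next to each vector, or the index.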

This section discusses impactful strategies and hyperparameters that you can apply and tune to improve the relevance of the retrieved contexts in the inferencing stage.

Data cleaning

Like any Data Science pipeline, the quality of your data heavily impacts the outcome of your RAG pipeline [8, 9]. Before moving on to any of the following steps, make sure that your data meets the following criteria:

- Clean: Apply at least some basic data cleaning techniques commonly used in Natural Language Processing, such as making sure all special characters are encoded correctly.

- Correct: Make sure your information is consistent and factually accurate to avoid conflicting information confusing your LLM.
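Here is a minimal sketch of the “Clean” criterion using only Python’s standard library. It assumes the cleaning steps you want are Unicode normalization, removal of control characters, and collapsing of whitespace; your data may need others. The “Correct” criterion requires a content review, which this kind of code cannot perform. `clean_text` is a hypothetical helper name.

```python
import re
import unicodedata


def clean_text(text: str) -> str:
    # NFKC normalization maps compatibility characters to a canonical form,
    # e.g., the "ﬁ" ligature becomes "fi" and a non-breaking space becomes " ".
    text = unicodedata.normalize("NFKC", text)
    # Drop control characters (Unicode category "Cc") but keep newlines and tabs.
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc")
    # Collapse runs of spaces/tabs, and limit blank lines to one in a row.
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


print(repr(clean_text("Dif\ufb01cult\u00a0  text\x00\n\n\n\nend")))
# 'Difficult text\n\nend'
```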

Chunking

Chunking your documents is an essential preparation step for your external knowledge source in a RAG pipeline that can impact the performance [1, 8, 9]. It is a technique to generate logically coherent snippets of information, usually by breaking up long documents into smaller sections (but it can also combine smaller snippets into coherent paragraphs).

One consideration you need to make is the choice of the chunking technique. For example, in LangChain, different text splitters split up documents by different logics, such as by characters, tokens, etc. The right choice depends on the type of data you have. For example, you will need to use different chunking techniques if your input data is code vs. if it is a Markdown file.

The ideal length of your chunk (chunk_size) depends on your use case: If your use case is question answering, you may need shorter specific chunks, but if your use case is summarization, you may need longer chunks. Additionally, if a chunk is too short, it might not contain enough context. On the other hand, if a chunk is too long, it might contain too much irrelevant information.

Additionally, you will need to think about a “rolling window” between chunks (overlap) to introduce some additional context.
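The following minimal character-based chunker with a rolling window makes `chunk_size` and `overlap` concrete. It is a sketch, not LangChain’s implementation. LangChain’s text splitters accept similarly named `chunk_size` and `chunk_overlap` arguments, but they can also respect separators such as paragraph breaks instead of cutting at fixed character offsets.

```python
def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into fixed-size character chunks; consecutive chunks share
    `overlap` characters (the rolling window)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reached the end
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

A larger `overlap` repeats more context across neighboring chunks. The cost is more chunks to embed and store.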

Embedding models

Embedding models are at the core of your retrieval. The quality of your embeddings heavily impacts your retrieval results [1, 4]. Usually, the higher the dimensionality of the generated embeddings, the higher the precision of your embeddings.

To get an idea of which alternative embedding models are available, you can look at the Massive Text Embedding Benchmark (MTEB) Leaderboard, which covers 164 text embedding models (at the time of this writing).

While you can use general-purpose embedding models out-of-the-box, in some cases it may make sense to fine-tune your embedding model to your specific use case to avoid out-of-domain issues later on [9]. According to experiments conducted by LlamaIndex, fine-tuning your embedding model can lead to a 5–10% performance increase in retrieval evaluation metrics [2].

Note that not all embedding models can be fine-tuned (e.g., OpenAI’s text-embedding-ada-002 can’t be fine-tuned at the moment).
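Comparing embedding models, or a base model against a fine-tuned one, requires a retrieval evaluation metric. The sketch below computes two common ones, hit rate@k and mean reciprocal rank (MRR), from ranked chunk IDs. It assumes you have a small labeled set that maps each query to the ID of its relevant chunk. The chunk IDs are made up for illustration.

```python
def hit_rate(results: list[list[str]], expected: list[str], k: int) -> float:
    """Share of queries whose relevant chunk ID appears in the top-k results."""
    hits = sum(1 for ranked, gold in zip(results, expected) if gold in ranked[:k])
    return hits / len(expected)


def mean_reciprocal_rank(results: list[list[str]], expected: list[str]) -> float:
    """Average of 1/rank of the relevant chunk (0 if it was not retrieved)."""
    total = 0.0
    for ranked, gold in zip(results, expected):
        if gold in ranked:
            total += 1.0 / (ranked.index(gold) + 1)
    return total / len(expected)


# Made-up ranked chunk IDs returned for three labeled queries.
results = [["c1", "c2", "c3"], ["c5", "c4", "c6"], ["c7", "c8", "c9"]]
expected = ["c1", "c4", "c0"]
print(hit_rate(results, expected, k=2))         # 2 of 3 queries hit
print(mean_reciprocal_rank(results, expected))  # (1 + 1/2 + 0) / 3 = 0.5
```

Running the same labeled queries through each candidate embedding model and comparing these numbers gives a like-for-like basis for choosing one model, or for deciding whether fine-tuning was worth it.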

Metadata

When you store vector embeddings in a vector database, some vector databases let you store them together with metadata (i.e., data that is not vectorized). Annotating vector embeddings with metadata can be helpful for additional post-processing of the search results, such as metadata filtering [1, 3, 8, 9]. For example, you could add metadata such as the date, chapter, or subchapter reference.
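The following sketch shows metadata filtering on a toy in-memory collection. Records are first restricted to those whose metadata matches every filter, and the survivors are then ranked by cosine similarity to the query vector. The record layout and `filtered_search` are assumptions for illustration. Real vector databases apply such filters inside their own query APIs and indexes rather than by brute force.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def filtered_search(records: list[dict], query_vec: list[float], filters: dict, k: int = 3) -> list[dict]:
    """Keep records whose metadata matches all filters, then rank by cosine similarity."""
    candidates = [
        r for r in records
        if all(r["metadata"].get(key) == value for key, value in filters.items())
    ]
    candidates.sort(key=lambda r: cosine(r["vector"], query_vec), reverse=True)
    return candidates[:k]


records = [
    {"text": "Intro to RAG", "vector": [1.0, 0.0], "metadata": {"chapter": 1, "year": 2023}},
    {"text": "Chunking tips", "vector": [0.9, 0.1], "metadata": {"chapter": 2, "year": 2023}},
    {"text": "Old draft", "vector": [1.0, 0.0], "metadata": {"chapter": 1, "year": 2021}},
]
hits = filtered_search(records, [1.0, 0.0], {"year": 2023})
print([h["text"] for h in hits])  # ['Intro to RAG', 'Chunking tips']
```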

Multi-indexing

If the metadata is not sufficient to separate different types of context logically, you may want to experiment with multiple indexes [1, 9]. For example, you can use different indexes for different types of documents. Note that you will have to incorporate some index routing at retrieval time [1, 9]. If you are interested in a deeper dive into metadata and separate collections, you might want to learn more about the concept of native multi-tenancy.
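Index routing can be as simple as mapping a query to an index name. The sketch below assumes a hypothetical keyword-based router over two toy indexes. In practice, routing is often done by an LLM or a trained classifier, and the indexes would be separate collections in a vector database.

```python
import re

# Hypothetical routing table: index name -> keywords that suggest it.
ROUTES = {
    "code_index": {"function", "error", "python", "api"},
    "docs_index": {"policy", "guide", "manual", "onboarding"},
}

# Toy indexes; in practice, separate collections in a vector database.
INDEXES = {
    "code_index": ["retrieve() raises ValueError on empty queries"],
    "docs_index": ["The onboarding guide covers access requests"],
}


def route(query: str, default: str = "docs_index") -> str:
    """Pick the index whose keywords overlap most with the query.
    Keyword matching stands in for an LLM or classifier router."""
    words = set(re.findall(r"\w+", query.lower()))
    scores = {name: len(words & keywords) for name, keywords in ROUTES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default  # fall back if nothing matches


def search(query: str) -> list[str]:
    # A real system would run a vector search inside the routed index.
    return INDEXES[route(query)]


print(route("Why does my Python function raise an error?"))  # code_index
```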

Indexing algorithms

To enable lightning-fast similarity search at scale, vector databases and vector indexing libraries use an Approximate Nearest Neighbor (ANN) search instead of a k-nearest neighbor (kNN) search. As the name suggests, ANN algorithms approximate the nearest neighbors and thus can be less precise than a kNN algorithm.

There are different ANN algorithms you could experiment with, such as Facebook Faiss (clustering), Spotify Annoy (trees), Google ScaNN (vector compression), and HNSWLIB (proximity graphs). Also, many of these ANN algorithms have parameters you could tune, such as ef, efConstruction, and maxConnections for HNSW [1].
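The sketch below shows the precision trade-off of ANN search. It compares exact kNN with a toy clustering-based ANN index, written in the spirit of an inverted-file (IVF) index. Vectors are grouped by their nearest centroid, and a query scans only the `nprobe` closest clusters. This is a simplification that uses random vectors as centroids instead of running k-means, and it is not how Faiss or any other library implements this. The `nprobe` parameter here plays a role roughly analogous to `ef` in HNSW: larger values trade speed for recall.

```python
import math
import random


def knn(vectors: list[list[float]], query: list[float], k: int) -> list[int]:
    """Exact k-nearest neighbors: compare the query against every vector."""
    return sorted(range(len(vectors)), key=lambda i: math.dist(vectors[i], query))[:k]


class ToyIVFIndex:
    """Clustering-based ANN sketch: each vector is assigned to its nearest centroid;
    a query only scans the `nprobe` clusters whose centroids are closest to it."""

    def __init__(self, vectors: list[list[float]], n_clusters: int, seed: int = 0):
        rng = random.Random(seed)
        self.vectors = vectors
        # Simplification: random data points as centroids instead of k-means.
        self.centroids = rng.sample(vectors, n_clusters)
        self.lists: list[list[int]] = [[] for _ in range(n_clusters)]
        for i, v in enumerate(vectors):
            nearest = min(range(n_clusters), key=lambda c: math.dist(self.centroids[c], v))
            self.lists[nearest].append(i)

    def search(self, query: list[float], k: int, nprobe: int = 1) -> list[int]:
        order = sorted(range(len(self.centroids)), key=lambda c: math.dist(self.centroids[c], query))
        candidates = [i for c in order[:nprobe] for i in self.lists[c]]
        return sorted(candidates, key=lambda i: math.dist(self.vectors[i], query))[:k]


def recall(approx: list[int], exact: list[int]) -> float:
    return len(set(approx) & set(exact)) / len(exact)


rng = random.Random(42)
data = [[rng.random() for _ in range(8)] for _ in range(500)]
query = [rng.random() for _ in range(8)]
exact = knn(data, query, k=10)
index = ToyIVFIndex(data, n_clusters=20)
for nprobe in (1, 5, 20):
    print(nprobe, recall(index.search(query, 10, nprobe), exact))
```

When `nprobe` equals the number of clusters, the search becomes an exhaustive scan and recall is 1.0. Smaller values scan fewer vectors and may miss true neighbors.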

Additionally, you can enable vector compression for these indexing algorithms. As with ANN algorithms, you will lose some precision with vector compression. However, depending on the choice of the vector compression algorithm and its tuning, you can optimize this as well.
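Scalar quantization is one simple form of vector compression: each float is mapped to a small integer code. The sketch below is a toy that assumes per-vector min/max scaling; production systems often use other schemes, such as product quantization. It shows that the reconstruction error is bounded by half a quantization step. That error is the precision you give up in exchange for storing, for example, 4 bits per dimension instead of a 32-bit float (plus the per-vector `lo` and `scale` values).

```python
def quantize(vec: list[float], bits: int = 8) -> tuple[list[int], float, float]:
    """Scalar quantization: map each float to an integer code in [0, 2**bits - 1]."""
    lo, hi = min(vec), max(vec)
    levels = 2 ** bits - 1
    scale = (hi - lo) / levels or 1.0  # avoid division by zero for constant vectors
    codes = [round((x - lo) / scale) for x in vec]
    return codes, lo, scale


def dequantize(codes: list[int], lo: float, scale: float) -> list[float]:
    """Approximate reconstruction of the original floats."""
    return [lo + c * scale for c in codes]


vec = [0.12, -0.53, 0.98, 0.07, -0.21]
codes, lo, scale = quantize(vec, bits=4)
approx = dequantize(codes, lo, scale)
max_error = max(abs(a - b) for a, b in zip(vec, approx))
print(codes, max_error)  # the error is at most scale / 2 (up to float rounding)
```

Fewer `bits` means a larger `scale`, which gives more compression and a larger worst-case error.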

However, in practice, these parameters are already tuned by the research teams of vector databases and vector indexing libraries during benchmarking experiments, not by developers of RAG systems. That said, if you want to experiment with these parameters to squeeze out the last bits of performance, I recommend this article as a starting point:

The main components of the RAG pipeline are the retrieval and the generative components. This section mainly discusses strategies to improve the retrieval (query transformations, retrieval parameters, advanced retrieval strategies, and re-ranking models), as this is the more impactful of the two components. But it also briefly touches on some strategies to improve the generation (LLMs and prompt engineering).

Query transformations

Since the search query used to retrieve additional context in a RAG pipeline is also embedded into the vector space, its phrasing can also impact the search results. Thus, if your search query doesn’t produce satisfactory search results, you can experiment with various query transformation techniques [5, 8, 9], such as:

- Rephrasing: Use an LLM to rephrase the query and try again.

- Hypothetical Document Embeddings (HyDE): Use an LLM to generate a hypothetical response to the search query and use both for retrieval.

- Sub-queries: Break down longer queries into multiple shorter queries.
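The three query transformations can be sketched as thin wrappers around an LLM call. `llm` here is a hypothetical callable that takes a prompt string and returns a completion, and the prompt wording is an assumption. For HyDE, one option is to embed both texts and average the vectors (`mean_vector`). Another option is to search with each text separately and merge the results.

```python
from typing import Callable, List

# Hypothetical LLM interface: prompt in, completion out. Plug in your own client.
LLM = Callable[[str], str]


def rephrase(query: str, llm: LLM) -> str:
    return llm(f"Rephrase this search query to be clearer and more specific:\n{query}")


def hyde_texts(query: str, llm: LLM) -> List[str]:
    """HyDE: generate a hypothetical answer and retrieve with it and the query."""
    hypothetical_answer = llm(f"Write a short passage that answers this question:\n{query}")
    return [query, hypothetical_answer]


def sub_queries(query: str, llm: LLM) -> List[str]:
    completion = llm(f"Break this question into simpler sub-questions, one per line:\n{query}")
    # Skip empty lines; strip surrounding list markers ("-") and spaces.
    return [line.strip("- ").strip() for line in completion.splitlines() if line.strip()]


def mean_vector(vectors: List[List[float]]) -> List[float]:
    """One way to combine HyDE embeddings: average them dimension-wise."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def fake_llm(prompt: str) -> str:
    # Canned responses so the sketch runs without any API.
    if "sub-questions" in prompt:
        return "- What is RAG?\n- What is chunking?"
    return "RAG combines retrieval with generation."


print(sub_queries("What are RAG and chunking?", fake_llm))
```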

Retrieval parameters

Retrieval is an essential component of the RAG pipeline. The first consideration is whether semantic search will be sufficient for your use case or whether you want to experiment with hybrid search.

In the latter case, you need to experiment with weighting the aggregation of sparse and dense retrieval methods in hybrid search [1, 4, 9]. Thus, tuning the parameter alpha, which controls the weighting between semantic (alpha = 1) and keyword-based search (alpha = 0), becomes necessary.
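The effect of `alpha` can be illustrated with a simple score fusion. This sketch assumes each score list is min-max normalized before mixing. That is one of several possible fusion methods, and vector databases that support hybrid search implement their own. Dense scores come from semantic search, and sparse scores come from a keyword method such as BM25. The scores below are made up.

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1] so dense and sparse scores are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(dense: dict[str, float], sparse: dict[str, float], alpha: float) -> list[str]:
    """alpha = 1 -> pure semantic (dense) search; alpha = 0 -> pure keyword (sparse) search."""
    dense_n, sparse_n = min_max(dense), min_max(sparse)
    docs = set(dense) | set(sparse)
    # A document missing from one result list contributes 0 from that list.
    fused = {d: alpha * dense_n.get(d, 0.0) + (1 - alpha) * sparse_n.get(d, 0.0) for d in docs}
    # Sort by fused score (descending), breaking ties by document ID for determinism.
    return sorted(docs, key=lambda d: (-fused[d], d))


dense = {"a": 0.82, "b": 0.75, "c": 0.40}  # e.g., cosine similarities (made up)
sparse = {"a": 1.2, "b": 7.5, "d": 3.0}    # e.g., BM25 scores (made up)
for alpha in (0.0, 0.5, 1.0):
    print(alpha, hybrid_rank(dense, sparse, alpha))
```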

Also, the number of search results to retrieve plays an essential role. The number of retrieved contexts will impact the length of the used context window (see Prompt engineering). Also, if you are using a re-ranking model, you need to consider how many contexts to input to the model (see Re-ranking models).

Note that while the similarity measure for semantic search is a parameter you can change, you should not experiment with it but instead set it according to the embedding model you use (e.g., text-embedding-ada-002 supports cosine similarity, while multi-qa-MiniLM-l6-cos-v1 supports cosine similarity, dot product, and Euclidean distance).
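One reason the choice of similarity measure is tied to the embedding model: for unit-length vectors, cosine similarity equals the dot product, and the squared Euclidean distance satisfies ||a - b||^2 = 2 - 2 * cos(a, b). All three therefore produce the same ranking. The sketch below checks this on made-up 3-dimensional vectors. For embeddings that are not normalized, the measures can disagree, so it is worth checking what the model was trained for.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: list[float], b: list[float]) -> float:
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


query = normalize([0.2, 0.9, 0.4])
docs = [normalize(v) for v in ([0.1, 1.0, 0.3], [0.9, 0.1, 0.2], [0.5, 0.5, 0.5])]

by_cos = sorted(range(3), key=lambda i: -cosine(query, docs[i]))
by_dot = sorted(range(3), key=lambda i: -dot(query, docs[i]))
by_l2 = sorted(range(3), key=lambda i: math.dist(query, docs[i]))
print(by_cos, by_dot, by_l2)  # identical rankings for unit-length vectors
```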

Advanced retrieval strategies

This section could technically be its own article. For this overview, we will keep it as concise as possible. For an in-depth explanation of the following techniques, I recommend this DeepLearning.AI course:

The underlying idea of this section is that the chunks used for retrieval don’t necessarily have to be the same chunks used for generation. Ideally, you would embed smaller chunks for retrieval (see Chunking) but retrieve bigger contexts [7].

- Sentence-window retrieval: Do not just retrieve the relevant sentence, but also the window of appropriate sentences before and after the retrieved one.

- Auto-merging retrieval: The documents are organized in a tree-like structure. At query time, separate but related smaller chunks can be consolidated into a larger context.
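Both strategies can be sketched in plain Python. The sentence-window function scores each sentence against the query and returns the best sentence together with `window` neighbors on each side. Word overlap stands in for embedding similarity. The auto-merging function assumes a hypothetical parent/child chunk mapping and an assumed rule: if more than `threshold` of a parent’s children were retrieved, the parent is returned instead. Frameworks such as LlamaIndex provide their own implementations, and this sketch is not their API.

```python
import re


def split_sentences(text: str) -> list[str]:
    # Naive splitter on ., ! or ? followed by whitespace; real splitters handle abbreviations.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def overlap_score(query: str, sentence: str) -> int:
    # Stand-in for embedding similarity: number of shared lowercase words.
    return len(set(re.findall(r"\w+", query.lower())) & set(re.findall(r"\w+", sentence.lower())))


def sentence_window_retrieve(query: str, text: str, window: int = 1) -> str:
    """Find the best-matching sentence, then return it with its neighbors."""
    sentences = split_sentences(text)
    best = max(range(len(sentences)), key=lambda i: overlap_score(query, sentences[i]))
    lo, hi = max(0, best - window), min(len(sentences), best + window + 1)
    return " ".join(sentences[lo:hi])


def auto_merge(retrieved_ids: list[str], parent_of: dict[str, str],
               children_of: dict[str, list[str]], threshold: float = 0.5) -> list[str]:
    """Replace child chunks by their parent when more than `threshold` of its children were retrieved."""
    retrieved = set(retrieved_ids)
    merged = set()
    for child in retrieved_ids:
        parent = parent_of[child]
        share = len(retrieved & set(children_of[parent])) / len(children_of[parent])
        merged.add(parent if share > threshold else child)
    return sorted(merged)


text = ("RAG has two stages. Ingestion prepares the data. Chunking splits documents into pieces. "
        "Retrieval finds relevant chunks. Generation writes the answer.")
print(sentence_window_retrieve("How does chunking split documents?", text, window=1))
```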

Re-ranking models

While semantic search retrieves context based on its semantic similarity to the search query, “most similar” doesn’t necessarily mean “most relevant”. Re-ranking models, such as Cohere’s Rerank model, can help eliminate irrelevant search results by computing a score for the relevance of each retrieved context to the query [1, 9].


If you are using a re-ranker model, you may need to re-tune the number of search results for the input of the re-ranker and how many of the re-ranked results you want to feed into the LLM.
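The two parameters mentioned above appear in this two-stage sketch: `top_n` is how many results go into the re-ranker, and `top_k` is how many come out. `retrieve` and `score` are hypothetical callables. In a real pipeline, `retrieve` would query the vector database and `score` would call a re-ranking model such as a cross-encoder. Here both are toy stand-ins.

```python
from typing import Callable, List

Retriever = Callable[[str, int], List[str]]  # (query, n) -> candidate documents
Scorer = Callable[[str, str], float]         # (query, document) -> relevance score


def retrieve_then_rerank(query: str, retrieve: Retriever, score: Scorer,
                         top_n: int = 20, top_k: int = 3) -> List[str]:
    candidates = retrieve(query, top_n)  # stage 1: fast vector search
    # Stage 2: re-score each (query, document) pair and keep only the best for the LLM.
    ranked = sorted(candidates, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


corpus = ["cats purr", "rerankers score query-document pairs", "dogs bark",
          "vector search finds similar text"]


def toy_retrieve(query: str, n: int) -> List[str]:
    return corpus[:n]  # pretend these are the top-n hits from a vector database


def toy_score(query: str, doc: str) -> float:
    # Word overlap as a stand-in for a re-ranking model's relevance score.
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


print(retrieve_then_rerank("how do rerankers score pairs", toy_retrieve, toy_score, top_n=4, top_k=1))
```

If `top_n` is too small, the relevant document never reaches the re-ranker. With `top_n=1`, this example returns "cats purr".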

As with the embedding models, you may want to experiment with fine-tuning the re-ranker to your specific use case.

LLMs

The LLM is the core component for generating the response. As with the embedding models, there is a wide range of LLMs you can choose from depending on your requirements, such as open vs. proprietary models, inference costs, context length, etc. [1]

As with the embedding models or re-ranking models, you may want to experiment with fine-tuning the LLM to your specific use case to incorporate specific wording or tone of voice.

Prompt engineering

How you phrase or engineer your prompt will significantly impact the LLM’s completion [1, 8, 9]. For example, you could experiment with grounding instructions such as:

Please base your answer only on the search results and nothing else!

Very important! Your answer MUST be grounded in the search results provided.

Please explain why your answer is grounded in the search results!

Additionally, using few-shot examples in your prompt can improve the quality of the completions.

As mentioned in Retrieval parameters, the number of contexts fed into the prompt is a parameter you should experiment with [1]. While the performance of your RAG pipeline can improve with more relevant context, you can also run into a “Lost in the Middle” [6] effect, where the LLM does not recognize relevant context as such if it is placed in the middle of many contexts.
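Here is a minimal prompt builder that exposes the number of contexts as a parameter and can optionally add few-shot examples. Going beyond the article, it also reorders the selected contexts so that the most relevant ones sit at the start and end and the least relevant ones in the middle. This is one possible mitigation for the “Lost in the Middle” effect, not a guaranteed fix. The function names and prompt layout are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def reorder_for_long_context(contexts: List[str]) -> List[str]:
    """Assumes `contexts` is sorted by relevance (most relevant first) and places
    the most relevant items at both ends and the least relevant in the middle."""
    front, back = [], []
    for i, ctx in enumerate(contexts):
        (front if i % 2 == 0 else back).append(ctx)
    return front + back[::-1]


def build_prompt(question: str, contexts: List[str], num_contexts: int = 3,
                 few_shot: Sequence[Tuple[str, str]] = ()) -> str:
    selected = reorder_for_long_context(contexts[:num_contexts])  # tunable parameter
    parts = ["Please base your answer only on the search results and nothing else!"]
    for example_q, example_a in few_shot:  # optional few-shot examples
        parts.append(f"Example question: {example_q}\nExample answer: {example_a}")
    parts.append("Search results:\n" + "\n".join(f"- {c}" for c in selected))
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


print(reorder_for_long_context(["c1", "c2", "c3", "c4", "c5"]))
# ['c1', 'c3', 'c5', 'c4', 'c2']
```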

As more and more developers gain experience with prototyping RAG pipelines, it becomes more important to discuss strategies for bringing RAG pipelines to production-ready performance. This article discussed different “hyperparameters” and other knobs you can tune in a RAG pipeline, grouped by the relevant stages.

This article covered the following strategies in the ingestion stage:

- Data cleaning: Ensure data is clean and correct.

- Chunking: Choice of chunking technique, chunk size (chunk_size), and chunk overlap (overlap).

- Embedding models: Choice of the embedding model, incl. dimensionality, and whether to fine-tune it.

- Metadata: Whether to use metadata and choice of metadata.

- Multi-indexing: Decide whether to use multiple indexes for different data collections.

- Indexing algorithms: Choice and tuning of ANN and vector compression algorithms, which can be tuned but are usually not tuned by practitioners.

And the following strategies in the inferencing stage (retrieval and generation):

- Query transformations: Experiment with rephrasing, HyDE, or sub-queries.

- Retrieval parameters: Choice of search technique (alpha if you have hybrid search enabled) and the number of retrieved search results.

- Advanced retrieval strategies: Whether to use advanced retrieval strategies, such as sentence-window or auto-merging retrieval.

- Re-ranking models: Whether to use a re-ranking model, choice of re-ranking model, number of search results to input into the re-ranking model, and whether to fine-tune the re-ranking model.

- LLMs: Choice of LLM and whether to fine-tune it.

- Prompt engineering: Experiment with different phrasing and few-shot examples.