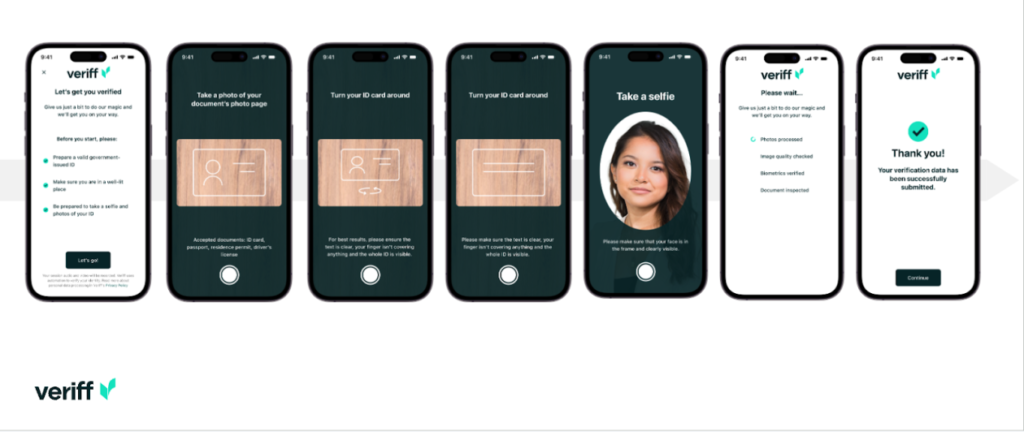

How Veriff decreased deployment time by 80% using Amazon SageMaker multi-model endpoints

Veriff is an identity verification platform partner for innovative growth-driven organizations, including pioneers in financial services, FinTech, crypto, gaming, mobility, and online marketplaces. They provide advanced technology that combines AI-powered automation with human feedback, deep insights, and expertise.

Veriff delivers a proven infrastructure that enables their customers to trust the identities and personal attributes of their users across all the relevant moments in their customer journey. Veriff is trusted by customers such as Bolt, Deel, Monese, Starship, Super Awesome, Trustpilot, and Wise.

As an AI-powered solution, Veriff needs to create and run dozens of machine learning (ML) models in a cost-effective way. These models range from lightweight tree-based models to deep learning computer vision models, which need to run on GPUs to achieve low latency and improve the user experience. Veriff is also currently adding more products to its offering, targeting a hyper-personalized solution for its customers. Serving different models for different customers adds to the need for a scalable model serving solution.

In this post, we show you how Veriff standardized their model deployment workflow using Amazon SageMaker, reducing costs and development time.

Infrastructure and development challenges

Veriff’s backend architecture is based on a microservices pattern, with services running on different Kubernetes clusters hosted on AWS infrastructure. This approach was initially used for all company services, including microservices that run expensive computer vision ML models.

Some of these models required deployment on GPU instances. Conscious of the comparatively higher cost of GPU-backed instance types, Veriff developed a custom solution on Kubernetes to share a given GPU’s resources between different service replicas. A single GPU typically has enough VRAM to hold several of Veriff’s computer vision models in memory.

Although the solution did alleviate GPU costs, it also came with the constraint that data scientists needed to indicate beforehand how much GPU memory their model would require. Furthermore, DevOps engineers were burdened with manually provisioning GPU instances in response to demand patterns. This caused operational overhead and overprovisioning of instances, which resulted in a suboptimal cost profile.

Apart from GPU provisioning, this setup also required data scientists to build a REST API wrapper for each model, which was needed to provide a generic interface for other company services to consume, and to encapsulate preprocessing and postprocessing of model data. These APIs required production-grade code, which made it challenging for data scientists to productionize models.

Veriff’s data science platform team looked for alternatives to this approach. The main objective was to support the company’s data scientists with a better transition from research to production by providing simpler deployment pipelines. The secondary objective was to reduce the operational costs of provisioning GPU instances.

Solution overview

Veriff required a new solution that solved two problems:

- Allow building REST API wrappers around ML models with ease

- Allow managing provisioned GPU instance capacity optimally and, if possible, automatically

Ultimately, the ML platform team converged on the decision to use SageMaker multi-model endpoints (MMEs). This decision was driven by MME’s support for NVIDIA’s Triton Inference Server (an ML-focused server that makes it easy to wrap models as REST APIs; Veriff was also already experimenting with Triton), as well as its capability to natively manage the auto scaling of GPU instances through simple auto scaling policies.

Two MMEs were created at Veriff, one for staging and one for production. This approach allows them to run testing steps in a staging environment without affecting the production models.

SageMaker MMEs

SageMaker is a fully managed service that provides developers and data scientists the ability to build, train, and deploy ML models quickly. SageMaker MMEs provide a scalable and cost-effective solution for deploying a large number of models for real-time inference. MMEs use a shared serving container and a fleet of resources that can use accelerated instances such as GPUs to host all of your models. This reduces hosting costs by maximizing endpoint utilization compared to using single-model endpoints. It also reduces deployment overhead because SageMaker manages loading and unloading models in memory and scaling them based on the endpoint’s traffic patterns. In addition, all SageMaker real-time endpoints benefit from built-in capabilities to manage and monitor models, such as shadow variants, auto scaling, and native integration with Amazon CloudWatch (for more information, refer to CloudWatch Metrics for Multi-Model Endpoint Deployments).
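As a rough illustration of how a client addresses one model among many on an MME, the following Python sketch builds the arguments for the SageMaker runtime `invoke_endpoint` call, where `TargetModel` names the model archive that should serve the request. The endpoint name, archive name, and tensor name are hypothetical, and the body assumes a Triton container that accepts the KServe v2 JSON inference format. It is not Veriff’s client code.

```python
import json


def build_mme_request(endpoint_name, target_model, tensor_name, shape, data):
    """Build keyword arguments for the SageMaker runtime invoke_endpoint call.

    TargetModel selects which archive under the MME's S3 location serves
    the request. The body layout (KServe v2 JSON) is an assumption.
    """
    payload = {
        "inputs": [
            {
                "name": tensor_name,  # hypothetical input tensor name
                "shape": shape,
                "datatype": "FP32",
                "data": data,
            }
        ]
    }
    return {
        "EndpointName": endpoint_name,
        "TargetModel": target_model,
        # Content type used in AWS Triton examples; treat it as an assumption.
        "ContentType": "application/octet-stream",
        "Body": json.dumps(payload),
    }


def invoke(request_kwargs):
    """Send the request. Needs boto3 and AWS credentials, so it is not run here."""
    import boto3  # imported lazily so the builder works without AWS access

    client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(**request_kwargs)
    return json.loads(response["Body"].read())


request = build_mme_request(
    endpoint_name="staging-mme",  # hypothetical endpoint name
    target_model="screen_detection_pipeline_1.tar.gz",  # hypothetical archive
    tensor_name="IMAGE",
    shape=[1, 3],
    data=[0.1, 0.2, 0.3],
)
print(request["TargetModel"])
```

Because the model is chosen per request, the same endpoint can serve every archive stored under its S3 location without any redeployment.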

Custom Triton ensemble models

There were several reasons why Veriff decided to use Triton Inference Server, the main ones being:

- It allows data scientists to build REST APIs from models by arranging model artifact files in a standard directory format (no code solution)

- It’s compatible with all major AI frameworks (PyTorch, TensorFlow, XGBoost, and more)

- It provides ML-specific low-level and server optimizations such as dynamic batching of requests

Using Triton allows data scientists to deploy models with ease because they only need to build formatted model repositories instead of writing code to build REST APIs (Triton also supports Python models if custom inference logic is required). This decreases model deployment time and gives data scientists more time to focus on building models instead of deploying them.

Another important feature of Triton is that it allows you to build model ensembles, which are groups of models that are chained together. These ensembles can be run as if they were a single Triton model. Veriff currently employs this feature to deploy preprocessing and postprocessing logic with each ML model using Python models (as mentioned earlier), ensuring that there are no mismatches in the input data or model output when models are used in production.
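To make the idea of a Python model step more concrete, here is a minimal sketch of a postprocessing step written against the Triton Python backend interface (`TritonPythonModel` with an `execute` method). The tensor names `SCORES` and `LABEL` and the class labels are hypothetical, and the logic is a stand-in, not Veriff’s actual postprocessing.

```python
try:
    # Both modules are available inside the Triton Python backend.
    import numpy as np
    import triton_python_backend_utils as pb_utils
except ImportError:  # lets the pure helper below be tested outside Triton
    np = pb_utils = None

LABELS = ["no_screen", "screen"]  # hypothetical class names


def pick_label(scores, labels=LABELS):
    """Return the label with the highest score (the postprocessing logic)."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best_index]


class TritonPythonModel:
    """Postprocessing step. Triton calls execute() with a list of requests."""

    def execute(self, requests):
        responses = []
        for request in requests:
            # "SCORES" and "LABEL" are hypothetical tensor names that would
            # have to match the step's configuration file.
            scores = pb_utils.get_input_tensor_by_name(request, "SCORES").as_numpy()
            label = pick_label(scores.ravel().tolist())
            out = pb_utils.Tensor("LABEL", np.array([label], dtype=object))
            responses.append(pb_utils.InferenceResponse(output_tensors=[out]))
        return responses


print(pick_label([0.2, 0.8]))  # prints "screen"
```

Keeping the logic in a plain function such as `pick_label` means it can be unit tested without a running server, while the class only adapts it to Triton’s request and response objects.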

The following is what a typical Triton model repository looks like for this workload:

The model.py file contains preprocessing and postprocessing code. The trained model weights are in the screen_detection_inferencer directory, under model version 1 (the model is in ONNX format in this example, but it can also be in TensorFlow, PyTorch, or other formats). The ensemble model definition is in the screen_detection_pipeline directory, where inputs and outputs between steps are mapped in a configuration file.
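A layout consistent with this description can be sketched in Python as follows. Only screen_detection_inferencer, screen_detection_pipeline, model.py, and model version 1 come from the text; the preprocessing and postprocessing directory names, the file name model.onnx, and the `.keep` placeholder are assumptions made for illustration.

```python
import tempfile
from pathlib import Path

# Names other than screen_detection_inferencer and screen_detection_pipeline
# are assumptions for illustration.
LAYOUT = [
    "screen_detection_preprocessing/1/model.py",
    "screen_detection_preprocessing/config.pbtxt",
    "screen_detection_inferencer/1/model.onnx",
    "screen_detection_inferencer/config.pbtxt",
    "screen_detection_postprocessing/1/model.py",
    "screen_detection_postprocessing/config.pbtxt",
    "screen_detection_pipeline/1/.keep",  # ensemble version folder, no weights
    "screen_detection_pipeline/config.pbtxt",
]


def create_skeleton(root, layout=LAYOUT):
    """Create an empty placeholder file for every path in the layout."""
    root = Path(root)
    for relative in layout:
        path = root / relative
        path.parent.mkdir(parents=True, exist_ok=True)
        path.touch()
    return sorted(
        p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()
    )


with tempfile.TemporaryDirectory() as tmp:
    created = create_skeleton(tmp)
    print("\n".join(created))
```

Each model directory holds a numbered version folder plus a config.pbtxt file, which is the convention Triton uses to discover models.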

Additional dependencies needed to run the Python models are listed in a requirements.txt file, and need to be conda-packed to build a Conda environment (python_env.tar.gz). For more information, refer to Managing Python Runtime and Libraries. Also, config files for Python steps need to point to python_env.tar.gz using the EXECUTION_ENV_PATH directive.
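As an illustration of the EXECUTION_ENV_PATH directive, the sketch below renders a minimal configuration fragment for a Python step. The step name is hypothetical, input and output tensor definitions are left out, and placing the archive inside the step’s own model directory is an assumption.

```python
def python_step_config(name, env_archive="python_env.tar.gz"):
    """Render a minimal config.pbtxt fragment for a Python backend step.

    $$TRITON_MODEL_DIRECTORY is expanded by Triton to the step's model folder.
    Input and output tensor definitions are omitted for brevity.
    """
    return (
        f'name: "{name}"\n'
        'backend: "python"\n'
        "parameters: {\n"
        '  key: "EXECUTION_ENV_PATH",\n'
        f'  value: {{string_value: "$$TRITON_MODEL_DIRECTORY/{env_archive}"}}\n'
        "}\n"
    )


# "screen_detection_preprocessing" is a hypothetical step name.
config_text = python_step_config("screen_detection_preprocessing")
print(config_text)
```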

The model folder then needs to be TAR compressed and renamed using model_version.txt. Finally, the resulting <model_name>_<model_version>.tar.gz file is copied to the Amazon Simple Storage Service (Amazon S3) bucket connected to the MME, allowing SageMaker to detect and serve the model.
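The packaging step could look roughly like the following sketch. It assumes that model_version.txt sits inside the model folder and contains only the version string, which the text does not confirm; the bucket and prefix passed to `upload` would be your own.

```python
import tarfile
import tempfile
from pathlib import Path


def package_model(model_dir, output_dir):
    """Create <model_name>_<model_version>.tar.gz from a model folder.

    Assumes model_version.txt is in the folder and holds only the version.
    """
    model_dir = Path(model_dir)
    version = (model_dir / "model_version.txt").read_text().strip()
    archive = Path(output_dir) / f"{model_dir.name}_{version}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        # Keep the folder name as the top-level entry inside the archive.
        tar.add(model_dir, arcname=model_dir.name)
    return archive


def upload(archive, bucket, prefix):
    """Copy the archive to S3. Needs boto3 and credentials, so not run here."""
    import boto3

    boto3.client("s3").upload_file(str(archive), bucket, f"{prefix}/{archive.name}")


with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp) / "screen_detection_pipeline"
    folder.mkdir()
    (folder / "model_version.txt").write_text("1\n")
    archive_name = package_model(folder, tmp).name
    print(archive_name)  # prints "screen_detection_pipeline_1.tar.gz"
```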

Model versioning and continuous deployment

As the previous section made apparent, building a Triton model repository is straightforward. However, running all the necessary steps to deploy it is tedious and error prone when done manually. To overcome this, Veriff built a monorepo containing all models to be deployed to MMEs, where data scientists collaborate in a Gitflow-like approach. This monorepo has the following features:

- It’s managed using Pants.

- Code quality tools such as Black and MyPy are applied using Pants.

- Unit tests are defined for each model, which check that the model output is the expected output for a given model input.

- Model weights are stored alongside model repositories. These weights can be large binary files, so DVC is used to sync them with Git in a versioned manner.

This monorepo is integrated with a continuous integration (CI) tool. For every new push to the repo or new model, the following steps are run:

- Pass the code quality check.

- Download the model weights.

- Build the Conda environment.

- Spin up a Triton server using the Conda environment and use it to process requests defined in unit tests.

- Build the final model TAR file (<model_name>_<model_version>.tar.gz).
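The step that sends unit-test requests to a local Triton server might be sketched as follows. The sketch assumes the server listens on Triton’s default HTTP port (8000) and speaks the KServe v2 inference protocol; the tensor name, tolerance, and helper names are hypothetical, and Veriff’s actual test harness is not described in this post.

```python
import json
import urllib.request


def infer_request(model_name, inputs, host="http://localhost:8000"):
    """Build an HTTP request for Triton's KServe v2 inference endpoint."""
    url = f"{host}/v2/models/{model_name}/infer"
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def outputs_match(response, expected, tolerance=1e-4):
    """Compare the first output tensor of a response with expected values."""
    actual = response["outputs"][0]["data"]
    return len(actual) == len(expected) and all(
        abs(a - e) <= tolerance for a, e in zip(actual, expected)
    )


def run_case(model_name, inputs, expected):
    """Send one test case to a running server (not executed in this sketch)."""
    with urllib.request.urlopen(infer_request(model_name, inputs)) as reply:
        return outputs_match(json.load(reply), expected)


# Offline check of the comparison logic with a canned response.
canned = {"outputs": [{"name": "SCORES", "data": [0.2, 0.8]}]}
print(outputs_match(canned, [0.2, 0.8]))  # prints True
```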

These steps make sure that models have the quality required for deployment, so for every push to a repo branch, the resulting TAR file is copied (in another CI step) to the staging S3 bucket. When pushes are made to the main branch, the model file is copied to the production S3 bucket. The following diagram depicts this CI/CD system.
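Separately from the diagram, the branch-to-bucket rule described in this section can be expressed in a few lines of Python. The bucket names are hypothetical, and the real CI step may be implemented quite differently.

```python
STAGING_BUCKET = "example-mme-staging"  # hypothetical bucket name
PRODUCTION_BUCKET = "example-mme-production"  # hypothetical bucket name


def target_bucket(branch, main_branch="main"):
    """Pushes to the main branch go to production; all others go to staging."""
    return PRODUCTION_BUCKET if branch == main_branch else STAGING_BUCKET


def destination(branch, model_name, model_version):
    """Return the S3 URI the CI step would copy the model archive to."""
    return f"s3://{target_bucket(branch)}/{model_name}_{model_version}.tar.gz"


print(destination("feature/new-model", "screen_detection_pipeline", "2"))
print(destination("main", "screen_detection_pipeline", "2"))
```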

Cost and deployment speed benefits

Using MMEs allows Veriff to use a monorepo approach to deploy models to production. In summary, Veriff’s new model deployment workflow consists of the following steps:

- Create a branch in the monorepo with the new model or model version.

- Define and run unit tests on a development machine.

- Push the branch when the model is ready to be tested in the staging environment.

- Merge the branch into main when the model is ready to be used in production.

With this new solution in place, deploying a model at Veriff is a straightforward part of the development process. New model development time has decreased from 10 days to an average of 2 days.

The managed infrastructure provisioning and auto scaling features of SageMaker brought Veriff added benefits. They used the InvocationsPerInstance CloudWatch metric to scale according to traffic patterns, saving on costs without sacrificing reliability. To define the threshold value for the metric, they performed load testing on the staging endpoint to find the best trade-off between latency and cost.
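A target tracking policy on this metric can be set up through Application Auto Scaling. The sketch below builds the arguments for `register_scalable_target` and `put_scaling_policy`, using the predefined metric type `SageMakerVariantInvocationsPerInstance`. The endpoint name, variant name, capacity limits, and target value are hypothetical placeholders; Veriff’s actual threshold came from their load tests and is not given in this post.

```python
def scaling_requests(endpoint_name, variant_name, target_invocations,
                     min_instances=1, max_instances=4):
    """Build arguments for Application Auto Scaling target tracking."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = dict(common, MinCapacity=min_instances, MaxCapacity=max_instances)
    policy = dict(
        common,
        PolicyName=f"{endpoint_name}-invocations-per-instance",
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            # Threshold found through load testing; the value is a placeholder.
            "TargetValue": float(target_invocations),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    )
    return target, policy


def apply_scaling(target, policy):
    """Register the policy. Needs boto3 and credentials, so not run here."""
    import boto3

    client = boto3.client("application-autoscaling")
    client.register_scalable_target(**target)
    client.put_scaling_policy(**policy)


# All argument values below are hypothetical.
target, policy = scaling_requests("production-mme", "AllTraffic", 100)
print(policy["ResourceId"])
```

With target tracking, the service adds or removes instances to keep the average invocations per instance near the chosen value, which is why picking that value from load tests matters.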

After deploying seven production models to MMEs and analyzing spend, Veriff reported a 75% cost reduction in GPU model serving compared to the original Kubernetes-based solution. Operational costs were reduced as well, because the burden of provisioning instances manually was lifted from the company’s DevOps engineers.

Conclusion

In this post, we reviewed why Veriff chose SageMaker MMEs over self-managed model deployment on Kubernetes. SageMaker takes on the undifferentiated heavy lifting, allowing Veriff to decrease model development time, increase engineering efficiency, and dramatically lower the cost for real-time inference while maintaining the performance needed for their business-critical operations. Finally, we showcased Veriff’s simple yet effective model deployment CI/CD pipeline and model versioning mechanism, which can be used as a reference implementation of combining software development best practices and SageMaker MMEs. You can find code samples on hosting multiple models using SageMaker MMEs on GitHub.

About the Authors

Ricard Borràs is a Senior Machine Learning at Veriff, where he is leading MLOps efforts in the company. He helps data scientists build faster and better AI/ML products by building a Data Science Platform at the company, and combining several open source solutions with AWS services.

João Moura is an AI/ML Specialist Solutions Architect at AWS, based in Spain. He helps customers with large-scale training and inference optimization of deep learning models, and more broadly with building large-scale ML platforms on AWS.

Miguel Ferreira works as a Sr. Solutions Architect at AWS based in Helsinki, Finland. AI/ML has been a lifelong interest and he has helped multiple customers integrate Amazon SageMaker into their ML workflows.
