Fasten Your Seatbelt: Falcon 180B is Here!

Image by Author

A few months ago, we learned about Falcon LLM, which was developed by the Technology Innovation Institute (TII), part of the Abu Dhabi Government's Advanced Technology Research Council. Fast forward a few months, and they've just gotten even bigger and better: literally, much bigger.

Falcon 180B is the largest openly available language model, with 180 billion parameters. Yes, that's right, you read correctly: 180 billion. It was trained on 3.5 trillion tokens using TII's RefinedWeb dataset, which represents the longest single-epoch pre-training for an open model.

But it's not just the size of the model that we're going to focus on here; it's also the power and potential behind it. Falcon 180B is setting new standards for large language models (LLMs) when it comes to capabilities.

There are two models available:

The Falcon-180B Base model is a causal decoder-only model. I'd recommend using this model if you want to fine-tune it further on your own data.

The Falcon-180B-Chat model is similar to the base version but goes a step further: it has been fine-tuned on a mix of the Ultrachat, Platypus, and Airoboros instruction (chat) datasets.

Training

Falcon 180B is a scaled-up version of its predecessor, Falcon 40B, and builds on its innovations such as multi-query attention for improved scalability. The model was trained on 3.5 trillion tokens using up to 4096 GPUs on Amazon SageMaker, which comes to roughly 7,000,000 GPU hours. For comparison, Falcon 180B is about 2.5x larger than Llama 2 and was trained with 4x more compute.

Wow, that’s rather a lot.
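To get a feel for these numbers, here is a rough back-of-the-envelope check. It is a sketch built on assumptions, not TII's own accounting: it uses the widely cited rule of thumb that training a dense transformer costs about 6 × parameters × tokens FLOPs, takes Llama 2's training set as roughly 2 trillion tokens (a figure from Meta's Llama 2 paper, not from this article), and assumes all 4096 GPUs ran for the entire job, which is an idealization.

```python
# Back-of-the-envelope training figures for Falcon 180B vs Llama 2 70B.
# Assumption: training FLOPs ~= 6 * parameters * tokens (a common approximation
# that ignores attention FLOPs, restarts and other overheads).

FALCON_PARAMS = 180e9
FALCON_TOKENS = 3.5e12
LLAMA2_PARAMS = 70e9
LLAMA2_TOKENS = 2.0e12  # assumption: ~2T pre-training tokens reported for Llama 2

GPU_HOURS = 7_000_000
NUM_GPUS = 4096  # assumption: every GPU busy for the whole run


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute in FLOPs."""
    return 6 * params * tokens


falcon_flops = training_flops(FALCON_PARAMS, FALCON_TOKENS)
llama_flops = training_flops(LLAMA2_PARAMS, LLAMA2_TOKENS)

size_ratio = FALCON_PARAMS / LLAMA2_PARAMS
compute_ratio = falcon_flops / llama_flops
wall_clock_days = GPU_HOURS / NUM_GPUS / 24

print(f"Falcon 180B training compute: ~{falcon_flops:.2e} FLOPs")
print(f"Size ratio vs Llama 2 70B:     ~{size_ratio:.2f}x")
print(f"Compute ratio vs Llama 2 70B:  ~{compute_ratio:.1f}x")
print(f"Idealized wall-clock time:     ~{wall_clock_days:.0f} days")
```

The ratios land in the same ballpark as the size and compute comparisons quoted above; treat the output as an order-of-magnitude sanity check rather than an exact figure.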

Data

The dataset used for Falcon 180B was predominantly sourced (85%) from RefinedWeb, with the rest made up of a mix of curated data such as technical papers, conversations, and a small amount of code.
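To put the 85% figure in concrete terms, here is that split applied to the 3.5 trillion training tokens. This is a minimal sketch that assumes the percentage refers to a share of tokens (the article does not say so explicitly), and it lumps all non-RefinedWeb sources together because their individual shares are not given here.

```python
# Split of Falcon 180B's 3.5T training tokens by source.
# Assumption: the 85% RefinedWeb share is measured in tokens.

TOTAL_TOKENS = 3.5e12
REFINEDWEB_SHARE = 0.85

refinedweb_tokens = TOTAL_TOKENS * REFINEDWEB_SHARE
curated_tokens = TOTAL_TOKENS - refinedweb_tokens  # papers, conversations, code

print(f"RefinedWeb:   ~{refinedweb_tokens / 1e12:.3f}T tokens")
print(f"Curated data: ~{curated_tokens / 1e12:.3f}T tokens")
```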

Benchmark

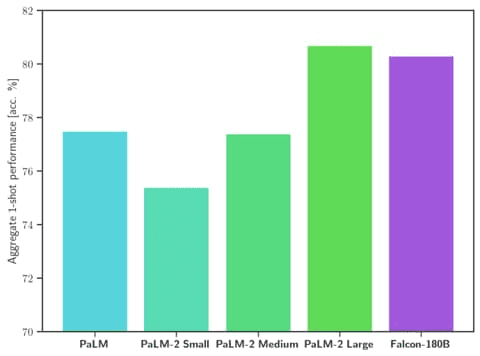

The half you all need to know – how is Falcon 180B doing amongst its opponents?

Falcon 180B is presently one of the best overtly launched LLM up to now (September 2023). It has been proven to outperform Llama 2 70B and OpenAI’s GPT-3.5 on MMLU. It sometimes sits someplace between GPT 3.5 and GPT 4.

Picture by HuggingFace Falcon 180B

Falcon 180B scored 68.74 on the Hugging Face Open LLM Leaderboard, making it the highest-scoring openly released pre-trained LLM and putting it ahead of Meta's LLaMA 2, which scored 67.35.

For the developers and natural language processing (NLP) enthusiasts out there, Falcon 180B is available in the Hugging Face ecosystem, starting with Transformers version 4.33.
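If you want to try the model yourself, a sensible first step is confirming that your installed Transformers version is at least 4.33. The sketch below does that with the standard library's `importlib.metadata` and wraps a typical load in a function. The Hub repository ID `tiiuae/falcon-180B` is the one TII published, but the repo is gated, so you may need to accept the license and log in first. The bfloat16 dtype and `device_map="auto"` (which relies on the `accelerate` package) are illustrative choices on my part, not settings from this article, and loading the full model needs hundreds of gigabytes of GPU memory.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Falcon 180B support landed in Transformers 4.33.
MIN_TRANSFORMERS = (4, 33)


def major_minor(v: str) -> tuple[int, int]:
    """Extract (major, minor) from a version string such as '4.33.0.dev0'."""
    m = re.match(r"(\d+)\.(\d+)", v)
    if m is None:
        raise ValueError(f"unrecognized version string: {v!r}")
    return int(m.group(1)), int(m.group(2))


def transformers_supports_falcon_180b() -> bool:
    """True if an installed transformers package is new enough for Falcon 180B."""
    try:
        installed = version("transformers")
    except PackageNotFoundError:
        return False
    return major_minor(installed) >= MIN_TRANSFORMERS


def load_falcon_180b(model_id: str = "tiiuae/falcon-180B"):
    """Illustrative loader; downloads the full (very large) checkpoint."""
    # Heavy imports stay local so the version check works without them.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # assumption: bf16 weights
        device_map="auto",  # spreads layers across available GPUs via accelerate
    )
    return tokenizer, model


if __name__ == "__main__":
    print("transformers >= 4.33 installed:", transformers_supports_falcon_180b())
```

Run the version check on its own before calling `load_falcon_180b()`; for the chat variant, pass `"tiiuae/falcon-180B-chat"` as `model_id`.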

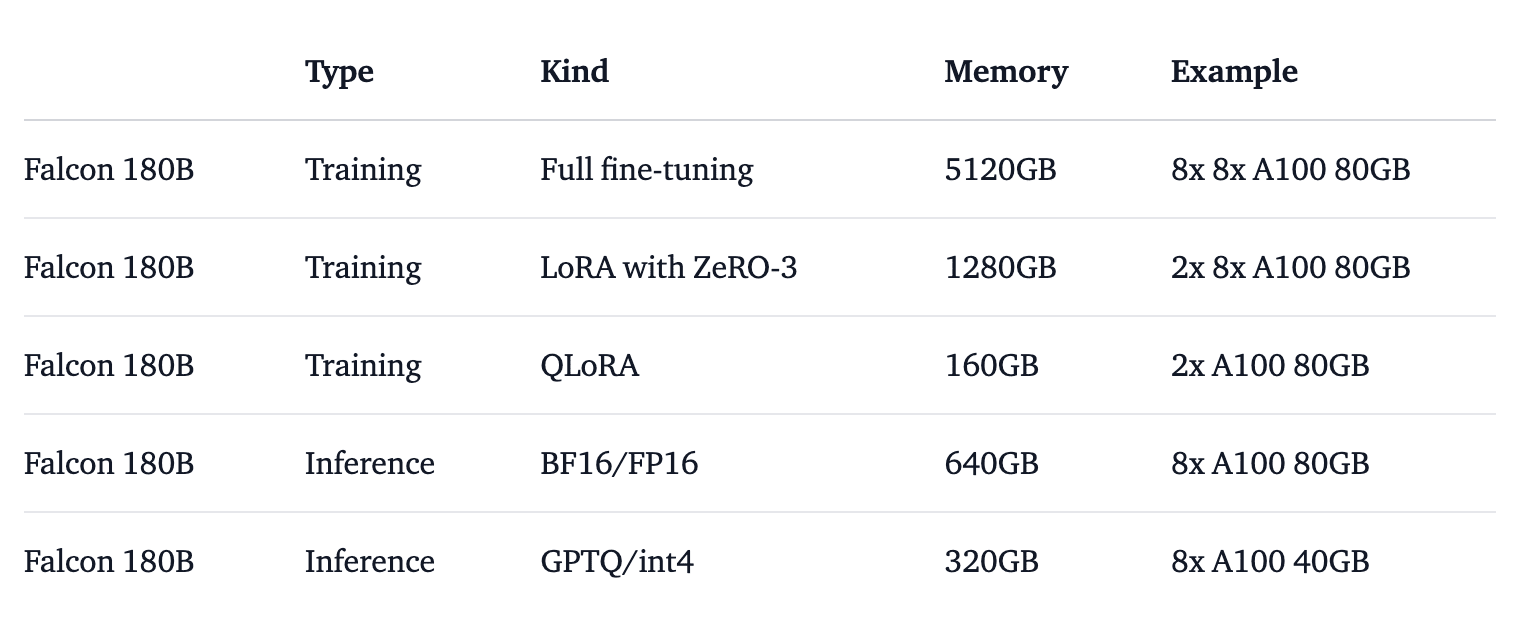

Nonetheless, as you may think about as a result of mannequin’s dimension, you have to to consider {hardware} necessities. To get a greater understanding of the {hardware} necessities, HuggingFace ran exams wanted to run the mannequin for various use circumstances, as proven within the picture under:

Picture by HuggingFace Falcon 180B
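For a rough sense of scale, this sketch estimates how much memory the weights alone occupy at different precisions. It assumes 2 bytes per parameter for bfloat16, 1 byte for int8, half a byte for 4-bit quantization, and 80 GB per GPU. Real deployments need noticeably more for activations, the KV cache, and framework overhead, so treat these as lower bounds rather than a substitute for Hugging Face's measured figures.

```python
import math

NUM_PARAMS = 180e9

# Assumed storage cost per parameter for each precision.
BYTES_PER_PARAM = {
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(memory_gb: float, gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPUs needed just to hold the weights."""
    return math.ceil(memory_gb / gpu_memory_gb)


for dtype, nbytes in BYTES_PER_PARAM.items():
    mem = weight_memory_gb(NUM_PARAMS, nbytes)
    print(f"{dtype:>8}: ~{mem:.0f} GB of weights, at least {min_gpus(mem)} x 80 GB GPUs")
```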

If you'd like to give it a test and play around with it, you can try out Falcon 180B through the demo at this link: Falcon 180B Demo.

Falcon 180B vs ChatGPT

The model has some serious hardware requirements that not everyone can easily meet. However, based on other people's findings from testing Falcon 180B against ChatGPT by asking both the same questions, ChatGPT took the win.

Falcon 180B performed well on code generation; however, it needs a boost on text extraction and summarization.

If you've had a chance to play around with it, let us know how it compared against other LLMs. Is Falcon 180B worth all the hype, given that it is currently the largest publicly available model on the Hugging Face Model Hub?

Well, it seems to be: it has topped the charts for open-access models and is giving models like PaLM-2 a run for their money. We'll find out soon enough.

Nisha Arya is a Data Scientist, Freelance Technical Writer and Community Manager at KDnuggets. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence can benefit the longevity of human life. A keen learner, she seeks to broaden her tech knowledge and writing skills while helping guide others.
