Space-time view synthesis from videos of dynamic scenes – Google Research Blog

A mobile phone’s camera is a powerful tool for capturing everyday moments. However, capturing a dynamic scene with a single camera is fundamentally limited. For instance, if we wanted to adjust the camera motion or timing of a recorded video (e.g., to freeze time while sweeping the camera around to highlight a dramatic moment), we would typically need an expensive Hollywood setup with a synchronized camera rig. Would it be possible to achieve similar effects solely from a video captured with a mobile phone’s camera, without a Hollywood budget?

In “DynIBaR: Neural Dynamic Image-Based Rendering”, a best paper honorable mention at CVPR 2023, we describe a new method that generates photorealistic free-viewpoint renderings from a single video of a complex, dynamic scene. Neural Dynamic Image-Based Rendering (DynIBaR) can be used to generate a range of video effects, such as “bullet time” effects (where time is paused and the camera is moved at a normal speed around a scene), video stabilization, depth of field, and slow motion, from a single video taken with a phone’s camera. We demonstrate that DynIBaR significantly advances video rendering of complex moving scenes, opening the door to new kinds of video editing applications. We have also released the code on the DynIBaR project page, so you can try it out yourself.

| Given an in-the-wild video of a complex, dynamic scene, DynIBaR can freeze time while allowing the camera to continue to move freely through the scene. |

Background

The last few years have seen tremendous progress in computer vision techniques that use neural radiance fields (NeRFs) to reconstruct and render static (non-moving) 3D scenes. However, most of the videos people capture with their mobile devices depict moving objects, such as people, pets, and cars. These moving scenes lead to a much more challenging 4D (3D + time) scene reconstruction problem that cannot be solved using standard view synthesis methods.

| Standard view synthesis methods output blurry, inaccurate renderings when applied to videos of dynamic scenes. |

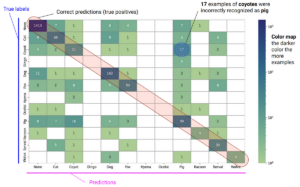

Other recent methods tackle view synthesis for dynamic scenes using space-time neural radiance fields (i.e., Dynamic NeRFs), but such approaches still exhibit inherent limitations that prevent their application to casually captured, in-the-wild videos. In particular, they struggle to render high-quality novel views from videos with long durations, uncontrolled camera paths, and complex object motion.

The key pitfall is that they store a complicated, moving scene in a single data structure. In particular, they encode scenes in the weights of a multilayer perceptron (MLP) neural network. MLPs are general-purpose function approximators; in this case, the function maps a 4D space-time point (x, y, z, t) to an RGB color and density that we can use in rendering images of a scene. However, the capacity of this MLP (defined by the number of parameters in its neural network) must increase with the video length and scene complexity, and thus training such models on in-the-wild videos can be computationally intractable. As a result, we get blurry, inaccurate renderings like those produced by DVS and NSFF (shown below). DynIBaR avoids creating such large scene models by adopting a different rendering paradigm.
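To make the "scene stored in MLP weights" idea concrete, here is a minimal sketch of such a space-time function, using only the Python standard library. It is an illustration under stated assumptions, not the architecture of DVS, NSFF, or any other published method: the layer sizes, the random (untrained) weights, and the helper names `init_mlp`, `count_parameters`, and `query_space_time` are all hypothetical, and real dynamic NeRFs add components (such as positional encodings and view-direction inputs) that are omitted here.

```python
import math
import random

def init_mlp(layer_sizes, seed=0):
    """Create random weights for a fully connected network (illustration only)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def count_parameters(layers):
    """Total number of weights and biases, i.e. the capacity of the MLP."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)

def query_space_time(layers, x, y, z, t):
    """Map a 4D space-time point to an RGB color in (0, 1) and a density > 0."""
    h = [x, y, z, t]
    for i, (weights, biases) in enumerate(layers):
        h = [sum(w * v for w, v in zip(row, h)) + b
             for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            h = [max(0.0, v) for v in h]  # ReLU on hidden layers
    rgb = tuple(1.0 / (1.0 + math.exp(-v)) for v in h[:3])  # sigmoid
    density = math.log1p(math.exp(h[3]))                     # softplus
    return rgb, density

# Hypothetical layer sizes: 4 inputs (x, y, z, t), 4 outputs (r, g, b, density).
small = init_mlp([4, 64, 64, 4])
rgb, density = query_space_time(small, 0.1, -0.2, 0.5, 0.3)
print(rgb, density, count_parameters(small))
```

Every detail of the scene at every time has to be squeezed into the numbers counted by `count_parameters`, which is why longer or more complex videos demand larger networks in this paradigm.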

| DynIBaR (bottom row) significantly improves rendering quality compared to prior dynamic view synthesis methods (top row) for videos of complex dynamic scenes. Prior methods produce blurry renderings because they need to store the entire moving scene in an MLP data structure. |

Image-based rendering (IBR)

A key insight behind DynIBaR is that we don’t actually need to store all of the scene contents in a video in a giant MLP. Instead, we directly use pixel data from nearby input video frames to render new views. DynIBaR builds on an image-based rendering (IBR) method called IBRNet that was designed for view synthesis of static scenes. IBR methods recognize that a new target view of a scene should be very similar to nearby source images, and therefore synthesize the target by dynamically selecting and warping pixels from the nearby source frames, rather than reconstructing the whole scene in advance. IBRNet, in particular, learns to blend nearby images together to recreate new views of a scene within a volumetric rendering framework.
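The blending idea can be sketched in a few lines of Python. This is only a hand-written stand-in: IBRNet learns its blending with a neural network, whereas the sketch below assumes a simple heuristic in which source views whose viewing direction is closer to the target ray receive more weight. The function name `blend_source_colors` and the `sharpness` parameter are hypothetical.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def blend_source_colors(target_dir, source_dirs, source_colors, sharpness=10.0):
    """Blend colors sampled from nearby source views.

    Weights come from a softmax over the cosine similarity between the
    target ray direction and each source ray direction (an assumed
    heuristic standing in for a learned blending network).
    """
    t = normalize(target_dir)
    scores = [sharpness * dot(t, normalize(d)) for d in source_dirs]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    color = tuple(sum(w * c[k] for w, c in zip(weights, source_colors))
                  for k in range(3))
    return color, weights

# Three source views see the same 3D point from different directions.
source_dirs = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
source_colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
color, weights = blend_source_colors((0.0, 0.0, 1.0), source_dirs, source_colors)
print(color, weights)
```

The point of the sketch is that no scene color is stored anywhere: the output is assembled entirely from pixels that already exist in the source frames.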

DynIBaR: Extending IBR to complex, dynamic videos

To extend IBR to dynamic scenes, we need to take scene motion into account during rendering. Therefore, as part of reconstructing an input video, we solve for the motion of every 3D point, where we represent scene motion using a motion trajectory field encoded by an MLP. Unlike prior dynamic NeRF methods that store the entire scene appearance and geometry in an MLP, we only store motion, a signal that is smoother and sparser, and use the input video frames to determine everything else needed to render new views.

We optimize DynIBaR for a given video by taking each input video frame, rendering rays to form a 2D image using volume rendering (as in NeRF), and comparing that rendered image to the input frame. That is, our optimized representation should be able to reconstruct the input video as faithfully as possible.
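For readers unfamiliar with volume rendering, the following sketch shows the standard NeRF-style compositing of samples along one ray, plus a simple squared-error comparison against an observed pixel. It is a generic illustration rather than the released DynIBaR code: the function names `composite_ray` and `photometric_loss` are hypothetical, and the sample colors and densities are made up for the example.

```python
import math

def composite_ray(colors, densities, deltas):
    """Composite samples along a ray, front to back.

    colors:    list of (r, g, b) per sample
    densities: list of volume densities (sigma) per sample
    deltas:    list of distances between consecutive samples
    Returns the pixel color and the transmittance left after the last sample.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for color, sigma, delta in zip(colors, densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(pixel), transmittance

def photometric_loss(rendered, observed):
    """Mean squared error between a rendered and an observed pixel."""
    return sum((r - o) ** 2 for r, o in zip(rendered, observed)) / len(rendered)

# Empty space, then an opaque red surface, then a green sample hidden behind it.
colors = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
densities = [0.0, 1000.0, 5.0]
deltas = [1.0, 1.0, 1.0]
pixel, remaining = composite_ray(colors, densities, deltas)
print(pixel, remaining, photometric_loss(pixel, (1.0, 0.0, 0.0)))
```

In per-video optimization, a loss of this kind is summed over many rays and minimized with respect to the parameters of the representation.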


| We illustrate how DynIBaR renders images of dynamic scenes. For simplicity, we show a 2D world, as seen from above. (a) A set of input source views (triangular camera frusta) observe a cube moving through the scene (animated square). Each camera is labeled with its timestamp (t-2, t-1, etc.). (b) To render a view from the camera at time t, DynIBaR shoots a virtual ray through each pixel (blue line), and computes colors and opacities for sample points along that ray. To compute those properties, DynIBaR projects those samples into other views via multi-view geometry, but first, we must compensate for the estimated motion of each point (dashed red line). (c) Using this estimated motion, DynIBaR moves each point in 3D to the relevant time before projecting it into the corresponding source camera, to sample colors for use in rendering. DynIBaR optimizes the motion of each scene point as part of learning how to synthesize new views of the scene. |
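Steps (b) and (c) can be sketched as "move the point, then project it". The code below is a toy version under strong assumptions: motion is modeled as a constant, known velocity (DynIBaR instead learns a motion trajectory field with an MLP), the camera is an ideal pinhole, and the names `move_point` and `project` are hypothetical.

```python
def move_point(point, velocity, t_target, t_source):
    """Displace a 3D point from the target time to a source view's time.

    Assumes constant velocity per unit time; this stands in for a
    learned motion trajectory.
    """
    dt = t_source - t_target
    return tuple(p + v * dt for p, v in zip(point, velocity))

def project(point_world, rotation, translation, focal, cx, cy):
    """Project a world-space point into a pinhole camera.

    rotation (3x3) and translation (3) map world to camera coordinates.
    Returns pixel coordinates, or None if the point is behind the camera.
    """
    cam = [sum(rotation[r][c] * point_world[c] for c in range(3)) + translation[r]
           for r in range(3)]
    if cam[2] <= 0.0:
        return None
    return (focal * cam[0] / cam[2] + cx, focal * cam[1] / cam[2] + cy)

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
origin = [0.0, 0.0, 0.0]

# A sample point on the target ray at time t = 0, moving along +x.
sample = (0.0, 0.0, 2.0)
velocity = (1.0, 0.0, 0.0)

# Where to look in the source frame captured at time t - 1.
naive = project(sample, identity, origin, focal=100.0, cx=50.0, cy=50.0)
moved = move_point(sample, velocity, t_target=0, t_source=-1)
compensated = project(moved, identity, origin, focal=100.0, cx=50.0, cy=50.0)
print(naive, compensated)
```

Without motion compensation the lookup lands on the pixel where the point is now, not where it was when the source frame was captured, so the sampled color would belong to different scene content.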

However, reconstructing and deriving new views for a complex, moving scene is a highly ill-posed problem, since many solutions can explain the input video. For instance, the optimization might create disconnected 3D representations for each time step. Therefore, optimizing DynIBaR to reconstruct the input video alone is insufficient. To obtain high-quality results, we also introduce several other techniques, including a method called cross-time rendering. Cross-time rendering refers to the use of the state of our 4D representation at one time instant to render images from a different time instant, which encourages the 4D representation to be coherent over time. To further improve rendering fidelity, we automatically factorize the scene into two components, a static one and a dynamic one, modeled by time-invariant and time-varying scene representations respectively.

Creating video effects

DynIBaR enables various video effects. We show several examples below.

Video stabilization

We use a shaky, handheld input video to compare DynIBaR’s video stabilization performance to existing 2D video stabilization and dynamic NeRF methods, including FuSta, DIFRINT, HyperNeRF, and NSFF. We demonstrate that DynIBaR produces smoother outputs with higher rendering fidelity and fewer artifacts (e.g., flickering or blurry results). In particular, FuSta yields residual camera shake, DIFRINT produces flicker around object boundaries, and HyperNeRF and NSFF produce blurry results.

Simultaneous view synthesis and slow motion

DynIBaR can perform view synthesis in both space and time simultaneously, producing smooth 3D cinematic effects. Below, we demonstrate that DynIBaR can take video inputs and produce smooth 5X slow-motion videos rendered using novel camera paths.
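As a small illustration of what rendering in time involves, a 5X slow-motion output needs frames at fractional timestamps between the input frames. The sketch below only computes those timestamps and the pair of input frames that bracket each one; how the scene is actually rendered at an in-between time is not covered here. The function name `slow_motion_times` is hypothetical.

```python
def slow_motion_times(num_frames, factor=5):
    """List output timestamps for a slow-motion rendering.

    Returns (time, earlier_frame, later_frame, fraction) tuples, where
    time is measured in input-frame units and fraction is the position
    of the output time between the two bracketing input frames.
    """
    last = num_frames - 1
    schedule = []
    for i in range(last * factor + 1):
        time = i / factor
        earlier = min(i // factor, last)
        later = min(earlier + 1, last)
        schedule.append((time, earlier, later, time - earlier))
    return schedule

# Three input frames slowed down 5X give 11 output frames.
schedule = slow_motion_times(3, factor=5)
for entry in schedule:
    print(entry)
```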

Video bokeh

DynIBaR can also generate high-quality video bokeh by synthesizing videos with dynamically changing depth of field. Given an all-in-focus input video, DynIBaR can generate high-quality output videos with varying out-of-focus regions that call attention to moving content (e.g., the running person and dog) and static content (e.g., trees and buildings) in the scene.

Conclusion

DynIBaR is a leap forward in our ability to render complex moving scenes from new camera paths. While it currently involves per-video optimization, we envision faster versions that can be deployed on in-the-wild videos to enable new kinds of effects for consumer video editing using mobile devices.

Acknowledgements

DynIBaR is the result of a collaboration between researchers at Google Research and Cornell University. The key contributors to the work presented in this post include Zhengqi Li, Qianqian Wang, Forrester Cole, Richard Tucker, and Noah Snavely.