Meet MiniGPT-4: An Open-Source AI Model That Performs Complex Vision-Language Tasks Like GPT-4

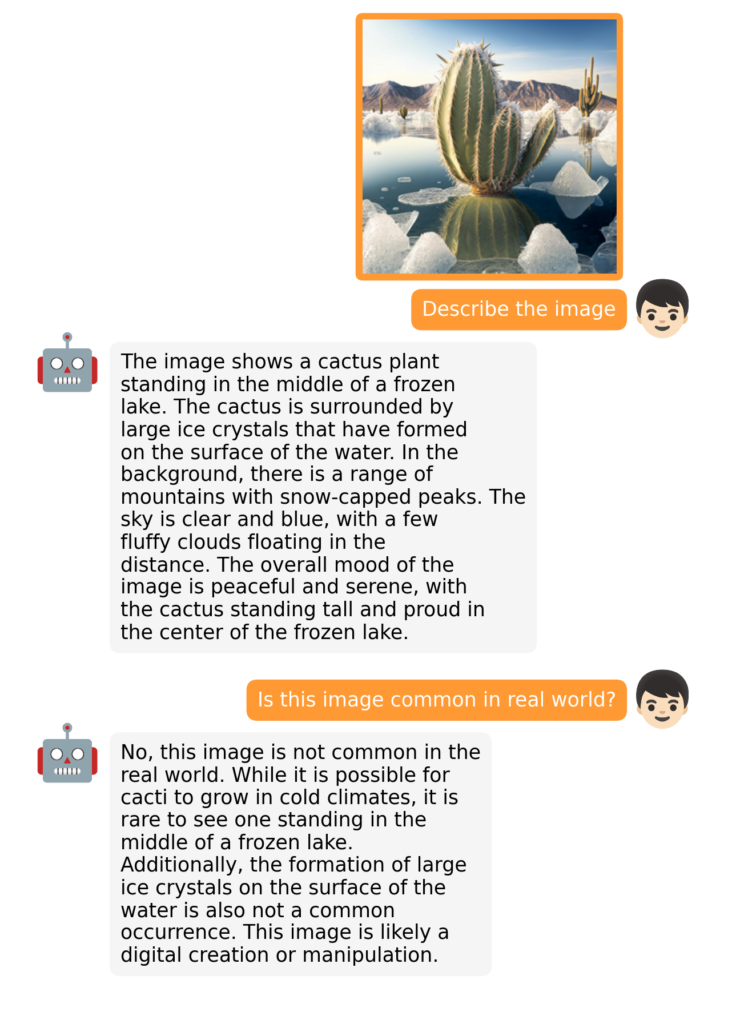

GPT-4 is the latest Large Language Model that OpenAI has released. Its multimodal nature sets it apart from all the previously released LLMs. GPT’s transformer architecture is the technology behind the well-known ChatGPT, which can imitate humans thanks to its strong Natural Language Understanding. GPT-4 has shown impressive performance on tasks like producing detailed and precise image descriptions, explaining unusual visual phenomena, developing websites from handwritten text instructions, and so on. Some users have even used it to build video games and Chrome extensions and to explain complicated reasoning questions.

The reason behind GPT-4’s exceptional performance is not fully understood. The authors of a recently released research paper believe that GPT-4’s advanced abilities may be due to the use of a more advanced Large Language Model. Prior research has shown that LLMs have great potential, which is usually not present in smaller models. The authors have thus proposed a new model called MiniGPT-4 to explore this hypothesis in detail. MiniGPT-4 is an open-source model capable of performing complex vision-language tasks similar to GPT-4.

Developed by a team of Ph.D. students from King Abdullah University of Science and Technology, Saudi Arabia, MiniGPT-4 has abilities similar to those demonstrated by GPT-4, such as detailed image description generation and website creation from hand-written drafts. MiniGPT-4 uses an advanced LLM called Vicuna as the language decoder, which is built upon LLaMA and is reported to achieve 90% of ChatGPT’s quality as evaluated by GPT-4. MiniGPT-4 uses the pretrained vision component of BLIP-2 (Bootstrapping Language-Image Pre-training) and adds a single projection layer to align the encoded visual features with the Vicuna language model, while freezing all other vision and language components.
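To make the idea of a single projection layer concrete, here is a minimal sketch in plain Python. It is not the authors’ implementation: the class `LinearProjection`, the helper `project_visual_tokens`, and the toy sizes `VISION_DIM`, `LLM_DIM`, and `NUM_VISUAL_TOKENS` are all hypothetical names and values chosen for illustration, and random vectors stand in for the output of the frozen vision encoder. The point is only that a linear map between the two embedding sizes is the one trainable piece.

```python
import random


class LinearProjection:
    """Toy linear layer mapping a visual feature vector to the LLM embedding size."""

    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        # weight[j][i] connects input feature i to output feature j
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
                       for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def num_parameters(self):
        # Weights plus biases: the only values that would be updated in training.
        return len(self.weight) * len(self.weight[0]) + len(self.bias)

    def __call__(self, vec):
        return [sum(w * x for w, x in zip(row, vec)) + b
                for row, b in zip(self.weight, self.bias)]


def project_visual_tokens(visual_tokens, projection):
    """Apply the same projection to every visual token from the frozen encoder."""
    return [projection(token) for token in visual_tokens]


# Hypothetical toy sizes, NOT the real model dimensions.
VISION_DIM = 8
LLM_DIM = 16
NUM_VISUAL_TOKENS = 4

projection = LinearProjection(VISION_DIM, LLM_DIM)

# Random vectors stand in for features produced by the frozen vision encoder.
rng = random.Random(1)
visual_tokens = [[rng.random() for _ in range(VISION_DIM)]
                 for _ in range(NUM_VISUAL_TOKENS)]

# Each projected token now has the size the (frozen) language model expects.
llm_inputs = project_visual_tokens(visual_tokens, projection)
print(len(llm_inputs), len(llm_inputs[0]), projection.num_parameters())
```

In this toy setup the trainable parameter count is just `VISION_DIM * LLM_DIM + LLM_DIM`, which illustrates why training only the projection is cheap compared with updating the vision encoder or the language model.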

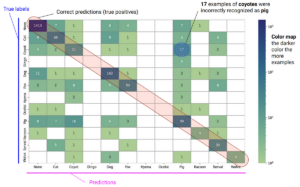

MiniGPT-4 showed great results when asked to identify problems from image input. Given a user’s image of a diseased plant and a prompt asking what was wrong with it, the model proposed a solution. It also discovered unusual content in an image, wrote product advertisements, generated detailed recipes by observing delicious food photos, came up with rap songs inspired by images, and retrieved information about people, movies, or art directly from images.

According to their study, the team found that training one projection layer can efficiently align the visual features with the LLM. MiniGPT-4 requires only approximately 10 hours of training on 4 A100 GPUs. The team also reports that it is difficult to develop a high-performing MiniGPT-4 model by simply aligning visual features with LLMs using raw image-text pairs from public datasets, as this can result in repeated phrases or fragmented sentences. To overcome this limitation, MiniGPT-4 needs to be trained using a high-quality, well-aligned dataset, which enhances the model’s usability by producing more natural and coherent language outputs.
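As a rough back-of-the-envelope check on the training cost, the figures reported in the article (about 10 hours on 4 A100 GPUs, and roughly 5 million aligned image-text pairs for training the projection layer) can be turned into GPU-hours and an approximate throughput. The sketch below assumes each pair is processed exactly once during those 10 hours, which the article does not state, so the throughput numbers are illustrative only. The function names are hypothetical.

```python
def gpu_hours(wall_clock_hours, num_gpus):
    """Total GPU time consumed, in GPU-hours."""
    return wall_clock_hours * num_gpus


def pairs_per_second(num_pairs, wall_clock_hours):
    """Average number of image-text pairs processed per second of wall-clock time."""
    return num_pairs / (wall_clock_hours * 3600)


# Figures as reported in the article (both are approximate).
WALL_CLOCK_HOURS = 10
NUM_GPUS = 4
NUM_PAIRS = 5_000_000

total_gpu_hours = gpu_hours(WALL_CLOCK_HOURS, NUM_GPUS)

# ASSUMPTION: every pair is seen exactly once within the 10 hours.
overall_rate = pairs_per_second(NUM_PAIRS, WALL_CLOCK_HOURS)
per_gpu_rate = overall_rate / NUM_GPUS

print(f"{total_gpu_hours} GPU-hours")
print(f"~{overall_rate:.0f} pairs/s overall, ~{per_gpu_rate:.0f} pairs/s per GPU")
```

Under that single-pass assumption, the run amounts to 40 GPU-hours in total.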

MiniGPT-4 seems like a promising development due to its remarkable multimodal generation capabilities. One of its most important features is its high computational efficiency and the fact that it only requires approximately 5 million aligned image-text pairs for training a projection layer. The code, pre-trained model, and collected dataset are available.

Check out the Paper, Project, and GitHub.

Tanya Malhotra is a final year undergrad from the University of Petroleum & Energy Studies, Dehradun, pursuing BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.