How Earth.com and Provectus implemented their MLOps Infrastructure with Amazon SageMaker

This blog post is co-written with Marat Adayev and Dmitrii Evstiukhin from Provectus.

When machine learning (ML) models are deployed into production and used to drive business decisions, the challenge often lies in operating and managing multiple models. Machine Learning Operations (MLOps) provides the technical solution to this issue, helping organizations manage, monitor, deploy, and govern their models on a centralized platform.

At-scale, real-time image recognition is a complex technical problem that also requires the implementation of MLOps. By enabling effective management of the ML lifecycle, MLOps can help account for the many changes in data, models, and concepts that come with developing real-time image recognition applications.

One such application is EarthSnap, an AI-powered image recognition application that enables users to identify all types of plants and animals using the camera on their smartphone. EarthSnap was developed by Earth.com, a leading online platform for enthusiasts who are passionate about the environment, nature, and science.

Earth.com’s leadership team recognized the vast potential of EarthSnap and set out to create an application that uses the latest deep learning (DL) architectures for computer vision (CV). However, they faced challenges in managing and scaling their ML system, which consisted of various siloed ML and infrastructure components that had to be maintained manually. To bring EarthSnap to market quickly, they needed a cloud platform and a strategic partner with proven expertise in delivering production-ready AI/ML solutions. That’s where Provectus, an AWS Premier Consulting Partner with competencies in Machine Learning, Data & Analytics, and DevOps, stepped in.

This post explains how Provectus and Earth.com were able to enhance the AI-powered image recognition capabilities of EarthSnap, reduce engineering heavy lifting, and minimize administrative costs by implementing end-to-end ML pipelines, delivered as part of a managed MLOps platform and managed AI services.

Challenges faced in the initial approach

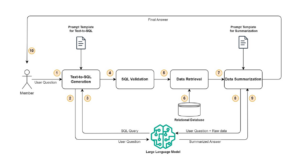

The executive team at Earth.com was eager to accelerate the launch of EarthSnap. They quickly began working on AI/ML capabilities by building image recognition models using Amazon SageMaker. The following diagram shows the initial image recognition ML workflow, run manually and sequentially.

The models developed by Earth.com lived across various notebooks. Processing the data and retraining the model required manually running a series of complex notebooks in sequence. Endpoints had to be deployed manually as well.

Earth.com didn’t have an in-house ML engineering team, which made it hard to add new datasets featuring new species, release and improve new models, and scale their disjointed ML system.

The ML components for data ingestion, preprocessing, and model training were available as disjointed Python scripts and notebooks, which required a lot of manual heavy lifting on the part of engineers.

The initial solution also required the support of a technical third party to release new models quickly and efficiently.

First iteration of the solution

Provectus served as a valuable collaborator for Earth.com, playing a crucial role in augmenting the AI-driven image recognition features of EarthSnap. The application’s workflows were automated by implementing end-to-end ML pipelines, which were delivered as part of Provectus’s managed MLOps platform and supported through managed AI services.

Provectus initiated a series of project discovery sessions to examine EarthSnap’s existing codebase and inventory the notebook scripts, with the goal of reproducing the existing model results. After the model results had been restored, the scattered components of the ML workflow were merged into an automated ML pipeline using Amazon SageMaker Pipelines, a purpose-built CI/CD service for ML.

The final pipeline consists of the following components:

- Data QA & versioning – This step, run as a SageMaker Processing job, ingests the source data from Amazon Simple Storage Service (Amazon S3) and prepares the metadata for the next step, containing only valid images (URI and label) filtered according to internal rules. It also persists a manifest file to Amazon S3, including all the information needed to recreate that dataset version.

- Data preprocessing – This includes multiple steps wrapped as SageMaker Processing jobs and run sequentially. The steps preprocess the images, convert them to RecordIO format, split the images into datasets (full, train, test, and validation), and prepare the images to be consumed by SageMaker training jobs.

- Hyperparameter tuning – A SageMaker hyperparameter tuning job takes a subset of the training and validation sets as input and runs a series of small training jobs under the hood to determine the best parameters for the full training job.

- Full training – A SageMaker training job step launches training on the entire dataset, using the best parameters from the hyperparameter tuning step.

- Model evaluation – A SageMaker Processing job step runs after the final model has been trained. This step produces an expanded report containing the model’s metrics.

- Model creation – The SageMaker ModelCreate step wraps the model into a SageMaker model package and pushes it to the SageMaker Model Registry.
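To make the first two steps more concrete, here is a minimal pure-Python sketch of what a data QA and versioning script might do: filter records by validation rules, assign each image deterministically to train, validation, or test, and build a manifest whose version ID is a hash of its contents. The rules, split percentages, manifest fields, bucket name, and function names (`filter_valid_images`, `assign_split`, `build_manifest`) are all hypothetical; the post does not describe EarthSnap’s actual internal rules, and a real Processing job would read from and write the manifest to Amazon S3 rather than keep it in memory.

```python
import hashlib
import json
from typing import Dict, List, Set

# Hypothetical "internal rules": accepted file extensions plus a known label set.
ALLOWED_EXTENSIONS = (".jpg", ".jpeg", ".png")


def filter_valid_images(records: List[Dict[str, str]], known_labels: Set[str]) -> List[Dict[str, str]]:
    """Keep only records with an S3 URI, an allowed image extension, and a known label."""
    valid = []
    for rec in records:
        uri = rec.get("uri", "")
        label = rec.get("label")
        if uri.startswith("s3://") and uri.lower().endswith(ALLOWED_EXTENSIONS) and label in known_labels:
            valid.append({"uri": uri, "label": label})
    return valid


def assign_split(uri: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Map a URI to train/validation/test by hashing it, so reruns reproduce the same split."""
    bucket = int(hashlib.sha256(uri.encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


def build_manifest(valid: List[Dict[str, str]]) -> Dict[str, object]:
    """Build a manifest whose version ID is a content hash of its sorted entries."""
    entries = sorted(
        ({**rec, "split": assign_split(rec["uri"])} for rec in valid),
        key=lambda r: r["uri"],
    )
    payload = json.dumps(entries, sort_keys=True).encode("utf-8")
    return {
        "version": hashlib.sha256(payload).hexdigest()[:12],
        "num_images": len(entries),
        "entries": entries,
    }


# Usage with made-up records: only the first one passes the rules above.
records = [
    {"uri": "s3://example-bucket/img/oak_001.jpg", "label": "quercus_robur"},
    {"uri": "s3://example-bucket/img/notes.txt", "label": "quercus_robur"},
    {"uri": "s3://example-bucket/img/unknown_01.jpg", "label": "unlabeled"},
]
manifest = build_manifest(filter_valid_images(records, {"quercus_robur"}))
```

Hashing the URI (rather than shuffling randomly) is one way to keep the split stable across reruns, so the same manifest version always reproduces the same train, validation, and test sets.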

Once the pipeline is started, all steps run automatically. The pipeline can be started using any of the following methods:

- Automatically using AWS CodeBuild, after new changes are pushed to the main branch and a new version of the pipeline is upserted (CI)

- Automatically using Amazon API Gateway, triggered by a specific API call

- Manually in Amazon SageMaker Studio
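For the API Gateway route, one common pattern (assumed here; the post does not say how the API call reaches SageMaker) is an AWS Lambda function behind API Gateway that calls the SageMaker `StartPipelineExecution` API through boto3’s `start_pipeline_execution`. The pipeline name `earthsnap-training-pipeline`, the `DatasetVersion` parameter, and the request body shape are hypothetical. The SageMaker client is injectable so the handler can be tried offline with a stub instead of real AWS credentials.

```python
import json
from typing import Any, Dict, List, Optional

# Hypothetical pipeline name; the post does not give the real one.
PIPELINE_NAME = "earthsnap-training-pipeline"


def handler(event: Dict[str, Any], context: Any = None, sm_client: Optional[Any] = None) -> Dict[str, Any]:
    """Lambda proxy-integration handler that starts one SageMaker pipeline execution."""
    if sm_client is None:
        import boto3  # imported lazily so the handler can be exercised with a stub client

        sm_client = boto3.client("sagemaker")

    body = json.loads(event.get("body") or "{}")
    # Optional overrides such as {"parameters": {"DatasetVersion": "v1"}} (hypothetical parameter name).
    params = [{"Name": k, "Value": str(v)} for k, v in body.get("parameters", {}).items()]

    kwargs: Dict[str, Any] = {"PipelineName": PIPELINE_NAME}
    if params:
        kwargs["PipelineParameters"] = params
    response = sm_client.start_pipeline_execution(**kwargs)

    return {
        "statusCode": 200,
        "body": json.dumps({"executionArn": response["PipelineExecutionArn"]}),
    }


class StubSageMakerClient:
    """Local stand-in for boto3's SageMaker client, used only to try the handler offline."""

    def __init__(self) -> None:
        self.calls: List[Dict[str, Any]] = []

    def start_pipeline_execution(self, **kwargs: Any) -> Dict[str, str]:
        self.calls.append(kwargs)
        return {"PipelineExecutionArn": "arn:aws:sagemaker:us-east-1:111122223333:pipeline/example/execution/abc123"}


stub = StubSageMakerClient()
result = handler({"body": json.dumps({"parameters": {"DatasetVersion": "v1"}})}, sm_client=stub)
```

The CodeBuild route would typically upsert the pipeline definition and then make the same `start_pipeline_execution` call from the build script; only the trigger differs.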

After the pipeline run (launched using one of the preceding methods), a trained model is produced that is ready to be deployed as a SageMaker endpoint. Before deployment, the model must first be approved by the PM or an engineer in the model registry; it is then automatically rolled out to the stage environment using Amazon EventBridge and tested internally. After the model is confirmed to be working as expected, it’s deployed to the production environment (CD).
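The approval-driven rollout to stage can be wired with an EventBridge rule on SageMaker model package state-change events. The sketch below assumes the rule matches the `SageMaker Model Package State Change` event from `aws.sagemaker` with `ModelApprovalStatus` set to `Approved`. The model package group name `earthsnap-plants` and the `deploy_to_stage` callback are hypothetical, the sample event is abbreviated, and `matches` only approximates EventBridge’s own matching for this simple exact-value pattern. The pattern dict is what you would serialize with `json.dumps` and pass as `EventPattern` to EventBridge’s `PutRule` API.

```python
import json
from typing import Any, Callable, Dict, Optional

# Pattern for the EventBridge rule. "earthsnap-plants" is a hypothetical model package group name.
EVENT_PATTERN: Dict[str, Any] = {
    "source": ["aws.sagemaker"],
    "detail-type": ["SageMaker Model Package State Change"],
    "detail": {
        "ModelPackageGroupName": ["earthsnap-plants"],
        "ModelApprovalStatus": ["Approved"],
    },
}


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified EventBridge matching: nested dicts recurse, lists hold the allowed values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def on_model_package_event(event: Dict[str, Any], deploy_to_stage: Callable[[str], None]) -> Optional[str]:
    """Target logic: deploy an approved model package version to the stage environment."""
    if not matches(EVENT_PATTERN, event):
        return None
    model_package_arn = event["detail"]["ModelPackageArn"]
    deploy_to_stage(model_package_arn)  # hypothetical callback, e.g. create or update a stage endpoint
    return model_package_arn


# Abbreviated sample event (real events carry more fields).
sample_event = {
    "source": "aws.sagemaker",
    "detail-type": "SageMaker Model Package State Change",
    "detail": {
        "ModelPackageGroupName": "earthsnap-plants",
        "ModelPackageArn": "arn:aws:sagemaker:us-east-1:111122223333:model-package/earthsnap-plants/7",
        "ModelApprovalStatus": "Approved",
    },
}
deployed = []
on_model_package_event(sample_event, deployed.append)
rule_pattern_json = json.dumps(EVENT_PATTERN)  # string form for the rule's EventPattern
```

Filtering on the approval status in the rule itself means rejected or pending versions never invoke the deployment target at all.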

The Provectus solution for EarthSnap can be summarized in the following steps:

- Start with fully automated, end-to-end ML pipelines to make it easier for Earth.com to release new models

- Build on top of the pipelines to deliver a robust ML infrastructure for the MLOps platform, featuring all components for streamlining AI/ML

- Support the solution by providing managed AI services (including ML infrastructure provisioning, maintenance, and cost monitoring and optimization)

- Bring EarthSnap to its desired state (mobile application and backend) through a series of engagements, including AI/ML work, data and database operations, and DevOps

After the foundational infrastructure and processes were established, the model was trained and retrained on a larger dataset. At this point, however, the team encountered an additional issue when attempting to expand the model with even larger datasets. We needed to find a way to restructure the solution architecture, making it more sophisticated and capable of scaling effectively. The following diagram shows the EarthSnap AI/ML architecture.

The AI/ML architecture for EarthSnap is designed around a series of AWS services:

- The SageMaker pipeline, started by one of the methods mentioned above (CodeBuild, API Gateway, or manually), trains the model and produces artifacts and metrics. As a result, a new model version is pushed to the SageMaker Model Registry

- The model is then reviewed by an internal team (PM/engineer) in the model registry and approved or rejected based on the metrics provided

- Once the model is approved, the model version is automatically deployed to the stage environment using Amazon EventBridge, which tracks the model status change

- The model is deployed to the production environment if it passes all tests in the stage environment
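The final step, promoting to production only when every stage test passes, amounts to a simple gate. The post does not list which tests run in the stage environment, so the metric names, thresholds, and the `promote` callback below are hypothetical; the sketch only illustrates the "all checks must pass" rule, with a missing metric treated as a failure.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical acceptance thresholds; the post does not list the actual stage tests.
THRESHOLDS = {
    "top1_accuracy": ("min", 0.80),
    "p95_latency_ms": ("max", 300.0),
    "error_rate": ("max", 0.01),
}


@dataclass
class CheckResult:
    name: str
    value: float
    passed: bool


def run_stage_checks(metrics: Dict[str, float]) -> List[CheckResult]:
    """Compare metrics collected in stage against each threshold; a missing metric fails."""
    results = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            results.append(CheckResult(name, float("nan"), False))
        elif kind == "min":
            results.append(CheckResult(name, value, value >= limit))
        else:
            results.append(CheckResult(name, value, value <= limit))
    return results


def promote_if_healthy(
    model_arn: str, metrics: Dict[str, float], promote: Callable[[str], None]
) -> Tuple[bool, List[str]]:
    """Promote to production only when every stage check passes; return the failed check names."""
    failed = [r.name for r in run_stage_checks(metrics) if not r.passed]
    if not failed:
        promote(model_arn)  # hypothetical callback, e.g. update the production endpoint
    return not failed, failed


promoted = []
ok_run = promote_if_healthy(
    "model-v7", {"top1_accuracy": 0.86, "p95_latency_ms": 210.0, "error_rate": 0.002}, promoted.append
)
bad_run = promote_if_healthy("model-v8", {"top1_accuracy": 0.86, "p95_latency_ms": 450.0}, promoted.append)
```

Returning the names of the failed checks gives the reviewing PM or engineer a concrete reason why a version stayed in stage.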

Final solution

To accommodate all the necessary sets of labels, EarthSnap’s model required substantial modifications, because incorporating all species within a single model proved to be both costly and inefficient. The plant category was chosen first for implementation.

The team thoroughly examined the plant data to organize it into subsets based on shared internal characteristics. The plant model was then redesigned as a multi-model parent/child architecture: child models were trained on grouped subsets of plant data, and the parent model was trained on a set of data samples from each subcategory. The child models perform accurate classification within each internal species group, while the parent model categorizes input plant images into subgroups. This design required a distinct training process for each model, which led to the creation of separate ML pipelines. With this new design, along with the previously established ML/MLOps foundation, the EarthSnap application was able to cover all essential plant species, resulting in more efficient model maintenance and retraining. The following diagram illustrates the logical scheme of parent/child model relations.
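At inference time, a parent/child design like this behaves as a two-stage (hierarchical) classifier: the parent picks a subgroup, and that subgroup’s child model picks the species. Here is a minimal sketch with toy callables standing in for deployed models. The group names, species labels, toy scores, and the choice to multiply the two probabilities into one confidence value are illustrative assumptions, not details from the post.

```python
from typing import Callable, Dict, List, Tuple

Scores = Dict[str, float]
Model = Callable[[List[float]], Scores]


def top1(scores: Scores) -> Tuple[str, float]:
    """Return the highest-scoring label and its score."""
    label = max(scores, key=scores.get)
    return label, scores[label]


class ParentChildClassifier:
    """Two-stage classifier: the parent picks a species group, that group's child picks the species."""

    def __init__(self, parent: Model, children: Dict[str, Model]) -> None:
        self.parent = parent
        self.children = children

    def predict(self, image: List[float]) -> Tuple[str, str, float]:
        group, p_group = top1(self.parent(image))
        species, p_species = top1(self.children[group](image))
        # Combined confidence as P(group) * P(species | group): an illustrative choice.
        return group, species, p_group * p_species


# Toy stand-ins for trained models; real ones would take image tensors, not feature lists.
def toy_parent(image: List[float]) -> Scores:
    p_trees = 0.9 if image[0] > 0.5 else 0.2
    return {"trees": p_trees, "grasses": 1.0 - p_trees}


def toy_trees(image: List[float]) -> Scores:
    return {"quercus_robur": 0.7, "betula_pendula": 0.3}


def toy_grasses(image: List[float]) -> Scores:
    return {"poa_annua": 0.6, "festuca_rubra": 0.4}


clf = ParentChildClassifier(toy_parent, {"trees": toy_trees, "grasses": toy_grasses})
tree_pred = clf.predict([0.8, 0.1])
grass_pred = clf.predict([0.1, 0.9])
```

Because each child only sees its own group, adding or relabeling species in one group means retraining that child (plus the parent, if the grouping itself changes), which fits the separate per-model pipelines described above.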

Upon completing the redesign, the final challenge was to guarantee that the AI solution powering EarthSnap could handle the substantial load generated by a broad user base. Fortunately, the managed AI onboarding process covers all the essential automation, monitoring, and procedures for transitioning the solution into a production-ready state, eliminating the need for any further capital investment.

Results

Despite the pressing requirement to develop and implement the AI-driven image recognition features of EarthSnap within a few months, Provectus managed to meet all project requirements within the designated time frame. In just 3 months, Provectus modernized and productionized the ML solution for EarthSnap, ensuring that the mobile application was ready for public launch.

The modernized infrastructure for ML and MLOps allowed Earth.com to reduce engineering heavy lifting and minimize the administrative costs associated with maintaining and supporting EarthSnap. By streamlining processes and implementing best practices for CI/CD and DevOps, Provectus ensured that EarthSnap could achieve better performance while improving its adaptability, resilience, and security. With a focus on innovation and efficiency, we enabled EarthSnap to function flawlessly while providing a seamless and user-friendly experience for all users.

As part of its managed AI services, Provectus was able to reduce infrastructure management overhead, establish well-defined SLAs and processes, ensure 24/7 coverage and support, and increase overall infrastructure stability, including for production workloads and critical releases. We initiated a series of enhancements to deliver a managed MLOps platform and augment ML engineering. In particular, it now takes Earth.com minutes, instead of several days, to release new ML models for their AI-powered image recognition application.

With assistance from Provectus, Earth.com was able to launch its EarthSnap application on the Apple App Store and Google Play Store ahead of schedule. The early launch underscored the importance of Provectus’ comprehensive work for the client.

“I’m incredibly excited to work with Provectus. Words can’t describe how great I feel about handing over control of the technical side of the business to Provectus. It’s a huge relief knowing that I don’t have to worry about anything other than developing the business side.”

– Eric Ralls, Founder and CEO of EarthSnap.

The next steps of our cooperation will include adding advanced monitoring components to pipelines, enhancing model retraining, and introducing a human-in-the-loop step.

Conclusion

The Provectus team hopes that Earth.com will continue to modernize EarthSnap with us. We look forward to powering the company’s future expansion, further popularizing natural phenomena, and doing our part to protect our planet.

To learn more about the Provectus ML infrastructure and MLOps, visit Machine Learning Infrastructure and watch the webinar for more practical advice. You can also learn more about Provectus managed AI services at Managed AI Services.

If you’re interested in building a robust infrastructure for ML and MLOps in your organization, apply for the ML Acceleration Program to get started.

Provectus helps companies in healthcare and life sciences, retail and CPG, media and entertainment, and manufacturing achieve their objectives through AI.

Provectus is an AWS Machine Learning Competency Partner and an AI-first transformation consultancy and solutions provider that helps design, architect, migrate, or build cloud-native applications on AWS.

Contact Provectus | Partner Overview

About the Authors

Marat Adayev is an ML Solutions Architect at Provectus

Dmitrii Evstiukhin is the Director of Managed Services at Provectus

James Burdon is a Senior Startups Solutions Architect at AWS