Enhancing Activity-Particular Adaptation for Video Basis Fashions: Introducing Video Adapter as a Probabilistic Framework for Adapting Textual content-to-Video Fashions

Giant text-to-video fashions skilled on internet-scale information have proven extraordinary capabilities to generate high-fidelity movies from arbitrarily written descriptions. Nonetheless, fine-tuning a pretrained enormous mannequin may be prohibitively costly, making it tough to adapt these fashions to functions with restricted domain-specific information, corresponding to animation or robotics movies. Researchers from Google DeepMind, UC Berkeley, MIT and the College of Alberta look into how a big pretrained text-to-video mannequin will be custom-made to a wide range of downstream domains and duties with out fine-tuning, impressed by how a small modifiable element (corresponding to prompts, prefix-tuning) can allow a big language mannequin to carry out new duties with out requiring entry to the mannequin weights. To handle this, they current Video Adapter, a technique for producing task-specific tiny video fashions by utilizing a big pretrained video diffusion mannequin’s rating operate as a previous probabilistic. Experiments show that Video Adapters can use as few as 1.25 % of the pretrained mannequin’s parameters to incorporate the extensive information and preserve the excessive constancy of an enormous pretrained video mannequin in a task-specific tiny video mannequin. Excessive-quality, task-specific films will be generated utilizing Video Adapters for varied makes use of, together with however not restricted to animation, selfish modeling, and the modeling of simulated and real-world robotics information.

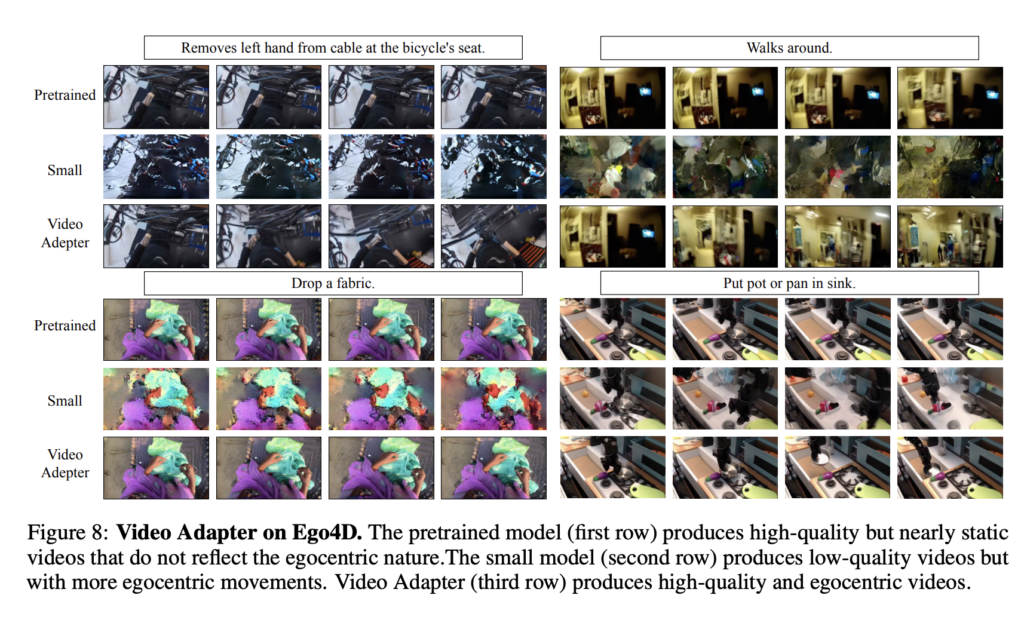

Researchers take a look at Video Adapter on varied video creation jobs. On the tough Ego4D information and the robotic Bridge information, Video Adapter generates movies with higher FVD and Inception Scores than a high-quality pretrained massive video mannequin whereas utilizing as much as 80x fewer parameters. Researchers show qualitatively that Video Adapter permits the manufacturing of genre-specific movies like these present in science fiction and animation. As well as, the examine’s authors present how Video Adapter can pave the best way for bridging robotics’ notorious sim-to-real hole by modeling each actual and simulated robotic movies and permitting information augmentation on precise robotic movies through individualized stylization.

Key Options

- To attain high-quality but versatile video synthesis with out requiring gradient updates on the pretrained mannequin, Video Adapter combines the scores of a pretrained text-to-video mannequin with the scores of a domain-specific tiny mannequin (with 1% parameters) at sampling time.

- Pretrained video fashions will be simply tailored utilizing Video Adapter to films of people and robotic information.

- Underneath the identical variety of TPU hours, Video Adapter will get increased FVD, FID, and Inception Scores than the pretrained and task-specific fashions.

- Potential makes use of for video adapters vary from use in anime manufacturing to area randomization to bridge the simulation-reality hole in robotics.

- Versus an enormous video mannequin pretrained from web information, Video Adapter requires coaching a tiny domain-specific text-to-video mannequin with orders of magnitude fewer parameters. Video Adapter achieves high-quality and adaptable video synthesis by composing the pretrained and domain-specific video mannequin scores throughout sampling.

- With Video Adapter, you might give a video a singular look utilizing a mannequin solely uncovered to 1 sort of animation.

- Utilizing a Video Adapter, a pretrained mannequin of appreciable measurement can tackle the visible traits of an animation mannequin of a a lot smaller measurement.

- With the assistance of a Video Adapter, an enormous pre-trained mannequin can tackle the visible aesthetic of a diminutive Sci-Fi animation mannequin.

- Video Adapters could generate varied films in varied genres and kinds, together with movies with selfish motions primarily based on manipulation and navigation, movies with individualized genres like animation and science fiction, and movies with simulated and real robotic motions.

Limitations

A small video mannequin nonetheless must be skilled on domain-specific information; due to this fact, whereas Video Adapter can successfully adapt massive pretrained text-to-video fashions, it’s not training-free. One other distinction between Video Adapter and different text-to-image and text-to-video APIs is that it requires the rating to be output alongside the ensuing video. Video Adapter successfully makes text-to-video analysis extra accessible to small industrial and educational establishments by addressing the shortage of free entry to mannequin weights and computing effectivity.

To sum it up

It’s apparent that when text-to-video basis fashions increase in measurement, they’ll must be successfully tailored to task-specific utilization. Researchers have developed Video Adapter, a strong methodology for producing area and task-specific movies by using enormous pretrained text-to-video fashions as a probabilistic prior. Video Adapters could synthesize high-quality movies in specialised disciplines or desired aesthetics with out requiring extra fine-tuning of the huge pretrained mannequin.

Examine Out The Paper and Github. Don’t overlook to affix our 23k+ ML SubReddit, Discord Channel, and Email Newsletter, the place we share the newest AI analysis information, cool AI initiatives, and extra. You probably have any questions concerning the above article or if we missed something, be happy to e mail us at Asif@marktechpost.com

🚀 Check Out 100’s AI Tools in AI Tools Club

Dhanshree Shenwai is a Pc Science Engineer and has a very good expertise in FinTech corporations masking Monetary, Playing cards & Funds and Banking area with eager curiosity in functions of AI. She is keen about exploring new applied sciences and developments in at present’s evolving world making everybody’s life simple.