Accelerate machine learning time to value with Amazon SageMaker JumpStart and PwC’s MLOps accelerator

This is a guest blog post co-written with Vik Pant and Kyle Bassett from PwC.

As organizations increasingly invest in machine learning (ML), ML adoption has become an integral part of business transformation strategies. A recent PwC CEO survey revealed that 84% of Canadian CEOs agree that artificial intelligence (AI) will significantly change their business within the next 5 years, making this technology more critical than ever. However, putting ML into production comes with various considerations, notably the ability to navigate the world of AI safely, strategically, and responsibly. One of the first steps toward becoming AI powered, and one of the greatest challenges, is effectively developing ML pipelines that can scale sustainably in the cloud. Thinking of ML in terms of pipelines that generate and maintain models, rather than in terms of the models themselves, helps build versatile and resilient prediction systems that are better able to withstand meaningful changes in relevant data over time.

Many organizations start their journey into the world of ML with a model-centric viewpoint. In the early stages of building an ML practice, the focus is on training supervised ML models, which are mathematical representations of relationships between inputs (independent variables) and outputs (dependent variables) that are learned from data (typically historical). Models are mathematical artifacts that take input data, perform calculations and computations on it, and generate predictions or inferences.

Although this approach is a reasonable and relatively simple starting point, it isn’t inherently scalable or intrinsically sustainable because of the manual and ad hoc nature of model training, tuning, testing, and trialing activities. Organizations with greater maturity in the ML domain adopt an ML operations (MLOps) paradigm that incorporates continuous integration, continuous delivery, continuous deployment, and continuous training. Central to this paradigm is a pipeline-centric viewpoint for developing and operating industrial-strength ML systems.

In this post, we start with an overview of MLOps and its benefits, describe a solution that simplifies its implementation, and provide details on the architecture. We finish with a case study highlighting the benefits realized by a large AWS and PwC customer who implemented this solution.

Background

An MLOps pipeline is a set of interrelated sequences of steps that are used to build, deploy, operate, and manage multiple ML models in production. Such a pipeline encompasses the stages involved in building, testing, tuning, and deploying ML models, including but not limited to data preparation, feature engineering, model training, evaluation, deployment, and monitoring. As such, an ML model is the product of an MLOps pipeline, and a pipeline is a workflow for creating multiple ML models. Such pipelines support structured and systematic processes for building, calibrating, assessing, and implementing ML models, and the models themselves generate predictions and inferences. By automating the development and operationalization of pipeline stages, organizations can reduce the time to delivery of models, increase the stability of models in production, and improve collaboration between teams of data scientists, software engineers, and IT administrators.
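The stage-by-stage structure described above can be sketched in plain Python. This is a minimal, hypothetical illustration, not the accelerator’s code and not the SageMaker Pipelines API: each stage is a named function over a shared context, a toy least-squares model stands in for real training, and an evaluation gate decides whether the model would be deployed. The step names, the `max_mae` threshold, and the sample data are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A step receives the shared pipeline context and returns it, possibly enriched.
Step = Callable[[Dict], Dict]


@dataclass
class Pipeline:
    """Minimal sequential pipeline: named steps run in order over one context."""
    steps: List[Tuple[str, Step]] = field(default_factory=list)

    def add(self, name: str, step: Step) -> "Pipeline":
        self.steps.append((name, step))
        return self

    def run(self, context: Dict) -> Dict:
        for name, step in self.steps:
            context = step(context)
            context.setdefault("completed", []).append(name)  # audit trail of finished steps
        return context


def prepare_data(ctx: Dict) -> Dict:
    # Data preparation: drop records with missing values.
    ctx["rows"] = [r for r in ctx["raw"] if None not in r]
    return ctx


def engineer_features(ctx: Dict) -> Dict:
    # Feature engineering: one numeric feature x and a numeric target y.
    ctx["xs"] = [float(x) for x, _ in ctx["rows"]]
    ctx["ys"] = [float(y) for _, y in ctx["rows"]]
    return ctx


def train(ctx: Dict) -> Dict:
    # Training: ordinary least squares for y = a*x + b (a toy stand-in model).
    xs, ys = ctx["xs"], ctx["ys"]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature has no variance; cannot fit a slope")
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    ctx["model"] = (a, my - a * mx)
    return ctx


def evaluate(ctx: Dict) -> Dict:
    # Evaluation: mean absolute error (on training data here; use a held-out split in practice).
    a, b = ctx["model"]
    errors = [abs(a * x + b - y) for x, y in zip(ctx["xs"], ctx["ys"])]
    ctx["mae"] = sum(errors) / len(errors)
    return ctx


def deploy_gate(ctx: Dict) -> Dict:
    # Deployment gate: only mark the model deployable if it meets the quality bar.
    ctx["deployed"] = ctx["mae"] <= ctx["max_mae"]
    return ctx


pipeline = (
    Pipeline()
    .add("prepare", prepare_data)
    .add("features", engineer_features)
    .add("train", train)
    .add("evaluate", evaluate)
    .add("deploy_gate", deploy_gate)
)
result = pipeline.run({"raw": [(1, 2), (2, 4), (3, None), (4, 8)], "max_mae": 0.5})
print(result["model"], result["deployed"], result["completed"])
```

Because every stage is a separate, named unit, stages can be rerun, replaced, or scheduled independently, which is the property that lets a managed orchestrator automate the same flow in production.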

Solution overview

AWS offers a comprehensive portfolio of cloud-native services for developing and running MLOps pipelines in a scalable and sustainable manner. Amazon SageMaker is a fully managed MLOps service with a comprehensive set of capabilities that enable developers to create, train, deploy, operate, and manage ML models in the cloud. SageMaker covers the entire MLOps workflow, from collecting and preparing data to training models with built-in high-performance algorithms and sophisticated automated ML (AutoML) experiments, so that companies can choose specific models that fit their business priorities and preferences. SageMaker enables organizations to collaboratively automate the majority of their MLOps lifecycle so that they can focus on business outcomes without risking project delays or escalating costs, and without worrying about the infrastructure, development, and maintenance associated with powering industrial-strength prediction services.

SageMaker includes Amazon SageMaker JumpStart, which offers out-of-the-box solution patterns for organizations seeking to accelerate their MLOps journey. Organizations can start with pre-trained and open-source models that can be fine-tuned to meet their specific needs through retraining and transfer learning. Additionally, JumpStart provides solution templates designed to address common use cases, as well as example Jupyter notebooks with prewritten starter code. These resources can be accessed by simply visiting the JumpStart landing page within Amazon SageMaker Studio.

PwC has built a prepackaged MLOps accelerator that further speeds up time to value and increases return on investment for organizations that use SageMaker. This MLOps accelerator enhances the native capabilities of JumpStart by integrating complementary AWS services. With a comprehensive suite of technical artifacts, including infrastructure as code (IaC) scripts, data processing workflows, service integration code, and pipeline configuration templates, PwC’s MLOps accelerator simplifies the process of developing and operating production-class prediction systems.

Architecture overview

The architecture of the PwC MLOps accelerator prioritizes cloud-native serverless services from AWS. The entry point into this accelerator is any collaboration tool, such as Slack, that a data scientist or data engineer can use to request an AWS environment for MLOps. Such a request is parsed and then fully or semi-automatically approved using workflow features in that collaboration tool. After a request is approved, its details are used to parameterize IaC templates. The source code for these IaC templates is managed in AWS CodeCommit. The parameterized IaC templates are submitted to AWS CloudFormation for modeling, provisioning, and managing stacks of AWS and third-party resources.
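The request-to-stack flow can be sketched as follows, assuming a request has already been parsed from the collaboration tool into a dictionary. The request fields, the approval rule (low-risk environments approved automatically, others requiring a named approver), and the template parameter names `ProjectName`, `EnvironmentType`, and `OwnerEmail` are hypothetical; only the `ParameterKey`/`ParameterValue` list shape and the `CAPABILITY_NAMED_IAM` capability reflect what the CloudFormation `CreateStack` API accepts. The sketch only builds the request and does not call AWS.

```python
import re
from typing import Dict, List, Optional

# Assumption: these environments are low risk and are approved fully automatically.
AUTO_APPROVE_ENVIRONMENTS = {"sandbox", "dev"}


def approve(request: Dict) -> bool:
    """Fully automatic approval for low-risk environments, otherwise semi-automatic
    (a named approver must have signed off in the collaboration tool)."""
    if request["environment"] in AUTO_APPROVE_ENVIRONMENTS:
        return True
    return bool(request.get("approved_by"))


def stack_name(request: Dict) -> str:
    # Stack names allow only letters, digits, and hyphens, must start with a
    # letter ("mlops-" guarantees that), and are limited to 128 characters.
    raw = f"mlops-{request['project']}-{request['environment']}"
    return re.sub(r"[^A-Za-z0-9-]", "-", raw)[:128]


def to_cfn_parameters(request: Dict) -> List[Dict[str, str]]:
    # Hypothetical template parameter names mapped from request fields.
    values = {
        "ProjectName": request["project"],
        "EnvironmentType": request["environment"],
        "OwnerEmail": request["requester"],
    }
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def build_stack_request(request: Dict) -> Optional[Dict]:
    """Return keyword arguments for a CreateStack call, or None if not approved."""
    if not approve(request):
        return None
    return {
        "StackName": stack_name(request),
        "Parameters": to_cfn_parameters(request),
        "Capabilities": ["CAPABILITY_NAMED_IAM"],  # templates that create named IAM roles need this
    }


stack = build_stack_request(
    {"requester": "ana@example.com", "project": "claims_risk", "environment": "dev"}
)
print(stack)
# With credentials and a template URL, one might then call (not executed here):
# boto3.client("cloudformation").create_stack(TemplateURL=..., **stack)
```

Keeping approval and parameter mapping as pure functions makes them easy to unit test separately from the provisioning call itself.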

The following diagram illustrates the workflow.

After AWS CloudFormation provisions an environment for MLOps on AWS, the environment is ready for use by data scientists, data engineers, and their collaborators. The PwC accelerator includes predefined roles in AWS Identity and Access Management (IAM) that are related to MLOps activities and tasks. These roles specify the services and resources in the MLOps environment that various users can access based on their job profiles. After accessing the MLOps environment, users can work in any of the SageMaker modalities to perform their tasks, including SageMaker notebook instances, Amazon SageMaker Autopilot experiments, and Studio. You can benefit from all SageMaker features and capabilities, including model training, tuning, evaluation, deployment, and monitoring.
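Persona-based roles of this kind are ultimately expressed as IAM policy documents. The following sketch generates one identity-based policy per job profile. The persona names, the action lists, and the bucket name are assumptions for illustration rather than the accelerator’s actual role definitions, and a production policy would scope `Resource` to specific ARNs instead of `*`.

```python
import json
from typing import Dict, List

# Hypothetical mapping from job profile to the SageMaker actions that profile needs.
PERSONA_ACTIONS: Dict[str, List[str]] = {
    "data_scientist": [
        "sagemaker:CreatePresignedDomainUrl",     # open Studio
        "sagemaker:CreateTrainingJob",
        "sagemaker:CreateHyperParameterTuningJob",
        "sagemaker:CreateAutoMLJob",              # Autopilot experiments
        "sagemaker:Describe*",
    ],
    "ml_engineer": [
        "sagemaker:CreateModel",
        "sagemaker:CreateEndpointConfig",
        "sagemaker:CreateEndpoint",
        "sagemaker:UpdateEndpoint",
        "sagemaker:Describe*",
    ],
}


def policy_for(persona: str, data_bucket: str) -> Dict:
    """Build an IAM identity-based policy document for one job profile."""
    if persona not in PERSONA_ACTIONS:
        raise ValueError(f"unknown persona: {persona}")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "SageMakerTasks",
                "Effect": "Allow",
                "Action": PERSONA_ACTIONS[persona],
                "Resource": "*",  # narrow to specific ARNs in a real deployment
            },
            {
                "Sid": "ProjectData",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{data_bucket}/*",  # objects in the project bucket only
            },
        ],
    }


ds_policy = policy_for("data_scientist", "example-mlops-data")
print(json.dumps(ds_policy, indent=2))
```

Generating policies from a single mapping keeps the separation between profiles explicit: in this sketch, a data scientist can launch Autopilot and training jobs but cannot create production endpoints.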

The accelerator also includes connections with Amazon DataZone for sharing, searching, and discovering data at scale across organizational boundaries to generate and enrich models. Similarly, data for training, testing, validating, and detecting model drift can be sourced from a variety of services, including Amazon Redshift, Amazon Relational Database Service (Amazon RDS), Amazon Elastic File System (Amazon EFS), and Amazon Simple Storage Service (Amazon S3). Prediction systems can be deployed in many ways, including as SageMaker endpoints directly, SageMaker endpoints wrapped in AWS Lambda functions, and SageMaker endpoints invoked through custom code on Amazon Elastic Kubernetes Service (Amazon EKS) or Amazon Elastic Compute Cloud (Amazon EC2). Amazon CloudWatch is used to monitor the MLOps environment on AWS comprehensively, observing alarms, logs, and event data from across the entire stack (applications, infrastructure, network, and services).
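As an illustration of the second deployment option, a Lambda function can validate a request and forward it to a SageMaker endpoint through the `invoke_endpoint` operation of the boto3 `sagemaker-runtime` client. This is a minimal sketch: the `ENDPOINT_NAME` environment variable, the `features` event field, and the CSV payload format are assumptions that depend on the deployed model container. A stub client is injected at the end so the handler can be exercised locally without AWS credentials.

```python
import io
import json
import os

_runtime = None  # cached client, reused across warm Lambda invocations


def _get_runtime():
    global _runtime
    if _runtime is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        _runtime = boto3.client("sagemaker-runtime")
    return _runtime


def handler(event, context, runtime=None):
    """Validate the input, call the SageMaker endpoint, and return a JSON response."""
    features = event.get("features")  # hypothetical event field
    if not isinstance(features, list) or not features:
        return {"statusCode": 400,
                "body": json.dumps({"error": "features must be a non-empty list"})}
    client = runtime if runtime is not None else _get_runtime()
    response = client.invoke_endpoint(
        EndpointName=os.environ.get("ENDPOINT_NAME", "example-endpoint"),  # hypothetical name
        ContentType="text/csv",  # assumption: the model container accepts CSV rows
        Body=",".join(str(f) for f in features),
    )
    prediction = response["Body"].read().decode("utf-8")
    return {"statusCode": 200, "body": json.dumps({"prediction": prediction})}


class _StubRuntime:
    """Stands in for the boto3 client so the handler runs without AWS."""

    def __init__(self):
        self.calls = []

    def invoke_endpoint(self, **kwargs):
        self.calls.append(kwargs)
        return {"Body": io.BytesIO(b"0.87")}  # mimics the streaming response body


stub = _StubRuntime()
local_result = handler({"features": [1, 2.5, 3]}, None, runtime=stub)
print(local_result)
```

Wrapping the endpoint this way keeps input validation and response shaping outside the model container, at the cost of an additional network hop.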

The following diagram illustrates this architecture.

Case study

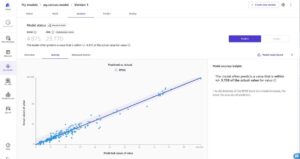

In this section, we share an illustrative case study from a large insurance company in Canada. It focuses on the transformative impact of implementing PwC Canada’s MLOps accelerator and JumpStart templates.

This client partnered with PwC Canada and AWS to address challenges with inefficient model development and ineffective deployment processes, a lack of consistency and collaboration, and difficulty scaling ML models. Implementing this MLOps accelerator in concert with JumpStart templates achieved the following:

- End-to-end automation – Automation nearly halved the time spent on data preprocessing, model training, hyperparameter tuning, and model deployment and monitoring

- Collaboration and standardization – Standardized tools and frameworks that promote consistency across the organization nearly doubled the rate of model innovation

- Model governance and compliance – They implemented a model governance framework to ensure that all ML models met regulatory requirements and adhered to the company’s ethical guidelines, which reduced risk management costs by 40%

- Scalable cloud infrastructure – They invested in scalable infrastructure to effectively manage massive data volumes and deploy multiple ML models simultaneously, reducing infrastructure and platform costs by 50%

- Rapid deployment – The prepackaged solution reduced time to production by 70%

By delivering MLOps best practices through rapid deployment packages, our client was able to de-risk their MLOps implementation and unlock the full potential of ML for a range of business functions, such as risk prediction and asset pricing. Overall, the synergy between the PwC MLOps accelerator and JumpStart enabled our client to streamline, scale, secure, and sustain their data science and data engineering activities.

Note that the PwC and AWS solution is not industry specific; it is relevant across industries and sectors.

Conclusion

SageMaker and its accelerators allow organizations to enhance the productivity of their ML programs. There are many benefits, including but not limited to the following:

- Collaboratively create IaC, MLOps, and AutoML use cases to realize business benefits from standardization

- Enable efficient experimental prototyping, with and without code, to turbocharge AI from development to deployment with IaC, MLOps, and AutoML

- Automate tedious, time-consuming tasks such as feature engineering and hyperparameter tuning with AutoML

- Employ a continuous model monitoring paradigm to align the risk of ML model usage with enterprise risk appetite
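One way to make the risk-appetite alignment concrete is to compare the distribution of a model input or score in production against its training baseline, and to flag the model for review when drift exceeds an agreed threshold. The sketch below uses the population stability index (PSI), the sum over bins of (actual − expected) × ln(actual / expected). PSI is a common drift metric chosen here for illustration; the post does not state which metrics the accelerator uses. The bin edges, the sample data, and the 0.25 threshold (a widely used rule of thumb for a significant shift) are assumptions; in practice the threshold would come from the organization’s risk appetite.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    eps: float = 1e-6) -> List[float]:
    """Share of values in each bin; interior edges define len(edges) + 1 bins."""
    if not values:
        raise ValueError("values must be non-empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # eps keeps empty bins from causing log(0) or division by zero.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               edges: Sequence[float]) -> float:
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def needs_review(baseline: Sequence[float], current: Sequence[float],
                 edges: Sequence[float], risk_threshold: float = 0.25) -> bool:
    """True when drift exceeds the threshold set by the organization's risk appetite."""
    return population_stability_index(baseline, current, edges) > risk_threshold


edges = [1.0, 2.0, 3.0]
baseline = [0.5, 1.5, 2.5, 3.5] * 25   # evenly spread across four bins
shifted = [2.5, 3.5] * 50              # production data concentrated in the upper bins
print(population_stability_index(baseline, shifted, edges), needs_review(baseline, shifted, edges))
```

A scheduled job could run such a check on each new batch of production data and raise an alarm or open a retraining request when `needs_review` returns `True`.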

Please contact the authors of this post, AWS Advisory Canada, or PwC Canada to learn more about JumpStart and PwC’s MLOps accelerator.

About the Authors

Vik is a Partner in the Cloud & Data practice at PwC Canada. He earned a PhD in Information Science from the University of Toronto. He is convinced that there is a telepathic connection between his biological neural network and the artificial neural networks that he trains on SageMaker. Connect with him on LinkedIn.

Kyle is a Partner in the Cloud & Data practice at PwC Canada. Along with his crack team of tech alchemists, he weaves enchanting MLOps solutions that mesmerize clients with accelerated business value. Armed with the power of artificial intelligence and a sprinkle of wizardry, Kyle turns complex challenges into digital fairy tales, making the impossible possible. Connect with him on LinkedIn.

Francois is a Principal Advisory Consultant with AWS Professional Services Canada and the Canadian practice lead for Data and Innovation Advisory. He guides customers in establishing and implementing their overall cloud journey and their data programs, focusing on vision, strategy, business drivers, governance, target operating models, and roadmaps. Connect with him on LinkedIn.
