Accelerating machine learning prototyping with interactive tools – Google AI Blog

Recent deep learning advances have enabled a plethora of high-performance, real-time multimedia applications based on machine learning (ML). Examples include human body segmentation for video and teleconferencing, depth estimation for 3D reconstruction, hand and body tracking for interaction, and audio processing for remote communication.

However, developing and iterating on these ML-based multimedia prototypes can be challenging and costly. It usually involves a cross-functional team of ML practitioners who fine-tune the models, evaluate robustness, characterize strengths and weaknesses, inspect performance in the end-use context, and develop the applications. Moreover, models are frequently updated and require repeated integration efforts before evaluation can take place, which makes the workflow ill-suited to design and experimentation.

In “Rapsai: Accelerating Machine Learning Prototyping of Multimedia Applications through Visual Programming”, presented at CHI 2023, we describe a visual programming platform for rapid and iterative development of end-to-end ML-based multimedia applications. Visual Blocks for ML, formerly called Rapsai, provides a no-code graph-building experience through its node-graph editor. Users create components (nodes) and connect them to build an ML pipeline quickly, then see the results in real time without writing any code. We show how this platform improves model evaluation in two ways: through interactive characterization and visualization of ML model performance, and through interactive data augmentation and comparison. Sign up to be notified when Visual Blocks for ML is publicly available.


Visual Blocks uses a node-graph editor that facilitates rapid prototyping of ML-based multimedia applications.
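The following TypeScript sketch shows one way such a graph could be evaluated. It is not Visual Blocks source code. The `GraphNode` shape and the `evaluateGraph` function are hypothetical, and plain numbers stand in for the images, audio buffers and tensors that real nodes would pass along. Each node runs once, after all of its upstream nodes, in the order produced by a topological sort (Kahn's algorithm).

```typescript
// Hypothetical node-graph model (not the Visual Blocks API). Numbers stand in
// for the images, audio buffers, or tensors that real nodes would exchange.
type NodeFn = (inputs: number[]) => number;

interface GraphNode {
  id: string;
  inputs: string[]; // ids of upstream nodes, in argument order
  run: NodeFn;
}

// Evaluate each node once, after all of its inputs (Kahn's topological sort).
function evaluateGraph(nodes: GraphNode[]): Map<string, number> {
  const byId = new Map<string, GraphNode>();
  for (const n of nodes) byId.set(n.id, n);

  // Count unresolved inputs per node and record which nodes consume each output.
  const pending = new Map<string, number>();
  const consumers = new Map<string, string[]>();
  for (const n of nodes) {
    pending.set(n.id, n.inputs.length);
    for (const src of n.inputs) {
      if (!byId.has(src)) throw new Error(`node ${n.id} has unknown input ${src}`);
      const list = consumers.get(src) ?? [];
      list.push(n.id);
      consumers.set(src, list);
    }
  }

  // Source nodes (e.g., a camera or microphone) have no inputs and run first.
  const ready = nodes.filter((n) => n.inputs.length === 0).map((n) => n.id);
  const outputs = new Map<string, number>();
  while (ready.length > 0) {
    const id = ready.shift()!;
    const node = byId.get(id)!;
    const args = node.inputs.map((src) => outputs.get(src)!);
    outputs.set(id, node.run(args));
    // Release downstream nodes whose inputs are now all available.
    for (const next of consumers.get(id) ?? []) {
      const left = pending.get(next)! - 1;
      pending.set(next, left);
      if (left === 0) ready.push(next);
    }
  }
  // Nodes that never ran sit on, or downstream of, a cycle.
  if (outputs.size !== nodes.length) throw new Error("graph contains a cycle");
  return outputs;
}

// Usage: two source nodes feed a combining node, which feeds a scaling node.
const graph: GraphNode[] = [
  { id: "sum", inputs: ["camera", "gain"], run: ([a, b]) => a + b },
  { id: "camera", inputs: [], run: () => 2 },
  { id: "gain", inputs: [], run: () => 3 },
  { id: "scaled", inputs: ["sum"], run: ([s]) => s * 10 },
];
const out = evaluateGraph(graph);
console.log(out.get("scaled")); // 50
```

This sketch re-evaluates the whole graph. A real-time editor would likely go further, for example by re-running only the nodes downstream of a changed input.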

Formative study: Design goals for rapid ML prototyping

To better understand the challenges of existing rapid-prototyping ML solutions (LIME, VAC-CNN, EnsembleMatrix), we conducted a formative study using a conceptual mock-up interface. A formative study gathers feedback from potential users early in the design process of a technology product or system. Study participants included seven computer vision researchers, audio ML researchers, and engineers across three ML teams.


The formative study used a conceptual mock-up interface to gather early insights.

Through this formative study, we identified six challenges commonly found in existing prototyping solutions:

- The input used to evaluate models typically differs from in-the-wild input from actual users in resolution, aspect ratio, or sampling rate.

- Participants could not quickly and interactively alter the input data or tune the model.

- Researchers optimize the model with quantitative metrics on a fixed dataset, but judging real-world performance requires human reviewers working in the application context.

- It is difficult to compare versions of the model, and cumbersome to share the best version with other team members so they can try it.

- Once a model is selected, it can be time-consuming for a team to build a bespoke prototype that showcases it.

- Ultimately, the model is just one part of a larger real-time pipeline, and participants want to examine intermediate results to find the bottleneck.
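The first challenge is a mismatch in resolution and aspect ratio between test data and live input. A common way to handle it is letterboxing: scale a frame to fit the model's fixed input size without distortion, then pad the remaining space. The sketch below assumes a model that expects a fixed `dstW` × `dstH` input. `letterbox` and `toSource` are hypothetical helper names and are not part of Visual Blocks or TensorFlow.js.

```typescript
// Hypothetical helpers (not a Visual Blocks or TensorFlow.js API) for fitting a
// live frame into a model's fixed input size without distorting it.
interface Letterbox {
  scale: number;  // factor applied to the source frame
  width: number;  // scaled content width, in model-input pixels
  height: number; // scaled content height, in model-input pixels
  padX: number;   // left padding, in model-input pixels
  padY: number;   // top padding, in model-input pixels
}

function letterbox(srcW: number, srcH: number, dstW: number, dstH: number): Letterbox {
  if (srcW <= 0 || srcH <= 0 || dstW <= 0 || dstH <= 0) {
    throw new Error("all sizes must be positive");
  }
  // The smaller ratio makes the whole frame fit, so nothing is cropped.
  const scale = Math.min(dstW / srcW, dstH / srcH);
  const width = Math.round(srcW * scale);
  const height = Math.round(srcH * scale);
  // Center the content and split the leftover space into padding.
  const padX = Math.floor((dstW - width) / 2);
  const padY = Math.floor((dstH - height) / 2);
  return { scale, width, height, padX, padY };
}

// Map a point predicted in model-input space back to source-frame pixels.
function toSource(x: number, y: number, box: Letterbox): [number, number] {
  return [(x - box.padX) / box.scale, (y - box.padY) / box.scale];
}

// Usage: a 1280x720 webcam frame fed to a model that expects 256x256 input.
const box = letterbox(1280, 720, 256, 256);
console.log(box); // scale 0.2, content 256x144, padX 0, padY 56
const [sx, sy] = toSource(128, 128, box); // center of the model input
console.log(sx, sy); // approximately 640 360
```

The scale and padding need to be kept because model outputs such as keypoints or masks must be mapped back to camera coordinates before they are drawn over the live video.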

These challenges shaped the development of the Visual Blocks system around six design goals: (1) develop a visual programming platform for rapidly building ML prototypes, (2) support real-time, in-the-wild multimedia user input, (3) provide interactive data augmentation, (4) compare model outputs side by side, (5) share visualizations with minimal effort, and (6) provide off-the-shelf models and datasets.

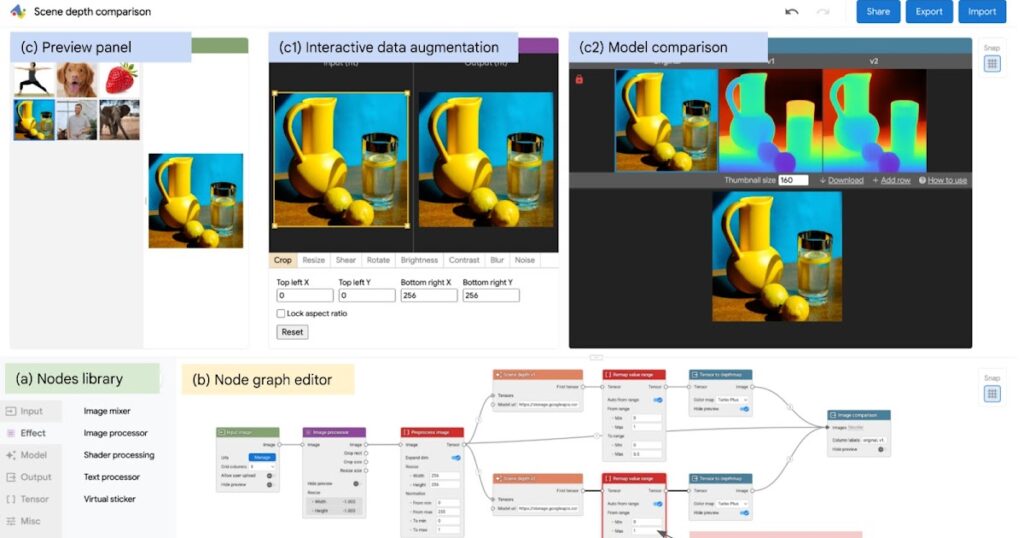

Node-graph editor for visually programming ML pipelines

Visual Blocks is mainly written in JavaScript. It uses TensorFlow.js and TensorFlow Lite for ML capabilities and three.js for graphics rendering. The interface lets users rapidly build and interact with ML models through three coordinated views:

- A Nodes Library that contains over 30 nodes (e.g., Image Processing, Body Segmentation, Image Comparison) and a search bar for filtering.
- A Node-graph Editor in which users build and adjust a multimedia pipeline by dragging and adding nodes from the Nodes Library.
- A Preview Panel that visualizes the pipeline's input and output, alters the input and intermediate results, and visually compares different models.


The visual programming interface allows users to quickly develop and evaluate ML models by composing and previewing node-graphs with real-time results.
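For a Preview Panel to show intermediate results, the pipeline has to keep each stage's output rather than only the final one. The following hypothetical sketch shows that idea for a linear chain of stages. `Stage`, `runWithInspection` and `slowestStage` are illustrative names. Timing uses `Date.now()`, which has millisecond resolution, so the snippet runs in both browsers and Node.js.

```typescript
// Hypothetical sketch: run a linear chain of stages, keeping every intermediate
// output and its elapsed time so a preview UI could display both.
interface Stage<T> {
  name: string;
  run: (input: T) => T;
}

interface StageRecord<T> {
  name: string;
  output: T;
  ms: number; // wall-clock time for this stage (Date.now() resolution)
}

function runWithInspection<T>(input: T, stages: Stage<T>[]): StageRecord<T>[] {
  const records: StageRecord<T>[] = [];
  let current = input;
  for (const stage of stages) {
    const start = Date.now();
    current = stage.run(current);
    records.push({ name: stage.name, output: current, ms: Date.now() - start });
  }
  return records;
}

// The slowest stage is the first candidate when the pipeline misses its frame budget.
function slowestStage<T>(records: StageRecord<T>[]): string | undefined {
  let worst: StageRecord<T> | undefined;
  for (const r of records) {
    if (worst === undefined || r.ms > worst.ms) worst = r;
  }
  return worst?.name;
}

// Usage: pixel intensities pass through a brightness node and a threshold node.
const stages: Stage<number[]>[] = [
  { name: "brighten", run: (px) => px.map((v) => Math.min(255, v + 10)) },
  { name: "threshold", run: (px) => px.map((v) => (v > 128 ? 255 : 0)) },
];
const records = runWithInspection([100, 130, 50], stages);
for (const r of records) console.log(r.name, r.output, `${r.ms} ms`);
console.log("slowest:", slowestStage(records));
```

Recording per-stage timings next to the outputs also speaks to the bottleneck question raised in the formative study: the stage with the largest time is the first place to look.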

Iterative design, development, and evaluation of unique rapid-prototyping capabilities

Over the last year, we have been iteratively designing and improving the Visual Blocks platform. In weekly feedback sessions, the three ML teams from the formative study valued the platform's unique capabilities. They also saw its potential to accelerate ML prototyping through:

- Support for various types of input data (image, video, audio) and output modalities (graphics, sound).

- A library of pre-trained ML models for common tasks (body segmentation, landmark detection, portrait depth estimation) and options to import custom models.

- Interactive data augmentation and manipulation with drag-and-drop operations and parameter sliders.

- Side-by-side comparison of multiple models and inspection of their outputs at different stages of the pipeline.

- Quick publishing and sharing of multimedia pipelines directly to the web.
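To publish a pipeline to the web, its nodes, their parameters and the connections between them need a serializable description. The post does not specify the format Visual Blocks uses. The `PipelineSpec` schema below, including its `version` field and node `type` strings, is an assumption for illustration. Validating edges on load catches links to nodes that are missing from a shared file.

```typescript
// Hypothetical schema for sharing a pipeline as JSON; the field names, the
// node type strings, and the version number are assumptions, not Visual Blocks'.
interface NodeSpec {
  id: string;
  type: string;
  params: Record<string, number | string | boolean>;
}

interface EdgeSpec {
  from: string; // id of the producing node
  to: string;   // id of the consuming node
}

interface PipelineSpec {
  version: 1;
  nodes: NodeSpec[];
  edges: EdgeSpec[];
}

function serializePipeline(spec: PipelineSpec): string {
  return JSON.stringify(spec);
}

// Parse a shared pipeline and reject files that would not load cleanly.
function parsePipeline(json: string): PipelineSpec {
  const data = JSON.parse(json) as PipelineSpec;
  if (data.version !== 1) throw new Error("unsupported pipeline version");
  const ids = new Set(data.nodes.map((n) => n.id));
  if (ids.size !== data.nodes.length) throw new Error("duplicate node id");
  for (const e of data.edges) {
    if (!ids.has(e.from) || !ids.has(e.to)) {
      throw new Error(`edge ${e.from} -> ${e.to} references a missing node`);
    }
  }
  return data;
}

// Usage: webcam -> segmentation -> preview, round-tripped through JSON.
const spec: PipelineSpec = {
  version: 1,
  nodes: [
    { id: "cam", type: "webcam", params: { width: 640, height: 480 } },
    { id: "seg", type: "body-segmentation", params: { threshold: 0.5 } },
    { id: "out", type: "preview", params: { showMask: true } },
  ],
  edges: [
    { from: "cam", to: "seg" },
    { from: "seg", to: "out" },
  ],
};
const shared = serializePipeline(spec);
const loaded = parsePipeline(shared);
console.log(loaded.nodes.length, loaded.edges.length); // 3 2
```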

Evaluation: Four case studies

To evaluate the usability and effectiveness of Visual Blocks, we conducted four case studies with 15 ML practitioners. They used the platform to prototype different multimedia applications: portrait depth with relighting effects, scene depth with visual effects, alpha matting for virtual conferences, and audio denoising for communication.


The system streamlines the comparison of two Portrait Depth models, including customized visualization and effects.

After a short introduction and video tutorial, participants quickly identified differences between the models and selected the better model for their use case. We found that Visual Blocks helped participants understand model benefits and trade-offs more quickly and more deeply:

“It gives me intuition about which data augmentation operations that my model is more sensitive [to], then I can go back to my training pipeline, maybe increase the amount of data augmentation for those specific steps that are making my model more sensitive.” (Participant 13)

“It’s a fair amount of work to add some background noise, I have a script, but then every time I have to find that script and modify it. I’ve always done this in a one-off way. It’s simple but also very time consuming. This is very convenient.” (Participant 15)


The system allows researchers to compare multiple Portrait Depth models at different noise levels, helping ML practitioners identify the strengths and weaknesses of each.
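Comparing models at different noise levels works like this: perturb one input with increasing noise, run every candidate model on the identical perturbed input, and tabulate an error metric. The sketch below makes several assumptions: zero-mean Gaussian noise, mean absolute error as the metric, and two toy "models" (an identity pass-through and a three-tap smoother) standing in for real Portrait Depth models. `compareModels` and the seeded `mulberry32` generator are illustrative choices, not the system's implementation.

```typescript
// Hypothetical robustness sketch: toy "models" stand in for real Portrait Depth
// models, the noise is zero-mean Gaussian, and the metric is mean absolute error.
type Model = (input: number[]) => number[];

// mulberry32: a small seeded PRNG, so every model sees exactly the same noise.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Add Gaussian noise with standard deviation sigma (Box-Muller transform).
function addNoise(signal: number[], sigma: number, rand: () => number): number[] {
  return signal.map((x) => {
    const u = 1 - rand(); // in (0, 1], so Math.log(u) is finite
    const v = rand();
    return x + sigma * Math.sqrt(-2 * Math.log(u)) * Math.cos(2 * Math.PI * v);
  });
}

function meanAbsError(a: number[], b: number[]): number {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += Math.abs(a[i] - b[i]);
  return sum / a.length;
}

// One row per noise level, one column per model.
function compareModels(
  clean: number[],
  models: Record<string, Model>,
  sigmas: number[],
  seed = 42,
): Record<string, number>[] {
  return sigmas.map((sigma) => {
    const noisy = addNoise(clean, sigma, mulberry32(seed)); // shared by all models
    const row: Record<string, number> = { sigma };
    for (const name of Object.keys(models)) {
      row[name] = meanAbsError(models[name](noisy), clean);
    }
    return row;
  });
}

// Usage: a pass-through model versus a three-tap moving-average smoother.
const identity: Model = (x) => x.slice();
const smooth: Model = (x) =>
  x.map((_, i) => {
    const lo = Math.max(0, i - 1);
    const hi = Math.min(x.length - 1, i + 1);
    let s = 0;
    for (let j = lo; j <= hi; j++) s += x[j];
    return s / (hi - lo + 1);
  });
const clean = new Array<number>(64).fill(0.5);
const rows = compareModels(clean, { identity, smooth }, [0, 0.05, 0.2]);
for (const row of rows) console.log(row);
```

Seeding the generator keeps the comparison fair. Any difference between columns in a row then comes from the models, not from different noise draws.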

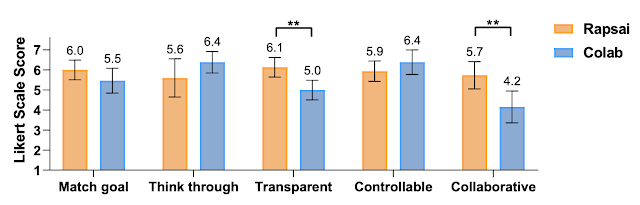

In a post-hoc survey using a seven-point Likert scale, participants rated Visual Blocks as more transparent than Colab about how it arrives at its final results (Visual Blocks 6.13 ± 0.88 vs. Colab 5.0 ± 0.88, p < .005). They also rated it as more collaborative with users in producing the outputs (Visual Blocks 5.73 ± 1.23 vs. Colab 4.15 ± 1.43, p < .005). Colab helped users think through the task and control the pipeline more effectively through programming. Even so, users reported completing tasks in Visual Blocks in just a few minutes that would normally take up to an hour or more. For example, after watching a 4-minute tutorial video, all participants built a custom pipeline in Visual Blocks from scratch within 15 minutes (10.72 ± 2.14 minutes). Participants usually spent less than five minutes (3.98 ± 1.95) getting initial results, then tried out different inputs and outputs for the pipeline.


User ratings comparing Rapsai (the initial prototype of Visual Blocks) and Colab across five dimensions.
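Figures such as 6.13 ± 0.88 report a mean and a standard deviation. The helper below computes that summary for a list of ratings as a small worked illustration. It assumes the sample standard deviation (dividing by n - 1), because the post does not say which definition was used. The input ratings are hypothetical, not study data.

```typescript
// Summarize ratings as "mean ± SD". Assumes the sample standard deviation
// (dividing by n - 1); the post does not state which definition was used.
function meanAndSd(xs: number[]): { mean: number; sd: number } {
  if (xs.length < 2) throw new Error("need at least two values");
  const mean = xs.reduce((s, x) => s + x, 0) / xs.length;
  const ss = xs.reduce((s, x) => s + (x - mean) ** 2, 0); // sum of squared deviations
  return { mean, sd: Math.sqrt(ss / (xs.length - 1)) };
}

function formatMeanSd(xs: number[]): string {
  const { mean, sd } = meanAndSd(xs);
  return `${mean.toFixed(2)} ± ${sd.toFixed(2)}`;
}

// Hypothetical 7-point Likert ratings, not data from the study.
console.log(formatMeanSd([5, 6, 7, 6, 6])); // "6.00 ± 0.71"
```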

Further results in our paper show that Visual Blocks helped participants accelerate their workflow. It also helped them make better-informed decisions about model selection and tuning, analyze the strengths and weaknesses of different models, and holistically evaluate model behavior with real-world input.

Conclusions and future directions

Visual Blocks lowers development barriers for ML-based multimedia applications. It empowers users to experiment without worrying about coding or technical details. It also facilitates collaboration between designers and developers by providing a common language for describing ML pipelines. In the future, we plan to open this framework so the community can contribute their own nodes, and to integrate it into many different platforms. We expect visual programming for machine learning to become a common interface across ML tooling.

Acknowledgements

This work is a collaboration across multiple teams at Google. Key contributors to the project include Ruofei Du, Na Li, Jing Jin, Michelle Carney, Xiuxiu Yuan, Kristen Wright, Mark Sherwood, Jason Mayes, Lin Chen, Jun Jiang, Scott Miles, Maria Kleiner, Yinda Zhang, Anuva Kulkarni, Xingyu “Bruce” Liu, Ahmed Sabie, Sergio Escolano, Abhishek Kar, Ping Yu, Ram Iyengar, Adarsh Kowdle, and Alex Olwal.

We would like to thank Jun Zhang and Satya Amarapalli for several early-stage prototypes, and Sarah Heimlich for serving as a 20% program manager. We also thank Sean Fanello, Danhang Tang, Stephanie Debats, Walter Korman, Anne Menini, Joe Moran, Eric Turner, and Shahram Izadi for providing initial feedback on the manuscript and the blog post. Finally, we thank our CHI 2023 reviewers for their insightful feedback.