This AI Paper from SambaNova Presents a Machine Studying Technique to Adapt Pretrained LLMs to New Languages

The speedy development of enormous language fashions has ushered in a brand new period of pure language processing capabilities. Nonetheless, a big problem persists: most of those fashions are primarily skilled on a restricted set of broadly spoken languages, leaving an unlimited linguistic variety unexplored. This limitation not solely restricts the accessibility of cutting-edge language applied sciences but in addition perpetuates a technological divide throughout linguistic communities.

Researchers have tackled this problem on this examine by proposing a novel AI technique named SambaLingo. This method goals to adapt current, high-performing language fashions to new languages, leveraging the strengths of pre-trained fashions whereas tailoring them to the distinctive traits of the goal language.

Earlier efforts to deal with this concern have primarily centered on coaching monolithic multilingual or language-specific fashions from scratch. Nonetheless, these approaches face vital hurdles, together with the curse of multilinguality, information shortage, and the substantial computational sources required. Adapting English-centric fashions to new languages has emerged as a promising various, demonstrating the potential to outperform language-specific fashions pre-trained from scratch.

The SambaLingo methodology begins with the choice of an appropriate base mannequin that has already exhibited distinctive efficiency in its preliminary language. On this examine, the researchers selected the open-source Llama2 7B mannequin, famend for its English language capabilities, as their place to begin.

To successfully seize the linguistic nuances of the goal language, the researchers expanded the mannequin’s vocabulary by including non-overlapping tokens from the goal language and initializing them utilizing sub-word embeddings from the unique tokenizer. This important step ensures that the mannequin can precisely tokenize and symbolize the brand new language, paving the way in which for seamless adaptation.

Subsequent, the researchers employed a continuous pre-training method, feeding the mannequin a fastidiously curated combination of English and goal language net information sourced from CulturaX. The information combination adopted a 1:3 ratio, biased in direction of the goal language, to strike a fragile steadiness between preserving the mannequin’s current information and adapting it to the brand new linguistic panorama.

To additional improve the mannequin’s alignment with human preferences, the researchers applied a two-stage course of: supervised fine-tuning (SFT) and direct choice optimization (DPO). Throughout SFT, they utilized the ultrachat-200k dataset and its machine-translated model. For DPO, they employed extremely suggestions and cai-conversation-harmless datasets, mixing them with a ten:1 ratio of English to machine-translated information.

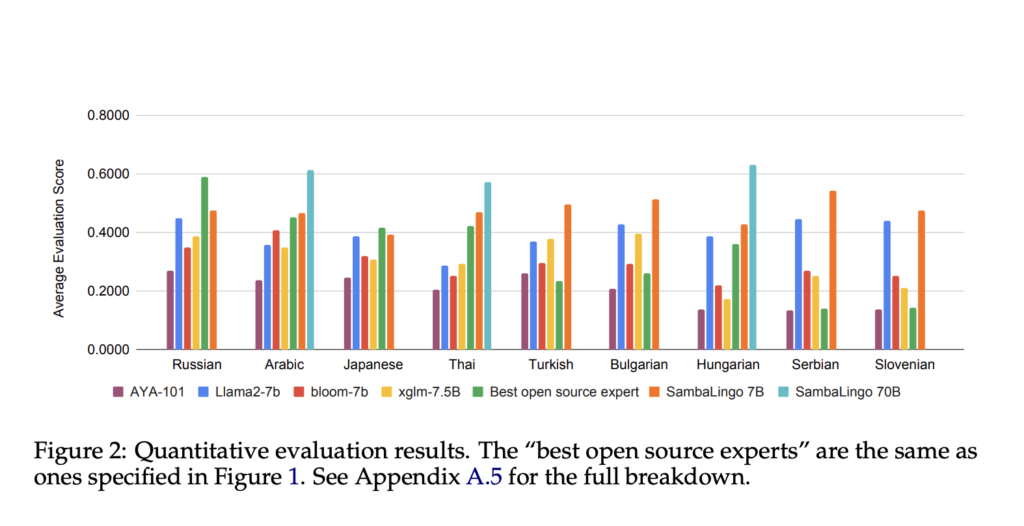

The researchers rigorously evaluated the SambaLingo fashions throughout varied duties and languages, together with language modeling, translation, textual content classification, open-book and closed-book query answering, and varied pure language understanding benchmarks as proven in Desk 1. The fashions had been examined on 9 typologically numerous languages: Arabic, Thai, Turkish, Japanese, Hungarian, Russian, Bulgarian, Serbian, and Slovenian.

Throughout a number of benchmarks, the SambaLingo fashions persistently outperformed current state-of-the-art fashions in these languages. As an example, on the perplexity benchmark, which measures language modeling efficiency, the SambaLingo fashions achieved decrease perplexity scores than all current baselines on a held-out set from their coaching information (Determine 1). Moreover, when scaled to the bigger Llama2 70B parameter scale, the SambaLingo fashions exhibited even higher efficiency, surpassing their 7B counterparts throughout a number of benchmarks, regardless of being skilled on fewer tokens.

To validate the standard of the mannequin’s outputs and their alignment with human preferences, the researchers employed GPT-4 as an neutral choose, evaluating the mannequin’s responses to actual consumer prompts. The outcomes had been promising, with SambaLingo persistently outperforming different fashions in the identical languages, as judged by GPT-4’s preferences and logical explanations.

In abstract, the SambaLingo methodology represents a big stride in direction of democratizing synthetic intelligence throughout linguistic variety. By leveraging the strengths of current high-performing fashions and tailoring them to new linguistic landscapes, this method provides a scalable and environment friendly resolution to the problem of language obstacles. With its state-of-the-art efficiency and alignment with human preferences, SambaLingo paves the way in which for a future the place the advantages of AI transcend linguistic boundaries, fostering inclusivity and accessibility for all.

Try the Paper. All credit score for this analysis goes to the researchers of this venture. Additionally, don’t overlook to observe us on Twitter. Be a part of our Telegram Channel, Discord Channel, and LinkedIn Group.

Should you like our work, you’ll love our newsletter..

Don’t Overlook to hitch our 40k+ ML SubReddit

Wish to get in entrance of 1.5 Million AI Viewers? Work with us here