Benchmark and optimize endpoint deployment in Amazon SageMaker JumpStart

When deploying a large language model (LLM), machine learning (ML) practitioners typically care about two measurements for model serving performance: latency, defined by the time it takes to generate a single token, and throughput, defined by the number of tokens generated per second. Although a single request to the deployed endpoint would exhibit a throughput approximately equal to the inverse of model latency, this isn’t necessarily the case when multiple concurrent requests are simultaneously sent to the endpoint. Due to model serving techniques, such as server-side continuous batching of concurrent requests, latency and throughput have a complex relationship that varies significantly based on model architecture, serving configurations, instance type hardware, number of concurrent requests, and variations in input payloads such as number of input tokens and output tokens.

This post explores these relationships via a comprehensive benchmarking of LLMs available in Amazon SageMaker JumpStart, including Llama 2, Falcon, and Mistral variants. With SageMaker JumpStart, ML practitioners can choose from a broad selection of publicly available foundation models to deploy to dedicated Amazon SageMaker instances within a network-isolated environment. We provide theoretical principles on how accelerator specifications impact LLM benchmarking. We also demonstrate the impact of deploying multiple instances behind a single endpoint. Finally, we provide practical recommendations for tailoring the SageMaker JumpStart deployment process to align with your requirements on latency, throughput, cost, and constraints on available instance types. All the benchmarking results as well as recommendations are based on a versatile notebook that you can adapt to your use case.

Deployed endpoint benchmarking

The following figure shows the lowest latency (left) and highest throughput (right) values for deployment configurations across a variety of model types and instance types. Importantly, each of these model deployments uses default configurations as provided by SageMaker JumpStart given the desired model ID and instance type for deployment.

These latency and throughput values correspond to payloads with 256 input tokens and 256 output tokens. The lowest latency configuration limits model serving to a single concurrent request, and the highest throughput configuration maximizes the possible number of concurrent requests. As we can see in our benchmarking, increasing concurrent requests monotonically increases throughput with diminishing improvement for large concurrent requests. Additionally, models are fully sharded on the supported instance. For example, because the ml.g5.48xlarge instance has 8 GPUs, all SageMaker JumpStart models using this instance are sharded using tensor parallelism on all eight available accelerators.

We can note a few takeaways from this figure. First, not all models are supported on all instances; some smaller models, such as Falcon 7B, don’t support model sharding, whereas larger models have higher compute resource requirements. Second, as sharding increases, performance typically improves, but may not necessarily improve for small models. This is because small models such as 7B and 13B incur a substantial communication overhead when sharded across too many accelerators. We discuss this in more depth later. Finally, ml.p4d.24xlarge instances tend to have significantly better throughput due to memory bandwidth improvements of A100 over A10G GPUs. As we discuss later, the decision to use a particular instance type depends on your deployment requirements, including latency, throughput, and cost constraints.

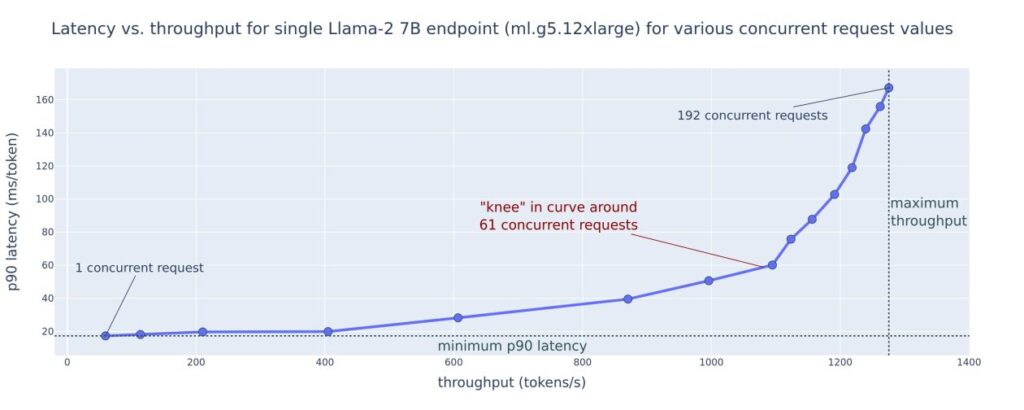

How can you obtain these lowest latency and highest throughput configuration values? Let’s start by plotting latency vs. throughput for a Llama 2 7B endpoint on an ml.g5.12xlarge instance for a payload with 256 input tokens and 256 output tokens, as seen in the following curve. A similar curve exists for every deployed LLM endpoint.

As concurrency increases, throughput and latency also monotonically increase. Therefore, the lowest latency point occurs at a concurrent request value of 1, and you can cost-effectively increase system throughput by increasing concurrent requests. There exists a distinct “knee” in this curve, where it’s obvious that the throughput gains associated with additional concurrency don’t outweigh the associated increase in latency. The exact location of this knee is use case-specific; some practitioners may define the knee at the point where a pre-specified latency requirement is exceeded (for example, 100 ms/token), whereas others may use load test benchmarks and queueing theory methods like the half-latency rule, and others may use theoretical accelerator specifications.
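As a minimal sketch of the first approach (a pre-specified latency requirement), the following snippet picks the highest-throughput point whose latency stays within a budget. The numbers are the Llama 2 7B, ml.g5.12xlarge, 512-total-token values from this post's benchmark table; the helper name `knee_for_latency_budget` is hypothetical and not part of the benchmarking notebook.

```python
from typing import Optional, Sequence, Tuple

# Llama 2 7B on ml.g5.12xlarge (512 total tokens, 256 output tokens),
# copied from the benchmark table in this post.
CONCURRENCY = [1, 2, 4, 8, 16, 32, 64, 128]
THROUGHPUT_TOKENS_PER_SEC = [59, 117, 223, 406, 616, 866, 1098, 1214]
LATENCY_MS_PER_TOKEN = [17, 17, 18, 20, 27, 38, 60, 112]


def knee_for_latency_budget(
    concurrency: Sequence[int],
    throughput: Sequence[float],
    latency_ms: Sequence[float],
    max_latency_ms: float,
) -> Optional[Tuple[int, float, float]]:
    """Return (concurrency, throughput, latency) for the highest-throughput
    benchmark point whose latency is within the budget, or None if no point fits."""
    feasible = [
        point
        for point in zip(concurrency, throughput, latency_ms)
        if point[2] <= max_latency_ms
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda point: point[1])


knee = knee_for_latency_budget(
    CONCURRENCY, THROUGHPUT_TOKENS_PER_SEC, LATENCY_MS_PER_TOKEN, max_latency_ms=100.0
)
print(knee)  # (64, 1098, 60)
```

With a 100 ms/token budget, the 128-request point (112 ms/token) is excluded, so the selected operating point is 64 concurrent requests.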

We also note that the maximum number of concurrent requests is limited. In the preceding figure, the line trace ends with 192 concurrent requests. The source of this limitation is the SageMaker invocation timeout limit, where SageMaker endpoints time out an invocation response after 60 seconds. This setting is account-specific and not configurable for an individual endpoint. For LLMs, generating a large number of output tokens can take seconds or even minutes. Therefore, large input or output payloads can cause the invocation requests to fail. Furthermore, if the number of concurrent requests is very large, then many requests will experience large queue times and run into this 60-second timeout limit. For the purpose of this study, we use the timeout limit to define the maximum throughput possible for a model deployment. Importantly, although a SageMaker endpoint may handle a large number of concurrent requests without observing an invocation response timeout, you may want to define maximum concurrent requests with respect to the knee in the latency-throughput curve. This is likely the point at which you start to consider horizontal scaling, where a single endpoint provisions multiple instances with model replicas and load balances incoming requests between the replicas, to support more concurrent requests.

Taking this one step further, the following table contains benchmarking results for different configurations for the Llama 2 7B model, including different numbers of input and output tokens, instance types, and numbers of concurrent requests. Note that the preceding figure only plots a single row of this table.

|  | Throughput (tokens/sec) |  |  |  |  |  |  |  |  |  | Latency (ms/token) |  |  |  |  |  |  |  |  |  |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Concurrent requests | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 |
| Number of total tokens: 512, number of output tokens: 256 |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| ml.g5.2xlarge | 30 | 54 | 115 | 208 | 343 | 475 | 486 | — | — | — | 33 | 33 | 35 | 39 | 48 | 97 | 159 | — | — | — |
| ml.g5.12xlarge | 59 | 117 | 223 | 406 | 616 | 866 | 1098 | 1214 | — | — | 17 | 17 | 18 | 20 | 27 | 38 | 60 | 112 | — | — |
| ml.g5.48xlarge | 56 | 108 | 202 | 366 | 522 | 660 | 707 | 804 | — | — | 18 | 18 | 19 | 22 | 32 | 50 | 101 | 171 | — | — |
| ml.p4d.24xlarge | 49 | 85 | 178 | 353 | 654 | 1079 | 1544 | 2312 | 2905 | 2944 | 21 | 23 | 22 | 23 | 26 | 31 | 44 | 58 | 92 | 165 |
| Number of total tokens: 4096, number of output tokens: 256 |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| ml.g5.2xlarge | 20 | 36 | 48 | 49 | — | — | — | — | — | — | 48 | 57 | 104 | 170 | — | — | — | — | — | — |
| ml.g5.12xlarge | 33 | 58 | 90 | 123 | 142 | — | — | — | — | — | 31 | 34 | 48 | 73 | 132 | — | — | — | — | — |
| ml.g5.48xlarge | 31 | 48 | 66 | 82 | — | — | — | — | — | — | 31 | 43 | 68 | 120 | — | — | — | — | — | — |
| ml.p4d.24xlarge | 39 | 73 | 124 | 202 | 278 | 290 | — | — | — | — | 26 | 27 | 33 | 43 | 66 | 107 | — | — | — | — |

We observe some additional patterns in this data. When increasing context size, latency increases and throughput decreases. For instance, on ml.g5.2xlarge with a concurrency of 1, throughput is 30 tokens/sec when the number of total tokens is 512, vs. 20 tokens/sec if the number of total tokens is 4,096. This is because it takes more time to process the larger input. We can also see that increasing GPU capability and sharding affects the maximum throughput and maximum supported concurrent requests. The table shows that Llama 2 7B has notably different maximum throughput values for different instance types, and these maximum throughput values occur at different values of concurrent requests. These characteristics would drive an ML practitioner to justify the cost of one instance over another. For example, given a low latency requirement, the practitioner might select an ml.g5.12xlarge instance (4 A10G GPUs) over an ml.g5.2xlarge instance (1 A10G GPU). If given a high throughput requirement, the use of an ml.p4d.24xlarge instance (8 A100 GPUs) with full sharding would only be justified under high concurrency. Note, however, that it’s often beneficial to instead load multiple inference components of a 7B model on a single ml.p4d.24xlarge instance; such multi-model support is discussed later in this post.

The preceding observations were made for the Llama 2 7B model. However, similar patterns remain true for other models as well. A primary takeaway is that latency and throughput performance numbers are dependent on payload, instance type, and number of concurrent requests, so you will need to find the ideal configuration for your specific application. To generate the preceding numbers for your use case, you can run the linked notebook, where you can configure this load test analysis for your model, instance type, and payload.

Making sense of accelerator specs

Selecting suitable hardware for LLM inference relies heavily on specific use cases, user experience goals, and the chosen LLM. This section attempts to create an understanding of the knee in the latency-throughput curve with respect to high-level principles based on accelerator specifications. These principles alone don’t suffice to make a decision: real benchmarks are necessary. The term device is used here to encompass all ML hardware accelerators. We assert the knee in the latency-throughput curve is driven by one of two factors:

- The accelerator has exhausted memory to cache KV matrices, so subsequent requests are queued

- The accelerator still has spare memory for the KV cache, but is using a large enough batch size that processing time is driven by compute operation latency rather than memory bandwidth

We typically prefer to be limited by the second factor because this implies the accelerator resources are saturated. Basically, you are maximizing the resources you paid for. Let’s explore this assertion in greater detail.

KV caching and device memory

Standard transformer attention mechanisms compute attention for each new token against all previous tokens. Most modern ML servers cache attention keys and values in device memory (DRAM) to avoid re-computation at every step. This is called the KV cache, and it grows with batch size and sequence length. It defines how many user requests can be served in parallel and will determine the knee in the latency-throughput curve if the compute-bound regime in the second scenario mentioned earlier is not yet met, given the available DRAM. The following formula is a rough approximation for the maximum KV cache size.
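A common form of this approximation, offered here as a sketch rather than the exact original expression, counts one key vector and one value vector per layer, per KV head, per token. The symbols $N_{ctx}$ (sequence length in tokens), $b$ (bytes per cached value, 2 for FP16), $M_{DRAM}$ (DRAM per accelerator), and $M_{model}$ (bytes used by model weights) are notation assumed for this sketch:

```latex
\text{KV cache bytes} \approx 2 \cdot B \cdot N_{ctx} \cdot n_{layers} \cdot n_{kv\_attention\_heads} \cdot d_{attention\_head} \cdot b

B_{max} \approx \left\lfloor
  \frac{N \cdot M_{DRAM} - M_{model}}
       {2 \cdot N_{ctx} \cdot n_{layers} \cdot n_{kv\_attention\_heads} \cdot d_{attention\_head} \cdot b}
\right\rfloor
```

The second line simply divides the DRAM left over after loading the weights across $N$ accelerators by the cache needed for a single request, which gives a rough upper bound on the batch size that fits.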

In this formula, B is batch size and N is number of accelerators. For example, the Llama 2 7B model in FP16 (2 bytes/parameter) served on an A10G GPU (24 GB DRAM) consumes approximately 14 GB, leaving 10 GB for the KV cache. Plugging in the model’s full context length (N = 4096) and remaining parameters (n_layers=32, n_kv_attention_heads=32, and d_attention_head=128), this expression shows we are limited to serving a batch size of four users in parallel due to DRAM constraints. If you observe the corresponding benchmarks in the previous table, this is a good approximation for the observed knee in this latency-throughput curve. Methods such as grouped query attention (GQA) can reduce the KV cache size, in GQA’s case by the same factor it reduces the number of KV heads.
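The arithmetic in this example can be checked with a short script. This is a minimal sketch that assumes the standard per-token KV cache accounting (one key and one value vector per layer and KV head, 2 bytes per value in FP16); the function names are hypothetical, and the GQA line uses an illustrative 8 KV heads rather than a real Llama 2 7B setting.

```python
def kv_cache_bytes_per_request(
    context_length: int,
    n_layers: int,
    n_kv_heads: int,
    d_head: int,
    bytes_per_value: int = 2,  # FP16
) -> int:
    """Approximate KV cache bytes needed for one request at full context length."""
    # Factor of 2: one key vector and one value vector are cached per token.
    return 2 * context_length * n_layers * n_kv_heads * d_head * bytes_per_value


def max_batch_size(free_dram_bytes: int, per_request_bytes: int) -> int:
    """Largest number of full-context requests whose KV cache fits in free DRAM."""
    return free_dram_bytes // per_request_bytes


# Llama 2 7B values quoted in the text: 4096 context, 32 layers, 32 KV heads, head dim 128.
per_request = kv_cache_bytes_per_request(4096, 32, 32, 128)
free_dram = 10 * 10**9  # roughly 10 GB left on a 24 GB A10G after 14 GB of weights

print(per_request)                             # 2147483648 bytes (2 GiB)
print(max_batch_size(free_dram, per_request))  # 4

# Hypothetical GQA variant with 8 KV heads: the cache shrinks by the same 4x factor.
print(kv_cache_bytes_per_request(4096, 32, 8, 128))  # 536870912
```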

Arithmetic intensity and device memory bandwidth

The growth in the computational power of ML accelerators has outpaced their memory bandwidth, meaning they can perform many more computations on each byte of data in the amount of time it takes to access that byte.

The arithmetic intensity, or the ratio of compute operations to memory accesses, for an operation determines if it is limited by memory bandwidth or compute capacity on the selected hardware. For example, an A10G GPU (g5 instance type family) with 70 TFLOPS FP16 and 600 GB/sec bandwidth can compute approximately 116 ops/byte. An A100 GPU (p4d instance type family) can compute approximately 208 ops/byte. If the arithmetic intensity for a transformer model is under that value, it is memory-bound; if it is above, it is compute-bound. The attention mechanism for Llama 2 7B requires 62 ops/byte for batch size 1 (for an explanation, see A guide to LLM inference and performance), which means it is memory-bound. When the attention mechanism is memory-bound, expensive FLOPS are left unutilized.
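The ops/byte comparison can be written as a small calculation. This sketch uses only the A10G figures quoted above (70 TFLOPS FP16, 600 GB/sec) and the 62 ops/byte attention estimate; the function names are hypothetical.

```python
def device_ops_per_byte(flops_per_sec: float, bytes_per_sec: float) -> float:
    """Ratio of peak compute to memory bandwidth for an accelerator."""
    return flops_per_sec / bytes_per_sec


def regime(arithmetic_intensity: float, ops_per_byte: float) -> str:
    """Classify an operation as memory-bound or compute-bound on a device."""
    return "memory-bound" if arithmetic_intensity < ops_per_byte else "compute-bound"


a10g = device_ops_per_byte(70e12, 600e9)  # 70 TFLOPS FP16, 600 GB/sec

print(int(a10g))         # 116
print(regime(62, a10g))  # memory-bound (Llama 2 7B attention at batch size 1)
```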

There are two ways to better utilize the accelerator and increase arithmetic intensity: reduce the required memory accesses for the operation (this is what FlashAttention focuses on) or increase the batch size. However, we might not be able to increase our batch size enough to reach a compute-bound regime if our DRAM is too small to hold the corresponding KV cache. A crude approximation of the critical batch size B* that separates compute-bound from memory-bound regimes for standard GPT decoder inference is described by the following expression, where A_mb is the accelerator memory bandwidth, A_f is accelerator FLOPS, and N is the number of accelerators. This critical batch size can be derived by finding where memory access time equals computation time. Refer to this blog post to understand Equation 2 and its assumptions in greater detail.
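One way to reconstruct this expression, offered as a sketch under stated assumptions rather than the original equation, is to equate the time to stream $P$ FP16 parameters (2 bytes each) from memory with the time to perform roughly $2P$ FLOPs per sequence in the batch. Here $P$ is a symbol assumed for the parameter count, weights and work are assumed to split evenly across $N$ accelerators, and KV cache reads and inter-device communication are ignored:

```latex
\underbrace{\frac{2P}{N \cdot A_{mb}}}_{\text{memory access time}}
=
\underbrace{\frac{2P \cdot B^{*}}{N \cdot A_{f}}}_{\text{computation time}}
\quad\Longrightarrow\quad
B^{*} = \frac{A_{f}}{A_{mb}}
```

Under these assumptions $N$ cancels, and for an A10G the ratio is $70 \times 10^{12} / 600 \times 10^{9} \approx 116$.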

This is the same ops/byte ratio we previously calculated for A10G, so the critical batch size on this GPU is 116. One way to approach this theoretical critical batch size is to increase model sharding and split the cache across more accelerators (a larger N). This effectively increases the KV cache capacity as well as the memory-bound batch size.

Another benefit of model sharding is splitting model parameter and data loading work across N accelerators. This type of sharding is a type of model parallelism also referred to as tensor parallelism. Naively, there is N times the memory bandwidth and compute power in aggregate. Assuming no overhead of any kind (communication, software, and so on), this would decrease decoding latency per token by N if we are memory-bound, because token decoding latency in this regime is bound by the time it takes to load the model weights and cache. In real life, however, increasing the degree of sharding results in increased communication between devices to share intermediate activations at every model layer. This communication speed is limited by the device interconnect bandwidth. It’s difficult to estimate its impact precisely (for details, see Model parallelism), but this can eventually stop yielding benefits or deteriorate performance. This is especially true for smaller models, because smaller data transfers lead to lower transfer rates.

To compare ML accelerators based on their specs, we recommend the following. First, calculate the approximate critical batch size for each accelerator type according to the second equation and the KV cache size for the critical batch size according to the first equation. You can then use the available DRAM on the accelerator to calculate the minimum number of accelerators required to fit the KV cache and model parameters. If deciding between multiple accelerators, prioritize accelerators in order of lowest cost per GB/sec of memory bandwidth. Finally, benchmark these configurations and verify which gives the best cost/token for your upper bound of desired latency.

Choose an endpoint deployment configuration

Many LLMs distributed by SageMaker JumpStart use the text-generation-inference (TGI) SageMaker container for model serving. The following table discusses how to adjust a variety of model serving parameters to either affect model serving, which impacts the latency-throughput curve, or protect the endpoint against requests that would overload it. These are the primary parameters you can use to configure your endpoint deployment for your use case. Unless otherwise specified, we use default text generation payload parameters and TGI environment variables.

| Environment Variable | Description | SageMaker JumpStart Default Value |
| --- | --- | --- |
| Model serving configurations |  |  |
| MAX_BATCH_PREFILL_TOKENS | Limits the number of tokens in the prefill operation. This operation generates the KV cache for a new input prompt sequence. It is memory intensive and compute bound, so this value caps the number of tokens allowed in a single prefill operation. Decoding steps for other queries pause while prefill is occurring. | 4096 (TGI default) or model-specific maximum supported context length (SageMaker JumpStart provided), whichever is greater. |
| MAX_BATCH_TOTAL_TOKENS | Controls the maximum number of tokens to include within a batch during decoding, or a single forward pass through the model. Ideally, this is set to maximize the usage of all available hardware. | Not specified (TGI default). TGI will set this value with respect to remaining CUDA memory during model warm up. |
| SM_NUM_GPUS | The number of shards to use. That is, the number of GPUs used to run the model using tensor parallelism. | Instance dependent (SageMaker JumpStart provided). For each supported instance for a given model, SageMaker JumpStart provides the best setting for tensor parallelism. |
| Configurations to guard your endpoint (set these for your use case) |  |  |
| MAX_TOTAL_TOKENS | This caps the memory budget of a single client request by limiting the number of tokens in the input sequence plus the number of tokens in the output sequence (the max_new_tokens payload parameter). | Model-specific maximum supported context length. For example, 4096 for Llama 2. |
| MAX_INPUT_LENGTH | Identifies the maximum allowed number of tokens in the input sequence for a single client request. Things to consider when increasing this value include: longer input sequences require more memory, which affects continuous batching, and many models have a supported context length that should not be exceeded. | Model-specific maximum supported context length. For example, 4095 for Llama 2. |
| MAX_CONCURRENT_REQUESTS | The maximum number of concurrent requests allowed by the deployed endpoint. New requests beyond this limit will immediately raise a model overloaded error to prevent poor latency for the current processing requests. | 128 (TGI default). This setting allows you to obtain high throughput for a variety of use cases, but you should pin it as appropriate to mitigate SageMaker invocation timeout errors. |

The TGI server uses continuous batching, which dynamically batches concurrent requests together to share a single model inference forward pass. There are two types of forward passes: prefill and decode. Each new request must run a single prefill forward pass to populate the KV cache for the input sequence tokens. After the KV cache is populated, a decode forward pass performs a single next-token prediction for all batched requests, which is iteratively repeated to produce the output sequence. As new requests are sent to the server, the next decode step must wait so the prefill step can run for the new requests. This must occur before those new requests are included in subsequent continuously batched decode steps. Due to hardware constraints, the continuous batching used for decoding may not include all requests. At this point, requests enter a processing queue and inference latency starts to significantly increase with only minor throughput gain.

It’s possible to separate LLM latency benchmarking analyses into prefill latency, decode latency, and queue latency. The time consumed by each of these components is fundamentally different in nature: prefill is a one-time computation, decoding occurs one time for each token in the output sequence, and queueing involves server batching processes. When multiple concurrent requests are being processed, it becomes difficult to disentangle the latencies from each of these components because the latency experienced by any given client request involves queue latencies driven by the need to prefill new concurrent requests as well as queue latencies driven by the inclusion of the request in batch decoding processes. For this reason, this post focuses on end-to-end processing latency. The knee in the latency-throughput curve occurs at the point of saturation where queue latencies start to significantly increase. This phenomenon occurs for any model inference server and is driven by accelerator specifications.

Common requirements during deployment include satisfying a minimum required throughput, maximum allowed latency, maximum cost per hour, and maximum cost to generate 1 million tokens. You should condition these requirements on payloads that represent end-user requests. A design to meet these requirements should consider many factors, including the specific model architecture, size of the model, instance types, and instance count (horizontal scaling). In the following sections, we focus on deploying endpoints to minimize latency, maximize throughput, and minimize cost. This analysis considers 512 total tokens and 256 output tokens.

Minimize latency

Latency is an important requirement in many real-time use cases. In the following table, we look at minimum latency for each model and each instance type. You can achieve minimum latency by setting MAX_CONCURRENT_REQUESTS = 1.

| Minimum latency (ms/token) |  |  |  |  |  |
| --- | --- | --- | --- | --- | --- |
| Model ID | ml.g5.2xlarge | ml.g5.12xlarge | ml.g5.48xlarge | ml.p4d.24xlarge | ml.p4de.24xlarge |
| Llama 2 7B | 33 | 17 | 18 | 20 | — |
| Llama 2 7B Chat | 33 | 17 | 18 | 20 | — |
| Llama 2 13B | — | 22 | 23 | 23 | — |
| Llama 2 13B Chat | — | 23 | 23 | 23 | — |
| Llama 2 70B | — | — | 57 | 43 | — |
| Llama 2 70B Chat | — | — | 57 | 45 | — |
| Mistral 7B | 35 | — | — | — | — |
| Mistral 7B Instruct | 35 | — | — | — | — |
| Mixtral 8x7B | — | — | 33 | 27 | — |
| Falcon 7B | 33 | — | — | — | — |
| Falcon 7B Instruct | 33 | — | — | — | — |
| Falcon 40B | — | 53 | 33 | 27 | — |
| Falcon 40B Instruct | — | 53 | 33 | 28 | — |
| Falcon 180B | — | — | — | — | 42 |
| Falcon 180B Chat | — | — | — | — | 42 |

To achieve minimum latency for a model, you can use the following code while substituting your desired model ID and instance type:
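The original code listing is not reproduced here, so the following is a minimal sketch of what such a deployment could look like with the SageMaker Python SDK's `JumpStartModel` class. The helper names `min_latency_env` and `deploy_min_latency_endpoint` are hypothetical, the model ID and token limits are example values, and the input-length variable uses the `MAX_INPUT_LENGTH` name from the configuration table. Running the deployment itself requires AWS credentials and incurs cost.

```python
from typing import Dict


def min_latency_env(max_input_length: int, max_total_tokens: int) -> Dict[str, str]:
    """Build TGI environment variables for a single-request, latency-focused endpoint."""
    if max_input_length >= max_total_tokens:
        raise ValueError("MAX_INPUT_LENGTH must be smaller than MAX_TOTAL_TOKENS")
    return {
        "MAX_CONCURRENT_REQUESTS": "1",  # one request at a time gives the lowest latency
        "MAX_INPUT_LENGTH": str(max_input_length),
        "MAX_TOTAL_TOKENS": str(max_total_tokens),
    }


def deploy_min_latency_endpoint(model_id: str, instance_type: str, env: Dict[str, str],
                                accept_eula: bool = False):
    """Deploy a JumpStart model with the given environment (not called in this sketch)."""
    # Imported lazily so the configuration helper can be used without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id, instance_type=instance_type, env=env)
    # Gated models such as Llama 2 require accept_eula=True after reviewing the license.
    return model.deploy(accept_eula=accept_eula)


env = min_latency_env(max_input_length=256, max_total_tokens=512)
print(env)
# Example call (assumed model ID and instance type):
# predictor = deploy_min_latency_endpoint("meta-textgeneration-llama-2-7b", "ml.g5.12xlarge", env)
```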

Note that the latency numbers change depending on the number of input and output tokens. However, the deployment process remains the same except for the environment variables MAX_INPUT_LENGTH and MAX_TOTAL_TOKENS. Here, these environment variables are set to help guarantee endpoint latency requirements because larger input sequences may violate the latency requirement. Note that SageMaker JumpStart already provides the other optimal environment variables when selecting instance type; for instance, using ml.g5.12xlarge will set SM_NUM_GPUS to 4 in the model environment.

Maximize throughput

In this section, we maximize the number of generated tokens per second. This is typically achieved at the maximum valid concurrent requests for the model and the instance type. In the following table, we report the throughput achieved at the largest concurrent request value achieved before encountering a SageMaker invocation timeout for any request.

| Maximum throughput (tokens/sec), with concurrent requests in parentheses |  |  |  |  |  |
| --- | --- | --- | --- | --- | --- |
| Model ID | ml.g5.2xlarge | ml.g5.12xlarge | ml.g5.48xlarge | ml.p4d.24xlarge | ml.p4de.24xlarge |
| Llama 2 7B | 486 (64) | 1214 (128) | 804 (128) | 2945 (512) | — |
| Llama 2 7B Chat | 493 (64) | 1207 (128) | 932 (128) | 3012 (512) | — |
| Llama 2 13B | — | 787 (128) | 496 (64) | 3245 (512) | — |
| Llama 2 13B Chat | — | 782 (128) | 505 (64) | 3310 (512) | — |
| Llama 2 70B | — | — | 124 (16) | 1585 (256) | — |
| Llama 2 70B Chat | — | — | 114 (16) | 1546 (256) | — |
| Mistral 7B | 947 (64) | — | — | — | — |
| Mistral 7B Instruct | 986 (128) | — | — | — | — |
| Mixtral 8x7B | — | — | 701 (128) | 3196 (512) | — |
| Falcon 7B | 1340 (128) | — | — | — | — |
| Falcon 7B Instruct | 1313 (128) | — | — | — | — |
| Falcon 40B | — | 244 (32) | 382 (64) | 2699 (512) | — |
| Falcon 40B Instruct | — | 245 (32) | 415 (64) | 2675 (512) | — |
| Falcon 180B | — | — | — | — | 1100 (128) |
| Falcon 180B Chat | — | — | — | — | 1081 (128) |

To achieve maximum throughput for a model, you can use the following code:
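The original listing is not reproduced here. As a hedged sketch, a throughput-oriented deployment could differ from a latency-oriented one only in the environment it passes to `JumpStartModel`: the concurrency cap is raised to the largest value that avoided timeouts in the preceding table (512 for Llama 2 7B on ml.p4d.24xlarge). The helper names are hypothetical, and the model ID and token limits are example values.

```python
from typing import Dict


def max_throughput_env(max_concurrent_requests: int, max_input_length: int,
                       max_total_tokens: int) -> Dict[str, str]:
    """Build TGI environment variables for a throughput-focused endpoint."""
    if max_concurrent_requests < 1:
        raise ValueError("max_concurrent_requests must be at least 1")
    return {
        # Set the payload limits first; the usable concurrency depends on them.
        "MAX_INPUT_LENGTH": str(max_input_length),
        "MAX_TOTAL_TOKENS": str(max_total_tokens),
        "MAX_CONCURRENT_REQUESTS": str(max_concurrent_requests),
    }


def deploy_max_throughput_endpoint(model_id: str, instance_type: str, env: Dict[str, str],
                                   accept_eula: bool = False):
    """Deploy a JumpStart model with the given environment (not called in this sketch)."""
    from sagemaker.jumpstart.model import JumpStartModel  # lazy import; needs the SageMaker SDK

    model = JumpStartModel(model_id=model_id, instance_type=instance_type, env=env)
    return model.deploy(accept_eula=accept_eula)


env = max_throughput_env(max_concurrent_requests=512, max_input_length=256, max_total_tokens=512)
print(env["MAX_CONCURRENT_REQUESTS"])  # 512
# Example call (assumed model ID and instance type):
# predictor = deploy_max_throughput_endpoint("meta-textgeneration-llama-2-7b", "ml.p4d.24xlarge", env)
```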

Note that the maximum number of concurrent requests depends on the model type, instance type, maximum number of input tokens, and maximum number of output tokens. Therefore, you should set these parameters before setting MAX_CONCURRENT_REQUESTS.

Also note that a user interested in minimizing latency is often at odds with a user interested in maximizing throughput. The former is interested in real-time responses, whereas the latter is interested in batch processing such that the endpoint queue is always saturated, thereby minimizing processing downtime. Users who want to maximize throughput conditioned on latency requirements are often interested in operating at the knee in the latency-throughput curve.

Minimize cost

The first option to minimize cost involves minimizing cost per hour. With this, you can deploy a selected model on the SageMaker instance with the lowest cost per hour. For real-time pricing of SageMaker instances, refer to Amazon SageMaker pricing. In general, the default instance type for SageMaker JumpStart LLMs is the lowest-cost deployment option.

The second option to minimize cost involves minimizing the cost to generate 1 million tokens. This is a simple transformation of the table we discussed earlier to maximize throughput, where you can first compute the time it takes in hours to generate 1 million tokens (1e6 / throughput / 3600). You can then multiply this time to generate 1 million tokens by the price per hour of the specified SageMaker instance.
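This transformation is simple enough to express directly. In the following sketch, the throughput is the Llama 2 7B value for ml.g5.12xlarge from the maximum throughput table, while the hourly price is a placeholder and not an actual SageMaker price; substitute the current value from the pricing page.

```python
def hours_per_million_tokens(throughput_tokens_per_sec: float) -> float:
    """Time in hours to generate 1 million tokens at a given throughput."""
    return 1e6 / throughput_tokens_per_sec / 3600


def cost_per_million_tokens(throughput_tokens_per_sec: float, price_per_hour: float) -> float:
    """Cost to generate 1 million tokens on an instance with the given hourly price."""
    return hours_per_million_tokens(throughput_tokens_per_sec) * price_per_hour


throughput = 1214                  # tokens/sec, Llama 2 7B on ml.g5.12xlarge
placeholder_price_per_hour = 10.0  # assumption for illustration only, not a real price

hours = hours_per_million_tokens(throughput)
cost = cost_per_million_tokens(throughput, placeholder_price_per_hour)
print(f"{hours:.3f} hours, {cost:.2f} per 1M tokens at the placeholder price")
```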

Note that instances with the lowest cost per hour aren’t necessarily the same as instances with the lowest cost to generate 1 million tokens. For instance, if the invocation requests are sporadic, an instance with the lowest cost per hour might be optimal, whereas in throttling scenarios, the lowest cost to generate 1 million tokens might be more appropriate.

Tensor parallel vs. multi-model trade-off

In all previous analyses, we considered deploying a single model replica with a tensor parallel degree equal to the number of GPUs on the deployment instance type. This is the default SageMaker JumpStart behavior. However, as previously noted, sharding a model can improve model latency and throughput only up to a certain limit, beyond which inter-device communication requirements dominate computation time. This implies that it’s often beneficial to deploy multiple models with a lower tensor parallel degree on a single instance rather than a single model with a higher tensor parallel degree.

Here, we deploy Llama 2 7B and 13B endpoints on ml.p4d.24xlarge instances with tensor parallel (TP) degrees of 1, 2, 4, and 8. For clarity in model behavior, each of these endpoints only loads a single model.

|  | Throughput (tokens/sec) |  |  |  |  |  |  |  |  |  | Latency (ms/token) |  |  |  |  |  |  |  |  |  |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Concurrent requests | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 |
| TP degree | Llama 2 13B |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| 1 | 38 | 74 | 147 | 278 | 443 | 612 | 683 | 722 | — | — | 26 | 27 | 27 | 29 | 37 | 45 | 87 | 174 | — | — |
| 2 | 49 | 92 | 183 | 351 | 604 | 985 | 1435 | 1686 | 1726 | — | 21 | 22 | 22 | 22 | 25 | 32 | 46 | 91 | 159 | — |
| 4 | 46 | 94 | 181 | 343 | 655 | 1073 | 1796 | 2408 | 2764 | 2819 | 23 | 21 | 21 | 24 | 25 | 30 | 37 | 57 | 111 | 172 |
| 8 | 44 | 86 | 158 | 311 | 552 | 1015 | 1654 | 2450 | 3087 | 3180 | 22 | 24 | 26 | 26 | 29 | 36 | 42 | 57 | 95 | 152 |
| TP degree | Llama 2 7B |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| 1 | 62 | 121 | 237 | 439 | 778 | 1122 | 1569 | 1773 | 1775 | — | 16 | 16 | 17 | 18 | 22 | 28 | 43 | 88 | 151 | — |
| 2 | 62 | 122 | 239 | 458 | 780 | 1328 | 1773 | 2440 | 2730 | 2811 | 16 | 16 | 17 | 18 | 21 | 25 | 38 | 56 | 103 | 182 |
| 4 | 60 | 106 | 211 | 420 | 781 | 1230 | 2206 | 3040 | 3489 | 3752 | 17 | 19 | 20 | 18 | 22 | 27 | 31 | 45 | 82 | 132 |
| 8 | 49 | 97 | 179 | 333 | 612 | 1081 | 1652 | 2292 | 2963 | 3004 | 22 | 20 | 24 | 26 | 27 | 33 | 41 | 65 | 108 | 167 |

Our previous analyses already showed significant throughput advantages on ml.p4d.24xlarge instances, which often translates to better performance in terms of cost to generate 1 million tokens over the g5 instance family under high concurrent request load conditions. This analysis clearly demonstrates that you should consider the trade-off between model sharding and model replication within a single instance; that is, a fully sharded model is not typically the best use of ml.p4d.24xlarge compute resources for 7B and 13B model families. In fact, for the 7B model family, you obtain the best throughput for a single model replica with a tensor parallel degree of 4 instead of 8.

From here, you can extrapolate that the highest throughput configuration for the 7B model involves a tensor parallel degree of 1 with eight model replicas, and the highest throughput configuration for the 13B model is likely a tensor parallel degree of 2 with four model replicas. To learn more about how to accomplish this, refer to Reduce model deployment costs by 50% on average using the latest features of Amazon SageMaker, which demonstrates the use of inference component-based endpoints. Due to load balancing techniques, server routing, and sharing of CPU resources, you might not fully achieve throughput improvements exactly equal to the number of replicas times the throughput for a single replica.
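The extrapolation can be reproduced from the table with a few lines of arithmetic. This sketch takes the peak single-replica throughput for each TP degree and multiplies it by the number of replicas that fit on the eight GPUs of an ml.p4d.24xlarge. It assumes perfect linear scaling across replicas, which is an upper bound and not a measured result; the names used are hypothetical.

```python
from typing import Dict

GPUS_PER_INSTANCE = 8  # ml.p4d.24xlarge

# Peak single-replica throughput (tokens/sec) per TP degree, from the preceding table.
PEAK_THROUGHPUT = {
    "Llama 2 7B": {1: 1775, 2: 2811, 4: 3752, 8: 3004},
    "Llama 2 13B": {1: 722, 2: 1726, 4: 2819, 8: 3180},
}


def ideal_aggregate_throughput(peak_by_tp: Dict[int, int], gpus: int = GPUS_PER_INSTANCE) -> Dict[int, int]:
    """Upper-bound throughput per TP degree when the instance is filled with replicas."""
    return {tp: (gpus // tp) * peak for tp, peak in peak_by_tp.items()}


for model_name, peaks in PEAK_THROUGHPUT.items():
    aggregate = ideal_aggregate_throughput(peaks)
    best_tp = max(aggregate, key=aggregate.get)
    print(model_name, aggregate, "best TP degree:", best_tp)
# Llama 2 7B: TP 1 with 8 replicas gives the largest upper bound (14200 tokens/sec).
# Llama 2 13B: TP 2 with 4 replicas gives the largest upper bound (6904 tokens/sec).
```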

Horizontal scaling

As observed earlier, each endpoint deployment has a limitation on the number of concurrent requests depending on the number of input and output tokens as well as the instance type. If this doesn’t meet your throughput or concurrent request requirement, you can scale up to utilize more than one instance behind the deployed endpoint. SageMaker automatically performs load balancing of queries between instances. For example, you can deploy an endpoint backed by three instances.
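A multi-instance deployment might look like the following sketch. It assumes the SageMaker Python SDK's `JumpStartModel` class and its `deploy` method with `initial_instance_count`. The helper name, the model ID in the example call, and the lazy import are illustrative choices, not taken from the original post.

```python
def deploy_multi_instance(
    model_id: str,
    instance_type: str,
    instance_count: int = 3,
    accept_eula: bool = False,
):
    """Deploy a JumpStart model behind an endpoint backed by several instances."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")

    # Imported lazily so this helper can be defined without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id, instance_type=instance_type)
    # Gated models such as Llama 2 require accept_eula=True to deploy.
    return model.deploy(
        initial_instance_count=instance_count,
        accept_eula=accept_eula,
    )


# Example call (requires AWS credentials and incurs cost); the model ID is an assumption:
# predictor = deploy_multi_instance("meta-textgeneration-llama-2-7b", "ml.g5.2xlarge", 3)
```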

The following table shows the throughput gain as a factor of the number of instances for the Llama 2 7B model.

| . | . | Throughput (tokens/sec) | Latency (ms/token) | ||||||||||||||

| . | Concurrent Requests | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 |

| Instance Count | Instance Type | Number of total tokens: 512, Number of output tokens: 256 | |||||||||||||||

| 1 | ml.g5.2xlarge | 30 | 60 | 115 | 210 | 351 | 484 | 492 | — | 32 | 33 | 34 | 37 | 45 | 93 | 160 | — |

| 2 | ml.g5.2xlarge | 30 | 60 | 115 | 221 | 400 | 642 | 922 | 949 | 32 | 33 | 34 | 37 | 42 | 53 | 94 | 167 |

| 3 | ml.g5.2xlarge | 30 | 60 | 118 | 228 | 421 | 731 | 1170 | 1400 | 32 | 33 | 34 | 36 | 39 | 47 | 57 | 110 |

Notably, the knee in the latency-throughput curve shifts to the right because higher instance counts can handle larger numbers of concurrent requests within the multi-instance endpoint. For this table, the concurrent request value is for the entire endpoint, not the number of concurrent requests that each individual instance receives.
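To make the scaling behavior concrete, the sketch below computes the speedup and per-instance efficiency from the throughput column at 64 concurrent requests in the table above. The function name is illustrative.

```python
# Throughput (tokens/sec) at 64 concurrent requests, copied from the table above,
# keyed by instance count.
throughput_at_64 = {1: 492, 2: 922, 3: 1170}


def scaling_report(throughput_by_count: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Map instance count to (speedup vs. one instance, efficiency per instance)."""
    baseline = throughput_by_count[1]
    report = {}
    for count, tokens_per_sec in sorted(throughput_by_count.items()):
        speedup = tokens_per_sec / baseline
        report[count] = (round(speedup, 2), round(speedup / count, 2))
    return report


report = scaling_report(throughput_at_64)
for count, (speedup, efficiency) in report.items():
    print(f"{count} instance(s): {speedup}x speedup, {efficiency:.0%} efficiency")
```

At this load, two instances deliver roughly 1.87 times the single-instance throughput and three instances roughly 2.38 times, so the gain is sublinear at a fixed concurrency level.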

You can also use autoscaling, a feature to monitor your workloads and dynamically adjust the capacity to maintain steady and predictable performance at the lowest possible cost. This is beyond the scope of this post. To learn more about autoscaling, refer to Configuring autoscaling inference endpoints in Amazon SageMaker.

Invoke endpoint with concurrent requests

Let’s suppose you have a large batch of queries that you want to use to generate responses from a deployed model under high throughput conditions. For example, consider a list of 1,000 payloads, with each payload requesting the generation of 100 tokens. In all, we’re requesting the generation of 100,000 tokens.
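Such a payload list could be assembled as in the sketch below. The prompt text is a hypothetical placeholder, and the `inputs`/`parameters`/`max_new_tokens` request schema is an assumption based on the text-generation format commonly used by JumpStart LLM endpoints.

```python
# Hypothetical prompt; any short text works for this illustration.
payload = {
    "inputs": "I believe the meaning of life is to",
    "parameters": {"max_new_tokens": 100},
}

total_requests = 1000
# Every request reuses the same payload dictionary.
payloads = [payload] * total_requests

tokens_requested = total_requests * payload["parameters"]["max_new_tokens"]
print(f"Number of tokens requested: {tokens_requested}")
```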

When sending a large number of requests to the SageMaker runtime API, you may experience throttling errors. To mitigate this, you can create a custom SageMaker runtime client that increases the number of retry attempts. You can provide the resulting SageMaker session object to either the JumpStartModel constructor or sagemaker.predictor.retrieve_default if you would like to attach a new predictor to an already deployed endpoint. In this example, we use this session object when deploying a Llama 2 model with default SageMaker JumpStart configurations.
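One way to build such a session is sketched below, assuming boto3, botocore's `Config` retry settings, and the SageMaker Python SDK's `Session` and `JumpStartModel` classes. The retry values, the timeout, and the helper names are illustrative assumptions rather than recommended settings.

```python
# Assumed retry settings; tune these for your own workload.
RETRY_SETTINGS = {"mode": "standard", "total_max_attempts": 20}


def build_session_with_retries(retry_settings: dict = RETRY_SETTINGS):
    """Create a SageMaker session whose runtime client retries throttled calls."""
    # Imported lazily so this helper can be defined without the SDKs installed.
    import boto3
    from botocore.config import Config
    from sagemaker.session import Session

    runtime_client = boto3.client(
        "sagemaker-runtime",
        config=Config(connect_timeout=10, retries=retry_settings),
    )
    return Session(sagemaker_runtime_client=runtime_client)


def deploy_with_session(model_id: str, sagemaker_session, accept_eula: bool = False):
    """Deploy a JumpStart model with default configurations using the given session."""
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id, sagemaker_session=sagemaker_session)
    # Gated models such as Llama 2 require accept_eula=True to deploy.
    return model.deploy(accept_eula=accept_eula)


# Example (requires AWS credentials and incurs cost); the model ID is an assumption:
# session = build_session_with_retries()
# predictor = deploy_with_session("meta-textgeneration-llama-2-7b", session, accept_eula=True)
```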

This deployed endpoint has MAX_CONCURRENT_REQUESTS = 128 by default. To invoke it, we use the concurrent.futures library to iterate over invoking the endpoint for all payloads with 128 worker threads. At most, the endpoint will process 128 concurrent requests, and whenever a request returns a response, the executor will immediately send a new request to the endpoint.
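The thread-pool pattern described here can be sketched as follows. The helper name is illustrative, and a stand-in callable replaces the real `predictor.predict` so the example runs without a deployed endpoint.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Iterable


def invoke_concurrently(
    predict: Callable[[Any], Any],
    payloads: Iterable[Any],
    max_workers: int = 128,
) -> list:
    """Send every payload through `predict` with up to max_workers requests in flight."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # map() yields results in payload order; as soon as a worker thread
        # finishes one request, it picks up the next pending payload.
        return list(executor.map(predict, payloads))


# With a deployed endpoint: responses = invoke_concurrently(predictor.predict, payloads)
# Stand-in callable so the sketch runs without an endpoint:
responses = invoke_concurrently(
    lambda p: {"echo": p["inputs"]},
    [{"inputs": "hi"}] * 10,
    max_workers=4,
)
print(len(responses))
```

Matching `max_workers` to the endpoint's concurrent request limit keeps the endpoint saturated without queuing extra requests on the client side.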

This results in generating 100,000 total tokens with a throughput of 1255 tokens/sec on a single ml.g5.2xlarge instance. This takes approximately 80 seconds to process.

Note that this throughput value is notably different from the maximum throughput for Llama 2 7B on ml.g5.2xlarge in the previous tables of this post (486 tokens/sec at 64 concurrent requests). This is because the input payload uses 8 tokens instead of 256, the output token count is 100 instead of 256, and the smaller token counts allow for 128 concurrent requests. This is a final reminder that all latency and throughput numbers are payload dependent! Changing payload token counts will affect batching processes during model serving, which will in turn affect the emergent prefill, decode, and queue times for your application.

Conclusion

In this post, we presented benchmarking of SageMaker JumpStart LLMs, including Llama 2, Mistral, and Falcon. We also presented a guide to optimize latency, throughput, and cost for your endpoint deployment configuration. You can get started by running the associated notebook to benchmark your use case.

About the Authors

Dr. Kyle Ulrich is an Applied Scientist with the Amazon SageMaker JumpStart team. His research interests include scalable machine learning algorithms, computer vision, time series, Bayesian non-parametrics, and Gaussian processes. His PhD is from Duke University and he has published papers in NeurIPS, Cell, and Neuron.

Dr. Vivek Madan is an Applied Scientist with the Amazon SageMaker JumpStart team. He received his PhD from University of Illinois at Urbana-Champaign and was a Post Doctoral Researcher at Georgia Tech. He is an active researcher in machine learning and algorithm design and has published papers in EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker JumpStart and helps develop machine learning algorithms. He received his PhD from University of Illinois Urbana-Champaign. He is an active researcher in machine learning and statistical inference, and has published many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

João Moura is a Senior AI/ML Specialist Solutions Architect at AWS. João helps AWS customers – from small startups to large enterprises – train and deploy large models efficiently, and more broadly build ML platforms on AWS.
