Unlocking Intent Alignment in Smaller Language Models: A Comprehensive Guide to Zephyr-7B’s Breakthrough with Distilled Supervised Fine-Tuning and AI Feedback

ZEPHYR-7B is a smaller language model optimized for user intent alignment through distilled direct preference optimization (dDPO) using AI Feedback (AIF) data. This approach notably improves intent alignment without human annotation, achieving top performance on chat benchmarks among 7B-parameter models. The method relies on preference data from AIF, requires minimal training time, and needs no additional sampling during fine-tuning, setting a new state of the art.
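The dDPO objective is the standard DPO loss with the dSFT model as the frozen reference. For a prompt x with an AIF-preferred response y_w and a dispreferred response y_l, it minimizes -log σ(β[log π_θ(y_w|x)/π_dSFT(y_w|x) - log π_θ(y_l|x)/π_dSFT(y_l|x)]). The loss only needs log-probabilities of responses that already exist in the preference dataset, so no new samples are drawn during fine-tuning. Below is a minimal, dependency-free sketch for a single pair. It assumes the summed per-response log-probabilities are computed elsewhere. `beta=0.1` is an illustrative default, not a confirmed setting from the paper.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative default (assumption)
) -> float:
    """DPO loss for one preference pair (x, y_w, y_l).

    Each *_logp is the summed log-probability of a full response given the
    prompt. In dDPO the reference model is the frozen dSFT model.
    """
    # Implicit reward margin: how much more the policy, relative to the
    # reference, prefers the chosen response over the rejected one.
    margin = beta * (
        (policy_chosen_logp - ref_chosen_logp)
        - (policy_rejected_logp - ref_rejected_logp)
    )
    # Minimizing -log(sigmoid(margin)) pushes the margin upward.
    return -log_sigmoid(margin)


# Policy identical to the reference: margin 0, loss log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Policy raises the chosen response's log-probability: loss drops below log(2).
print(dpo_loss(-9.0, -12.0, -10.0, -12.0))
```

Training on a batch would average this quantity over pairs and backpropagate only through the policy terms. The reference log-probabilities stay fixed.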

The researchers address the proliferation of LLMs such as ChatGPT and the models that followed it, including LLaMA, MPT, RedPajama-INCITE, Falcon, and Llama 2. The paper highlights advances in fine-tuning, context length, retrieval-augmented generation, and quantization. It discusses distillation techniques for improving the performance of smaller models, along with tools and benchmarks for model evaluation. The study evaluates ZEPHYR-7B’s performance on MT-Bench, AlpacaEval, and the Hugging Face Open LLM Leaderboard.

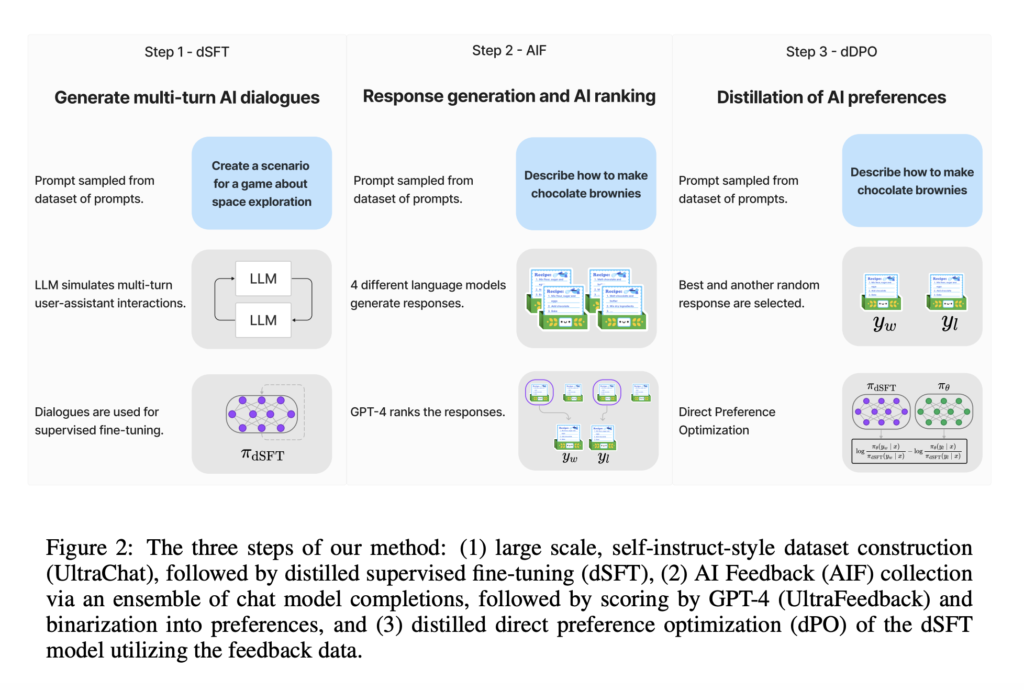

The study discusses enhancing smaller open LLMs with distilled supervised fine-tuning (dSFT) to improve accuracy and user intent alignment. It introduces dDPO to align LLMs without human annotation, relying on AIF from teacher models. The researchers present ZEPHYR-7B, an aligned version of Mistral-7B produced with dSFT, AIF data, and dDPO. They show that its performance is comparable to that of 70B-parameter chat models aligned with human feedback. The study emphasizes the importance of intent alignment in LLM development.
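Here is one way to make "AIF from teacher models" concrete. As we understand the ZEPHYR paper, each prompt (drawn from the UltraFeedback dataset) comes with several responses from different models. A GPT-4 teacher scores those responses. The best-scored response is kept as preferred, and one of the others is sampled at random as dispreferred. The sketch below assumes that selection rule. `build_preference_pair` and the toy scores are hypothetical; in practice the scores come from the teacher rather than being hard-coded.

```python
import random
from typing import Dict, List


def build_preference_pair(
    prompt: str,
    responses: List[str],
    teacher_scores: List[float],
    rng: random.Random,
) -> Dict[str, str]:
    """Turn teacher-scored responses to one prompt into a (chosen, rejected) pair.

    Assumed rule: the highest-scoring response is "chosen"; one of the
    remaining responses, picked uniformly at random, is "rejected".
    """
    if len(responses) != len(teacher_scores) or len(responses) < 2:
        raise ValueError("need at least two responses, each with one score")
    best = max(range(len(responses)), key=lambda i: teacher_scores[i])
    others = [i for i in range(len(responses)) if i != best]
    worse = rng.choice(others)
    return {"prompt": prompt, "chosen": responses[best], "rejected": responses[worse]}


# Toy example with made-up teacher scores.
pair = build_preference_pair(
    "How do I reverse a list in Python?",
    ["Use reversed().", "Use lst[::-1] or lst.reverse().", "Lists cannot be reversed.", "Sort it."],
    [6.0, 9.5, 1.0, 3.0],
    random.Random(0),
)
print(pair["chosen"])  # prints "Use lst[::-1] or lst.reverse()."
```

Sampling the rejected response at random, instead of always taking the worst one, keeps some harder, closer-quality pairs in the dataset. Records shaped like `pair` are what the dDPO loss consumes.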

The approach enhances language models in two stages: dSFT trains the model on high-quality data, and dDPO then refines it by optimizing response preferences. AIF from teacher models is used to improve alignment with user intent. The approach relies on iterative self-prompting to generate a training dataset. The resulting ZEPHYR-7B model, obtained through dSFT, AIF data, and dDPO, is a state-of-the-art chat model with improved intent alignment.
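The iterative self-prompting step can be sketched as a loop in which a teacher model both answers the current instruction and writes the next follow-up. This is roughly how multi-turn distillation datasets such as UltraChat, which the paper uses for dSFT, are built. The sketch is illustrative only: `teacher`, `toy_teacher`, the `"respond"`/`"follow_up"` task labels, and the default turn count are hypothetical stand-ins, not the paper's pipeline.

```python
from typing import Callable, List, Tuple

# A dialogue is a list of (role, text) turns.
Dialogue = List[Tuple[str, str]]


def self_prompt_dialogue(
    seed_prompt: str,
    teacher: Callable[[str, Dialogue], str],
    num_turns: int = 3,
) -> Dialogue:
    """Build one multi-turn dSFT example by iteratively prompting a teacher.

    The teacher plays both sides: it answers the latest user message, then
    (except on the last turn) writes a follow-up user message conditioned on
    the conversation so far.
    """
    dialogue: Dialogue = [("user", seed_prompt)]
    for turn in range(num_turns):
        dialogue.append(("assistant", teacher("respond", dialogue)))
        if turn < num_turns - 1:
            dialogue.append(("user", teacher("follow_up", dialogue)))
    return dialogue


def toy_teacher(task: str, dialogue: Dialogue) -> str:
    """Hypothetical stand-in for a real teacher-model call."""
    return f"{task} #{len(dialogue)}"


if __name__ == "__main__":
    for role, text in self_prompt_dialogue("Explain preference tuning.", toy_teacher):
        print(f"{role}: {text}")
```

Each finished dialogue becomes one supervised example. The student is then fine-tuned to reproduce the teacher's assistant turns.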

ZEPHYR-7B, a 7B-parameter model, sets a new state of the art on chat benchmarks, surpassing LLAMA2-CHAT-70B, the best open-access RLHF-based model. It competes favourably with GPT-3.5-TURBO and CLAUDE 2 on AlpacaEval but lags in math and coding tasks. Among 7B models, the dDPO model excels, outperforming the dSFT model and Xwin-LM dPPO. However, larger models outperform ZEPHYR on knowledge-intensive tasks. Results on the Open LLM Leaderboard show ZEPHYR’s strength in multiclass classification tasks, affirming its reasoning and truthfulness capabilities after fine-tuning.

ZEPHYR-7B employs direct preference optimization to improve intent alignment. The study points out potential biases in using GPT-4 as an evaluator and encourages further exploration of how well smaller open models can align with user intent. It notes that safety considerations, such as harmful outputs or illegal advice, were not addressed, indicating the need for future research in this important area.

The study identifies several avenues for future research. Safety considerations, such as harmful outputs and illegal advice, remain unexplored. The authors suggest investigating whether larger teacher models improve the student model's performance through distillation. The use of synthetic data in distillation, though challenging, is recognized as a valuable research area. Further exploration of smaller open models and their capacity to align with user intent is encouraged. Evaluating ZEPHYR-7B on a broader range of benchmarks and tasks is recommended to assess its capabilities comprehensively.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.